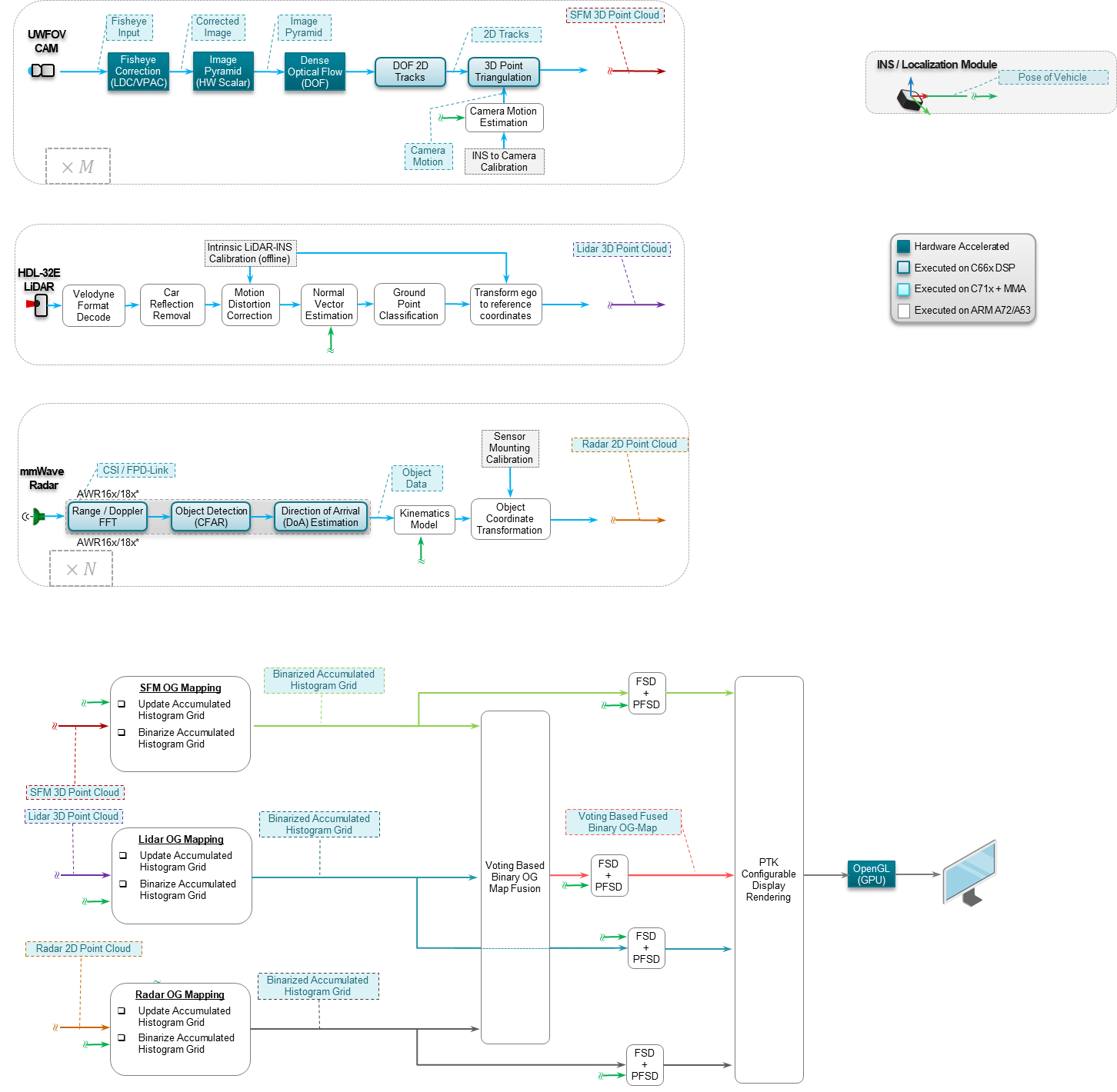

This application demonstrates a multi-modal approach to generating occupancy grid maps that distinguish free space from occupied space around the vehicle. 3D data from Structure from Motion (SfM) on a fisheye camera, a Velodyne lidar, and a radar are used to generate one sensor-specific map for each of the three sensor modalities. A map fusion algorithm generates the final combined map, and a parking free-space detection algorithm extracts from this fused map the free regions that are large enough to fit a car for perpendicular parking. Localization of the vehicle in the maps is provided by an Inertial Navigation System (INS) that combines GPS (Global Positioning System) and IMU (Inertial Measurement Unit) data. The demonstrated functions are prerequisites for automated/valet parking of a vehicle.
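The fusion and free-space steps described above can be illustrated with a minimal sketch. This is not the application's actual algorithm, which this page does not specify. It assumes each sensor map is a small grid of three cell states, fuses them with a simple conservative rule (any "occupied" vote wins), and then scans the fused grid for fully free rectangles of a given size. All names (`fuse_maps`, `find_parking_spots`, the cell constants, and the sample grids) are hypothetical and chosen for illustration only.

```python
from typing import List, Tuple

# Hypothetical cell states for this sketch (not taken from the application).
FREE, OCCUPIED, UNKNOWN = 0, 1, 2

Grid = List[List[int]]


def fuse_maps(maps: List[Grid]) -> Grid:
    """Cell-wise conservative fusion of equally sized grids.

    A cell is OCCUPIED if any sensor map marks it occupied, FREE if at
    least one map marks it free and none marks it occupied, and UNKNOWN
    otherwise.
    """
    rows, cols = len(maps[0]), len(maps[0][0])
    fused = [[UNKNOWN] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            states = [m[r][c] for m in maps]
            if OCCUPIED in states:
                fused[r][c] = OCCUPIED
            elif FREE in states:
                fused[r][c] = FREE
    return fused


def find_parking_spots(grid: Grid, spot_rows: int, spot_cols: int) -> List[Tuple[int, int]]:
    """Return the top-left (row, col) of every window of the given size
    whose cells are all FREE."""
    rows, cols = len(grid), len(grid[0])
    spots = []
    for r in range(rows - spot_rows + 1):
        for c in range(cols - spot_cols + 1):
            if all(
                grid[r + dr][c + dc] == FREE
                for dr in range(spot_rows)
                for dc in range(spot_cols)
            ):
                spots.append((r, c))
    return spots


# Made-up 3x4 sensor maps, one per modality.
radar = [[2, 2, 2, 2], [0, 0, 2, 1], [0, 0, 2, 2]]
lidar = [[0, 0, 0, 1], [0, 0, 0, 1], [2, 2, 0, 2]]
camera = [[2, 0, 0, 2], [2, 0, 0, 2], [0, 0, 1, 2]]

fused = fuse_maps([radar, lidar, camera])
spots = find_parking_spots(fused, spot_rows=2, spot_cols=2)
```

In a real system the window size would be derived from the car's dimensions and the grid resolution, and the fusion rule would typically weigh sensors by reliability rather than treating them equally. The brute-force window scan is kept here only because it makes the "large enough to fit a car" check easy to read.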

The resulting maps can either be visualized in real-time or saved to file.

The application uses the following application libraries:

The input data for this application can be generated using the following additional applications:

| Platform | Linux x86_64 | Linux+RTOS mode | QNX+RTOS mode | SoC |
|---|---|---|---|---|
| Support | NO | YES | NO | J721e |

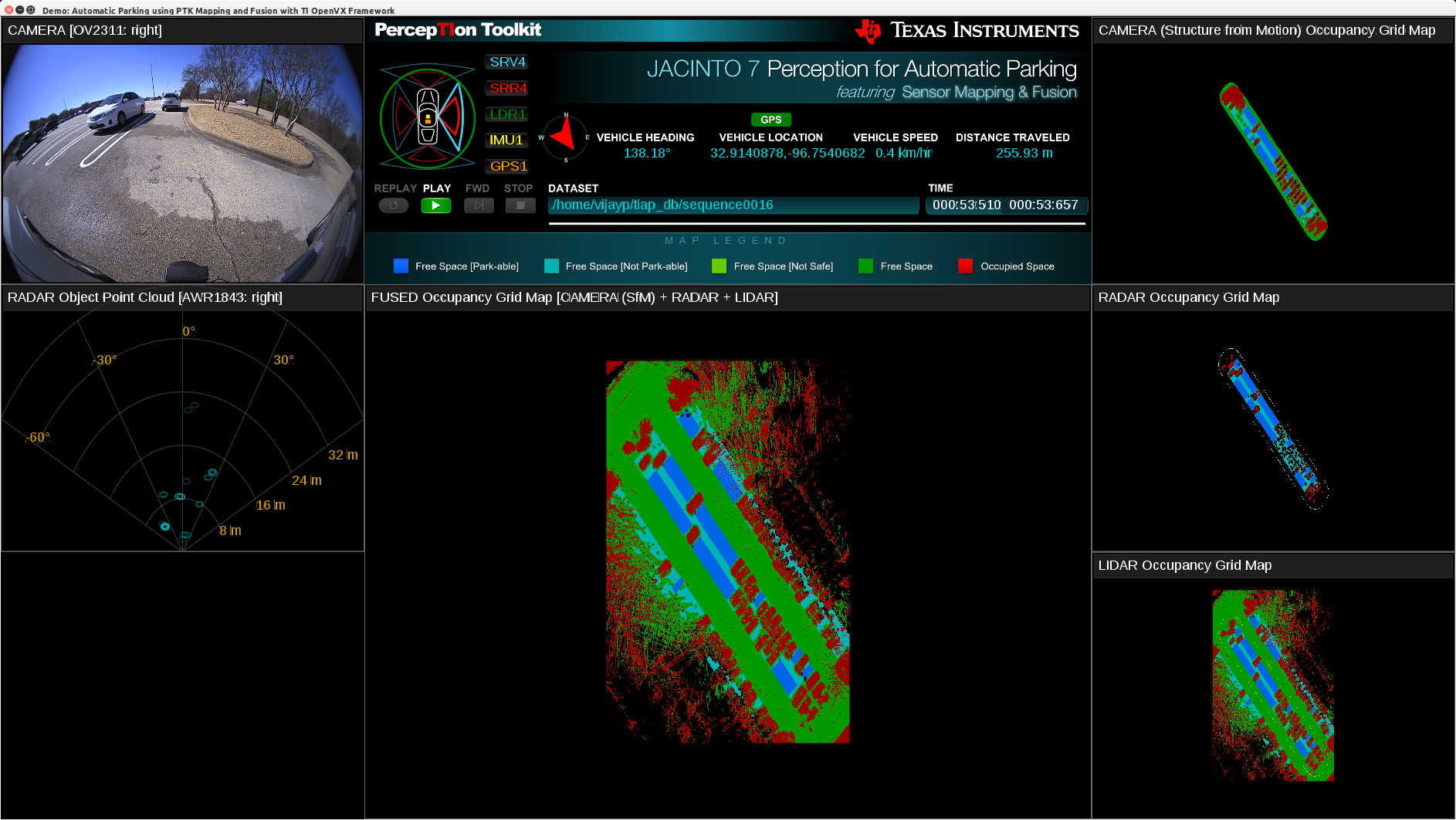

The example output visualization below shows the radar-only, lidar-only, camera-only, and fused maps.