Robotics SDK: Introduction

Overview

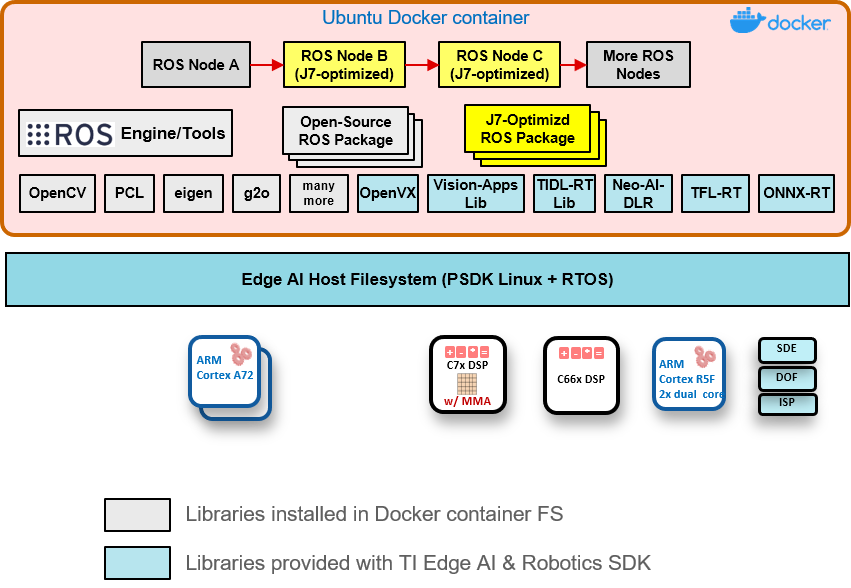

The Jacinto Robotics SDK provides a software development environment on the latest TDA4 class of SoCs. It also provides software building blocks and example demos that can be leveraged in robotics software development. The SDK runs in Docker container environments on Processor SDK Linux for Edge AI. We provide detailed steps for setting up Docker container environments for ROS Melodic and ROS 2 Foxy on the Processor SDK Linux (see the next section). The Robotics SDK enables:

Optimized software implementation of computation-intensive software blocks (including deep learning, vision, perception, and ADAS) on the deep-learning core (C7x/MMA), DSP cores, and hardware accelerators built into the TDA4 processors.

Direct compilation of application software on the target, using APIs optimized for the TDA4 cores and hardware accelerators together with many open-source libraries and packages, such as OpenCV and the Point Cloud Library (PCL).

Figure 1 shows the software libraries and components that the Robotics SDK provides.

TI Vision Apps Library

The TI Vision Apps Library is included in the pre-built base image of Processor SDK Linux for Edge AI. The library provides a set of APIs, including:

TI OpenVX kernels and infrastructure

Imaging and vision applications

Advanced driver-assistance systems (ADAS) applications

Perception applications

Open-Source Deep-Learning Runtimes

The Processor SDK Linux for Edge AI also supports the following open-source deep-learning runtimes:

TVM/Neo-AI-DLR

TFLite Runtime

ONNX Runtime

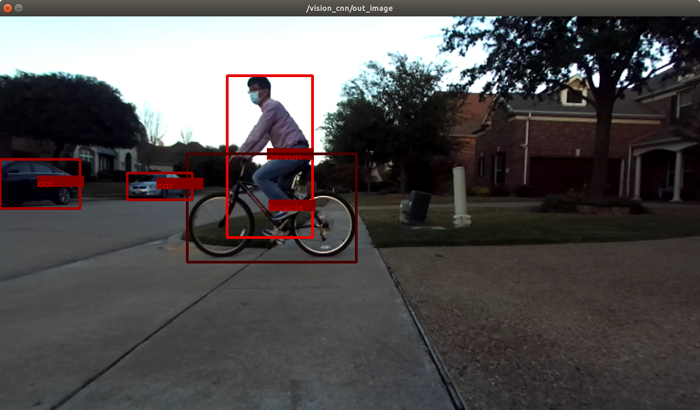

For more details on the open-source deep-learning runtimes on TDA4x, see TI Edge AI Cloud. The Robotics SDK provides a versatile vision CNN node, optimized for TDA4x, that supports many deep-learning models for object detection and semantic segmentation.
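A semantic segmentation model typically outputs a score for every class at every pixel. The per-pixel label map is obtained by choosing the highest-scoring class at each pixel. The sketch below illustrates that step. It is a generic example, not the CNN node's actual post-processing. It assumes the scores arrive as nested lists laid out as [classes][height][width], and `argmax_label_map` is a hypothetical name.

```python
from typing import List


def argmax_label_map(scores: List[List[List[float]]]) -> List[List[int]]:
    """Return an H x W map with the index of the highest-scoring class at each pixel.

    Assumes `scores` is laid out as [num_classes][height][width].
    """
    num_classes = len(scores)
    height = len(scores[0])
    width = len(scores[0][0])
    label_map = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # max() returns the first maximal class index, so ties go to the lower index.
            label_map[y][x] = max(range(num_classes), key=lambda c: scores[c][y][x])
    return label_map


# Two classes on a 2 x 2 image (made-up scores for illustration).
scores = [
    [[0.9, 0.1], [0.2, 0.3]],   # class 0
    [[0.05, 0.8], [0.7, 0.3]],  # class 1
]
print(argmax_label_map(scores))  # [[0, 1], [1, 0]]
```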

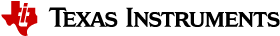

Figure 2 shows a representative deep-learning and compute-intensive application developed with the Robotics SDK.

Setting Up Robotics SDK Docker Container Environment

This section describes how to set up the Robotics SDK on the TDA4 Processor SDK Linux. To get started, see Setting Up Robotics SDK.

Note: git.ti.com has some issues rendering Markdown files. We highly recommend using the corresponding section in the User Guide documentation.

Sensor Driver Nodes

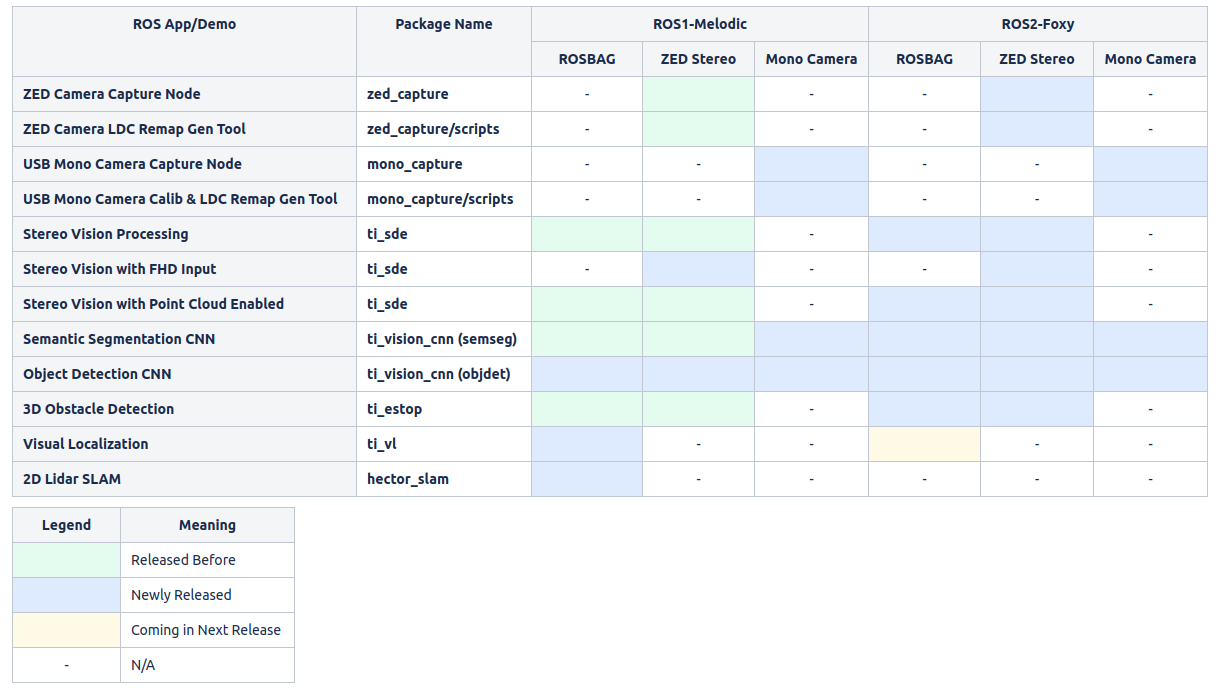

The following ROS camera nodes are tested and supported by the SDK.

Demo Applications

The SDK supports the following out-of-box demo applications.

Scope of Robotics SDK 0.5

Change Log

See CHANGELOG.md

Limitations and Known Issues

RViz visualization is displayed on a remote Ubuntu PC.

Terminating a ROS node or a ROS launch with Ctrl+C can sometimes be slow.

Stereo Vision Demo

The output disparity map may have artifacts that are common to block-based stereo algorithms, for example noise in the sky, texture-less areas, and repeated patterns.
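To see why texture-less areas are hard for block-based stereo, consider a minimal sum-of-absolute-differences (SAD) block matcher run along a single scanline. This is a generic illustration under simplifying assumptions: one rectified scanline, integer disparities, and a fixed window. It is not the SDE's actual algorithm, and `sad_costs` is a hypothetical helper. On a textured row, one disparity gives a clearly lower cost than the others. On a flat row, every candidate disparity has the same cost, so the chosen disparity is essentially arbitrary.

```python
from typing import List


def sad_costs(left: List[int], right: List[int], x: int,
              half_win: int, max_disp: int) -> List[int]:
    """Sum-of-absolute-differences cost for left-scanline pixel x at disparities 0..max_disp.

    Assumes rectified images, so the match for left[x] lies at right[x - d].
    """
    if x - half_win - max_disp < 0 or x + half_win >= len(left):
        raise ValueError("window or disparity range falls outside the scanline")
    costs = []
    for d in range(max_disp + 1):
        # Compare a (2 * half_win + 1)-pixel window in the left row with the
        # window shifted d pixels to the left in the right row.
        costs.append(sum(abs(left[x + k] - right[x + k - d])
                         for k in range(-half_win, half_win + 1)))
    return costs


# Textured scanline: the right row is the left row shifted by 2 pixels.
left = [10, 50, 20, 80, 30, 60, 40, 90, 15, 70]
right = [20, 80, 30, 60, 40, 90, 15, 70, 0, 0]
print(sad_costs(left, right, x=5, half_win=1, max_disp=3))  # [65, 100, 0, 100]

# Texture-less scanline: every disparity costs the same, so the match is ambiguous.
flat = [50] * 10
print(sad_costs(flat, flat, x=5, half_win=1, max_disp=3))  # [0, 0, 0, 0]
```

Repeated patterns cause a related failure. Several disparities produce equally low costs instead of all producing the same cost.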

The confidence map from the SDE has 8 levels, from 0 (least confident) to 7 (most confident). The confidence map from the multi-layer SDE refinement has only 2 values, 0 and 7. As a result, the refined confidence map does not appear as fine-grained as the SDE's confidence map.
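The coarser confidence map matters in practice when disparities are filtered by confidence. The sketch below is a generic illustration. It assumes the disparity and confidence maps are same-sized 2D arrays (nested lists here) with confidence in the 0 to 7 range described above. `mask_by_confidence`, `count_valid`, and the use of `None` for rejected pixels are hypothetical choices. With an 8-level map, raising the threshold removes progressively more pixels. With a map containing only 0 and 7, every threshold from 1 to 7 produces the same mask.

```python
from typing import List, Optional


def mask_by_confidence(disparity: List[List[int]], confidence: List[List[int]],
                       threshold: int) -> List[List[Optional[int]]]:
    """Keep disparities whose confidence is >= threshold; mark the rest as None."""
    if not 0 <= threshold <= 7:
        raise ValueError("confidence threshold must be in 0..7")
    return [
        [d if c >= threshold else None for d, c in zip(d_row, c_row)]
        for d_row, c_row in zip(disparity, confidence)
    ]


def count_valid(masked: List[List[Optional[int]]]) -> int:
    """Number of pixels that survived the confidence test."""
    return sum(v is not None for row in masked for v in row)


disparity = [[12, 15, 9, 30]]
sde_conf = [[7, 5, 2, 0]]      # 8-level map: values anywhere in 0..7
refined_conf = [[7, 7, 0, 0]]  # refined map: only 0 or 7

for name, conf in (("SDE", sde_conf), ("refined", refined_conf)):
    print(name, [count_valid(mask_by_confidence(disparity, conf, t)) for t in (1, 3, 6)])
# SDE [3, 2, 1]
# refined [2, 2, 2]
```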

The default semantic segmentation model used in the ti_vision_cnn and ti_estop nodes was first trained with the Cityscapes dataset. It was then re-trained, with coarse annotation, on a small dataset collected from a particular stereo camera (ZED camera, HD mode) for a limited set of scenarios. As a result, the model may show limited accuracy if a different camera model is used or if it is applied to different environment scenes.

The default 2D object detection model (ONR-OD-8080-yolov3-lite-regNetX-1.6gf-bgr-coco-512x512) has an initial loading time of about 20 seconds.

Launching a demo in the ROS 2 environment together with “ros2 bag play” from a single launch script is currently not stable. We recommend running “ros2 bag play” in a separate terminal. The demos in the ROS 2 container currently run more stably with live cameras (ZED stereo camera or USB mono camera).

The USB mono camera capture node is currently tested only with Logitech C920 and C270 webcams in ‘YUYV’ (YUYV 4:2:2) mode. ‘MJPG’ (Motion-JPEG) mode is not yet enabled or tested.
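YUYV 4:2:2 is a packed format. Every 4 bytes (Y0, U, Y1, V) describe two horizontally adjacent pixels that share one U and one V chroma sample, so a frame occupies width * height * 2 bytes. The sketch below unpacks such a buffer into per-pixel (Y, U, V) triples. It illustrates the format generically and is not code from the capture node. `yuyv_frame_size` and `unpack_yuyv` are hypothetical names. The sketch assumes an even width and no row padding, meaning the stride equals width * 2.

```python
from typing import List, Tuple


def yuyv_frame_size(width: int, height: int) -> int:
    """Bytes in one packed YUYV 4:2:2 frame (2 bytes per pixel on average)."""
    return width * height * 2


def unpack_yuyv(buf: bytes, width: int, height: int) -> List[Tuple[int, int, int]]:
    """Expand a packed YUYV buffer into one (Y, U, V) tuple per pixel, row-major.

    Assumes an even width and no padding at the end of each row.
    """
    if width % 2 != 0:
        raise ValueError("YUYV requires an even width")
    if len(buf) != yuyv_frame_size(width, height):
        raise ValueError("buffer size does not match width * height * 2")
    pixels = []
    # Each 4-byte macropixel holds Y0 U Y1 V for two neighbouring pixels.
    for i in range(0, len(buf), 4):
        y0, u, y1, v = buf[i], buf[i + 1], buf[i + 2], buf[i + 3]
        pixels.append((y0, u, v))  # left pixel
        pixels.append((y1, u, v))  # right pixel reuses the same chroma samples
    return pixels


print(yuyv_frame_size(1280, 720))  # 1843200
print(unpack_yuyv(bytes([16, 128, 235, 128]), 2, 1))  # [(16, 128, 128), (235, 128, 128)]
```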