9.4. IPC Design Overview

9.4.1. Introduction

The Jacinto 7 SoC integrates several different CPUs (e.g. R5F, C6x, C7x, and A72). Software running on these CPUs needs to communicate to realize a use-case. This collaboration is referred to as inter-processor communication, or IPC. An IPC library is provided on each CPU and OS to enable communication among the higher-level application components on each core.

The overall IPC software stack on the different CPUs and OSes is shown in the table below:

| IPC SW layer | Description |
|---|---|
| Application | Application which sends/receives IPC messages |
| RPMSG CHAR | [Linux only] User space API used by applications to send/receive IPC messages |
| RPMSG | SW protocol and interface used to exchange messages with endpoints on a destination CPU |
| VRING | Shared memory based SW queue which temporarily holds messages as they are exchanged between two CPUs |
| HW Mailbox | Hardware mechanism used for interrupt notification between two CPUs |

The main SW components for IPC are:

- The PDK IPC LLD driver for RTOS, which consists of the RPMSG, VRING and HW Mailbox drivers.
- The Linux kernel IPC driver suite for Linux, which consists of the RPMSG CHAR, RPMSG, VRING and HW Mailbox drivers.

The PDK IPC library and the Linux kernel IPC driver suite enable communication among all the cores present in the J7 SoC. The PDK IPC library is linked with RTOS applications and supports message-based communication with other cores running RTOS (such as the R5FSS cores and DSP cores). It is also capable of communicating with A72 cores running Linux using the same APIs.

The Linux IPC driver runs on A72 cores as a part of the kernel and can communicate with R5FSS and DSP cores.

9.4.2. RPMSG and VRING

RPMSG is the common messaging framework that is used by Linux as well as RTOS. RPMSG is an endpoint based protocol where a server CPU can run a service that listens to incoming messages at a dedicated endpoint, while all other CPUs can send requests to that (server CPU, service endpoint) tuple. You can think of an analogy to UDP/IP layers in networking, where the CPU name is analogous to the IP address, and endpoint is similar to the UDP port number.

When sending a message to a server, a client CPU / task also provides a reply endpoint so that the server can send its reply to the client CPU.

Multiple logical IPC communication channels can be opened between the same set of CPUs by using multiple endpoints.
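The addressing scheme described above can be sketched in C. This is a minimal illustration only: the `ipc_addr_t` and `ipc_msg_t` types, their fields, and the helper functions are hypothetical and are not the PDK or Linux RPMSG definitions. It shows how a request carries both the destination (CPU, endpoint) tuple and a reply endpoint, and how the server addresses its reply.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical address: (CPU id, endpoint), analogous to (IP address, UDP port). */
typedef struct {
    uint32_t cpu_id;
    uint32_t endpoint;
} ipc_addr_t;

/* Hypothetical message: destination, reply-to address, and a small payload. */
typedef struct {
    ipc_addr_t dst;
    ipc_addr_t reply_to;
    uint16_t   len;
    uint8_t    payload[64];
} ipc_msg_t;

/* Build a request from a client to a (server CPU, service endpoint) tuple. */
static ipc_msg_t make_request(ipc_addr_t client, ipc_addr_t server,
                              const void *data, uint16_t len)
{
    ipc_msg_t m;
    assert(len <= sizeof(m.payload));
    memset(&m, 0, sizeof(m));
    m.dst = server;
    m.reply_to = client;   /* where the server should send its answer */
    m.len = len;
    memcpy(m.payload, data, len);
    return m;
}

/* Build the server's reply: it is addressed to the request's reply endpoint. */
static ipc_msg_t make_reply(const ipc_msg_t *req, const void *data, uint16_t len)
{
    return make_request(req->dst, req->reply_to, data, len);
}
```

Opening a second logical channel between the same two CPUs then amounts to using a different endpoint number in `ipc_addr_t`.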

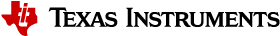

While RPMSG is the API or protocol as seen by an application, internally the IPC driver uses VRING to actually pass messages among different (RPMSG CPU, endpoint) tuples. The relationship between RPMSG endpoints and VRING is shown in the figure below.

Fig. 9.5 RPMSG and VRING

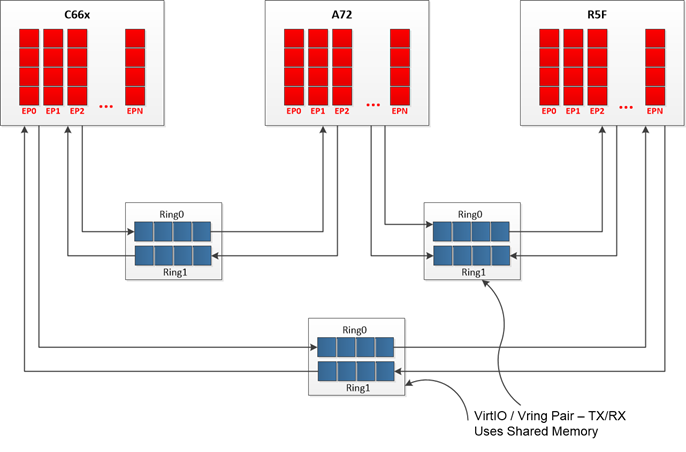

VRING is a shared memory segment between a pair of CPUs which holds the messages passed between the two CPUs. The sequence of events as a message is passed from a sender to a receiver and back again is shown in the figure below.

Fig. 9.6 RPMSG and VRING message exchange data flow

The sequence of steps is described below:

1. An application sends a message to a given destination (CPU, endpoint).
2. The message is first copied from the application to the VRING used between the two CPUs. After this, the IPC driver posts the VRING ID in the HW mailbox.
3. This triggers an interrupt on the destination CPU. In its ISR, the destination CPU extracts the VRING ID and, based on that ID, checks for any messages in that VRING.
4. If a message is received, the ISR extracts the message from the VRING and puts it in the destination RPMSG endpoint queue. It then wakes up the application blocked on this RPMSG endpoint.
5. The application then handles the received message and replies to the sender CPU using the same RPMSG and VRING mechanism in the reverse direction.
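The steps above can be modelled with a small single-process simulation. This is a sketch under stated assumptions, not the driver implementation: the `vring_t`, `mbox_t` and `endpoint_t` types, the slot count, and the `ipc_send`/`ipc_isr` functions are all hypothetical, and the "shared memory" and "mailbox" are plain C structures.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MSG_MAX     512u  /* max payload per message, as stated in this document */
#define VRING_SLOTS 8u    /* illustrative depth, not the driver default */
#define MBOX_DEPTH  4u    /* HW mailbox queue depth */
#define NUM_VRINGS  2u    /* one VRING per direction */

typedef struct { uint16_t len; uint8_t data[MSG_MAX]; } slot_t;

/* Simulated VRING: a ring of message slots standing in for shared memory. */
typedef struct { slot_t slot[VRING_SLOTS]; uint32_t head, tail; } vring_t;

/* Simulated HW mailbox queue holding 32-bit VRING IDs. */
typedef struct { uint32_t word[MBOX_DEPTH]; uint32_t count; } mbox_t;

/* Simulated endpoint queue on the destination CPU (one message deep here). */
typedef struct { slot_t msg; int has_msg; } endpoint_t;

/* Steps 1-2: copy the message into the VRING, then post the VRING ID. */
static int ipc_send(vring_t *vr, mbox_t *mb, uint32_t vring_id,
                    const void *buf, uint16_t len)
{
    assert(vr != NULL && mb != NULL);
    if (len > MSG_MAX) return -1;
    if (vr->head - vr->tail == VRING_SLOTS) return -1; /* VRING full */
    if (mb->count == MBOX_DEPTH) return -1;            /* mailbox full */
    slot_t *s = &vr->slot[vr->head % VRING_SLOTS];
    s->len = len;
    memcpy(s->data, buf, len);
    vr->head++;
    mb->word[mb->count++] = vring_id;                  /* "raises" the interrupt */
    return 0;
}

/* Steps 3-4: "ISR" on the destination CPU. */
static int ipc_isr(vring_t *vrings, mbox_t *mb, endpoint_t *ep)
{
    if (mb->count == 0) return -1;
    uint32_t id = mb->word[0];                         /* extract the VRING ID */
    memmove(&mb->word[0], &mb->word[1], (MBOX_DEPTH - 1) * sizeof(uint32_t));
    mb->count--;
    if (id >= NUM_VRINGS) return -1;
    vring_t *vr = &vrings[id];
    if (vr->head == vr->tail) return -1;               /* nothing pending */
    ep->msg = vr->slot[vr->tail % VRING_SLOTS];        /* second copy: VRING to endpoint */
    vr->tail++;
    ep->has_msg = 1;                                   /* would unblock the application */
    return 0;
}
```

Step 5 is the same pair of calls with the roles swapped and the other VRING used for the reverse direction.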

9.4.3. RPMSG CHAR

- RPMSG CHAR is a user space API which provides access to the RPMSG kernel driver in Linux.
- RPMSG CHAR provides Linux applications with a file I/O interface to read and write messages to different CPUs.
- Applications on RTOS which need to talk to Linux need to "announce" their endpoint to Linux. This creates a device "/dev/rpmsgX" on Linux.
- Linux user space applications can use this device to read and write messages to the associated endpoint on the RTOS side.
- Usual Linux user space APIs, like "select", can be used to wait on multiple endpoints from multiple CPUs.
- A utility library, "ti_rpmsg_char", is provided to simplify discovery and initialization of announced RPMSG endpoints from different CPUs.
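Waiting on several endpoints with `select` can be sketched as below. The helper name `wait_any_readable` is hypothetical, and the sketch deliberately works on plain file descriptors: in a real application they would be obtained by opening the announced RPMSG devices (directly or through the "ti_rpmsg_char" library, whose calls are not shown here).

```c
#include <assert.h>
#include <sys/select.h>
#include <unistd.h>

/* Wait until any descriptor in fds[] is readable.
 * Returns the index of the first readable descriptor,
 * or -1 on timeout or error. */
static int wait_any_readable(const int *fds, int nfds, int timeout_ms)
{
    fd_set rset;
    struct timeval tv;
    int maxfd = -1;

    FD_ZERO(&rset);
    for (int i = 0; i < nfds; i++) {
        assert(fds[i] >= 0 && fds[i] < FD_SETSIZE);
        FD_SET(fds[i], &rset);
        if (fds[i] > maxfd) maxfd = fds[i];
    }
    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;

    if (select(maxfd + 1, &rset, NULL, NULL, &tv) <= 0)
        return -1;                      /* timeout (0) or error (-1) */

    for (int i = 0; i < nfds; i++)
        if (FD_ISSET(fds[i], &rset))
            return i;                   /* caller can now read() this fd */
    return -1;
}
```

The caller would then `read()` the message from the returned descriptor and `write()` the reply to the same descriptor.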

9.4.4. Hardware Mailbox

9.4.4.1. Mailbox features

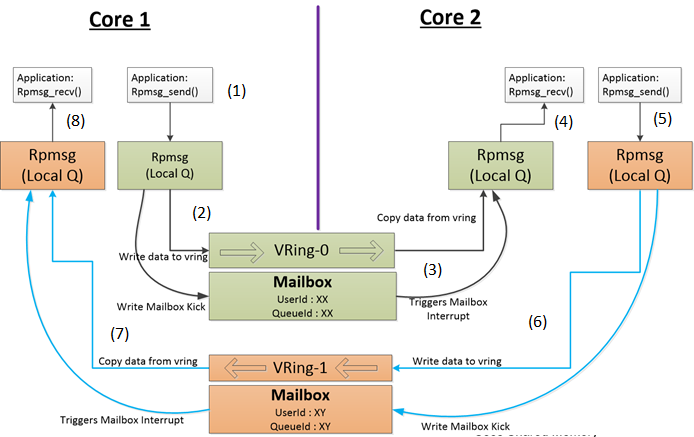

- The hardware mailbox is primarily used to provide an interrupt event notification with a small 32-bit payload.
- VRING uses the hardware mailbox to trigger interrupts on the destination CPU.
- Each mailbox consists of 16 uni-directional HW queues interfacing with a maximum of 4 communication users or CPUs.
- Each mailbox queue can hold up to 4 word-sized messages at a time.
- The following mailbox status and interrupt event notifications are available per communication user:
  - New Message status event for Rx (triggered as long as a mailbox has a message), for each mailbox
  - Not Full status event for Tx (triggered as long as the mailbox FIFO has room)
  - Status register per mailbox for "Mailbox Full" and "Number of unread messages"
- J7 platforms have 12 HW mailbox instances, i.e. 12 x 16 = 192 HW mailbox queues.

The figure below shows a logical block diagram of a hardware mailbox.

Fig. 9.7 Hardware mailbox

9.4.4.2. Typical usage of mailbox IP

A mailbox is associated with a "sender" user and a "receiver" user.

The sender performs the following sequence of steps:

- Check if there is room in the mailbox FIFO (read MAILBOX_FIFOSTATUS_m)
- If not full, write the message to the mailbox and return to the caller (write MAILBOX_MESSAGE_m)
- If full,
  - Store the message in a software queue backing the h/w mailbox FIFO
  - Enable the NotFull event (set the corresponding bit in MAILBOX_IRQENABLE_SET_u) for that mailbox
- Upon interrupt,
  - Clear the interrupt trigger source within the IP (clear the corresponding bit in MAILBOX_IRQSTATUS_CLR_u)
  - Remove messages from the software queue and write them to the mailbox (write as many messages as there are empty slots available)

The receiver performs the following sequence of steps:

- Enable the corresponding mailbox 'NewMsg' event in the corresponding user interrupt configuration register
- Upon interrupt,
  - Read one or more mailbox messages, until the mailbox is empty, into a software-backed queue
  - Clear the interrupt source
  - Process the received messages
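The sender and receiver sequences above can be sketched against a simulated mailbox. Everything here is hypothetical: `sim_mbox_t` stands in for the mailbox registers, `swq_t` is an illustrative software queue, and the function names do not come from the PDK or Linux drivers. Disabling the NotFull event once the backlog is drained is an assumption, made because the event stays asserted while the FIFO has room.

```c
#include <assert.h>
#include <stdint.h>

#define FIFO_DEPTH 4u   /* HW queue depth, as stated in this document */
#define SWQ_DEPTH  16u  /* illustrative software queue depth */

/* Simulated mailbox queue; on hardware these would be register accesses. */
typedef struct {
    uint32_t fifo[FIFO_DEPTH];
    uint32_t count;
    int      notfull_irq_enabled;
} sim_mbox_t;

typedef struct { uint32_t q[SWQ_DEPTH]; uint32_t head, tail; } swq_t;

static int swq_empty(const swq_t *s) { return s->head == s->tail; }

static int swq_push(swq_t *s, uint32_t v)
{
    if (s->head - s->tail == SWQ_DEPTH) return -1;   /* software queue full */
    s->q[s->head++ % SWQ_DEPTH] = v;
    return 0;
}

static uint32_t swq_pop(swq_t *s)
{
    assert(!swq_empty(s));
    return s->q[s->tail++ % SWQ_DEPTH];
}

/* Sender: write directly if there is room, else queue and enable NotFull. */
static int mbox_send(sim_mbox_t *mb, swq_t *backlog, uint32_t msg)
{
    /* Preserve ordering: never bypass messages already waiting in software. */
    if (mb->count < FIFO_DEPTH && swq_empty(backlog)) {
        mb->fifo[mb->count++] = msg;
        return 0;
    }
    if (swq_push(backlog, msg) != 0) return -1;
    mb->notfull_irq_enabled = 1;
    return 0;
}

/* Sender NotFull ISR: move backlog into as many free slots as exist. */
static void mbox_notfull_isr(sim_mbox_t *mb, swq_t *backlog)
{
    while (mb->count < FIFO_DEPTH && !swq_empty(backlog))
        mb->fifo[mb->count++] = swq_pop(backlog);
    if (swq_empty(backlog))
        mb->notfull_irq_enabled = 0;   /* assumption: avoid repeated interrupts */
}

/* Receiver NewMsg ISR: drain the FIFO into a software queue.
 * Returns the number of messages read. */
static uint32_t mbox_newmsg_isr(sim_mbox_t *mb, swq_t *rxq)
{
    uint32_t n = 0;
    while (n < mb->count && swq_push(rxq, mb->fifo[n]) == 0)
        n++;
    /* Keep any unread words (only happens if rxq filled up). */
    for (uint32_t i = n; i < mb->count; i++)
        mb->fifo[i - n] = mb->fifo[i];
    mb->count -= n;
    return n;
}
```

Messages are processed from `rxq` after the ISR returns, which keeps the time spent in interrupt context short.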

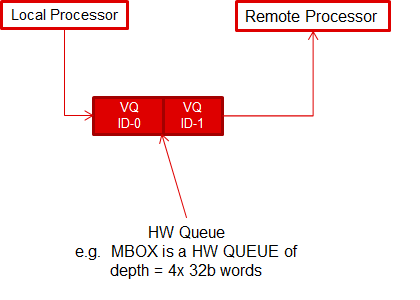

9.4.4.3. Mailbox and VRING

- The mailbox essentially acts as a very small HW queue which holds the VRING ID.
- VRING is a SW queue in shared memory and holds the actual messages passed between two CPUs. When an interrupt is received, the mailbox message tells the receiver which VRING to dequeue messages from.
  - VRING ID = 0 tells the receiver to look at the VRING from sender to receiver
  - VRING ID = 1 tells the receiver to look at the VRING from receiver to sender

Fig. 9.8 Mailbox and VRING

9.4.5. Hardware spinlock

Sometimes mutually exclusive access to a critical section is needed between two CPUs. Typically, within an OS running on a single CPU or CPU cluster, one uses a SW semaphore or mutex for mutual exclusion. However, these have no effect when mutual exclusion is needed between two CPUs each running a different OS instance, say Linux and an RTOS. In such cases, a HW spinlock can be used.

There are 256 HW spinlocks within a single instance of HW spinlock in Jacinto 7 SoC.

J7 platforms have 1 instance of HW spinlock.

Note

Hardware spinlock is not used by IPC driver as such and can be used by applications directly.

Sample code showing usage of HW spinlock can be found in the SDK as shown below:

| SDK Component | File / Folder | Description |
|---|---|---|
| app_utils | app_utils/utils/ipc/src/app_ipc_linux_hw_spinlock.c | HW spinlock usage on Linux |
| app_utils | app_utils/utils/ipc/src/app_ipc_rtos.c [appIpcHwLockAcquire/appIpcHwLockRelease] | HW spinlock usage on RTOS |

Note

- When using a HW spinlock, the CPU "spins" waiting for the lock to be released, hence HW spinlocks should be held only for very short periods.
- Care should be taken to make sure a CPU does not exit without releasing the lock.
- SW timeouts can be used when taking a lock to make sure the CPU does not spin indefinitely.
- It is also recommended to take a local OS lock (POSIX semaphore, pthread_mutex, RTOS semaphore) before taking the HW lock, to make sure tasks/threads/processes on the same CPU do not spin on the same HW spinlock.
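The recommendations above (bounded spinning, local lock first, always release) can be sketched as follows. This is not the SDK sample code: the lock register is simulated, `local_lock_t` is a stand-in for an OS mutex, and all names are hypothetical. The register semantics used here (a read returns 0 when the lock was free and is now taken, 1 when already taken; writing 0 releases) are an assumption to be checked against the device TRM.

```c
#include <assert.h>
#include <stdint.h>

/* Simulated HW lock register (assumed semantics, see lead-in). */
typedef struct { uint32_t taken; } sim_hwlock_t;

static uint32_t hwlock_reg_read(sim_hwlock_t *l)
{
    uint32_t was_taken = l->taken;
    l->taken = 1;               /* a read of a free lock takes it */
    return was_taken;
}

static void hwlock_reg_write0(sim_hwlock_t *l) { l->taken = 0; }

/* Spin on the HW lock, giving up after max_spins attempts (SW timeout). */
static int hwlock_acquire(sim_hwlock_t *l, uint32_t max_spins)
{
    assert(max_spins > 0);
    for (uint32_t i = 0; i < max_spins; i++)
        if (hwlock_reg_read(l) == 0)
            return 0;
    return -1;                  /* timed out */
}

/* Stand-in for a local OS lock (pthread_mutex, RTOS semaphore, ...). */
typedef struct { int held; } local_lock_t;

/* Take the local lock first so only one task per CPU spins on the HW lock. */
static int guarded_acquire(local_lock_t *loc, sim_hwlock_t *hw, uint32_t max_spins)
{
    if (loc->held) return -1;   /* a real OS lock would block here instead */
    loc->held = 1;
    if (hwlock_acquire(hw, max_spins) != 0) {
        loc->held = 0;          /* timed out: do not keep the local lock */
        return -1;
    }
    return 0;
}

/* Release in reverse order: HW lock first, then the local lock. */
static void guarded_release(local_lock_t *loc, sim_hwlock_t *hw)
{
    hwlock_reg_write0(hw);
    loc->held = 0;
}
```

A caller would keep the section between `guarded_acquire` and `guarded_release` as short as possible and make sure every exit path reaches `guarded_release`.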

9.4.6. Performance and Latency

9.4.6.1. Latency

- Latency is a function of:
  - Sender CPU and receiver CPU speeds
  - Interrupt service latency on the receiving CPU
  - Memory access latencies on the sender and receiver CPUs
- Mailbox latency
  - The mailbox is a HW peripheral mapped as MMRs in the SoC memory map; there is some latency for a CPU to read/write those MMRs.
- Two memcpy operations are involved:
  - Sender application to VRING
  - VRING to receiver RPMSG endpoint local queue
  - The size of each memcpy is equal to the message payload size (max 512 B)
- To reduce latency and/or to send larger buffers, it is recommended to pass a pointer/handle/offset to a larger shared memory buffer allocated from the DMA Buf heap.
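Passing a reference instead of the data itself can be sketched as below. The `buf_desc_t` layout and helper names are hypothetical, and the shared region is an ordinary array rather than a DMA Buf allocation. An offset is used in this sketch (rather than a raw pointer) on the assumption that the shared region may be mapped at different addresses on each CPU.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define IPC_MSG_MAX 512u   /* max IPC payload size, as stated in this document */

/* Hypothetical descriptor sent over IPC instead of the buffer contents. */
typedef struct {
    uint32_t offset;   /* byte offset of the buffer inside the shared region */
    uint32_t size;     /* buffer size in bytes */
} buf_desc_t;

/* Sender side: describe a buffer that lives inside the shared region. */
static int desc_make(buf_desc_t *d, size_t region_size,
                     uint32_t offset, uint32_t size)
{
    assert(d != NULL);
    if (offset > region_size || size > region_size - offset)
        return -1;                     /* buffer would fall outside the region */
    d->offset = offset;
    d->size = size;
    return 0;
}

/* Receiver side: validate the descriptor and turn it into a local pointer. */
static uint8_t *desc_resolve(const buf_desc_t *d, uint8_t *region_base,
                             size_t region_size)
{
    if (d->offset > region_size || d->size > region_size - d->offset)
        return NULL;                   /* never trust an unchecked offset */
    return region_base + d->offset;
}
```

Only the few bytes of the descriptor are copied through the VRING, so the two memcpy operations no longer scale with the size of the data.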

See PDK IPC Performance for measured performance on Jacinto 7 SoCs.

9.4.6.2. Performance, DOs and DON'Ts

- The mailbox h/w queue depth is 4 elements.
  - A sender can send 4 messages back to back, after which it needs to wait for the receiver to have "popped" at least one message from the mailbox.
  - The wait is a SW wait, not a CPU/bus stall. SW can use an interrupt to resume from the wait, or poll for the queue to have one free element.
  - In the PDK IPC driver for RTOS, the mailbox is popped by the receiver in ISR context itself, so the mailbox by itself does not create a performance bottleneck.
  - If the receiving CPU is bottlenecked so that its ISR cannot execute, then the sender needs to wait before posting a new message in the mailbox.
- The IPC driver extends the HW queue with a SW queue in shared memory (VRING).
  - The default SW queue length in RPMessage is 256 entries.
- A VRING message can carry at most 512 bytes.
  - For larger data, it is recommended to place the data in shared memory allocated from the DMA Buf heap and transfer only the pointer, which is also faster.

9.4.7. Additional Considerations

9.4.7.1. Security

- The IPC driver does not handle security by itself.
- If message security is important, applications should encrypt and decrypt messages passed over IPC.

9.4.7.2. Message errors

- The IPC driver does not do any error checks on messages, such as CRC or checksums.
- If message integrity is important, applications should compute and verify a CRC or checksum on messages passed over IPC.
- For shared memory used by IPC (VRING), ECC can be enabled at system level to make sure messages and data structures used by IPC do not get corrupted due to HW errors in the memory.
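An application-level integrity check of the kind suggested above can be sketched as follows. The choice of CRC-32 and the framing (CRC appended after the payload) are illustrative assumptions, and `msg_seal`/`msg_verify` are hypothetical helper names. The sketch also assumes both CPUs use the same byte order.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Bitwise CRC-32 (reflected polynomial 0xEDB88320, init and final XOR 0xFFFFFFFF). */
static uint32_t crc32_calc(const uint8_t *p, size_t n)
{
    uint32_t crc = 0xFFFFFFFFu;
    while (n--) {
        crc ^= *p++;
        for (int k = 0; k < 8; k++)
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
    }
    return ~crc;
}

/* Sender: append the CRC after the payload.
 * Returns the total length to send, or 0 if it does not fit in cap bytes. */
static size_t msg_seal(uint8_t *buf, size_t payload_len, size_t cap)
{
    assert(buf != NULL);
    if (payload_len + sizeof(uint32_t) > cap) return 0;
    uint32_t c = crc32_calc(buf, payload_len);
    memcpy(buf + payload_len, &c, sizeof(c));
    return payload_len + sizeof(c);
}

/* Receiver: recompute the CRC and compare. Returns 1 if the message is intact. */
static int msg_verify(const uint8_t *buf, size_t total_len)
{
    uint32_t c;
    if (total_len < sizeof(c)) return 0;
    memcpy(&c, buf + total_len - sizeof(c), sizeof(c));
    return c == crc32_calc(buf, total_len - sizeof(c));
}
```

With a 512-byte message limit, `cap` would be 512, which leaves 508 bytes for the payload in this framing.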

9.4.7.3. Safety

- IPC RTOS SW is currently developed using the TI baseline quality process; however, there is a roadmap to adopt the TI functional safety (FSQ) process to cover systematic faults in SW.
- Further, for FFI (freedom from interference), the following additional instructions need to be followed by a system integrator:
  - RPMSG endpoint queues are local to a CPU, so by using MMU/MPU and firewalls one can ensure that no other CPU or non-CPU master like DMA can access or corrupt another CPU's RPMSG endpoint queues.
  - There is a separate shared memory VRING between each pair of CPUs. Set up the MMU/MPU so that a given CPU can only access the VRINGs it needs.
    - This avoids a CPU inadvertently corrupting another CPU's VRING region.
  - Firewall the VRING shared memory to make sure a non-CPU master like DMA does not inadvertently corrupt it.
  - Each mailbox instance has its own firewall, so for FFI between ASIL and QM OSes (say Linux is QM and RTOS is ASIL), it is recommended to partition the mailboxes such that all communication with the QM OS is on one set of mailboxes and all IPC with ASIL OSes is on another set of mailboxes.
  - Firewall and MPU/MMU setup has to be done outside the IPC driver.
  - VRING and RPMSG endpoint memory is provided by the user to the IPC driver, so the user can control the MPU/MMU and firewall setup to protect these memories.

9.4.8. Documentation References

| SDK Component | Documentation | Description | Section |
|---|---|---|---|
| PDK | IPC LLD driver API for RTOS | IPC Driver | |
| PDK | IPC latency and performance | IPC | |

9.4.9. Source Code References

| SDK Component | File / Folder | Description |
|---|---|---|
| PDK | pdk/packages/ti/drv/ipc/ipc.h | IPC driver interface on RTOS |
| PDK | pdk/packages/ti/drv/ipc/examples | RTOS <-> RTOS, Linux kernel <-> RTOS unit level IPC examples |
| Linux target filesystem | usr/include/ti_rpmsg_char.h | RPMSG CHAR helper functions |
| vision_apps | vision_apps/apps/basic_demos/app_ipc/ | Linux user space (RPMSG CHAR) <-> RTOS unit level IPC examples |
| app_utils | app_utils/utils/ipc | IPC initialization code on RTOS and the HLOS |
| app_utils | app_utils/utils/remote_service | Simple client-server model usage of IPC between Linux user space (RPMSG CHAR) <-> RTOS, RTOS <-> RTOS |
| vision_apps | vision_apps/platform/<soc>/rtos/common_<hlos>/app_ipc_rsctable.h | IPC resource table. This is required for the HLOS to talk with RTOS |