4.9. IPC¶

4.9.1. Introduction¶

The TI Jacinto family of devices contains multiple cores: A72, R5F (MCU or Main domain), and/or DSP (C66x / C7x). The cores present vary by device; refer to the device datasheet for the cores available on a given SoC. Inter-Processor Communication (IPC) provides a communication channel between these cores. IPCLLD is the low-level driver for IPC and provides a core-agnostic and OS-agnostic framework for communication.

More information regarding the TI multicore processors is available .

4.9.2. Terms and Abbreviation¶

| Term | Definition or Explanation |

|---|---|

| IPC | Inter-Processor Communication |

| VirtIO | Virtual I/O driver |

| MailBox | Hardware IP that provides a queued interrupt mechanism used as a communication channel |

| VRing | Ring Buffer in shared memory |

| PDK | Platform Development Kit |

4.9.3. References¶

- For the J721E datasheet, please contact your TI representative

4.9.4. Features¶

- Provides the IPC low-level driver, which can be compiled for any of the cores on Jacinto devices

- Supports RTOS/RTOS and Linux/RTOS communication concurrently on all cores

- Supports RTOS, Linux, QNX (for select devices), and baremetal (no-OS) use cases

- Can be extended to any third-party RTOS by adding an OS adaptation layer

- Provides many examples for quick reference

4.9.5. Installation¶

IPCLLD is part of the TI PDK (Platform Development Kit) package. Once Processor SDK RTOS is installed, the PDK and all dependent packages and toolchains are installed automatically. IPCLLD is located at <PRSDK_HOME>/pdk/packages/ti/drv/ipc. It is also publicly available at https://git.ti.com/processor-sdk/pdk/ under packages/ti/drv/ipc.

It can also be cloned using the following git command:

- git clone git://git.ti.com/processor-sdk/pdk.git


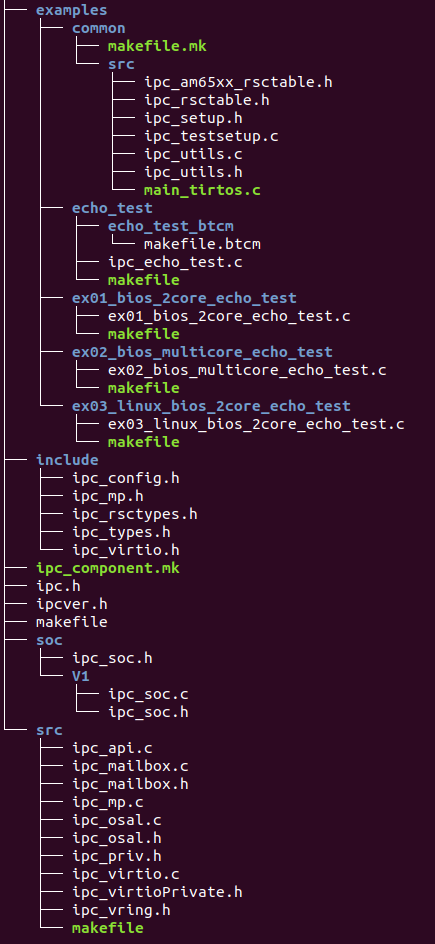

The file and directory oraganization are as below. Note that this is a representative snapshot and additional/different soc folders and examples may be present in latest codebase.

4.9.6. Build¶

IPCLLD uses the PDK build system to build the IPCLLD library and example applications.

4.9.6.1. Dependency of External PDK Components¶

IPCLLD does not access any hardware directly. It uses the PDK/csl component to configure the Mailbox registers, sciclient to set and release the Mailbox interrupts, and PDK/osal for OS-related services such as semaphores.

| PDK component | Use by IPCLLD |

|---|---|

| csl | Configures Mailbox registers |

| sciclient | Sets and releases Mailbox interrupts |

| osal | Registers hardware interrupts (HWI) and provides other kernel services such as semaphores |

4.9.6.2. Command to build IPCLLD¶

The build can be done either from the IPCLLD’s path in the PDK packages, or from the PDK build folder. For either method, the following environment variables must be defined, or supplied with the build command (if they are different from the defaults of the SDK installation):

- SDK_INSTALL_PATH: Installation root for the SDK

- TOOLS_INSTALL_PATH: Location where the toolchains are installed, if different from SDK_INSTALL_PATH

- PDK_INSTALL_PATH: Installation root for the PDK

The following build instructions are for Linux. For Windows build, please replace “make” with “gmake”.

Method 1:

These libraries and examples are built from the IPCLLD’s path in the PDK packages:

PDK_INSTALL_DIR/packages/ti/drv/ipc

| Target | Build Command | Description |

|---|---|---|

| lib | make PDK_INSTALL_PATH=PDK_INSTALL_DIR/packages SDK_INSTALL_PATH=SDK_INSTALL_PATH lib | IPCLLD library |

| apps | make PDK_INSTALL_PATH=PDK_INSTALL_DIR/packages SDK_INSTALL_PATH=SDK_INSTALL_PATH apps | IPCLLD examples |

| clean | make PDK_INSTALL_PATH=PDK_INSTALL_DIR/packages SDK_INSTALL_PATH=SDK_INSTALL_PATH clean | Clean IPCLLD library and examples |

| all | make PDK_INSTALL_PATH=PDK_INSTALL_DIR/packages SDK_INSTALL_PATH=SDK_INSTALL_PATH all | Build IPCLLD library and examples |

Method 2:

To build from the PDK build folder, go to PDK_INSTALL_DIR/packages/ti/build and use the following commands:

| Target | Build Command | Description |

|---|---|---|

| lib | make -s -j BUILD_PROFILE=<debug/release> BOARD=<j721e_evm> CORE=<core_name> ipc | IPCLLD library |

| example | make -s -j BUILD_PROFILE=<debug/release> BOARD=<j721e_evm> CORE=<core_name> ipc_echo_test_freertos | ipc_echo_test example (this can be replaced with any available IPC test name to build the specific test) |

| clean | make -s -j BUILD_PROFILE=<debug/release> BOARD=<j721e_evm> CORE=<core_name> ipc_echo_test_freertos_clean | Clean the ipc_echo_test example (this can be replaced with any available IPC test name, followed by _clean, to clean the specific test) |

See Example Details for list of supported examples.

4.9.6.3. Available Core names¶

- J721E:

- mpu1_0 (A72)

- mcu1_0 (mcu-r5f0_0)

- mcu1_1 (mcu-r5f0_1)

- mcu2_0 (main-r5f0_0)

- mcu2_1 (main-r5f0_1)

- mcu3_0 (main-r5f1_0)

- mcu3_1 (main-r5f1_1)

- c66xdsp_1 (c66x_0)

- c66xdsp_2 (c66x_1)

- c7x_1 (c71x_0)

4.9.6.4. Expected Output¶

The built example binaries can be found in the PDK’s binary folder:

PDK_INSTALL_DIR/packages/ti/binary/<test_name>/bin/j721e_evm/

4.9.7. Running the IPCLLD examples¶

IPCLLD comes with the following examples.

4.9.7.1. Example Details¶

| Name | Description | Expected Results | SoC Supported | Cores Supported | Build Type |

|---|---|---|---|---|---|

| ipc_echo_test | This is the most generic

application where mpu1_0

is running Linux, and all

other cores are running

BIOS. All cores talk to

each other bi-directionally.

Each core will send a ping

message and remote end will

respond with a pong message.

There are 10000 ping/pong

messages exchanged between

each core pair.

The messaging with Linux is

initiated from Linux using

rpmsg-client-sample.

|

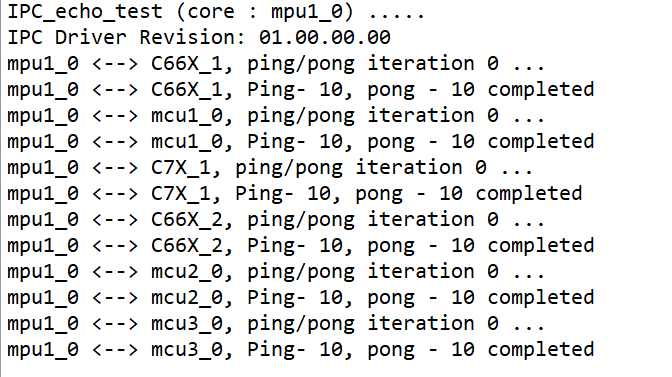

At the end of the

10000 iterations with

a remote core, a

ping/pong completed

message is printed to

the core’s trace buffer.

The buffer may be viewed

with ROV or Linux remotecore

trace buffer.

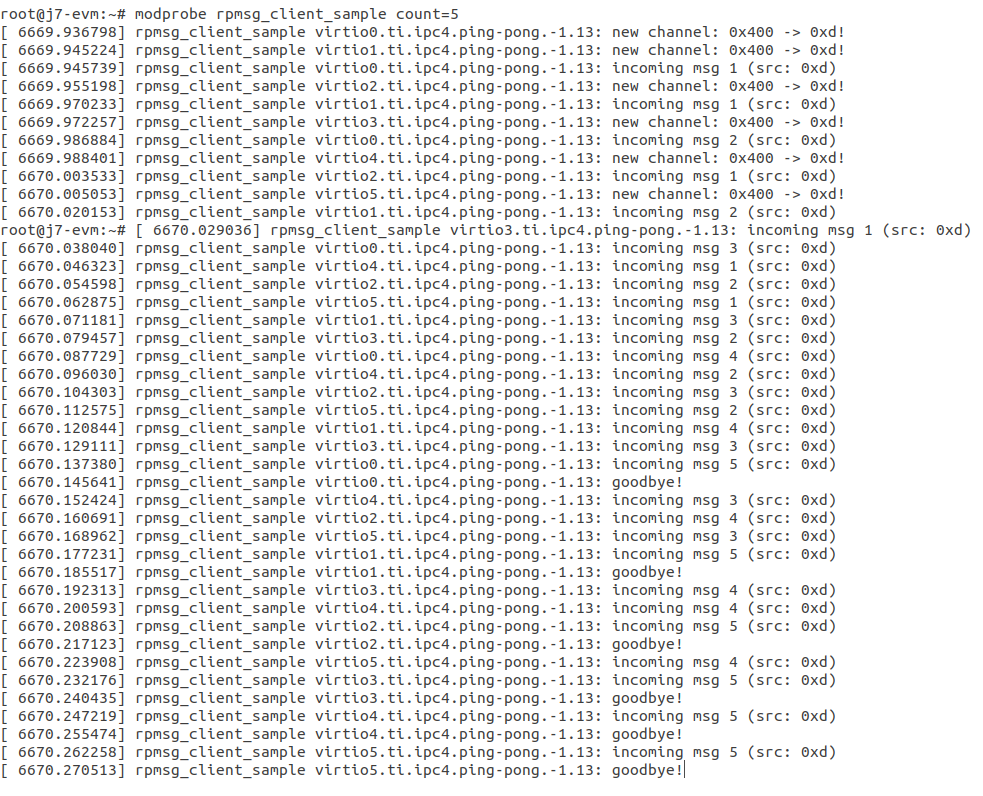

After insmod of the

rpmsg-client-sample

kernel module, traces

indicating the ping/pong

process are printed to

the Linux console for

each message to indicate

success.

|

AM65XX | mcu1_0

mcu1_1

|

makefile |

| J721E | mcu1_0

mcu1_1

mcu2_0

mcu2_1

mcu3_0

mcu3_1

c66xdsp_1

c66xdsp_2

c7x_1

|

makefile | |||

| J7200 | mcu1_0

mcu1_1

mcu2_0

mcu2_1

|

makefile | |||

| AM64X | mcu1_0

mcu1_1

mcu2_0

mcu2_1

|

makefile | |||

| ipc_echo_baremetal_test | This is the same as ipc_echo_test, but with baremetal instead of RTOS. Only the R5Fs are supported for this test. | At the end of the 10000 iterations with a remote core, a ping/pong completed message is printed to the core’s trace buffer. The buffer may be viewed with Linux remotecore trace buffer. After insmod of the rpmsg-client-sample kernel module, traces indicating the ping/pong process are printed to the Linux console for each message to indicate success. | AM65XX | mcu1_0, mcu1_1 | makefile |

| | | | J721E | mcu1_0, mcu1_1, mcu2_0, mcu2_1, mcu3_0, mcu3_1 | makefile |

| | | | J7200 | mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

| | | | AM64X | mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

| ex01_bios_2core_echo_test | This is the simple ping/pong application between 2 cores running BIOS. The default pair of cores depends on the platform. Please check the ex01_bios_2core_echo_test.c source file to know the specific cores. | At the end of the 10000 iterations with a remote core, a ping/pong completed message is printed to the core’s trace buffer. The buffer may be viewed with ROV in CCS. | AM65XX | mcu1_0, mcu1_1 | makefile |

| | | | J721E | mcu2_0, c66xdsp_1 | makefile |

| | | | J7200 | mcu1_0, mcu2_0 | makefile |

| | | | AM64X | mcu1_0, mcu2_0 | makefile |

| ex02_bios_multicore_echo_test | All cores are running BIOS with many-to-many communication, each core sending ping/pong with each other. In this test mpu1_0 is also running BIOS. This test may be used in combination with other cores running the baremetal version of the test (ex02_baremetal test). | At the end of the 10000 iterations with a remote core, a ping/pong completed message is printed to the core’s trace buffer. The buffer may be viewed with ROV in CCS. | AM65XX | mpu1_0, mcu1_0, mcu1_1 | makefile |

| | | | J721E | mpu1_0, mcu1_0, mcu1_1, mcu2_0, mcu2_1, mcu3_0, mcu3_1, c66xdsp_1, c66xdsp_2, c7x_1 | makefile |

| | | | J7200 | mpu1_0, mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

| | | | AM64X | mpu1_0, mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

| ex02_baremetal_multicore_echo_test | All R5Fs are running baremetal with many-to-many communication, each core sending ping/pong with each other. This test may be used in combination with other cores running the BIOS version of the test (ex02_bios test). | At the end of the 10000 iterations with a remote core, a ping/pong completed message is printed to the core’s trace buffer. The buffer may be viewed by viewing the trace buffer memory in CCS memory browser window. The address of the trace buffer can be found by checking the map file for the tracebuf section. | AM65XX | mcu1_0, mcu1_1 | makefile |

| | | | J721E | mcu1_0, mcu1_1, mcu2_0, mcu2_1, mcu3_0, mcu3_1 | makefile |

| | | | J7200 | mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

| | | | AM64X | mcu1_0, mcu1_1, mcu2_0, mcu2_1, m4f_0 | makefile |

| ex03_linux_bios_2core_echo_test | This is the simple ping/pong application, where mpu1_0 is running Linux, and all other cores are running BIOS. Each of the cores running BIOS will communicate only with the mpu1_0 running Linux and not with each other. | After insmod of the rpmsg-client-sample kernel module, traces indicating the ping/pong process are printed to the Linux console for each message to indicate success. | AM65XX | mcu1_0 | makefile |

| | | | J721E | mcu1_0 | makefile |

| | | | J7200 | mcu1_0 | makefile |

| | | | AM64X | mcu1_0 | makefile |

| ex04_linux_baremetal_2core_echo_test | This is the simple ping/pong application, where mpu1_0 is running Linux, and all other cores are running baremetal. Each of the cores running baremetal will communicate only with the mpu1_0 running Linux and not with each other. | After insmod of the rpmsg-client-sample kernel module, traces indicating the ping/pong process are printed to the Linux console for each message to indicate success. | AM65XX | mcu1_0, mcu1_1 | makefile |

| | | | J721E | mcu1_0, mcu1_1, mcu2_0, mcu2_1, mcu3_0, mcu3_1 | makefile |

| | | | J7200 | mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

| | | | AM64X | mcu1_0, mcu1_1, mcu2_0, mcu2_1 | makefile |

4.9.7.2. Loading Remote Firmware¶

Remote firmware can be loaded using CCS or using U-Boot SPL.

Loading using CCS:

Sciclient module contains default system firmware and CCS script to load the system firmware. The load scripts should be modified to reflect the correct full-path of the script location.

Refer to the J721E EVM CCS Setup Documentation section for details on setting up the CCS target configuration and loading the system firmware.

Load the remote binaries

- Menu Run –> Load Program

Run the cores.

After running the cores, the sample output should look something like below.

Loading using SPL/uBoot

Use the following steps to configure the remote firmware for SPL loading with an HLOS running on the MPU:

- Copy the remote firmware to the rootfs, into the /lib/firmware/pdk-ipc folder

- Change to the firmware folder: cd /lib/firmware

- Remove the old soft links for the remote cores: rm j7*

- Create new soft links:

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_c66xdsp_1_release.xe66 j7-c66_0-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_c66xdsp_2_release.xe66 j7-c66_1-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_c7x_1_release.xe71 j7-c71_0-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_mcu3_0_release.xer5f j7-main-r5f1_0-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_mcu3_1_release.xer5f j7-main-r5f1_1-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_mcu2_0_release.xer5f j7-main-r5f0_0-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_mcu2_1_release.xer5f j7-main-r5f0_1-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_testb_mcu1_0_release.xer5f j7-mcu-r5f0_0-fw

- ln -s /lib/firmware/pdk-ipc/ipc_echo_test_mcu1_1_release.xer5f j7-mcu-r5f0_1-fw

- Write the changes to the filesystem: sync

- Reboot the system
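The soft links above pair each PDK core name with the firmware name that Linux looks up for that core. As a quick reference, that pairing can be written as a small lookup table. This is only a sketch built from the J721E link commands listed above; the type and function names (`CoreFwLink`, `fw_link_for_core`) are hypothetical and not part of IPCLLD.

```c
#include <stddef.h>
#include <string.h>

/* One row per remote core: PDK core name and the Linux firmware link name
 * taken from the J721E "ln -s" commands above. */
typedef struct {
    const char *pdk_core;   /* name used in PDK builds, e.g. "mcu2_0" */
    const char *fw_link;    /* soft link name under /lib/firmware */
} CoreFwLink;

static const CoreFwLink k_j721e_links[] = {
    { "c66xdsp_1", "j7-c66_0-fw" },
    { "c66xdsp_2", "j7-c66_1-fw" },
    { "c7x_1",     "j7-c71_0-fw" },
    { "mcu3_0",    "j7-main-r5f1_0-fw" },
    { "mcu3_1",    "j7-main-r5f1_1-fw" },
    { "mcu2_0",    "j7-main-r5f0_0-fw" },
    { "mcu2_1",    "j7-main-r5f0_1-fw" },
    { "mcu1_0",    "j7-mcu-r5f0_0-fw" },
    { "mcu1_1",    "j7-mcu-r5f0_1-fw" },
};

/* Returns the link name for a PDK core name, or NULL if the core is unknown. */
const char *fw_link_for_core(const char *pdk_core)
{
    size_t n = sizeof(k_j721e_links) / sizeof(k_j721e_links[0]);
    for (size_t i = 0; i < n; i++) {
        if (strcmp(k_j721e_links[i].pdk_core, pdk_core) == 0) {
            return k_j721e_links[i].fw_link;
        }
    }
    return NULL;
}
```

For example, `fw_link_for_core("mcu2_0")` yields the link name used for the first Main-domain R5F core, while a core that is not loaded this way (such as mpu1_0) yields NULL.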

4.9.7.3. Running the Echo Tests¶

This section uses ipc_echo_test to demonstrate; the same instructions apply to the other examples that run Linux on the MPU, though the test output may differ slightly.

4.9.8. IPCLLD Design Details¶

- A Ring Buffer is used as shared memory to transfer the data. It must be reserved system-wide. The base address and size of the Ring Buffer must be provided to IPCLLD and must be the same for all core applications. The individual memory ranges for the Ring Buffer between core combinations are calculated internally by the library. The default base address and size used in the IPC examples are:

| Device | Base Address | Size |

|---|---|---|

| J721E | 0xAA000000 | 0x1C00000 |

The VRing base address and size are passed from the application during the Ipc_initVirtIO() call. See Writing HelloWorld App using IPCLLD for an example of usage.

Additionally, the Ring Buffer memory used when communicating with an MPU running Linux must be reserved system-wide. Its base address and size are different from those used between cores not running Linux. They are provided to IPCLLD when Linux updates the core’s resource table with the allocated addresses. Linux allocates the base address from the first memory-region. See Resource Table for more information.

- For each RPMessage object, memory must be provided to the library from the local heap. All subsequent send/recv API calls use the RPMessage buffer provided to the create function.

- Maximum payload/user message size that can be transferred by RPMessage is 496 bytes. For larger data transfers, it is recommended to pass a pointer/handle/offset to a larger shared memory buffer inside the message data.

- For firmware that will communicate with Linux over IPC, a Resource Table is required. See Resource Table for more information.
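The recommendation above for transfers larger than 496 bytes can be sketched as follows: the bulk data stays in a separately reserved shared buffer, and only a small descriptor (offset and length) travels in the message. This is a minimal illustration, not IPCLLD code; `BulkDesc`, `bulk_desc_pack`, and `bulk_desc_resolve` are hypothetical names, and the shared buffer is assumed to be mapped on both cores.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_PAYLOAD_BYTES 496U  /* RPMessage payload limit stated above */

/* Hypothetical descriptor: tells the receiver where the bulk data lives. */
typedef struct {
    uint32_t offset;  /* byte offset from the start of the shared buffer */
    uint32_t length;  /* number of valid bytes at that offset */
} BulkDesc;

/* Packs a descriptor into msg. Returns the message length in bytes, or 0 if
 * the described range falls outside the shared buffer or msg is too small. */
size_t bulk_desc_pack(void *msg, size_t msg_cap,
                      uint32_t offset, uint32_t length, uint32_t shared_size)
{
    BulkDesc d = { offset, length };
    if (msg_cap < sizeof d || sizeof d > MAX_PAYLOAD_BYTES) return 0;
    if (offset > shared_size || length > shared_size - offset) return 0;
    memcpy(msg, &d, sizeof d);
    return sizeof d;
}

/* Resolves a received descriptor to a pointer in the receiver's mapping of
 * the shared buffer. Returns NULL if the descriptor is malformed. */
const uint8_t *bulk_desc_resolve(const void *msg, size_t msg_len,
                                 const uint8_t *shared_base,
                                 uint32_t shared_size, uint32_t *length_out)
{
    BulkDesc d;
    if (msg_len < sizeof d) return NULL;
    memcpy(&d, msg, sizeof d);
    if (d.offset > shared_size || d.length > shared_size - d.offset) return NULL;
    *length_out = d.length;
    return shared_base + d.offset;
}
```

Passing an offset rather than a raw pointer keeps the descriptor valid even when the two cores map the shared buffer at different addresses. The packed bytes would be handed to the send call as the message payload, and the receiver would resolve them after its receive call returns.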

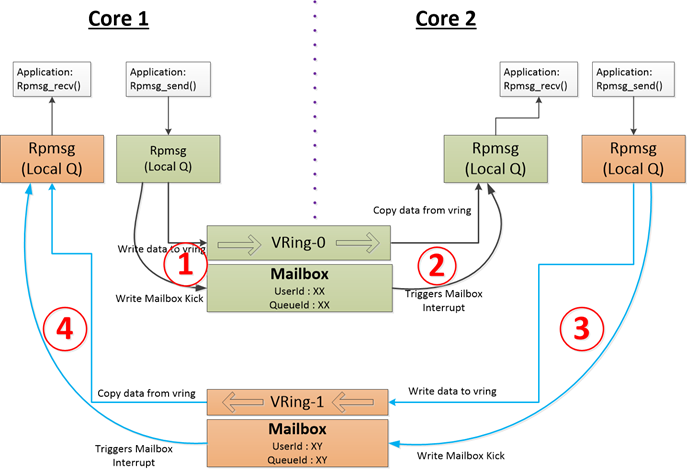

4.9.8.1. Typical Data-Flow in IPCLLD communication between two cores¶

Following picture illustrates the data flow between two cores using mailbox IP as transport.
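As a rough mental model of this flow, the sender copies a message into a ring buffer in shared memory and then signals the remote core through the mailbox; the receiver reacts to the mailbox and copies the message out. The sketch below models only that idea in plain C. It is an assumption-laden simplification: it does not reproduce the VirtIO vring layout or the Mailbox register interface, and every name in it (`ModelRing`, `ModelMailbox`, `model_send`, `model_recv`) is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SLOT_COUNT 4U     /* illustrative; must be a power of two */
#define SLOT_BYTES 512U   /* illustrative slot size */

/* Simplified stand-in for a ring buffer in shared memory. */
typedef struct {
    uint32_t head;                          /* next slot the sender writes */
    uint32_t tail;                          /* next slot the receiver reads */
    uint16_t len[SLOT_COUNT];
    uint8_t  data[SLOT_COUNT][SLOT_BYTES];
} ModelRing;

/* Simplified stand-in for the Mailbox: counts pending notifications. */
typedef struct {
    uint32_t pending;
} ModelMailbox;

/* Sender side: copy the message into the ring, then notify the remote core. */
bool model_send(ModelRing *r, ModelMailbox *mb, const void *buf, uint16_t len)
{
    if (len > SLOT_BYTES || (r->head - r->tail) == SLOT_COUNT) {
        return false;                       /* too large, or ring is full */
    }
    uint32_t slot = r->head % SLOT_COUNT;
    memcpy(r->data[slot], buf, len);
    r->len[slot] = len;
    r->head++;
    mb->pending++;                          /* models the mailbox write */
    return true;
}

/* Receiver side: called when the mailbox signals; copies one message out. */
bool model_recv(ModelRing *r, ModelMailbox *mb, void *buf, uint16_t *len)
{
    if (mb->pending == 0U || r->head == r->tail) {
        return false;                       /* nothing to read */
    }
    uint32_t slot = r->tail % SLOT_COUNT;
    memcpy(buf, r->data[slot], r->len[slot]);
    *len = r->len[slot];
    r->tail++;
    mb->pending--;
    return true;
}
```

On real hardware the two sides run on different cores, so the shared memory must be non-cached (or explicitly maintained) and the notification arrives as an interrupt rather than a counter.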

4.9.8.2. Resource Table¶

For applications that will use Linux IPC, a resource table is required. Example resource tables can be found in the IPC examples:

| Device | Resource Table Example Location |

|---|---|

| J721E | examples/common/src/ipc_rsctable.h |

The resource table must have at least one entry, the VDEV entry, to define the vrings used for IPC communication with Linux. Optionally, the resource table can also have a TRACE entry, which defines the location of the remote core trace buffer.
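To make the shape of such a table concrete, here is a sketch of a table with one VDEV entry (two vrings) and one TRACE entry. It is modeled on the resource table layout in the Linux remoteproc headers, under the assumption that the IPC examples follow the same layout; the authoritative definitions are in ipc_rsctable.h. All `Sk*` names are hypothetical, and the vring alignment, vring size, and trace name are illustrative values only.

```c
#include <stddef.h>
#include <stdint.h>

/* Entry types and IDs as used by the Linux remoteproc/virtio headers. */
#define SK_RSC_TRACE        2U
#define SK_RSC_VDEV         3U
#define SK_VIRTIO_ID_RPMSG  7U
#define SK_ADDR_ANY         0xFFFFFFFFU  /* let the host allocate the address */

typedef struct {
    uint32_t da;        /* device address of the vring, or SK_ADDR_ANY */
    uint32_t align;     /* vring alignment in bytes */
    uint32_t num;       /* number of buffers in the vring */
    uint32_t notifyid;
    uint32_t reserved;
} SkVring;

typedef struct {
    uint32_t type;      /* SK_RSC_VDEV */
    uint32_t id;        /* virtio device ID */
    uint32_t notifyid;
    uint32_t dfeatures;
    uint32_t gfeatures;
    uint32_t config_len;
    uint8_t  status;
    uint8_t  num_of_vrings;
    uint8_t  reserved[2];
} SkVdev;

typedef struct {
    uint32_t type;      /* SK_RSC_TRACE */
    uint32_t da;        /* device address of the trace buffer */
    uint32_t len;       /* trace buffer length in bytes */
    uint32_t reserved;
    char     name[32];
} SkTrace;

typedef struct {
    uint32_t ver;          /* table version */
    uint32_t num;          /* number of entries */
    uint32_t reserved[2];
    uint32_t offset[2];    /* byte offset of each entry from table start */
    SkVdev   vdev;
    SkVring  vring0;
    SkVring  vring1;
    SkTrace  trace;
} SkResourceTable;

/* Builds a table with a VDEV entry (two vrings) and a TRACE entry. */
SkResourceTable sk_make_table(uint32_t trace_da, uint32_t trace_len)
{
    SkResourceTable t = {
        .ver = 1U,
        .num = 2U,
        .offset = { (uint32_t)offsetof(SkResourceTable, vdev),
                    (uint32_t)offsetof(SkResourceTable, trace) },
        .vdev = { .type = SK_RSC_VDEV, .id = SK_VIRTIO_ID_RPMSG,
                  .num_of_vrings = 2U },
        .vring0 = { .da = SK_ADDR_ANY, .align = 4096U, .num = 256U,
                    .notifyid = 1U },
        .vring1 = { .da = SK_ADDR_ANY, .align = 4096U, .num = 256U,
                    .notifyid = 2U },
        .trace = { .type = SK_RSC_TRACE, .da = trace_da, .len = trace_len,
                   .name = "trace:sketch" },
    };
    return t;
}
```

Leaving the vring device addresses as "any" is what lets the Linux driver pick the addresses at load time and write them back into the table.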

The VDEV entry specifies the address as RPMSG_VRING_ADDR_ANY, meaning that the address will be allocated by the Linux driver during loading. The allocation is made from the first memory-region specified in the dts file for the remote core. For example, if the dts entry for mcu_r5fss0_core0 is

reserved_memory: reserved-memory {

#address-cells = <2>;

#size-cells = <2>;

ranges;

mcu_r5fss0_core0_dma_memory_region: r5f-dma-memory@a0000000 {

compatible = "shared-dma-pool";

reg = <0 0xa0000000 0 0x100000>;

no-map;

};

mcu_r5fss0_core0_memory_region: r5f-memory@a0100000 {

compatible = "shared-dma-pool";

reg = <0 0xa0100000 0 0xf00000>;

no-map;

};

};

then the allocation for the vrings for mcu_r5fss0_core0 will come from the 0xa0000000 entry.

Note that this address is specific to the Linux<->remote core VRING, and is different from the address provided to the Virtio module in the remote core firmware in the call to Ipc_initVirtIO.
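With #address-cells = <2> and #size-cells = <2>, each reg property holds four 32-bit cells: the high and low halves of the base address, followed by the high and low halves of the size. The sketch below decodes the two reg entries from the example above to show the address ranges they describe. `RegRange` and `reg_decode` are hypothetical names used only for this illustration.

```c
#include <stdint.h>

/* A decoded "reg" entry for #address-cells = <2> and #size-cells = <2>. */
typedef struct {
    uint64_t base;
    uint64_t size;
} RegRange;

/* Combines four 32-bit cells <addr_hi addr_lo size_hi size_lo> into a range. */
RegRange reg_decode(const uint32_t cells[4])
{
    RegRange r;
    r.base = ((uint64_t)cells[0] << 32) | cells[1];
    r.size = ((uint64_t)cells[2] << 32) | cells[3];
    return r;
}
```

Decoding `<0 0xa0000000 0 0x100000>` gives a 1 MB region at 0xa0000000, the first memory-region, from which the vrings are allocated. Decoding `<0 0xa0100000 0 0xf00000>` gives a 15 MB region that starts exactly where the first one ends.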

4.9.8.3. Memory Considerations¶

As mentioned in IPCLLD Design Details, the Ring Buffer memory must be reserved system-wide. In addition, the Ring Buffer memory should be configured as non-cached on all cores using IPCLLD. For examples of configurations for Ring Buffer memory, refer to the examples in pdk/packages/ti/drv/ipc/examples/.
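When laying out the system memory map, it can help to check that the region reserved for the Ring Buffer does not collide with other reserved regions. The sketch below is a generic interval-overlap check, not part of IPCLLD; `MemRegion` and `regions_overlap` are hypothetical names, and the values in the usage note are the J721E defaults and the example dts entry quoted earlier in this section.

```c
#include <stdbool.h>
#include <stdint.h>

/* A reserved memory region described by base address and size in bytes. */
typedef struct {
    uint64_t base;
    uint64_t size;
} MemRegion;

/* Returns true if the half-open ranges [base, base + size) intersect. */
bool regions_overlap(MemRegion a, MemRegion b)
{
    return a.base < b.base + b.size && b.base < a.base + a.size;
}
```

For example, the default J721E Ring Buffer region (base 0xAA000000, size 0x1C00000) ends at 0xABC00000 and does not overlap the 0xa0000000 region of size 0x100000 used for the Linux vrings in the dts example.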

4.9.9. Writing HelloWorld App using IPCLLD¶

Step1: Initialize MultiProc with the local processor ID (selfProcId), the number of remote cores (numProc), and the list of remote cores (remoteProc)

Ipc_mpSetConfig(selfProcId, numProc, remoteProc);

Step2a: Initialize the Ipc module (RTOS case).

/* Use NULL for params pointer to take all default params */

Ipc_init(NULL);

Step2b: Initialize the Ipc module (baremetal case)

/* Init the params to default and specify the custom newMsgFxn to be notified of new message arrival */

Ipc_initPrms_init(0U, &initPrms);

initPrms.newMsgFxn = &IpcTestBaremetalNewMsgCb;

Ipc_init(&initPrms);

Step3: Load the Resource Table (required only if running Linux on A72/A53)

Ipc_loadResourceTable((void*)&ti_ipc_remoteproc_ResourceTable);

See Resource Table for details on the resource table.

Step4: Initialize VirtIO (vringBaseAddr is the base address of the shared memory used for the Ring Buffer)

vqParam.vqObjBaseAddr = (void*)sysVqBuf;

vqParam.vqBufSize = numProc * Ipc_getVqObjMemoryRequiredPerCore();

vqParam.vringBaseAddr = (void*)VRING_BASE_ADDRESS;

vqParam.vringBufSize = VRING_BUFFER_SIZE;

Ipc_initVirtIO(&vqParam);

Step5: Initialize RPMessage

RPMessage_init(&cntrlParam);

Step6: Send Message

RPMessage_send(handle, dstProc, ENDPT1, myEndPt, (Ptr)buf, len);

Step7a: Receive Message (RTOS case)

RPMessage_recv(handle, (Ptr)buf, &len, &remoteEndPt, &remoteProcId, timeout);

Step7b: Receive Message (baremetal case)

/* After the IPCLLD notifies the app of a new message available via the newMsgFxn, app can call the non-blocking variant to get the message */

RPMessage_recvNb(handle, (Ptr)buf, &len, &remoteEndPt, &remoteProcId);