3. Inference Explained¶

This section explains more details about running and debugging TVM inference. An introduction to this topic is provided in Running Inference.

There are two inference scenarios:

Running TIDL unsupported layers on Arm

Running TIDL unsupported layers on C7x

These

correspond to compiling the model with advanced_options:c7x_codegen=0 or advanced_options:c7x_codegen=1. The same

inference script or application can be used for both scenarios.

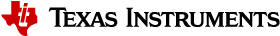

3.1. Running Unsupported Layers on Arm¶

When running unsupported layers on Arm, TI TVM graph runtime on Arm looks at each node in the graph (.json).

If it is a TIDL subgraph, it is dispatched to C7x for execution (via OpenVX).

If it is a non-TIDL node, it is executed with TVM-generated Arm code.

Fig. 3.1 Model inference with unsupported layers mapped to Arm¶

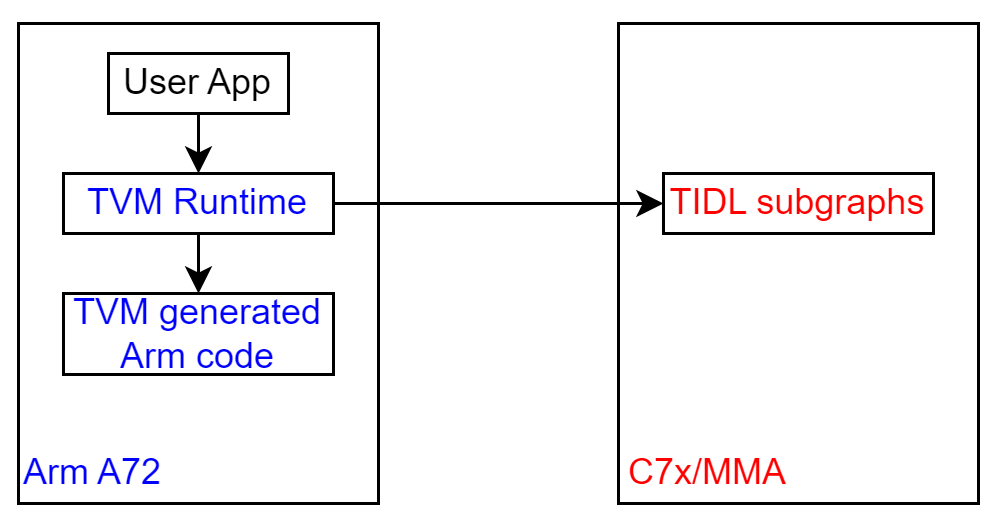

3.2. Running Unsupported Layers on C7x¶

When running unsupported layers on C7x, TI TVM graph runtime on Arm looks at the single node in the wrapper graph (.json), ‘tidl_tvm_0’, and dispatches the whole graph to C7x (via OpenVX).

TI TVM graph runtime on C7x looks at each node in the graph.

If it is a TIDL subgraph, it is executed with the TIDL library.

If it is a non-TIDL node, it is executed with TVM-generated C7x code.

Fig. 3.2 Model inference with unsupported layers mapped to C7x¶

3.3. Debugging Inference¶

TIDL uses the TIDL_RT_DEBUG environment variable to help debug the TVM+TIDL inference flow.

3.3.1. Vision apps “printf” terminal¶

First, you need to open a “printf” terminal, where debug “printf” output from C7x core is shown.

Open a terminal on your EVM, and run the following commands.

# if using EdgeAI Start Kit SDK

root@tda4vm-sk:~# /opt/vx_app_arm_remote_log.out

# if using J721E SDK

root@j7-evm:~# cd /opt/vision_apps/

root@j7-evm:/opt/vision_apps# source ./vision_apps_init.sh

Then, run the inference in a different terminal.

3.3.2. Debugging TIDL subgraphs¶

The available settings for the TIDL_RT_DEBUG environment variable are as follows.

3.3.2.1. TIDL_RT_DEBUG=1¶

When set to 1, TIDL subgraph performance information is printed out during inference, either on the “printf” terminal or on the terminal where inference is running. For example:

[C7x_1 ] 1851913.287814 s: Layer, Layer Cycles,kernelOnlyCycles, coreLoopCycles,LayerSetupCycles,dmaPipeupCycles, dmaPipeDownCycles, PrefetchCycles,copyKerCoeffCycles,LayerDeinitCycles,LastBlockCycles, paddingTrigger, paddingWait,LayerWithoutPad,LayerHandleCopy, BackupCycles, RestoreCycles,

[C7x_1 ] 1851913.287889 s: 0, 201247, 171496, 173177, 1021, 9371, 20, 0, 0, 375, 41487, 6392, 51, 191300, 0, 0, 0,

[C7x_1 ] 1851913.287956 s: 1, 44208, 17603, 18221, 4170, 2679, 18, 0, 0, 662, 17603, 7720, 454, 33530, 2096, 0, 0,

... ... ...

3.3.2.2. TIDL_RT_DEBUG=2, 3¶

When set to 2 or 3, more verbose information about TIDL subgraphs is provided in addition to the information provided when the setting is 1. For example:

[C7x_1 ] 1852081.872134 s: Alg Alloc for Layer # - 0

[C7x_1 ] 1852081.872160 s: Alg Alloc for Layer # - 1

... ... ...

[C7x_1 ] 1852081.873059 s: TIDL Memory requirement

[C7x_1 ] 1852081.873087 s: MemRecNum , Space , Attribute , SizeinBytes

[C7x_1 ] 1852081.873117 s: 0 , DDR , Persistent, 15208

[C7x_1 ] 1852081.873145 s: 1 , DDR , Persistent, 136

... ... ...

[C7x_1 ] 1852081.874400 s: Alg Init for Layer # - 2 out of 32

[C7x_1 ] 1852081.874470 s: Alg Init for Layer # - 3 out of 32

... ... ...

[C7x_1 ] 1852081.911120 s: Starting Layer # - 1

[C7x_1 ] 1852081.911145 s: Processing Layer # - 1

[C7x_1 ] 1852081.911375 s: End of Layer # - 1 with outPtrs[0] = 7002001e

[C7x_1 ] 1852081.911400 s: Starting Layer # - 2

[C7x_1 ] 1852081.911422 s: Processing Layer # - 2

[C7x_1 ] 1852081.911493 s: End of Layer # - 2 with outPtrs[0] = 7004550e

... ...

3.3.2.3. TIDL_RT_DEBUG=4, 5¶

Setting this environment variable to 4 or 5 is supported only when running TIDL unsupported layers on Arm. When set to 4 or 5, tensor output

from each layer in the TIDL subgraph is dumped into the Arm Linux file system, with filenames

tidl_trace_subgraph_<subgraph_id>_<layer_id>_<tensor_shape>.y for raw data and

tidl_trace_subgraph_<subgraph_id>_<layer_id>_<tensor_shape>_float.bin for converted float data.

3.3.3. Debugging TVM nodes¶

The TVM_RT_DEBUG, TVM_RT_TRACE_NODE, TVM_RT_TRACE_SIZE, and TVM_TRACE_NODE environment variables can be used as follows to aid in debugging TVM nodes.

3.3.3.1. TVM_RT_DEBUG=1¶

When set to 1, TVM runtime on Arm collects performance statistics for each node

in the graph and saves into the Arm Linux file system, with the filename tvm_arm.trace. You can use the

python/tvm/contrib/tidl/dump_tvm_trace.py script to dump the details.

When TIDL unsupported layers are run on Arm, the tvm_arm.trace file includes the execution time for

TIDL nodes and layers running on Arm. For example:

# python3 $TVM_HOME/python/tvm/contrib/tidl/dump_tvm_trace.py tvm_arm.trace

Trace size: 633 version: 0x20220728 device: J7 core: Arm

node 1: tidl_8 1520.9 microseconds

node 2: tvmgen_default_fused_multiply 436.81 microseconds

node 3: tidl_7 993.62 microseconds

node 4: tvmgen_default_fused_multiply_1 149.11 microseconds

node 5: tidl_6 1162.075 microseconds

node 6: tvmgen_default_fused_multiply_11 176.68 microseconds

node 7: tidl_5 2233.29 microseconds

node 8: tvmgen_default_fused_multiply_2 120.095 microseconds

node 9: tidl_4 1503.91 microseconds

node 10: tvmgen_default_fused_multiply_3 163.83 microseconds

node 11: tidl_3 1381.615 microseconds

node 12: tvmgen_default_fused_multiply_4 61.94 microseconds

node 13: tidl_2 1195.17 microseconds

node 14: tvmgen_default_fused_multiply_5 70.53 microseconds

node 15: tidl_1 1311.9 microseconds

node 16: tvmgen_default_fused_multiply_51 71.32 microseconds

node 17: tidl_0 1279.285 microseconds

node 4294967295: Graph 13856.93 microseconds

When TIDL unsupported layers are running on C7x, tvm_arm.trace includes only the execution

time for a single node representing the whole graph. For example:

# python3 $TVM_HOME/python/tvm/contrib/tidl/dump_tvm_trace.py tvm_arm.trace

Trace size: 69 version: 0x20220728 device: J7 core: Arm

node 1: tidl_tvm_0 9126.275 microseconds

node 4294967295: Graph 9129.92 microseconds

3.3.3.2. TVM_RT_DEBUG=2¶

When set to 2, in addition to the information provided when the TVM_RT_DEBUG setting is 1, TVM runtime on C7x also collects performance

statistics for each node in the graph on the C7x and saves them to the Arm Linux file system,

with the filename tvm_c7x.trace. You can use the python/tvm/contrib/tidl/dump_tvm_trace.py script

to dump the details. For example:

# python3 $TVM_HOME/python/tvm/contrib/tidl/dump_tvm_trace.py tvm_c7x.trace

Trace size: 631 version: 0x20220728 device: J7 core: C7x

node 1: tidl_8 909.002 microseconds

node 2: tvmgen_default_fused_multiply 62.201 microseconds

node 3: tidl_7 378.24 microseconds

node 4: tvmgen_default_fused_multiply_1 83.055 microseconds

node 5: tidl_6 445.359 microseconds

node 6: tvmgen_default_fused_multiply_1 83.433 microseconds

node 7: tidl_5 1590.525 microseconds

node 8: tvmgen_default_fused_multiply_2 82.979 microseconds

node 9: tidl_4 809.138 microseconds

node 10: tvmgen_default_fused_multiply_3 110.202 microseconds

node 11: tidl_3 802.037 microseconds

node 12: tvmgen_default_fused_multiply_4 46.95 microseconds

node 13: tidl_2 848.143 microseconds

node 14: tvmgen_default_fused_multiply_5 55.662 microseconds

node 15: tidl_1 908.129 microseconds

node 16: tvmgen_default_fused_multiply_5 54.628 microseconds

node 17: tidl_0 990.953 microseconds

node 4294967295: Graph 8283.967 microseconds

The tvm_c7x.trace output is available only if the model was compiled with c7x_codegen=1.

3.3.3.3. TVM_RT_DEBUG=3¶

When set to 3, in addition to the information provided when the setting is 1 or 2, TVM runtime on Arm and C7x collects output tensor statistics for each layer and saves it to the trace files. Collected statistics include minimum, maximum, sum, and the sum of the first half of the tensor.

Please ignore performance numbers obtained in this mode; there is overhead when collecting tensor statistics.

# python3 $TVM_HOME/python/tvm/contrib/tidl/dump_tvm_trace.py tvm_arm.trace

Trace size: 1833 version: 0x20220728 device: J7 core: Arm

node 1: tidl_8 1527.845 microseconds

output 0: ndim=4 type_code=2 elem_bytes=4 num_elements=56448

min=0.0 max=21.715360641479492 sum=234286.71875 fh_sum=110520.9296875

output 1: ndim=4 type_code=2 elem_bytes=4 num_elements=72

min=0.73150634765625 max=0.999969482421875 sum=68.35482788085938 fh_sum=34.87158203125

node 2: tvmgen_default_fused_multiply 416.19 microseconds

output 0: ndim=4 type_code=2 elem_bytes=4 num_elements=56448

min=0.0 max=21.439014434814453 sum=226814.515625 fh_sum=106811.2890625

... ... ...

# python3 $TVM_HOME/python/tvm/contrib/tidl/dump_tvm_trace.py tvm_c7x.trace

Trace size: 1831 version: 0x20220728 device: J7 core: C7x

node 1: tidl_8 934.738 microseconds

output 0: ndim=4 type_code=2 elem_bytes=4 num_elements=56448

min=0.0 max=21.715360641479492 sum=234286.71875 fh_sum=110520.9296875

output 1: ndim=4 type_code=2 elem_bytes=4 num_elements=72

min=0.73150634765625 max=0.999969482421875 sum=68.35482788085938 fh_sum=34.87158203125

node 2: tvmgen_default_fused_multiply 64.704 microseconds

output 0: ndim=4 type_code=2 elem_bytes=4 num_elements=56448

min=0.0 max=21.439014434814453 sum=226814.515625 fh_sum=106811.2890625

... ... ...

3.3.3.4. TVM_RT_DEBUG=4¶

When set to 4, TVM runtime on Arm and C7x prints out information about each node when each node is being executed, either on the terminal where inference is run or the “printf” terminal. This mode can be helpful for debugging the model’s execution.

3.3.3.5. TVM_RT_TRACE_NODE=<node_id> TVM_RT_DEBUG=3, 4¶

When these settings are used, TVM runtime on Arm and C7x also saves the tensor outputs of the specified node into

the trace file. The dump_trace.py script saves the tensor outputs into the Arm Linux file system

as numpy files, with the filename n<node_id>_o<output_id>.npy. For example, see the tensor values saved in

messages in the following example.

Tensor outputs are saved as float values in the trace.

# TVM_RT_TRACE_NODE=1 TVM_RT_DEBUG=4 python3 ./infer_model.py ... ...

... ...

# python3 $TVM_HOME/python/tvm/contrib/tidl/dump_tvm_trace.py tvm_c7x.trace

Trace size: 227911 version: 0x20220728 device: J7 core: C7x

node 1: tidl_8 912.196 microseconds

output 0: ndim=4 type_code=2 elem_bytes=4 num_elements=56448

min=0.0 max=21.715360641479492 sum=234286.71875 fh_sum=110520.9296875

tensor values saved in n1_o0.npy

output 1: ndim=4 type_code=2 elem_bytes=4 num_elements=72

min=0.73150634765625 max=0.999969482421875 sum=68.35482788085938 fh_sum=34.87158203125

tensor values saved in n1_o1.npy

node 2: tvmgen_default_fused_multiply 65.955 microseconds

output 0: ndim=4 type_code=2 elem_bytes=4 num_elements=56448

min=0.0 max=21.439014434814453 sum=226814.515625 fh_sum=106811.2890625

node 3: tidl_7 388.9 microseconds

3.3.3.6. TVM_RT_TRACE_SIZE=<new_size> TVM_TRACE_NODE=<node_id> TVM_RT_DEBUG=3, 4¶

If you see a Not enough trace memory for dumping output <node_id> message in the verbose

output for a node, you can use TVM_RT_TRACE_SIZE to increase the size of the trace buffer that stores

the trace. The default is 2*1024*1024 (2MB) bytes.