4.1. TI-RTOS Kernel

4.2. XDAIS

4.3. FC

4.4. IPC

Note

This section mainly describes the software distributed as part of the IPC 3.x package (installed under ipc_<version>). The IPC package provides the IPC APIs with a higher-level software abstraction. In addition, starting from the Processor SDK 6.1 release, a lower-level IPC driver (IPC LLD) using an rpmsg-based transport is included specifically for AM6x/J7 platforms. For additional details, see IPC 3.x vs IPC LLD.

Note

Starting with releases after the 6.3 release, IPC 3.x will be deprecated on the AM65x device, and IPC LLD will be the only IPC stack supported. Please stop new development on top of IPC 3.x for AM65x and migrate to the IPC LLD stack. See IPC LLD for AM65x/J721E for information on IPC LLD.

4.4.1. IPC User’s Guide

Inter-Processor Communication (IPC) provides a processor-agnostic API which can be used for communication between processors in a multi-processor environment (inter-core), communication to other threads on the same processor (inter-process), and communication to peripherals (inter-device). The API supports message passing, streams, and linked lists. IPC can be used to communicate with the following:

Other threads on the same processor

Threads on other processors running SYS/BIOS

Threads on other processors running an HLOS (e.g., Linux, QNX, Android)

4.4.2. Overview

This user’s guide contains the topics in the following list. It also links to API reference documentation for static configuration and run-time C processing for each module.

Getting Started

Use Cases for IPC explains the various use cases for IPC.

IPC Examples explains how to build and generate the IPC examples.

IPC Training lists available IPC training.

IPC 3.x provides details of IPC 3.x releases.

Application Development

Create DSP and IPU firmware using PDK drivers and IPC to load from ARM Linux on AM57xx devices

Customizing Memory map for creating Multicore Applications on AM57xx using IPC

Optimizing IPC Applications provides hints for improving the runtime performance and shared memory usage of applications that use IPC.

IPC Benchmarking describes benchmarking with IPC 3.x.

IPC Custom ResourceTable provides details of customizing the resource table.

IPC Internal/API & Other Useful Links

The TI SDO IPC Package section describes the modules in the ti.sdo.ipc package.

The TI SDO Utils Package section describes the modules in the ti.sdo.utils package.

IPC 3.x Migration Guide provides details of migrating to IPC 3.x from previous releases.

IPC GateMP Support for UIO and Misc Driver provides details of IPC GateMP support with the UIO driver.

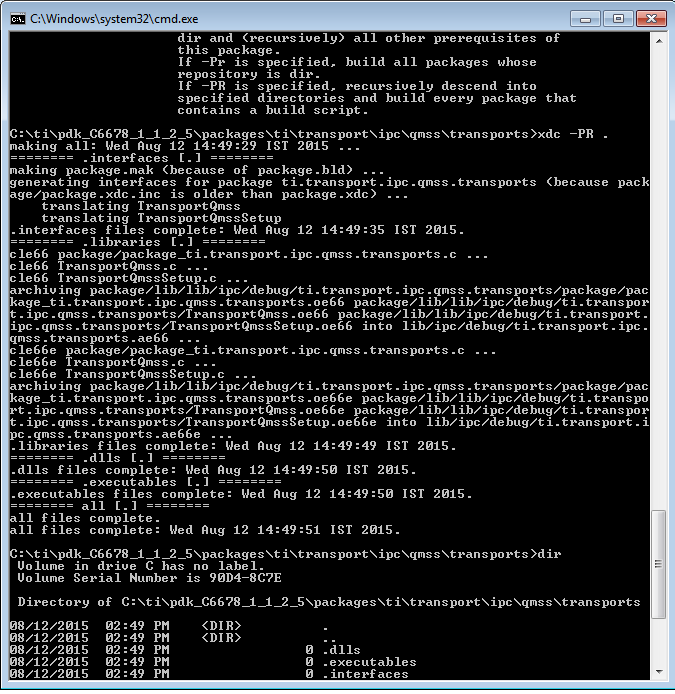

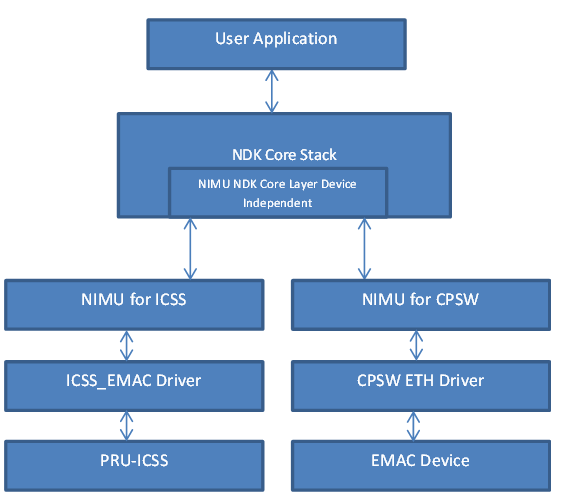

RTOS IPC Transports explains details of the additional RTOS IPC transports provided via the Processor SDK PDK component.

Rebuilding IPC explains how to rebuild the IPC libraries if you modify the source files.

FAQ

IPC 3.x FAQ lists frequently asked questions on IPC 3.x.

Note

Please see the release notes in your IPC installation before starting to use IPC. The release notes contain important information about feature support, issues, and compatibility information for a particular release.

4.4.3. Use Cases for IPC

You can use IPC modules in a variety of combinations. From the simplest setup to the setup with the most functionality, the use case options are as follows. A number of variations of these cases are also possible:

Minimal use of IPC. (BIOS-to-BIOS only) This scenario performs inter-processor notification. The amount of data passed with a notification is minimal, typically on the order of 32 bits. This scenario is best used for simple synchronization between processors without the overhead and complexity of message-passing infrastructure.

Add data passing. (BIOS-to-BIOS only) This scenario adds the ability to pass linked list elements between processors to the previous minimal scenario. The linked list implementation may optionally use shared memory and/or gates to manage synchronization.

Add dynamic allocation. (BIOS-to-BIOS only) This scenario adds the ability to dynamically allocate linked list elements from a heap.

Powerful but easy-to-use messaging. (HLOS and BIOS) This scenario uses the MessageQ module for messaging. The application configures other modules. However, the APIs for other modules are then used internally by MessageQ, rather than directly by the application.

In the following sections, figures show modules used by each scenario.

Blue boxes identify modules for which your application will call C API functions other than those used to dynamically create objects.

Red boxes identify modules that require only configuration by your application. Static configuration is performed in an XDCtools configuration script (.cfg). Dynamic configuration is performed in C code.

Grey boxes identify modules that are used internally but do not need to be configured or have their APIs called.

4.4.3.1. Minimal Use Scenario (BIOS-to-BIOS only)

This scenario performs inter-processor notification using a Notify driver, which is used by the Notify module. This scenario is best used for simple synchronization in which you want to send a message to another processor to tell it to perform some action and optionally have it notify the first processor when it is finished.

In this scenario, you make API calls to the Notify module. For example, the Notify_sendEvent() function sends an event to the specified processor. You can dynamically register callback functions with the Notify module to handle such events.

You must statically configure MultiProc module properties, which are used by the Notify module.

The amount of data passed with a notification is minimal. You can send an event number, which is typically used by the callback function to determine what action it needs to perform. Optionally, a small “payload” of data can also be sent.

See Notify Module and MultiProc Module.
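The event-number-plus-payload pattern described above can be sketched in plain C. This is a host-side model only, not the IPC Notify API: every `demo_` name, the table size, and the same-process delivery are assumptions made for illustration. In a real application, registration and sending are done through the Notify module (for example, Notify_sendEvent()).

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of event dispatch; this is NOT the TI Notify API. */
#define DEMO_MAX_EVENTS 32u

/* Callback type: receives the event number and a small 32-bit payload. */
typedef void (*demo_event_fn)(uint32_t event_id, uint32_t payload, void *arg);

typedef struct {
    demo_event_fn fn;  /* NULL means no callback is registered */
    void *arg;         /* user argument handed back to the callback */
} demo_event_slot;

static demo_event_slot demo_slots[DEMO_MAX_EVENTS];

/* Register a callback for one event number. Returns 0 on success, -1 on error. */
int demo_register_event(uint32_t event_id, demo_event_fn fn, void *arg)
{
    if (event_id >= DEMO_MAX_EVENTS || fn == NULL) {
        return -1;
    }
    if (demo_slots[event_id].fn != NULL) {
        return -1; /* this event number already has a callback */
    }
    demo_slots[event_id].fn = fn;
    demo_slots[event_id].arg = arg;
    return 0;
}

/* Deliver an event. In this model the "remote" side is the same process. */
int demo_send_event(uint32_t event_id, uint32_t payload)
{
    if (event_id >= DEMO_MAX_EVENTS || demo_slots[event_id].fn == NULL) {
        return -1; /* nobody is listening for this event */
    }
    demo_slots[event_id].fn(event_id, payload, demo_slots[event_id].arg);
    return 0;
}

/* Example callback: stores the payload in the variable passed as 'arg'. */
void demo_store_payload(uint32_t event_id, uint32_t payload, void *arg)
{
    (void)event_id;
    *(uint32_t *)arg = payload;
}
```

The callback receives the event number so that one function can serve several events and choose its action from the number, while the payload stays small enough to fit in a single 32-bit word.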

4.4.3.2. Data Passing Scenario (BIOS-to-BIOS only)

In addition to the IPC modules used in the previous scenario, you can use the ListMP module to share a linked list between processors.

In this scenario, you make API calls to the Notify and ListMP modules.

The ListMP module is a doubly linked list designed to be shared by multiple processors. ListMP differs from a conventional “local” linked list in the following ways:

Address translation is performed internally upon pointers contained within the data structure.

Cache coherency is maintained when cacheable shared memory is used.

A multi-processor gate (GateMP) is used to protect read/write accesses to the list by two or more processors.

ListMP uses SharedRegion’s lookup table to manage access to shared memory, so configuration of the SharedRegion module is required.
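To see why address translation is needed, consider that each processor may map the same shared region at a different local address. A pointer stored in shared memory therefore has to be kept in a processor-independent form and converted on use. The sketch below models this with a region index plus offset. The `demo_` types and functions are hypothetical and do not reproduce the SharedRegion implementation or its actual pointer encoding.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-processor view of one shared region. */
typedef struct {
    uintptr_t base;  /* local address at which this processor maps the region */
    size_t    len;   /* region length in bytes */
} demo_region;

/* Processor-independent pointer: region index plus byte offset. */
typedef struct {
    uint16_t region_id;
    uint32_t offset;
} demo_shared_ptr;

/* Convert a local address to the portable form.
 * Returns 0 on success, -1 if no region in the table contains the address. */
int demo_to_shared(const demo_region *table, uint16_t count,
                   uintptr_t local, demo_shared_ptr *out)
{
    for (uint16_t i = 0; i < count; i++) {
        if (local >= table[i].base && local - table[i].base < table[i].len) {
            out->region_id = i;
            out->offset = (uint32_t)(local - table[i].base);
            return 0;
        }
    }
    return -1;
}

/* Convert a portable pointer back to a local address using this
 * processor's own table. Returns 0 if the portable pointer is invalid. */
uintptr_t demo_to_local(const demo_region *table, uint16_t count,
                        demo_shared_ptr sp)
{
    if (sp.region_id >= count || sp.offset >= table[sp.region_id].len) {
        return 0;
    }
    return table[sp.region_id].base + sp.offset;
}
```

In this model, a list element at offset 0x10 of region 0 resolves to a different local address on each processor, yet both processors agree on which element is meant.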

Internally, ListMP can optionally use the NameServer module to manage name/value pairs. The ListMP module also uses a GateMP object, which your application must configure. The GateMP is used internally to synchronize access to the list elements.

See ListMP Module, GateMP Module, SharedRegion Module, and NameServer Module.

4.4.3.3. Dynamic Allocation Scenario (BIOS-to-BIOS only)

To the previous scenario, you can add dynamic allocation of ListMP elements using one of the Heap*MP modules.

In this scenario, you make API calls to the Notify and ListMP modules and a Heap*MP module.

In addition to the modules that you configured for the previous scenario, the Heap*MP modules use a GateMP that you must configure. You may use the same GateMP instance used by ListMP.

See Heap*MP Modules and GateMP Module.

4.4.3.4. Powerful But Easy-to-Use Messaging with MessageQ (HLOS and BIOS)

Finally, to use the most sophisticated inter-processor communication scenario supported by IPC, you can add the MessageQ module. Note that the following diagram shows one particular transport (TransportShm) and may not apply to all devices and/or environments.

In this scenario, you make API calls to the MessageQ module for inter-processor communication.

API calls made to the Notify, ListMP, and Heap*MP modules in the previous scenarios are not needed. Instead, your application only needs to configure the MultiProc and (if the underlying MessageQ transport requires it) the SharedRegion modules.

Note

Some MessageQ transports do not use SharedRegion, but instead copy the message payloads. This may be done because of hardware limitations (e.g. no shared memory is available) or software design (e.g. transports built on Linux kernel-friendly rpmsg drivers).

The Ipc_start() API call configures all the necessary underlying modules (e.g. Notify, HeapMemMP, ListMP, TransportShm, NameServer, and GateMP). The actual details of which modules Ipc_start() initializes vary from environment to environment.

It is possible to use MessageQ in a single-processor SYS/BIOS application. In such a case, only API calls to MessageQ and configuration of any xdc.runtime.IHeap implementation are needed.

4.4.4. IPC Examples

4.4.4.1. Building IPC Examples

The IPC BIOS examples are located in the Processor SDK RTOS IPC directory within the examples folder. Please refer to the readme of each example for details on each example.

The examples are makefile based and can be built using the top-level makefile provided in the Processor SDK RTOS folder. The following commands will build all of the IPC examples.

Windows

cd (Processor SDK RTOS folder)

setupenv.bat

gmake ipc_examples

Linux

cd (Processor SDK RTOS folder)

source ./setupenv.sh

make ipc_examples

4.4.4.2. IPC Examples: Details

This section explains some of the common details about the IPC examples. The sub-directories under the examples hold the code for each of the cores in the SOC. For example:

Host

DSP1

DSP2

IPU1

IPU2

Typically there is a host core, which is the main core in the SOC, and a number of slave cores. The directory names of the slave cores consist of a base name (such as DSP or IPU) that indicates the type of core, followed by a core number. Depending on the example, the host can run TI BIOS, Linux, or QNX, while the slave cores in general run TI BIOS only. The build-related files therefore need to be interpreted accordingly.

4.4.4.2.1. BIOS Application Configuration Files

Each core running BIOS generally has a configuration file that brings in all the modules needed to complete the application running on that core. This section explains the entries in the configuration file. Note: The details here are representative of a typical configuration. In general, the configuration is customized for the particular example.

4.4.4.2.1.1. BIOS Configuration

The following settings configure BIOS:

var BIOS = xdc.useModule('ti.sysbios.BIOS');

/* Run the IPC startup function as part of BIOS startup, before main() */

BIOS.addUserStartupFunction('&IpcMgr_ipcStartup');

/* The following configures Debug libtype with Debug build */

if (Program.build.profile == "debug") {

BIOS.libType = BIOS.LibType_Debug;

} else {

BIOS.libType = BIOS.LibType_Custom;

}

var Sem = xdc.useModule('ti.sysbios.knl.Semaphore');

var instSem0_Params = new Sem.Params();

instSem0_Params.mode = Sem.Mode_BINARY;

Program.global.runOmpSem = Sem.create(0, instSem0_Params);

Program.global.runOmpSem_complete = Sem.create(0, instSem0_Params);

var Task = xdc.useModule('ti.sysbios.knl.Task');

Task.common$.namedInstance = true;

/* default memory heap */

var Memory = xdc.useModule('xdc.runtime.Memory');

var HeapMem = xdc.useModule('ti.sysbios.heaps.HeapMem');

var heapMemParams = new HeapMem.Params();

heapMemParams.size = 0x8000;

Memory.defaultHeapInstance = HeapMem.create(heapMemParams);

/* create a heap for MessageQ messages */

var HeapBuf = xdc.useModule('ti.sysbios.heaps.HeapBuf');

var params = new HeapBuf.Params;

params.align = 8;

params.blockSize = 512;

params.numBlocks = 20;

4.4.4.2.1.2. XDC Runtime

The following modules are generally used by an IPC application in BIOS:

/* application uses the following modules and packages */

xdc.useModule('xdc.runtime.Assert');

xdc.useModule('xdc.runtime.Diags');

xdc.useModule('xdc.runtime.Error');

xdc.useModule('xdc.runtime.Log');

xdc.useModule('xdc.runtime.Registry');

xdc.useModule('ti.sysbios.knl.Semaphore');

xdc.useModule('ti.sysbios.knl.Task');

4.4.4.2.1.3. IPC Configuration

The following IPC modules are used in a typical IPC application.

xdc.useModule('ti.sdo.ipc.Ipc');

xdc.useModule('ti.ipc.ipcmgr.IpcMgr');

var MultiProc = xdc.useModule('ti.sdo.utils.MultiProc');

/* The following configures the PROC List */

MultiProc.setConfig("CORE0", ["HOST", "CORE0"]);

var msgHeap = HeapBuf.create(params);

var MessageQ = xdc.useModule('ti.sdo.ipc.MessageQ');

/* Register msgHeap with messageQ */

MessageQ.registerHeapMeta(msgHeap, 0);

The following lines configure the placement of the resource table in memory. Note that on some platforms or in some applications, the resource table may be placed in a different section of the memory map.

/* Enable Memory Translation module that operates on the Resource Table */

var Resource = xdc.useModule('ti.ipc.remoteproc.Resource');

Resource.loadSegment = Program.platform.dataMemory;

4.4.4.2.1.4. Transport Configuration

Typically the transport to be used by IPC is specified here. The following snippet configures an RPMsg-based transport.

/* Setup MessageQ transport */

var VirtioSetup = xdc.useModule('ti.ipc.transports.TransportRpmsgSetup');

MessageQ.SetupTransportProxy = VirtioSetup;

4.4.4.2.1.5. NameServer Configuration

The NameServer to be used is specified here.

/* Setup NameServer remote proxy */

var NameServer = xdc.useModule("ti.sdo.utils.NameServer");

var NsRemote = xdc.useModule("ti.ipc.namesrv.NameServerRemoteRpmsg");

NameServer.SetupProxy = NsRemote;

4.4.4.2.1.6. Instrumentation Configuration

The following settings are required for system logging and diagnostics.

/* system logger */

var LoggerSys = xdc.useModule('xdc.runtime.LoggerSys');

var LoggerSysParams = new LoggerSys.Params();

var Defaults = xdc.useModule('xdc.runtime.Defaults');

Defaults.common$.logger = LoggerSys.create(LoggerSysParams);

/* enable runtime Diags_setMask() for non-XDC spec'd modules */

var Diags = xdc.useModule('xdc.runtime.Diags');

Diags.setMaskEnabled = true;

/* override diags mask for selected modules */

xdc.useModule('xdc.runtime.Main');

Diags.setMaskMeta("xdc.runtime.Main",

Diags.ENTRY | Diags.EXIT | Diags.INFO, Diags.RUNTIME_ON);

var Registry = xdc.useModule('xdc.runtime.Registry');

Registry.common$.diags_ENTRY = Diags.RUNTIME_OFF;

Registry.common$.diags_EXIT = Diags.RUNTIME_OFF;

Registry.common$.diags_INFO = Diags.RUNTIME_OFF;

Registry.common$.diags_USER1 = Diags.RUNTIME_OFF;

Registry.common$.diags_LIFECYCLE = Diags.RUNTIME_OFF;

Registry.common$.diags_STATUS = Diags.RUNTIME_OFF;

var Main = xdc.useModule('xdc.runtime.Main');

Main.common$.diags_ASSERT = Diags.ALWAYS_ON;

Main.common$.diags_INTERNAL = Diags.ALWAYS_ON;

4.4.4.2.1.7. Other Optional Configurations

In addition to the above configurations, other platform-specific configurations may be used to enable certain features.

For example, the following lines enable the device exception handler. (Note that the Deh module may not be available on all devices.)

var Idle = xdc.useModule('ti.sysbios.knl.Idle');

var Deh = xdc.useModule('ti.deh.Deh');

/* Must be placed before pwr mgmt */

Idle.addFunc('&ti_deh_Deh_idleBegin');

4.4.4.3. Building IPC examples using products.mak

The following sections discuss how to individually build the IPC examples by modifying the products.mak file.

The IPC product contains an examples/archive directory with device-specific examples. Once you have identified your device, you can unzip the examples anywhere on your build host. Typically, after unzipping, you edit the example’s individual products.mak file and invoke make.

Note

A common place to unzip the examples is into the IPC_INSTALL_DIR/examples/ directory. Each example’s products.mak file is smart enough to look up two directories (in this case, into IPC_INSTALL_DIR) for a master products.mak file, and if found it uses those variables. This technique enables users to set the dependency variables in one place, namely IPC_INSTALL_DIR/products.mak.

Each example contains a readme.txt with example-specific details.

4.4.4.3.1. Generating Examples

The IPC product will come with the generated examples directory. The IPC product is what is typically delivered with SDKs such as Processor SDK. However, some SDKs point directly to the IPC git tree for the IPC source. In this case, the IPC Examples can be generated separately.

4.4.4.3.2. Tools

The following tools need to be installed:

XDC tools (check the IPC release notes for compatible version)

4.4.4.3.3. Source Code

mkdir ipc

cd ipc

git clone git://git.ti.com/ipc/ipc-metadata.git

git clone git://git.ti.com/ipc/ipc-examples.git

Then check out the IPC release tag that is associated with the IPC version being used. Do this for both repos. For example:

git checkout 3.42.01.03

4.4.4.3.4. Build

cd ipc-examples/src

make .examples XDC_INSTALL_DIR=<path_to_xdc_tools> IPCTOOLS=<path_to_ipc-metadata>/src/etc

For example:

make .examples XDC_INSTALL_DIR=/opt/ti/xdctools_3_32_00_06_core IPCTOOLS=/home/user/ipc/ipc-metadata/src/etc

The “examples” directory will be generated in the path “ipc-examples/src/”:

ipc-examples/src/examples

4.4.5. IPC Training

Introduction to Inter-Processor Communication (IPC) for KeyStone and Sitara™ Devices - The IPC software package is designed to hide the lower-layer hardware complexity of multi-core devices and help users to quickly develop applications for data transfer between cores or devices. IPC also maximizes application software reuse by providing a common API interface across all supported platforms, including AM65x, AM57x, 66AK2Gx, 66AK2Ex, 66AK2Hx, TCI663x, TDA3XX, OMAP-L138, OMAP54XX, DRA7XX, and more. This training video introduces the IPC features and modules, shows how to build the IPC libraries for your platform, examines the RPMsg framework, and provides a look at the included examples and benchmarks.

Building and Running Inter-Processor Communication (IPC) Examples on the AM572x GP EVM - For TI embedded processors with heterogeneous multi-core architectures – like the AM57x family of devices, which have integrated ARM, DSP, and M4 cores – the TI Processor Software Development Kit (SDK) provides a software component called Inter-processor Communication (IPC). This video demonstrates how to build and run IPC examples on the AM572x General Purpose Evaluation Module (GP EVM) using Processor SDK for Linux.

IPC Training v2.21 - IPC 3.x Full Training Material (PowerPoint) (PowerPointShow) (PDF)

IPC Lab 1 - Hello (PowerPoint) (PowerPointShow) (PDF)

IPC Lab 2 - MessageQ (PowerPoint) (PowerPointShow) (PDF)

IPC Lab 3 - Scalability (PowerPoint) (PowerPointShow) (PDF)

4.4.6. TI SDO IPC Package

4.4.6.1. Introduction

This page introduces the modules in the ti.sdo.ipc package.

Note

This package is not used on HLOS-based cores. Although this is a BIOS-only package, note that the BIOS-side of a HLOS<->BIOS IPC-using application will need to bring in a subset of these packages into the BIOS-side configuration scripts.

The ti.sdo.ipc package contains the following modules that you may use in your applications:

| Module | Module Path | Description |
|---|---|---|
| GateMP | ti.sdo.ipc.GateMP | Manages gates for mutual exclusion of shared resources by multiple processors and threads. See GateMP Module. |
| HeapBufMP | ti.sdo.ipc.heaps.HeapBufMP | Fixed-sized shared memory Heaps. Similar to SYS/BIOS’s ti.sysbios.heaps.HeapBuf module, but with some configuration differences. See HeapMP Module. |
| HeapMemMP | ti.sdo.ipc.heaps.HeapMemMP | Variable-sized shared memory Heaps. See HeapMP Module. |
| HeapMultiBufMP | ti.sdo.ipc.heaps.HeapMultiBufMP | Multiple fixed-sized shared memory Heaps. See HeapMP Module. |
| Ipc | ti.sdo.ipc.Ipc | Provides Ipc_start() function and allows startup sequence configuration. See IPC Module. |
| ListMP | ti.sdo.ipc.ListMP | Doubly-linked list for shared-memory, multi-processor applications. Very similar to the ti.sdo.utils.List module. See ListMP Module. |
| MessageQ | ti.sdo.ipc.MessageQ | Variable size messaging module. See MessageQ Module. |
| TransportShm | ti.sdo.ipc.transports.TransportShm | Transport used by MessageQ for remote communication with other processors via shared memory. See MessageQ Module. |
| Notify | ti.sdo.ipc.Notify | Low-level interrupt mux/demuxer module. See Notify Module. |
| NotifyDriverShm | ti.sdo.ipc.notifyDrivers.NotifyDriverShm | Shared memory notification driver used by the Notify module to communicate between a pair of processors. See Notify Module. |
| SharedRegion | ti.sdo.ipc.SharedRegion | Maintains shared memory for multiple shared regions. See SharedRegion Module. |

Additional modules in the subfolders of the ti.sdo.ipc package contain specific implementations of gates, heaps, notify drivers, transports, and various device family-specific modules.

In addition, the ti.sdo.ipc package defines the following interfaces that you may implement as your own custom modules:

| Module | Module Path |
|---|---|
| IGateMPSupport | ti.sdo.ipc.interfaces.IGateMPSupport |
| IInterrupt | ti.sdo.ipc.notifyDrivers.IInterrupt |
| IMessageQTransport | ti.sdo.ipc.interfaces.IMessageQTransport |
| INotifyDriver | ti.sdo.ipc.interfaces.INotifyDriver |
| INotifySetup | ti.sdo.ipc.interfaces.INotifySetup |

The <ipc_install_dir>/packages/ti/sdo/ipc directory contains the following packages that you may need to know about:

examples. Contains examples.

family. Contains device-specific support modules (used internally).

gates. Contains GateMP implementations (used internally).

heaps. Contains multiprocessor heaps.

interfaces. Contains interfaces.

notifyDrivers. Contains NotifyDriver implementations (used internally).

transports. Contains MessageQ transport implementations that are used internally.

4.4.6.1.1. Including Header Files

BIOS applications that use modules in the ti.sdo.ipc or ti.sdo.utils package should include the common header files provided in <ipc_install_dir>/packages/ti/ipc/. These header files offer a common API for both SYS/BIOS and HLOS users of IPC.

The following example C code includes header files applications may need to use. Depending on the APIs used in your application code, you may need to include different XDC, IPC, and SYS/BIOS header files.

#include <xdc/std.h>

#include <string.h>

/* ---- XDC.RUNTIME module Headers */

#include <xdc/runtime/Memory.h>

#include <xdc/runtime/System.h>

#include <xdc/runtime/IHeap.h>

/* ----- IPC module Headers */

#include <ti/ipc/GateMP.h>

#include <ti/ipc/Ipc.h>

#include <ti/ipc/MessageQ.h>

#include <ti/ipc/HeapBufMP.h>

#include <ti/ipc/MultiProc.h>

/* ---- BIOS6 module Headers */

#include <ti/sysbios/BIOS.h>

#include <ti/sysbios/knl/Task.h>

/* ---- Get globals from .cfg Header */

#include <xdc/cfg/global.h>

Note that the appropriate include file location has changed from previous versions of IPC. The XDCtools-generated header files are still available in <ipc_install_dir>/packages/ti/sdo/ipc/, but these should not be included directly in runtime .c code.

You should search your applications for “ti/sdo/ipc” and “ti/sdo/utils” and change the header file references found as needed. Additional changes to API calls will be needed.

Documentation for all common-header APIs is provided in Doxygen format in your IPC installation at <ipc_install_dir>/docs/doxygen/html/index.html. The latest version of that documentation is available online.

4.4.6.1.2. Standard IPC Function Call Sequence

For instance-based modules in IPC, the standard IPC methodology when creating an object dynamically (that is, in C code) is to have the creator thread first initialize a MODULE_Params structure to its default values via a MODULE_Params_init() function. The creator thread can then set individual parameter fields in this structure as needed. After setting up the MODULE_Params structure, the creator thread calls the MODULE_create() function to create the instance and initialize any shared memory used by the instance. If the instance is to be opened remotely, a unique name must be supplied in the parameters.

Other threads can access this instance via the MODULE_open() function, which returns a handle with access to the instance. The name that was used for instance creation must be used in the MODULE_open() function.

In most cases, MODULE_open() functions must be called in the context of a Task. This is because the thread running the MODULE_open() function needs to be able to block (to pend on a Semaphore in this case) while waiting for the remote processor to respond. The response from the remote processor triggers a hardware interrupt, which then posts a Semaphore to allow the Task to resume execution. The exception to this rule is that MODULE_open() functions do not need to be able to block when opening an instance on the local processor.

When the threads have finished using an instance, all threads that called MODULE_open() must call MODULE_close(). Then, the thread that called MODULE_create() can call MODULE_delete() to free the memory used by the instance.

Note that all threads that opened an instance must close that instance before the thread that created it can delete it. Also, a thread that calls MODULE_create() cannot call MODULE_close(). Likewise, a thread that calls MODULE_open() cannot call MODULE_delete().
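The ordering rules above (create, then open, then close by every opener, then delete by the creator) can be made explicit with a small host-side model. All `demo_` names and status values below are hypothetical, and the model deliberately rejects out-of-order calls. Whether a particular IPC module detects such misuse at run time is not something this sketch claims.

```c
#include <stdbool.h>

/* Hypothetical model of one named instance; this is NOT an IPC module. */
typedef struct {
    bool created;     /* true between create and delete */
    int  open_count;  /* number of outstanding open handles */
} demo_instance;

/* Assumed status values, following the "negative means failure" convention. */
#define DEMO_S_SUCCESS        0
#define DEMO_E_FAIL         (-1)
#define DEMO_E_INVALIDSTATE (-2)

/* Called once by the creator thread. */
int demo_create(demo_instance *inst)
{
    if (inst->created) {
        return DEMO_E_FAIL;
    }
    inst->created = true;
    inst->open_count = 0;
    return DEMO_S_SUCCESS;
}

/* Called by every other thread that wants access. */
int demo_open(demo_instance *inst)
{
    if (!inst->created) {
        return DEMO_E_FAIL; /* nothing to open yet */
    }
    inst->open_count++;
    return DEMO_S_SUCCESS;
}

/* Each successful open must be matched by exactly one close. */
int demo_close(demo_instance *inst)
{
    if (!inst->created || inst->open_count == 0) {
        return DEMO_E_INVALIDSTATE;
    }
    inst->open_count--;
    return DEMO_S_SUCCESS;
}

/* Called by the creator only after all openers have closed. */
int demo_delete(demo_instance *inst)
{
    if (!inst->created) {
        return DEMO_E_FAIL;
    }
    if (inst->open_count > 0) {
        return DEMO_E_INVALIDSTATE; /* openers must close first */
    }
    inst->created = false;
    return DEMO_S_SUCCESS;
}
```

Keeping create/delete and open/close as two separate pairs mirrors the rule that a creator never calls close and an opener never calls delete.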

4.4.6.1.3. Error Handling in IPC

Many of the APIs provided by IPC return an integer as a status code. Your application can test the status value returned against any of the provided status constants. For example:

MessageQ_Msg msg;

MessageQ_Handle messageQ;

Int status;

...

status = MessageQ_get(messageQ, &msg, MessageQ_FOREVER);

if (status < 0) {

System_abort("Should not happen\n");

}

Status constants have the following format: MODULE_[S|E]_CONDITION. For example, Ipc_S_SUCCESS, MessageQ_E_FAIL, and SharedRegion_E_MEMORY are status codes that may be returned by functions in the corresponding modules.

Success codes always have values greater than or equal to zero. For example, Ipc_S_SUCCESS=0 and Ipc_S_ALREADYSETUP=1; both are success codes. Failure codes always have values less than zero. Therefore, the presence of an error can be detected by simply checking whether the return value is negative.
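The sign convention can be captured in two small predicates. The sketch below uses local `DEMO_` constants rather than the real IPC headers: the two success values match the ones quoted above, while the failure value is only an assumed negative number for illustration.

```c
#include <stdbool.h>

/* The two success values mirror the ones quoted in the text.
 * DEMO_E_FAIL is an assumed negative value used only for illustration. */
#define DEMO_S_SUCCESS       0
#define DEMO_S_ALREADYSETUP  1
#define DEMO_E_FAIL        (-1)

/* True for any failure code: failures are always negative. */
bool demo_failed(int status)
{
    return status < 0;
}

/* True for any success code, including positive informational ones. */
bool demo_succeeded(int status)
{
    return status >= 0;
}
```

Note that a test such as `status != 0` would wrongly treat a positive success code like the "already set up" case as a failure, which is why the check is written against the sign rather than against zero.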

Other APIs provided by IPC return a handle to a created object. If the handle is NULL, an error occurred when creating the object. For example:

messageQ = MessageQ_create(DSP_MESSAGEQNAME, NULL);

if (messageQ == NULL) {

System_abort("MessageQ_create failed\n");

}

Refer to the Doxygen documentation for status codes returned by IPC functions.

4.4.6.2. IPC Module

The main purpose of the Ipc module is to initialize the various subsystems of IPC. All applications that use IPC modules must call the Ipc_start() API, which does the following:

Initializes a number of objects and modules used by IPC

Synchronizes multiple processors so they can boot in any order

An application that uses IPC APIs, such as MessageQ, must include the Ipc module header file and call Ipc_start() before any calls to IPC modules. Here is a BIOS-side example:

#include <xdc/std.h>

#include <xdc/runtime/System.h>

#include <ti/ipc/Ipc.h>

#include <ti/sysbios/BIOS.h>

int main(int argc, char* argv[])

{

Int status;

/* Call Ipc_start() */

status = Ipc_start();

if (status < 0) {

System_abort("Ipc_start failed\n");

}

BIOS_start();

return (0);

}

By default, the BIOS implementation of Ipc_start() internally calls Notify_start() if it has not already been called, then loops through the defined SharedRegions so that it can set up the HeapMemMP and GateMP instances used internally by the IPC modules. It also sets up MessageQ transports to remote processors.

The SharedRegion with an index of 0 (zero) is often used by the BIOS-side Ipc_start() to create resource management tables for internal use by other IPC modules. Thus SharedRegion “0” must be accessible by all processors. See SharedRegion Module for more about the SharedRegion module.

4.4.6.2.1. Ipc Module Configuration (BIOS-side only)

In an XDCtools configuration file, you configure the Ipc module for use as follows:

var Ipc = xdc.useModule('ti.sdo.ipc.Ipc');

You can configure what the Ipc_start() API will do (which modules it will start and which objects it will create) by using the Ipc.setEntryMeta method in the configuration file to set the following properties:

setupNotify. If set to false, the Notify module is not set up. The default is true.

setupMessageQ. If set to false, the MessageQ transport instances to remote processors are not set up and the MessageQ module does not attach to remote processors. The default is true.

For example, the following statements from the notify example configuration turn off the setup of the MessageQ transports and connections to remote processors:

/* To avoid wasting shared memory for MessageQ transports */

for (var i = 0; i < MultiProc.numProcessors; i++) {

Ipc.setEntryMeta({

remoteProcId: i,

setupMessageQ: false,

});

}

You can configure how the IPC module synchronizes processors by configuring the Ipc.procSync property. For example:

Ipc.procSync = Ipc.ProcSync_ALL;

The options are:

Ipc.ProcSync_ALL. If you use this option, the Ipc_start() API automatically attaches to and synchronizes all remote processors. If you use this option, your application should never call Ipc_attach(). Use this option if all IPC processors on a device start up at the same time and connections should be established between every possible pair of processors.

Ipc.ProcSync_PAIR. (Default) If you use this option, you must explicitly call Ipc_attach() to attach to a specific remote processor. If you use this option, Ipc_start() performs system-wide IPC initialization, but does not make connections to remote processors. Use this option if any or all of the following are true:

- You need to control when synchronization with each remote processor occurs.

- Useful work can be done while trying to synchronize with a remote processor by yielding a thread after each attempt to Ipc_attach() to the processor.

- Connections to some remote processors are unnecessary and should be made selectively to save memory.

Ipc.ProcSync_NONE. If you use this option, Ipc_start() doesn’t synchronize any processors before setting up the objects needed by other modules. Use this option with caution. It is intended for use in cases where the application performs its own synchronization and you want to avoid a potential deadlock situation with the IPC synchronization.

If you use the ProcSync_NONE option, Ipc_start() works exactly as it does with ProcSync_PAIR. However, in this case, Ipc_attach() does not synchronize with the remote processor. As with other ProcSync options, Ipc_attach() still sets up access to GateMP, SharedRegion, Notify, NameServer, and MessageQ transports, so your application must still call Ipc_attach() for each remote processor that will be accessed. Note that an Ipc_attach() call for a remote processor whose ID is less than the local processor’s ID must occur after the corresponding remote processor has called Ipc_attach() to the local processor. For example, processor #2 can call Ipc_attach(1) only after processor #1 has called Ipc_attach(2).
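The attach-ordering rule for ProcSync_NONE can be expressed as a simple check. The following host-side sketch is a model only: the `demo_` names, the fixed processor limit, and the shared state table are assumptions for illustration, since on real hardware each processor has to establish this ordering through the application's own synchronization.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical upper bound on processor IDs for this model. */
#define DEMO_MAX_PROCS 8u

/* attached[a][b] is true once processor a has attached to processor b. */
typedef struct {
    bool attached[DEMO_MAX_PROCS][DEMO_MAX_PROCS];
} demo_attach_state;

/* Returns true if 'local' may attach to 'remote' now under the ordering rule. */
bool demo_may_attach(const demo_attach_state *s, uint16_t local, uint16_t remote)
{
    if (local >= DEMO_MAX_PROCS || remote >= DEMO_MAX_PROCS || local == remote) {
        return false;
    }
    if (remote < local) {
        /* A lower-numbered remote must have attached to us first. */
        return s->attached[remote][local];
    }
    return true;
}

/* Record an attach if the rule allows it. Returns 0 on success, -1 otherwise. */
int demo_attach(demo_attach_state *s, uint16_t local, uint16_t remote)
{
    if (!demo_may_attach(s, local, remote)) {
        return -1;
    }
    s->attached[local][remote] = true;
    return 0;
}
```

With this model, an attach from processor 2 to processor 1 is refused until processor 1 has attached to processor 2, which matches the example in the text.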

You can configure a function to perform custom actions in addition to the default actions performed when attaching to or detaching from a remote processor. These functions run near the end of Ipc_attach() and near the beginning of Ipc_detach(), respectively. Such functions must be non-blocking and must run to completion. The following example configures two attach functions and two detach functions. Each set of functions will be passed a different argument:

var Ipc = xdc.useModule('ti.sdo.ipc.Ipc');

var fxn = new Ipc.UserFxn;

fxn.attach = '&userAttachFxn1';

fxn.detach = '&userDetachFxn1';

Ipc.addUserFxn(fxn, 0x1);

fxn.attach = '&userAttachFxn2';

fxn.detach = '&userDetachFxn2';

Ipc.addUserFxn(fxn, 0x2);

The latest version of the IPC module configuration documentation is available here.

4.4.6.2.2. Ipc Module APIs¶

In addition to the Ipc_start() API, which all applications that use IPC modules are required to call, the Ipc module also provides the following APIs for processor synchronization:

Ipc_attach() Creates a connection to the specified remote processor.

Ipc_detach() Deletes the connection to the specified remote processor.

You must call Ipc_start() on a processor before calling Ipc_attach().

Note

Call Ipc_attach() to the processor that owns shared memory region 0 (usually the processor with an id of 0) before making a connection to any other remote processor. For example, if there are three processors configured with MultiProc, processor 1 must attach to processor 0 before it can attach to processor 2.

Use these functions unless you are using the Ipc.ProcSync_ALL configuration setting. With that option, Ipc_start() automatically attaches to and synchronizes all remote processors, and your application should never call Ipc_attach().

The Ipc.ProcSync_PAIR configuration option expects that your application will call Ipc_attach() for each remote processor with which it should be able to communicate.

Note

In an ARM-Linux/DSP-RTOS scenario, the Linux application gets its IPC configuration from LAD, which has Ipc.ProcSync_ALL configured. The DSP has Ipc.ProcSync_PAIR configured.

Processor synchronization means that one processor waits until the other processor signals that a particular module is ready for use. Within Ipc_attach(), this is done for the GateMP, SharedRegion (region 0), and Notify modules and the MessageQ transports.

You can call the Ipc_detach() API to delete internal instances created by Ipc_attach() and to free the memory used by these instances.

The latest version of the IPC module run-time API documentation is available online.

4.4.6.3. MessageQ Module¶

The MessageQ module supports the structured sending and receiving of variable-length messages. It is OS independent and works with any threading model. Each MessageQ you create has a single reader and may have multiple writers.

MessageQ is the recommended messaging API for most applications. It can be used for both homogeneous and heterogeneous multi-processor messaging, along with single-processor messaging between threads.

Note

The MessageQ module in IPC is similar in functionality to the MSGQ module in DSP/BIOS 5.x.

The following are key features of the MessageQ module:

Writers and readers can be relocated to another processor with no runtime code changes.

Timeouts are allowed when receiving messages.

Readers can determine the writer and reply back.

Receiving a message is deterministic when the timeout is zero.

Messages can reside on any message queue.

Supports zero-copy transfers (BIOS only)

Messages can be sent and received from any type of thread.

The notification mechanism is specified by the application (BIOS only)

Allows QoS (quality of service) on message buffer pools. For example, using specific buffer pools for specific message queues. (BIOS only)

Messages are sent and received via a message queue. A reader is a thread that gets (reads) messages from a message queue. A writer is a thread that puts (writes) a message to a message queue. Each message queue has one reader and can have many writers. A thread may read from or write to multiple message queues.

Reader. The single reader thread calls MessageQ_create(), MessageQ_get(), MessageQ_free(), and MessageQ_delete().

Writer. Writer threads call MessageQ_open(), MessageQ_alloc(), MessageQ_put(), and MessageQ_close().

The following figure shows the flow in which applications typically use the main runtime MessageQ APIs:

Conceptually, the reader thread creates and owns the message queue. Writer threads then open the created message queue to get access to it.

4.4.6.3.1. Configuring the MessageQ Module (BIOS only)¶

On BIOS-based systems, you can configure a number of module-wide properties for MessageQ in your XDCtools configuration file.

A snapshot of the MessageQ module configuration documentation is available online.

To configure the MessageQ module, you must enable the module as follows:

var MessageQ = xdc.useModule('ti.sdo.ipc.MessageQ');

Some example Module-wide configuration properties you can set follow; refer to the IPC documentation for details.

// Maximum length of MessageQ names

MessageQ.maxNameLen = 32;

// Max number of MessageQs that can be dynamically created

MessageQ.maxRuntimeEntries = 10;

4.4.6.3.2. Creating a MessageQ Object¶

You can create message queues dynamically. Static creation is not supported. A MessageQ object is not a shared resource. That is, it resides on the processor, within the process, that creates it.

The reader thread creates a message queue. To create a MessageQ object dynamically, use the MessageQ_create() C API, which has the following syntax:

MessageQ_Handle MessageQ_create(String name, MessageQ_Params *params);

When you create a queue, you specify a name string. This name will be needed by the MessageQ_open() function, which is called by threads on the same or remote processors that want to send messages to the created message queue. While the name is not required (that is, it can be NULL), an unnamed queue cannot be opened.

An ISync handle is associated with the message queue via the synchronizer parameter.

If the call is successful, the MessageQ_Handle is returned. If the call fails, NULL is returned.

You initialize the params struct by using the MessageQ_Params_init() function, which initializes the params structure with the default values. A NULL value for params can be passed into the create call, which results in the defaults being used. The default synchronizer is SyncSem.

The following code creates a MessageQ object using SyncSem as the synchronizer.

MessageQ_Handle messageQ;

MessageQ_Params messageQParams;

SyncSem_Handle syncSemHandle;

...

syncSemHandle = SyncSem_create(NULL, NULL);

MessageQ_Params_init(&messageQParams);

messageQParams.synchronizer = SyncSem_Handle_upCast(syncSemHandle);

messageQ = MessageQ_create(CORE0_MESSAGEQNAME, &messageQParams);

In this example, the CORE0_MESSAGEQNAME constant may be defined in a header shared by multiple cores.

A snapshot of the MessageQ module run-time API documentation is available online.

4.4.6.3.3. Opening a Message Queue¶

Writer threads open a created message queue to get access to it. To obtain a handle to a message queue that has been created, a writer thread must call MessageQ_open(), which has the following syntax:

Int MessageQ_open(String name, MessageQ_QueueId *queueId);

This function expects a name, which must match the name of the created object. Internally, MessageQ calls NameServer_get() to find the 32-bit queueId associated with the created message queue. NameServer looks both locally and remotely.

If no matching name is found on any processor, MessageQ_open() returns MessageQ_E_NOTFOUND. If the open is successful, the Queue ID is filled in and MessageQ_S_SUCCESS is returned.

The following code opens the MessageQ object that was created with the name CORE0_MESSAGEQNAME.

MessageQ_QueueId remoteQueueId;

Int status;

...

/* Open the remote message queue. Spin until it is ready. */

do {

status = MessageQ_open(CORE0_MESSAGEQNAME, &remoteQueueId);

} while (status < 0);

4.4.6.3.4. Allocating a Message¶

MessageQ manages message allocation via the MessageQ_alloc() and MessageQ_free() functions. MessageQ uses Heaps for message allocation. MessageQ_alloc() has the following syntax:

MessageQ_Msg MessageQ_alloc(UInt16 heapId,

UInt32 size);

The allocation size in MessageQ_alloc() must include the size of the message header, which is 32 bytes.

The following code allocates a message:

#define MSGSIZE 256

#define HEAPID 0

...

MessageQ_Msg msg;

...

msg = MessageQ_alloc(HEAPID, sizeof(MessageQ_MsgHeader));

if (msg == NULL) {

System_abort("MessageQ_alloc failed\n");

}

Once a message is allocated, it can be sent on any message queue. Once the reader receives the message, it may either free the message or re-use it. Messages in a message queue can be of variable length. The only requirement is that the first field in the definition of a message must be a MessageQ_MsgHeader structure. For example:

typedef struct MyMsg {

MessageQ_MsgHeader header; // Required

SomeEnumType type; // Can be any field

... // ...

} MyMsg;

The MessageQ APIs use the MessageQ_MsgHeader internally. Your application should not modify or directly access the fields in the MessageQ_MsgHeader structure.
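The reason the header must come first, and why the allocation size has to cover it, can be illustrated with a standalone sketch. ModelMsgHeader is a hypothetical opaque stand-in for MessageQ_MsgHeader (only the 32-byte size stated above is modeled), and the payload fields are illustrative assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for MessageQ_MsgHeader. The real layout is private
 * to MessageQ; only the 32-byte size given in the text is modeled here. */
typedef struct ModelMsgHeader {
    uint8_t opaque[32];
} ModelMsgHeader;

/* Application message: the header is the first field, payload follows. */
typedef struct ModelAppMsg {
    ModelMsgHeader header;   /* required, must come first */
    int32_t        command;  /* illustrative payload field */
    uint8_t        data[64]; /* illustrative payload field */
} ModelAppMsg;

/* Size to request from the allocator: header plus payload in one block. */
static size_t model_alloc_size(void)
{
    return sizeof(ModelAppMsg);
}

/* Because the header sits at offset 0, the message pointer and the header
 * pointer are the same address. Generic code that only knows about the
 * header can therefore carry any application-defined message. */
static ModelMsgHeader *model_as_header(ModelAppMsg *msg)
{
    assert(msg != NULL);
    return &msg->header;
}
```

In the same spirit, an application using the real API would typically pass the size of its whole message structure, not just the payload size, when allocating.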

4.4.6.3.4.1. MessageQ Allocation and Heaps¶

All messages sent via the MessageQ module must be allocated from a xdc.runtime.IHeap implementation, such as ti.sdo.ipc.heaps.HeapBufMP. The same heap can also be used for other memory allocation not related to MessageQ.

The MessageQ_registerHeap() API assigns a MessageQ heapId to a heap. When allocating a message, the heapId is used, not the heap handle. The heapIds should start at zero and increase. The maximum number of heaps is determined by the numHeaps module configuration property. See the online documentation for MessageQ_registerHeap() for details.

/* Register this heap with MessageQ */

status = MessageQ_registerHeap( HeapBufMP_Handle_upCast(heapHandle), HEAPID);

If the registration fails (for example, the heapId is already used), this function returns FALSE.

An application can use multiple heaps to regulate its message usage. For example, an application can allocate critical messages from a heap of fast on-chip memory and non-critical messages from a heap of slower external memory. Additionally, heaps used by MessageQ can be shared with other modules and/or the application.

MessageQ alternatively supports allocating messages without the MessageQ_alloc() function.

Heaps can be unregistered via MessageQ_unregisterHeap().

4.4.6.3.4.2. MessageQ Allocation Without a Heap¶

It is possible to send MessageQ messages that are allocated statically instead of being allocated at run-time via MessageQ_alloc(). However, the first field of the message must still be a MessageQ_MsgHeader. To make sure the header has valid settings, the application must call MessageQ_staticMsgInit(). This function initializes the header fields in the same way that MessageQ_alloc() does, except that it sets the heapId field in the header to the MessageQ_STATICMSG constant.

If an application uses messages that were not allocated using MessageQ_alloc(), it cannot free the messages via the MessageQ_free() function, even if the message is received by a different processor. Also, the transport may internally call MessageQ_free() and encounter an error.

If MessageQ_free() is called on a statically allocated message, it asserts that the heapId of the message is not MessageQ_STATICMSG.
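The static-message rule can be pictured with a standalone model. The structure, the function names, and the marker value 0xFFFF below are assumptions made for illustration; they do not reproduce the actual MessageQ header or implementation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define MODEL_STATICMSG 0xFFFFu /* assumed marker meaning "not from a heap" */

/* Hypothetical, simplified header: only the fields this sketch needs. */
typedef struct ModelHdr {
    uint16_t heapId;  /* heap the message came from, or MODEL_STATICMSG */
    uint32_t msgSize; /* total message size in bytes, header included */
} ModelHdr;

/* Models the static-init step: same as an allocation-time init, except
 * that the heapId is set to the static marker. */
static void model_static_msg_init(ModelHdr *hdr, uint32_t size)
{
    assert(hdr != NULL);
    memset(hdr, 0, sizeof(*hdr));
    hdr->heapId = MODEL_STATICMSG;
    hdr->msgSize = size;
}

/* Models the check made on free: a static message has no heap to return to. */
static bool model_can_free(const ModelHdr *hdr)
{
    assert(hdr != NULL);
    return hdr->heapId != MODEL_STATICMSG;
}
```

A receiver that may see both kinds of messages could use a check like model_can_free() to decide whether to free a message or hand it back to its owner.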

4.4.6.3.5. Sending a Message¶

Once a message queue is opened and a message is allocated, the message can be sent to the MessageQ via the MessageQ_put() function, which has the following syntax.

Int MessageQ_put(MessageQ_QueueId queueId,

MessageQ_Msg msg);

For example:

status = MessageQ_put(remoteQueueId, msg);

Opening a queue is not required. Instead the message queue ID can be “discovered” via the MessageQ_getReplyQueue() function, which returns the 32-bit queueId.

MessageQ_QueueId replyQueue;

MessageQ_Msg msg;

/* Use the embedded reply destination */

replyQueue = MessageQ_getReplyQueue(msg);

if (replyQueue == MessageQ_INVALIDMESSAGEQ) {

System_abort("Invalid reply queue\n");

}

/* Send the response back */

status = MessageQ_put(replyQueue, msg);

if (status < 0) {

System_abort("MessageQ_put was not successful\n");

}

If the destination queue is local, the message is placed on the appropriate priority linked list and the ISync signal function is called. If the destination queue is on a remote processor, the message is given to the proper transport and the call returns.

If MessageQ_put() succeeds, it returns MessageQ_S_SUCCESS. If MessageQ_E_FAIL is returned, an error occurred and the caller still owns the message.

There can be multiple senders to a single message queue. MessageQ handles the thread safety.

Before you send a message, you can use the MessageQ_setMsgId() function to assign a numeric value to the message that can be checked by the receiving thread.

/* Increment...the remote side will check this */

msgId++;

MessageQ_setMsgId(msg, msgId);

You can use the MessageQ_setMsgPri() function to set the priority of the message.

4.4.6.3.6. Receiving a Message¶

To receive a message, a reader thread calls the MessageQ_get() API.

Int MessageQ_get(MessageQ_Handle handle,

MessageQ_Msg *msg,

UInt timeout);

If a message is present, it is returned by this function. In this case the ISync’s wait() function is not called. For example:

/* Get a message */

status = MessageQ_get(messageQ, &msg, MessageQ_FOREVER);

if (status < 0) {

System_abort("Should not happen; timeout is forever\n");

}

If no message is present and no error occurs, this function blocks until a message arrives or the timeout period expires. If the timeout period expires, MessageQ_E_FAIL is returned. If an error occurs, the msg argument will be unchanged.

After receiving a message, you can use the following APIs to get information about the message from the message header:

MessageQ_getMsgId() gets the ID value set by MessageQ_setMsgId(). For example:

/* Get the id and increment it to send back */

msgId = MessageQ_getMsgId(msg);

msgId += NUMCLIENTS;

MessageQ_setMsgId(msg, msgId);

MessageQ_getMsgPri() gets the priority set by MessageQ_setMsgPri().

MessageQ_getMsgSize() gets the size of the message in bytes.

MessageQ_getReplyQueue() gets the ID of the queue provided by MessageQ_setReplyQueue().

4.4.6.3.7. Deleting a MessageQ Object¶

MessageQ_delete() frees a MessageQ object stored in local memory. If any messages are still on the internal linked lists, they will be freed. The contents of the handle are nulled out by the function to prevent use after deleting.

Void MessageQ_delete(MessageQ_Handle *handle);

The queue array entry is set to NULL to allow re-use.

Once a message queue is deleted, no messages should be sent to it. A MessageQ_close() is recommended, but not required.

4.4.6.3.8. Message Priorities¶

MessageQ supports three message priorities as follows:

MessageQ_NORMALPRI = 0

MessageQ_HIGHPRI = 1

MessageQ_URGENTPRI = 3

You can set the priority level for a message before sending it by using the MessageQ_setMsgPri() function:

Void MessageQ_setMsgPri(MessageQ_Msg msg,

MessageQ_Priority priority);

Internally, a MessageQ object maintains two linked lists: normal and high-priority. A normal priority message is placed onto the “normal” linked list in FIFO manner. A high priority message is placed onto the “high-priority” linked list in FIFO manner. An urgent message is placed at the beginning of the high-priority linked list.

Note

Since multiple urgent messages may be sent before a message is read, the order of urgent messages is not guaranteed.

When getting a message, the reader checks the high priority linked list first. If a message is present on that list, it is returned. If not, the normal priority linked list is checked. If a message is present there, it is returned. Otherwise the synchronizer’s wait function is called.
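The two-list scheme described above can be sketched as a standalone model. All type and function names here are hypothetical; the sketch ignores synchronization and thread safety and only shows how the three priorities map onto two lists.

```c
#include <assert.h>
#include <stddef.h>

/* Priority values mirror the ones listed in the text. */
typedef enum { MODEL_NORMALPRI = 0, MODEL_HIGHPRI = 1, MODEL_URGENTPRI = 3 } ModelPri;

typedef struct ModelMsg {
    struct ModelMsg *next;
    ModelPri         pri;
    int              id;
} ModelMsg;

typedef struct ModelList  { ModelMsg *head; ModelMsg *tail; } ModelList;
typedef struct ModelQueue { ModelList normal; ModelList high; } ModelQueue;

static void list_put_tail(ModelList *l, ModelMsg *m)
{
    m->next = NULL;
    if (l->tail != NULL) { l->tail->next = m; } else { l->head = m; }
    l->tail = m;
}

static void list_put_head(ModelList *l, ModelMsg *m)
{
    m->next = l->head;
    l->head = m;
    if (l->tail == NULL) { l->tail = m; }
}

static ModelMsg *list_get_head(ModelList *l)
{
    ModelMsg *m = l->head;
    if (m != NULL) {
        l->head = m->next;
        if (l->head == NULL) { l->tail = NULL; }
        m->next = NULL;
    }
    return m;
}

/* Normal and high go to the tail of their list (FIFO); urgent jumps to
 * the head of the high-priority list. */
static void model_put(ModelQueue *q, ModelMsg *m)
{
    assert(q != NULL && m != NULL);
    switch (m->pri) {
    case MODEL_URGENTPRI: list_put_head(&q->high, m);   break;
    case MODEL_HIGHPRI:   list_put_tail(&q->high, m);   break;
    default:              list_put_tail(&q->normal, m); break;
    }
}

/* The reader drains the high-priority list before the normal list. */
static ModelMsg *model_get(ModelQueue *q)
{
    ModelMsg *m = list_get_head(&q->high);
    return (m != NULL) ? m : list_get_head(&q->normal);
}
```

Because each urgent message is pushed to the head, two urgent messages queued back to back come out in reverse order, which is consistent with the note that the order of urgent messages is not guaranteed.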

Note

The MessageQ priority feature is enabled by selecting different MessageQ transports. Some MessageQ implementations (e.g., Linux) may not support multiple transports and therefore may not support this feature.

4.4.6.3.9. Thread Synchronization (BIOS only)¶

MessageQ supports reads and writes from different thread models. It works with threading models that include SYS/BIOS’s Hwi, Swi, and Task threads.

This flexibility is accomplished by using an implementation of the xdc.runtime.knl.ISync interface. The creator of the message queue must also create an object of the desired ISync implementation and assign that object as the “synchronizer” of the MessageQ. Each message queue has its own synchronizer object.

An ISync object has two main functions: signal() and wait(). Whenever MessageQ_put() is called, the signal() function of the ISync implementation is called. If MessageQ_get() is called when there are no messages on the queue, the wait() function of the ISync implementation is called. The timeout passed into the MessageQ_get() is directly passed to the ISync wait() API.

Note

Since ISync implementations must be binary, the reader thread must drain the MessageQ of all messages before waiting for another signal.

For example, if the reader is a SYS/BIOS Swi, the instance could be a SyncSwi. When a MessageQ_put() is called, the Swi_post() API would be called. The Swi would run and it must call MessageQ_get() until no messages are returned. If the Swi does not get all the messages, the Swi will not run again, or at least will not run until a new message is placed on the queue.

The calls to ISync functions occur directly in MessageQ_put() when the call occurs on the same processor where the queue was created. In the remote case, the transport calls MessageQ_put(), which is then a local put, and the signal function is called.

The following are ISync implementations provided by XDCtools and SYS/BIOS:

xdc.runtime.knl.SyncNull. The signal() and wait() functions do nothing. Basically this implementation allows for polling.

xdc.runtime.knl.SyncSemThread. An implementation built using the xdc.runtime.knl.Semaphore module, which is a binary semaphore.

xdc.runtime.knl.SyncGeneric. This implementation allows you to use custom signal() and wait() functions as needed.

ti.sysbios.syncs.SyncSem. An implementation built using the ti.sysbios.knl.Semaphore module. The signal() function runs a Semaphore_post(). The wait() function runs a Semaphore_pend().

ti.sysbios.syncs.SyncSwi. An implementation built using the ti.sysbios.knl.Swi module. The signal() function runs a Swi_post(). The wait() function does nothing and returns FALSE if the timeout elapses.

ti.sysbios.syncs.SyncEvent. An implementation built using the ti.sysbios.knl.Event module. The signal() function runs an Event_post(). The wait() function does nothing and returns FALSE if the timeout elapses. This implementation allows waiting on multiple events.

The following code from the “message” example creates a SyncSem instance and assigns it to the synchronizer field in the MessageQ_Params structure before creating the MessageQ instance:

#include <ti/sysbios/syncs/SyncSem.h>

...

MessageQ_Params messageQParams;

SyncSem_Handle syncSemHandle;

/* Create a message queue using SyncSem as synchronizer */

syncSemHandle = SyncSem_create(NULL, NULL);

MessageQ_Params_init(&messageQParams);

messageQParams.synchronizer = SyncSem_Handle_upCast(syncSemHandle);

messageQ = MessageQ_create(CORE1_MESSAGEQNAME, &messageQParams);

4.4.6.3.10. ReplyQueue¶

For some applications, doing a MessageQ_open() on a queue is not realistic. For example, a server may not want to open all the clients’ queues for sending responses. To support this use case, the message sender can embed a reply queueId in the message using the MessageQ_setReplyQueue() function.

Void MessageQ_setReplyQueue(MessageQ_Handle handle,

MessageQ_Msg msg);

This API stores the message queue’s queueId into fields in the MessageQ_MsgHeader.

The MessageQ_getReplyQueue() function does the reverse. For example:

MessageQ_QueueId replyQueue;

MessageQ_Msg msg;

...

/* Use the embedded reply destination */

replyQueue = MessageQ_getReplyQueue(msg);

if (replyQueue == MessageQ_INVALIDMESSAGEQ) {

System_abort("Invalid reply queue\n");

}

The MessageQ_QueueId value returned by this function can then be used in a MessageQ_put() call.

The queue that is embedded in the message does not have to be the sender’s queue.

4.4.6.3.11. Remote Communication via Transports (BIOS only)¶

MessageQ is designed to support multiple processors. To allow this, different transports can be plugged into MessageQ.

In a multi-processor system, MessageQ communicates with other processors via ti.sdo.ipc.interfaces.IMessageQTransport instances. There can be up to two IMessageQTransport instances for each processor to which communication is desired. One can be a normal-priority transport and the other can handle high-priority messages. This is done via the priority parameter in the transport create() function. If only one transport is registered to a remote processor (either normal or high), all messages go via that transport.

There can be different transports on a processor. For example, there may be a shared memory transport to processor A and an sRIO one to processor B.

When your application calls Ipc_start(), the default transport instance used by MessageQ is created automatically. Internally, transport instances are responsible for registering themselves with MessageQ via the MessageQ_registerTransport() function.

IPC provides an implementation of the IMessageQTransport interface called ti.sdo.ipc.transports.TransportShm (shared memory). You can write other implementations to meet your needs.

When a transport is created via a transport-specific create() call, a remote processor ID (defined via the MultiProc module) is specified. This ID denotes which processor this instance communicates with. Additionally, there are configuration properties for the transport (such as the message priority handled) that can be defined in a Params structure. The transport takes these pieces of information and registers itself with MessageQ. MessageQ then knows which transport to call when sending a message to a remote processor.

Trying to send to a processor that has no transport results in an error.
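The transport lookup described in this section can be sketched as a standalone model: a table with up to two slots per remote processor, a fallback to the only registered transport, and a failure when none is registered. Every name below is hypothetical, and the table size is an arbitrary assumption.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_MAX_PROCS 4 /* assumed number of processors in the system */
enum { MODEL_SLOT_NORMAL = 0, MODEL_SLOT_HIGH = 1, MODEL_NUM_SLOTS = 2 };

typedef struct ModelTransport { const char *name; } ModelTransport;

/* One normal and one high-priority slot per remote processor. */
static ModelTransport *model_table[MODEL_MAX_PROCS][MODEL_NUM_SLOTS];

/* Models a transport registering itself. Returns 0 on success, -1 if the
 * arguments are out of range or the slot is already taken. */
static int model_register_transport(ModelTransport *t, unsigned procId, int slot)
{
    assert(t != NULL);
    if (procId >= MODEL_MAX_PROCS || slot < 0 || slot >= MODEL_NUM_SLOTS) {
        return -1;
    }
    if (model_table[procId][slot] != NULL) {
        return -1;
    }
    model_table[procId][slot] = t;
    return 0;
}

/* Picks the transport for a message. If only one transport is registered
 * for the processor, it carries all priorities. A NULL result models the
 * "no transport" error case. */
static ModelTransport *model_select_transport(unsigned procId, int highPriority)
{
    int want;
    int other;

    if (procId >= MODEL_MAX_PROCS) {
        return NULL;
    }
    want  = highPriority ? MODEL_SLOT_HIGH : MODEL_SLOT_NORMAL;
    other = highPriority ? MODEL_SLOT_NORMAL : MODEL_SLOT_HIGH;
    if (model_table[procId][want] != NULL) {
        return model_table[procId][want];
    }
    return model_table[procId][other];
}
```

A send path built on this model would treat a NULL result from model_select_transport() as a failed send and leave ownership of the message with the caller.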

4.4.6.3.11.1. Custom Transport Implementations¶

Transports can register and unregister themselves dynamically. That is, if the transport instance is deleted, it should unregister with MessageQ.

When receiving a message, transports need to form the MessageQ_QueueId that allows them to call MessageQ_put(). This is accomplished via the MessageQ_getDstQueue() API.

MessageQ_QueueId MessageQ_getDstQueue(MessageQ_Msg msg);

4.4.6.3.12. Sample Runtime Program Flow (Dynamic)¶

The following figure shows the typical sequence of events when using a MessageQ. A message queue is created by a Task. An open on the same processor then occurs. Assume there is one message in the system. The opener allocates the message and sends it to the created message queue, which gets and frees it.

4.4.6.4. ListMP Module¶

The ti.sdo.ipc.ListMP module is a linked-list based module designed to be used in a multi-processor environment. It is designed to provide a means of communication between different processors. ListMP uses shared memory to provide a way for multiple processors to share, pass, or store data buffers, messages, or state information. ListMP is a low-level module used by several other IPC modules, including MessageQ, HeapBufMP, and transports, as a building block for their instance and state structures.

A common challenge that occurs in a multi-processor environment is preventing concurrent data access in shared memory between different processors. ListMP uses a multi-processor gate to prevent multiple processors from simultaneously accessing the same linked-list. All ListMP operations are atomic across processors.

You create a ListMP instance dynamically as follows:

1. Initialize a ListMP_Params structure by calling ListMP_Params_init().

2. Specify the name, regionId, and other parameters in the ListMP_Params structure.

3. Call ListMP_create().

ListMP uses a ti.sdo.utils.NameServer instance to store the instance information. The ListMP name supplied must be unique for all ListMP instances in the system.

ListMP_Params params;

GateMP_Handle gateHandle;

ListMP_Handle handle1;

/* If gateHandle is NULL, the default remote gate will be

automatically chosen by ListMP */

gateHandle = GateMP_getDefaultRemote();

ListMP_Params_init(&params);

params.gate = gateHandle;

params.name = "myListMP";

params.regionId = 1;

handle1 = ListMP_create(&params, NULL);

Once created, another processor or thread can open the ListMP instance by calling ListMP_open().

while (ListMP_open("myListMP", &handle1, NULL) < 0) {

;

}

ListMP uses SharedRegion pointers (see SharedRegion Module), which are portable across processors, to translate addresses for shared memory. The processor that creates the ListMP instance must specify the shared memory in terms of its local address space. This shared memory must have been defined in the SharedRegion module by the application. The ListMP module has the following constraints:

ListMP elements to be added/removed from the linked-list must be stored in a shared memory region.

The linked list must be on a worst-case cache line boundary for all the processors sharing the list.

ListMP_open() should be called only when global interrupts are enabled.

A list item must have a field of type ListMP_Elem as its first field. For example, the following structure could be used for list elements:

typedef struct Tester {

ListMP_Elem elem;

Int scratch[30];

Int flag;

} Tester;
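The first-field requirement is what makes the casts between a list element and the enclosing structure valid. The following standalone sketch models this with an ordinary in-memory list; the names are hypothetical, and shared memory, gates, and cache handling are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for ListMP_Elem: just the two links. */
typedef struct ModelElem {
    struct ModelElem *next;
    struct ModelElem *prev;
} ModelElem;

/* Circular list with a sentinel head node. */
typedef struct ModelList { ModelElem head; } ModelList;

/* List item: the element is the first field, so a ModelElem pointer and a
 * ModelTester pointer to the same item hold the same address. */
typedef struct ModelTester {
    ModelElem elem;
    int       flag;
} ModelTester;

static void model_list_init(ModelList *l)
{
    assert(l != NULL);
    l->head.next = &l->head;
    l->head.prev = &l->head;
}

static void model_put_tail(ModelList *l, ModelElem *e)
{
    assert(l != NULL && e != NULL);
    e->next = &l->head;
    e->prev = l->head.prev;
    l->head.prev->next = e;
    l->head.prev = e;
}

/* Pass NULL to start at the front; returns NULL past the last element. */
static ModelElem *model_next(ModelList *l, ModelElem *e)
{
    ModelElem *n = (e == NULL) ? l->head.next : e->next;
    return (n == &l->head) ? NULL : n;
}

/* Walks the list and casts each element back to its enclosing item. */
static int model_sum_flags(ModelList *l)
{
    int sum = 0;
    ModelElem *e = NULL;

    while ((e = model_next(l, e)) != NULL) {
        sum += ((ModelTester *)e)->flag;
    }
    return sum;
}
```

The traversal in model_sum_flags() follows the same pattern as a loop that starts from NULL and calls a next function until it returns NULL.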

Besides creating, opening, and deleting a list instance, the ListMP module provides functions for the following common list operations:

ListMP_empty(). Test for an empty ListMP.

ListMP_getHead(). Get the element from the front of the ListMP.

ListMP_getTail(). Get the element from the end of the ListMP.

ListMP_insert(). Insert element into a ListMP at the current location.

ListMP_next(). Return the next element in the ListMP (non-atomic).

ListMP_prev(). Return previous element in the ListMP (non-atomic).

ListMP_putHead(). Put an element at the head of the ListMP.

ListMP_putTail(). Put an element at the end of the ListMP.

ListMP_remove(). Remove the current element from the middle of the ListMP.

This example prints a “flag” field from the list elements in a ListMP instance in order:

System_printf("On the List: ");

testElem = NULL;

while ((testElem = ListMP_next(handle, (ListMP_Elem *)testElem)) != NULL) {

System_printf("%d ", testElem->flag);

}

This example prints the same items in reverse order:

System_printf("in reverse: ");

elem = NULL;

while ((elem = ListMP_prev(handle, elem)) != NULL) {

System_printf("%d ", ((Tester *)elem)->flag);

}

This example determines if a ListMP instance is empty:

if (ListMP_empty(handle1) == TRUE) {

System_printf("Yes, handle1 is empty\n");

}

This example places a sequence of even numbers in a ListMP instance:

/* Add 0, 2, 4, 6, 8 */

for (i = 0; i < COUNT; i = i + 2) {

ListMP_putTail(handle1, (ListMP_Elem *)&(buf[i]));

}

The instance state information contains a pointer to the head of the linked-list, which is stored in shared memory. Other attributes of the instance stored in shared memory include the version, status, and the size of the shared address. Other processors can obtain a handle to the linked list by calling ListMP_open().

The following figure shows local memory and shared memory for processors Proc 0 and Proc 1, in which Proc 0 calls ListMP_create() and Proc 1 calls ListMP_open().

The cache alignment used by the list is taken from the SharedRegion on a per-region basis. The alignment must be the same across all processors and should be the worst-case cache line boundary.

4.4.6.5. HeapMP Module¶

The ti.sdo.ipc.heaps package provides three implementations of the xdc.runtime.IHeap interface.

HeapBufMP. Fixed-size memory manager. All buffers allocated from a HeapBufMP instance are of the same size. There can be multiple instances of HeapBufMP that manage different sizes. The ti.sdo.ipc.heaps.HeapBufMP module is modeled after SYS/BIOS 6’s HeapBuf module (ti.sysbios.heaps.HeapBuf).

HeapMultiBufMP. Each instance supports up to 8 different fixed sizes of buffers. When an allocation request is made, the HeapMultiBufMP instance searches the different buckets to find the smallest one that satisfies the request. If that bucket is empty, the allocation fails. The ti.sdo.ipc.heaps.HeapMultiBufMP module is modeled after SYS/BIOS 6’s HeapMultiBuf module (ti.sysbios.heaps.HeapMultiBuf).

HeapMemMP. Variable-size memory manager. HeapMemMP manages a single buffer in shared memory from which blocks of user-specified length are allocated and freed. The ti.sdo.ipc.heaps.HeapMemMP module is modeled after SYS/BIOS 6’s HeapMem module (ti.sysbios.heaps.HeapMem).

The main addition to these modules is the use of shared memory and the management of multi-processor exclusion.
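The HeapMultiBufMP allocation rule described above (pick the smallest bucket that fits, and fail if that bucket is empty) can be sketched as a standalone model. The names are hypothetical, the buckets are assumed to be sorted by ascending block size, and only free-block counts are tracked rather than real memory.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_MAX_BUCKETS 8 /* the text states up to 8 sizes per instance */

typedef struct ModelBucket {
    size_t   blockSize;  /* fixed size of every block in this bucket */
    unsigned freeBlocks; /* blocks still available */
} ModelBucket;

/*
 * Returns the index of the bucket that served the request, or -1 on failure.
 * Assumes buckets[] is sorted by ascending blockSize.
 */
static int model_multibuf_alloc(ModelBucket *buckets, size_t numBuckets,
                                size_t size)
{
    size_t i;

    assert(buckets != NULL && numBuckets <= MODEL_MAX_BUCKETS);

    for (i = 0; i < numBuckets; i++) {
        if (buckets[i].blockSize >= size) {
            /* Smallest bucket that fits: if it is empty the request fails,
             * with no fallback to a larger bucket. */
            if (buckets[i].freeBlocks == 0) {
                return -1;
            }
            buckets[i].freeBlocks--;
            return (int)i;
        }
    }
    return -1; /* request is larger than every bucket's block size */
}
```

One consequence visible in the model is that a small request can fail while larger buckets still have free blocks, so bucket counts need to be sized for the expected mix of request sizes.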

The SharedRegion module, and therefore the MessageQ module and other IPC modules that use SharedRegion, use a HeapMemMP instance internally.

The following subsections use “Heap*MP” to refer to the HeapBufMP, HeapMultiBufMP, and HeapMemMP modules.

Note

These Heap*MP modules are only available on SYS/BIOS-based cores; they are not available when running an HLOS (e.g., Linux). As an HLOS shared memory solution, many Linux SDKs recommend or provide CMEM. There are limitations (e.g., you cannot alloc on HLOS and free on RTOS), but these are in line with using the HLOS as a master (owning all resources) and RTOS as a slave (using resources provided by the master).

4.4.6.5.1. Configuring a Heap*MP Module¶

In addition to configuring Heap*MP instances, you can set module-wide configuration properties. For example, the maxNameLen property lets you set the maximum length of heap names. The track[Max]Allocs module configuration property enables/disables tracking memory allocation statistics.

A Heap*MP instance uses a NameServer instance to manage name/value pairs.

The Heap*MP modules make the following assumptions:

The SharedRegion module handles address translation between a virtual shared address space and the local processor’s address space. If the memory address spaces are identical across all processors, or if a single processor is being used, no address translation is required and the SharedRegion module must be appropriately configured.

Both processors must have the same endianness.

4.4.6.5.2. Creating a Heap*MP Instance¶

Heaps can be created dynamically. You use the Heap*MP_create() functions to dynamically create Heap*MP instances. As with other IPC modules, before creating a Heap*MP instance, you initialize a Heap*MP_Params structure and set fields in the structure to the desired values. When you create a heap, the shared memory is initialized and the Heap*MP object is created in local memory. Only the actual buffers and some shared information reside in shared memory.

The following code example initializes a HeapBufMP_Params structure and sets fields in it. It then creates and registers an instance of the HeapBufMP module.

/* Create the heap that will be used to allocate messages. */

HeapBufMP_Params_init(&heapBufMPParams);

heapBufMPParams.regionId = 0; /* use default region */

heapBufMPParams.name = "myHeap";

heapBufMPParams.align = 256;

heapBufMPParams.numBlocks = 40;

heapBufMPParams.blockSize = 1024;

heapBufMPParams.gate = NULL; /* use system gate */

heapHandle = HeapBufMP_create(&heapBufMPParams);

if (heapHandle == NULL) {

System_abort("HeapBufMP_create failed\n");

}

/* Register this heap with MessageQ */

MessageQ_registerHeap(HeapBufMP_Handle_upCast(heapHandle), HEAPID);

The parameters for the various Heap*MP implementations vary. For example, when you create a HeapBufMP instance, you can configure the following parameters after initializing the HeapBufMP_Params structure:

regionId. The index corresponding to the shared region from which shared memory will be allocated.

name. A name of the heap instance for NameServer (optional).

align. Requested alignment for each block.

numBlocks. Number of fixed size blocks.

blockSize. Size of the blocks in this instance.

gate. A multiprocessor gate for context protection.

exact. Only allocate a block if the requested size is an exact match. Default is false.

Of these parameters, the ones that are common to all three Heap*MP implementations are gate, name and regionId.
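To get a feel for how align, numBlocks, and blockSize interact, the following sketch estimates the size of the block buffer alone. It assumes that each block is padded up to a multiple of the requested alignment, and it ignores any bookkeeping data the module keeps in shared memory. The round_up() and buffer_bytes() helpers are hypothetical and are not part of the HeapBufMP API.

```c
#include <assert.h>
#include <stddef.h>

/* Round size up to the next multiple of align (align must be nonzero). */
static size_t round_up(size_t size, size_t align)
{
    assert(align != 0);
    return ((size + align - 1) / align) * align;
}

/* Estimated bytes for the block buffer only: numBlocks padded blocks.
 * Assumption: every block is padded to a multiple of align. */
static size_t buffer_bytes(size_t numBlocks, size_t blockSize, size_t align)
{
    return numBlocks * round_up(blockSize, align);
}
```

With the values from the earlier example (40 blocks of 1024 bytes aligned to 256), this estimate gives 40960 bytes for the buffer, since 1024 is already a multiple of 256.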

4.4.6.5.3. Opening a Heap*MP Instance¶

Once a Heap*MP instance is created on a processor, a remote processor can obtain a local handle to the same shared instance by opening it with Heap*MP_open().

The Heap*MP modules use a NameServer instance to allow a remote processor to address the local Heap*MP instance using a user-configurable string value as an identifier. The Heap*MP name is the sole parameter needed to identify an instance.

The heap must be created before it can be opened. An open call matches the call’s version number with the creator’s version number in order to ensure compatibility. For example:

HeapBufMP_Handle heapHandle;
…

/* Open heap created by other processor. Loop until open. */
do {
    status = HeapBufMP_open("myHeap", &heapHandle);
} while (status < 0);

/* Register this heap with MessageQ */
MessageQ_registerHeap(HeapBufMP_Handle_upCast(heapHandle), HEAPID);
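The open loop above retries indefinitely, which hangs the opener if the creator never starts. A bounded variant is sketched below. It is a generic pattern and not an IPC API: OpenFn, open_with_retry(), and fake_open() are hypothetical names, and the open call is abstracted behind a function pointer so that the sketch stays self-contained.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for an open call: returns < 0 until the
 * instance exists, then 0. Not part of the IPC API. */
typedef int (*OpenFn)(void *ctx);

/* Call openFn up to maxAttempts times. Returns the last status and,
 * if attemptsUsed is not NULL, reports how many calls were made. */
static int open_with_retry(OpenFn openFn, void *ctx, int maxAttempts,
                           int *attemptsUsed)
{
    int status = -1;
    int attempts = 0;

    while (attempts < maxAttempts) {
        attempts++;
        status = openFn(ctx);
        if (status >= 0) {
            break;
        }
        /* A real application would usually sleep or yield here. */
    }
    if (attemptsUsed != NULL) {
        *attemptsUsed = attempts;
    }
    return status;
}

/* Illustrative open function: fails until *remaining reaches zero. */
static int fake_open(void *ctx)
{
    int *remaining = (int *)ctx;

    if (*remaining > 0) {
        (*remaining)--;
        return -1;
    }
    return 0;
}
```

A caller can treat a negative return value from open_with_retry() as a timeout and report the failure instead of spinning forever.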

4.4.6.5.4. Closing a Heap*MP Instance¶

Heap*MP_close() frees an opened Heap*MP instance stored in local memory. Heap*MP_close() may only be used to finalize instances that were opened with Heap*MP_open() by this thread. For example:

HeapBufMP_close(&heapHandle);

Never call Heap*MP_close() if some other thread has already called Heap*MP_delete().

4.4.6.5.5. Deleting a Heap*MP Instance¶

The Heap*MP creator thread can use Heap*MP_delete() to free a Heap*MP object stored in local memory and to flag the shared memory to indicate that the heap is no longer initialized. Heap*MP_delete() may not be used to finalize a heap through a handle acquired using Heap*MP_open(); such threads should call Heap*MP_close() instead.
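The create/open/close/delete rules can be summarized as a small state model. The sketch below is illustrative only: SharedHeapState, LocalHandle, and the model_*() functions are hypothetical and simply encode the rules stated in this section (openers close, the creator deletes, and nothing is closed after the creator has deleted).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical flag kept in shared memory. */
typedef struct {
    bool initialized;
} SharedHeapState;

typedef enum { ORIGIN_CREATED, ORIGIN_OPENED } HandleOrigin;

/* Hypothetical per-thread local object. */
typedef struct {
    SharedHeapState *shared;
    HandleOrigin     origin;
    bool             valid;
} LocalHandle;

static int model_create(SharedHeapState *sh, LocalHandle *h)
{
    if (sh->initialized) return -1;      /* already created */
    sh->initialized = true;
    h->shared = sh;
    h->origin = ORIGIN_CREATED;
    h->valid = true;
    return 0;
}

static int model_open(SharedHeapState *sh, LocalHandle *h)
{
    if (!sh->initialized) return -1;     /* must be created first */
    h->shared = sh;
    h->origin = ORIGIN_OPENED;
    h->valid = true;
    return 0;
}

static int model_close(LocalHandle *h)
{
    if (!h->valid || h->origin != ORIGIN_OPENED) return -1;
    if (!h->shared->initialized) return -1;  /* creator already deleted */
    h->valid = false;
    return 0;
}

static int model_delete(LocalHandle *h)
{
    if (!h->valid || h->origin != ORIGIN_CREATED) return -1;
    h->shared->initialized = false;      /* flag shared memory */
    h->valid = false;
    return 0;
}
```

The real modules do not necessarily return errors in these situations. The model only makes the required ordering explicit: every opener closes its handle before the creator deletes the instance.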

4.4.6.5.6. Allocating Memory from the Heap¶

The HeapBufMP_alloc() function obtains the first buffer off the heap’s freeList.
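The free-list idea behind a fixed-size block heap can be sketched in a few lines. This is a single-processor model with hypothetical names (BlockPool, pool_init(), pool_alloc(), pool_free()). It assumes a last-in, first-out free list and ignores gates, shared memory, and cache management, so it should not be read as the HeapBufMP implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_BLOCKS 4
#define BLOCK_SIZE 32

/* A free block stores the link to the next free block in its own memory. */
typedef struct FreeBlock {
    struct FreeBlock *next;
} FreeBlock;

/* The union keeps every block pointer-aligned and BLOCK_SIZE bytes long. */
typedef union {
    FreeBlock link;
    uint8_t   bytes[BLOCK_SIZE];
} Block;

typedef struct {
    Block      blocks[NUM_BLOCKS];
    FreeBlock *freeList;
    unsigned   numFree;
} BlockPool;

static void pool_init(BlockPool *pool)
{
    int i;

    pool->freeList = NULL;
    pool->numFree = 0;
    /* Push in reverse order so that block 0 ends up at the head. */
    for (i = NUM_BLOCKS - 1; i >= 0; i--) {
        pool->blocks[i].link.next = pool->freeList;
        pool->freeList = &pool->blocks[i].link;
        pool->numFree++;
    }
}

/* Take the first block off the free list, or return NULL on failure. */
static void *pool_alloc(BlockPool *pool, size_t size)
{
    FreeBlock *blk = pool->freeList;

    if (size > BLOCK_SIZE || blk == NULL) {
        return NULL;
    }
    pool->freeList = blk->next;
    pool->numFree--;
    return blk;
}

/* Return a block to the head of the free list. */
static void pool_free(BlockPool *pool, void *ptr)
{
    FreeBlock *blk = (FreeBlock *)ptr;

    blk->next = pool->freeList;
    pool->freeList = blk;
    pool->numFree++;
}
```

Because every block has the same size, both allocation and deallocation take constant time, which is the main reason to prefer a fixed-size block heap for message buffers.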

The HeapMultiBufMP_alloc() function searches through the buckets to find the smallest size that honors the requested size. It obtains the first block on that bucket.

If the “exact” field in the Heap*BufMP_Params structure was true when the heap was created, the alloc only returns the block if the blockSize for a bucket is the exact size requested. If no exact size is found, an allocation error is returned.
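The bucket search and the effect of the "exact" setting can be sketched as follows. The pick_bucket() helper is hypothetical. It assumes the bucket block sizes are sorted in ascending order and does not model what happens when the chosen bucket has no free blocks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Return the index of the bucket that should serve a request, or -1.
 * Assumption: blockSizes[] is sorted in ascending order.
 * exact == false: smallest block size that can hold the request.
 * exact == true:  only a block size equal to the request qualifies. */
static int pick_bucket(const size_t *blockSizes, size_t numBuckets,
                       size_t request, bool exact)
{
    size_t i;

    for (i = 0; i < numBuckets; i++) {
        if (exact) {
            if (blockSizes[i] == request) {
                return (int)i;
            }
        } else if (blockSizes[i] >= request) {
            return (int)i;
        }
    }
    return -1;  /* no suitable bucket: allocation error */
}
```

For buckets of 64, 256, and 1024 bytes, a 100-byte request selects the 256-byte bucket in the default mode and fails when an exact match is required.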

The HeapMemMP_alloc() function allocates a block of memory of the requested size from the heap.

For all of these allocation functions, the cache coherency of the message is managed by the SharedRegion module that manages the shared memory region used for the heap.

4.4.6.5.7. Freeing Memory to the Heap¶

The HeapBufMP_free() function returns an allocated buffer to its heap.

The HeapMultiBufMP_free() function searches through the buckets to determine on which bucket the block should be returned. This is determined by the same algorithm as the HeapMultiBufMP_alloc() function, namely the smallest blockSize that the block can fit into.

If the “exact” field in the Heap*BufMP_Params structure was true when the heap was created, and the size of the block to free does not match any bucket’s blockSize, an assert is raised.

The HeapMemMP_free() function returns the allocated block of memory to its heap.

For all of these deallocation functions, cache coherency is managed by the corresponding Heap*MP module.

4.4.6.5.8. Querying Heap Statistics¶

The Heap*MP modules support use of the xdc.runtime.Memory module’s Memory_getStats() and Memory_query() functions on the heap.

In addition, the Heap*MP modules provide the Heap*MP_getStats(), Heap*MP_getExtendedStats(), and Heap*MP_isBlocking() functions to enable you to gather information about a heap.

By default, allocation tracking is often disabled in shared-heap modules for performance reasons. You can set the HeapBufMP.trackAllocs and HeapMultiBufMP.trackMaxAllocs configuration properties to true in order to turn on allocation tracking for their respective modules. Refer to the CDOC documentation for further information.
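The kind of bookkeeping that allocation tracking adds can be sketched as a pair of counters. The AllocStats type and the stats_on_alloc() and stats_on_free() helpers are hypothetical and do not reflect the layout of any Heap*MP statistics structure. The sketch only shows why tracking costs extra work on every allocation.

```c
#include <assert.h>

/* Hypothetical tracking counters for a fixed-size block heap. */
typedef struct {
    unsigned totalBlocks;
    unsigned allocated;     /* blocks currently allocated */
    unsigned maxAllocated;  /* high-water mark since creation */
} AllocStats;

/* Record one allocation. Returns -1 if the heap is already exhausted. */
static int stats_on_alloc(AllocStats *s)
{
    if (s->allocated == s->totalBlocks) {
        return -1;
    }
    s->allocated++;
    if (s->allocated > s->maxAllocated) {
        s->maxAllocated = s->allocated;
    }
    return 0;
}

/* Record one free. The high-water mark is left unchanged. */
static void stats_on_free(AllocStats *s)
{
    assert(s->allocated > 0);
    s->allocated--;
}
```

A high-water mark close to the total number of blocks suggests that the heap is undersized for the workload.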

Sample Runtime Program Flow

The following diagram shows the program flow for a two-processor (or two-thread) application. This application creates a Heap*MP instance dynamically.

4.4.6.6. GateMP Module¶

A GateMP instance can be used to enforce both local and remote context protection. That is, entering a GateMP can prevent preemption by another thread/process running on the same processor and simultaneously prevent a remote processor from entering the same gate. GateMP instances are typically used to protect reads/writes to a shared resource, such as shared memory.

Note

Initial IPC 3.x releases only supported GateMP on BIOS. It was introduced on QNX in IPC 3.10, and on Linux/Android in 3.21.

4.4.6.6.1. Creating a GateMP Instance¶

As with other IPC modules, GateMP instances can only be created dynamically.

Before creating the GateMP instance, you initialize a GateMP_Params structure and set fields in the structure to the desired values. You then use the GateMP_create() function to dynamically create a GateMP instance.

When you create a gate, shared memory is initialized, but the GateMP object is created in local memory. Only the gate information resides in shared memory.

The following code creates a GateMP object:

GateMP_Params gparams;
GateMP_Handle gateHandle;

GateMP_Params_init(&gparams);
gparams.localProtect = GateMP_LocalProtect_THREAD;
gparams.remoteProtect = GateMP_RemoteProtect_SYSTEM;
gparams.name = "myGate";
gparams.regionId = 1;
gateHandle = GateMP_create(&gparams, NULL);

A gate can be configured to implement remote processor protection in various ways. This is done via the params.remoteProtect configuration property. The options for params.remoteProtect are as follows:

GateMP_RemoteProtect_NONE. Creates only the local gate specified by the localProtect property.

GateMP_RemoteProtect_SYSTEM. Uses the default device-specific gate protection mechanism for your device. Internally, GateMP automatically uses device-specific implementations of multi-processor mutexes implemented via a variety of hardware mechanisms. Devices typically support a single type of system gate, so this is usually the correct configuration setting for params.remoteProtect.

GateMP_RemoteProtect_CUSTOM1 and GateMP_RemoteProtect_CUSTOM2 (BIOS-only). Some devices support multiple types of system gates. If you know that GateMP has multiple implementations of gates for your device, you can use one of these options.

Several gate implementations used internally for remote protection are provided in the ti.sdo.ipc.gates package.

A gate can be configured to implement local protection at various levels. This is done via the params.localProtect configuration property (BIOS-only). The options for params.localProtect are as follows:

GateMP_LocalProtect_NONE. Uses the XDCtools GateNull implementation, which does not offer any local context protection. For example, you might use this option for a single-threaded local application that still needs remote protection.

GateMP_LocalProtect_INTERRUPT. Uses the SYS/BIOS GateHwi implementation, which disables hardware interrupts.

GateMP_LocalProtect_TASKLET. Uses the SYS/BIOS GateSwi implementation, which disables software interrupts.

GateMP_LocalProtect_THREAD. Uses the SYS/BIOS GateMutexPri implementation, which is based on Semaphores. This option may use a different gate than the following option on some operating systems. When using SYS/BIOS, they are equivalent.

GateMP_LocalProtect_PROCESS. Uses the SYS/BIOS GateMutexPri implementation, which is based on Semaphores.

The params.localProtect property is currently ignored (as of IPC 3.23) on non-BIOS operating systems. A thread-level mutex is used for local protection regardless of this property's setting.

Other fields you are required to set in the GateMP_Params structure are:

name. The name of the GateMP instance.

regionId. The ID of the SharedRegion to use for shared memory used by this GateMP instance (BIOS-only).

The latest version of the GateMP module run-time API documentation is available online.

4.4.6.6.2. Opening a GateMP Instance¶

Once an instance is created on a processor, the gate can be opened on another processor to obtain a local handle to the same instance.

The GateMP module uses a NameServer instance to allow a remote processor to address the local GateMP instance using a user-configurable string value as an identifier rather than a potentially dynamic address value.

status = GateMP_open("myGate", &gateHandle);
if (status < 0) {
    System_printf("GateMP_open failed\n");
}

4.4.6.6.3. Closing a GateMP Instance¶

GateMP_close() frees a GateMP object stored in local memory.

GateMP_close() should never be called on an instance whose creator has been deleted.

4.4.6.6.4. Deleting a GateMP Instance¶

GateMP_delete() frees a GateMP object stored in local memory and flags the shared memory to indicate that the gate is no longer initialized.

A thread may not use GateMP_delete() if it acquired the handle to the gate using GateMP_open(). Such threads should call GateMP_close() instead.

4.4.6.6.5. Entering a GateMP Instance¶

Either the GateMP creator or an opener may call GateMP_enter() to enter a gate. An opener must wait until the gate has been created before it can open and enter it, but the creator does not need to wait for the gate to be opened before entering it.

GateMP_enter() enters the caller’s local gate. The local gate (if supplied) blocks if it has already been entered on the local processor. If the gate has been entered by a remote processor, GateMP_enter() spins until the remote processor has left the gate.

No matter what the params.localProtect configuration property is set to, after GateMP_enter() returns, the caller has exclusive access to the data protected by this gate.

A thread may reenter a gate without blocking or failing.

GateMP_enter() returns a “key” that is used by GateMP_leave() to leave this gate; this value is used to restore thread preemption to the state that existed just prior to entering this gate.

IArg key;

/* Enter the gate */
key = GateMP_enter(gateHandle);

4.4.6.6.6. Leaving a GateMP Instance¶

GateMP_leave() may only be called by a thread that has previously entered this gate via GateMP_enter().

After this method returns, the caller must not access the data structure protected by this gate (unless the caller has entered the gate more than once and other calls to leave remain to balance the number of previous calls to enter).

IArg key;

/* Leave the gate */
GateMP_leave(gateHandle, key);
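The requirement that nested calls to enter and leave must balance can be illustrated with a single-threaded model of a reentrant gate. The ModelGate type and the gate_try_enter() and gate_leave() functions are hypothetical. In this sketch the key records the nesting depth before the enter, which is an assumption made for illustration. A real gate would block or spin instead of returning false, and its key carries whatever state the underlying gate implementation needs.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of a reentrant gate. */
typedef struct {
    bool     held;
    int      owner;  /* illustrative thread id of the current holder */
    unsigned depth;  /* number of unbalanced enters by the owner */
} ModelGate;

/* Try to enter the gate. On success, *key receives the nesting depth
 * that existed before this enter. A real gate would wait instead of
 * returning false. */
static bool gate_try_enter(ModelGate *g, int caller, unsigned *key)
{
    if (g->held && g->owner != caller) {
        return false;
    }
    *key = g->depth;
    g->held = true;
    g->owner = caller;
    g->depth++;
    return true;
}

/* Leave the gate, restoring the depth recorded in key. */
static void gate_leave(ModelGate *g, int caller, unsigned key)
{
    assert(g->held && g->owner == caller);
    assert(g->depth == key + 1);  /* leaves must mirror enters */
    g->depth = key;
    if (g->depth == 0) {
        g->held = false;
    }
}
```

In this model the gate is released only when the outermost enter is matched by its leave, which mirrors the rule that the protected data may still be accessed while earlier enters remain unbalanced.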

4.4.6.6.7. Querying a GateMP Instance¶

GateMP_query() returns TRUE if a gate has a given quality, and FALSE otherwise, including cases when the gate does not recognize the constant describing the quality. The qualities you can query are:

GateMP_Q_BLOCKING. If GateMP_Q_BLOCKING is FALSE, the gate never blocks.

GateMP_Q_PREEMPTING. If GateMP_Q_PREEMPTING is FALSE, the gate does not allow other threads to preempt the thread that has already entered the gate.
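The query semantics, including the FALSE result for an unrecognized quality, can be sketched as follows. The MODEL_Q_* constants, the ModelGateTraits type, and model_query() are hypothetical stand-ins and do not use the actual values of the GateMP constants.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical quality constants for the model. */
enum { MODEL_Q_BLOCKING = 1, MODEL_Q_PREEMPTING = 2 };

/* Hypothetical description of what a particular gate can do. */
typedef struct {
    bool canBlock;          /* entering the gate may block the caller */
    bool allowsPreemption;  /* holder may be preempted by other threads */
} ModelGateTraits;

/* Return true only if the gate has the given quality. Unknown
 * qualities are reported as false. */
static bool model_query(const ModelGateTraits *traits, int quality)
{
    switch (quality) {
    case MODEL_Q_BLOCKING:
        return traits->canBlock;
    case MODEL_Q_PREEMPTING:
        return traits->allowsPreemption;
    default:
        return false;
    }
}
```

A caller running in a context that must not block could use such a query to decide whether a particular gate is safe to enter from that context.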

4.4.6.6.8. NameServer Interaction¶