5.3. Application Demos¶

5.3.1. Dual Camera¶

Dual camera demo

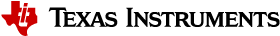

Below diagram illustrates the video data processing flow of dual camera demo.

Dual camera example demo demonstrates following-

- Video capture using V4L2 interface from up to two cameras.

- QT QWidget based drawing of user interface using linuxfb plugin. linuxfb is fbdev based software drawn QPA.

- Hardware accelerated scaling of input video from primary camera to display resolution using DSS IP.

- Hardware accelerated scaling of input video from secondary camera (if connected) to lower resolution using DSS IP.

- Hardware accelerated overlaying of two video planes and graphics plane using DSS IP.

- Scaling of two video planes and overlaying with graphics plane happens on the fly in single pass inside DSS IP using DRM atomic APIs.

- Snapshot of the screen using JPEG compression running on ARM. The captured images are stored in filesystem under /usr/share/camera-images/ folder

- The camera and display driver shares video buffer using dmabuf protocol. Capture driver writes captured content to a buffer which is directly read by the display driver without copying the content locally to another buffer (zero copy involved).

- The application also demonstrates allocating the buffer from either omap_bo (from omapdrm) memory pool or from cmem buffer pool. The option to pick omap_bo or cmem memory pool is provided runtime using cmd line.

- If the application has need to do some CPU based processing on captured buffer, then it is recommended to allocate the buffer using CMEM buffer pool. The reason being omap_bo memory pool doesn’t support cache read operation. Due to this any CPU operation involving video buffer read will be 10x to 15x slower. CMEM pool supports cache operation and hence CPU operations on capture video buffer are fast.

- The application runs in nullDRM/full screen mode (no windows manager like wayland/x11) and the linuxfb QPA runs in fbdev mode. This gives applicationfull control of DRM resource DSS to control and display the video planes.

Instructions to run dual camera demo

- Since the application need control of DRM resource (DSS) and there can be only one master, make sure that the wayland/weston is not running.

#/etc/init.d/weston stop

- Run the dual camera application

#dual-camera -platform linuxfb <0/1>

- When last argument is set as 0, capture and display driver allocates memory from omap_bo pool.

- When it is set to 1, the buffers are allocated from CMEM pool.

5.3.2. Video Analytics¶

Overview

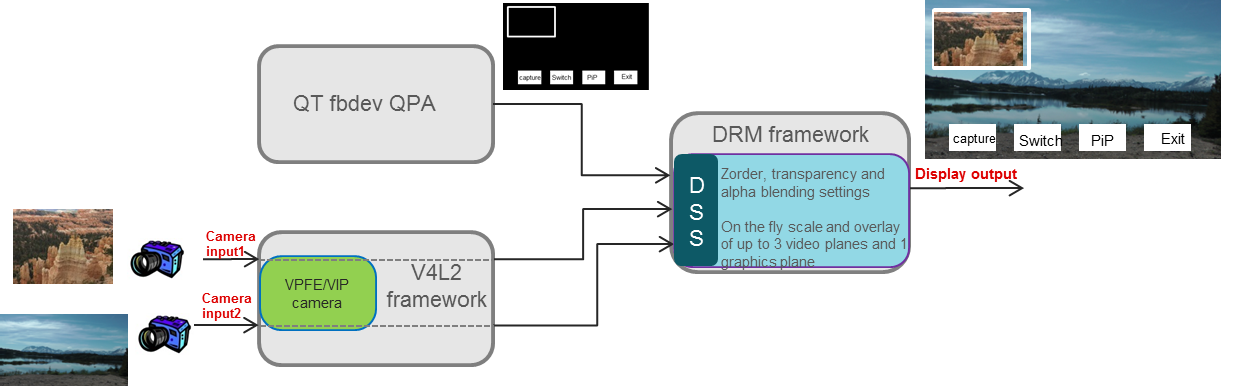

The Video Analytics demo shipped with the Processor SDK Linux for AM57xx showcases how a Linux Application running on Cortex A-15 cluster can take advantage of C66x DSP, 3D SGX hardware acceleration blocks to process a real-time camera input feed and render the processed output on display - all using open programming paradigms such as OpenCV, OpenCL, OpenGL, and Qt and standard Linux drivers for capture/display.

A hand gesture is detected using morphological operators from OpenCV library. The gesture is used to control a parameter (wind speed) used in physical simulation of water waves. Result of simulation is visualized in real-time via 3D rendering of water surface. The hardware IP blocks, such as IVAHD, M4 cores, are not utilized for this demo.

Setup

In order to re-create the demo, user would need a standard AM572x GP EVM and Processor SDK Linux package. The demo is integrated into the Matrix GUI and can be launched by touching the “Video Analytics” icon on the LCD. The sources to the demo are packaged in the Processor SDK Linux Installer, under “example-applications” folder and can be compiled using the SDK’s top-level Makefile

Building Blocks

- Camera Capture: The camera module on AM572x GP EVM acquires color frames of 640x480 resolution. The images are received by the OpenCV framework using camera capture class, that depends on the standard V4L2 Linux driver (/dev/video1).

- Gesture Recognition: The first 30 frames captured are used to estimate the background, and later subtracted to extract the hand contour, using erode and dilute operations available in the OpenCV Library. Analysis of largest contour is performed to find convex hulls. Hand gesture classification is based on ratio of outer and inner contour. Several discrete values are sent to wave simulation algorithm.

- Wave Simulation: Wave simulation is done in two stages: calculation of initial (t=0)) Phillips spectrum followed by spectrum updates per each time instant. Height map is generated in both steps using 2D IFFT (of spectrum). The Gesture inputs are used to vary the wind direction for wave simulation

- Graphics Rendering: Finally, 2D vertex grid is generated with the above height map. Fragment rendering uses user-defined texture and height dependent coloring.

- Display: The displayed content includes - Camera feed, overlayed with the contour detection, and the generated water surface

Block diagram

Demo Internals

Programming Paradigm

- Code running on A15 Linux platform is written in C++ and links with QT5, OpenCV and OpenCL host side libraries.

- Code running on DSP C66x is written in OpenCL C

- Code running on GPU is written in GLSL.

- Standard V4L2 Linux driver is used for camera capture

- The demo uses OpenCV 3.1, OpenCL 1.2, OpenGL ES 2.0/GLSL and QT 5.5

OpenCV (running on ARM A15s)

Video analytics functionality includes simple algorithm to extract contours of hand, and detect how widely opened the hand is. A parameter which indicates ratio between convex hull and contours is used to control physical simulation of waves.This is an output argument converted to wind speed, in 2.5-10 ms range (4 discrete values). Initially image background is estimated (for 30 frames) using MOG2 (Mixture Of Gaussians), standard OpenCV algorithm. Later, the background is subtracted from camera frames, before morphological operations: erode and dilute are performed.Next two steps include contour detection (which is also presented in camera view window) and convex hull estimation. Ratio between outer and inner contour indicates (in 1.1-1.8 range) can be correlated with number of fingers and how widely are they spread. If they are more widely spread, parameter is converted to higher wind speed and waves become higher.

OpenCL (running on ARM A15s + C66x)

Wave surface simulation is based on generation of Phillips (2D) spectrum followed by 2D IFFT (256x256). Both operations are executed by OpenCL offload to single DSP core. Many details on this algorithm can be found in graphics.ucsd.edu/courses/rendering/2005/jdewall/tessendorf.pdf. This stage is controlled by wave speed (output of gesture detection algorithm) using fixed wind direction (an idea for improvement: wind direction can be controlled using hand gesture).

Height map in form of 256x256 float matrix is generated on output and used by OpenGL based vertex renderer (performed in next step). Wave surface simulation consists of two steps:

- initial setup defining starting conditions (wind speed and wind direction are used as input in this stage only)

- update of wave surface height map (Phillips spectrum modification generated at t=0, along time axe and 2D IFFT for each time step).

OpenGL ES (running on ARM A15s + GPU/SGX)

FLOW: 256x256 float height map matrix + fixed texture -> OpenGL ES based algorithm -> rendered frame buffers

OpenGL ES is a subset of Open GL for desktop devices. Important difference (for this project) is requirement to use vertex buffers and only triangle strips. Also Qt provides wrapper functions (QOpenGL functions) created with intention to hide differences between different OpenGL versions and also to slightly simplify programming. On the downside, it involves Qt specific syntax (not far from original OpenGL ES functions). Height Map data (256x256, but sub-sampled by 4x4, hence 64x64 vertices) received from previous stage, are rendered specific coloring and user supplied JPEG image. Fragment shader does mixing of texture and color derived from interpolated height giving more natural visualization. Currently lighting effects are not used (Implementing this can significantly improve the quality of rendering).

QT 5 (running on ARM A15)

FLOW: user input (mouse and keyboard) -> QT application -> windowing system and control of above threads

Directory Structure

The functionality is organized as shows in the files/description below.

| file name | description | |

| 1 | CameraConnectDialog.cpp/CameraConnectDialog.h | |

| 2 | CameraGrab.cpp/CameraGrab.h | Auxilliary camera frame acquisition functions to achieve full FPS |

| 3 | CameraView.cpp/CameraView.h | Major class instantiated after connecting to camera. This class creates processing thread, wavesimulation thread and also instantiates wave display (3D graphics) widget. |

| 4 | CaptureThread.cpp/CaptureThread.h | Input (camera) frame buffering. |

| 5 | FrameLabel.cpp/FrameLabel.h | |

| 6 | GeometryEngine.cpp/GeometryEngine.h | Height map mash creation, vertex updates |

| 7 | Gesture.cpp/Gesture.h | Hand gesture (OpenCV) detection algorith, |

| 8 | ImageProcessingSettingsDialog.cpp/ImageProcessingSettingsDialog.h | Settings of parameters used by image processing algorithms. |

| 9 | main.cpp | main function |

| 10 | MainWindow.cpp/MainWindow.h | |

| 11 | MatToQImage.cpp/MatToQImage.h | Conversion from OpenCV Mat object to QT QImage object |

| 12 | ProcessingThread.cpp/ProcessingThread.h | Main processing thread, frame rate dynamics, invokes variois image processing algorithms |

| 13 | SharedImageBuffer.cpp/SharedImageBuffer.h | |

| 14 | WaveDisplayWidget.cpp/WaveDisplayWidget.h | Wave surface 3D rendering (invokes methods from GeometryEngine.cpp) |

| 15 | WaveSimulationThread.cpp/WaveSimulationThread.h | Wave surface physical simulation thread - host side of OpenCL dispatch. |

| 16 | Buffers.h | |

| 17 | Structures.h | |

| 18 | Config.h | |

| 19 | phillips.cl | DSP OpenCL phillips spectrum generation kernels and 2D IFFT kernel (invoking dsplib.ae66 optimized 1D FFT). After compilation (by clocl) phillips.dsp_h is generated, and included in WaveSimulationThread.cpp (ocl kernels are compiled and downloaded in this thread, before run-time operation is started). |

| 20 | vshader.glsl | Vertex shader (gets projection matrix, position and texture position as input arguments; generates texture coordinate and height for fragment shader |

| 21 | fshader.glsl | Fragment shader doing linear interpolation of textures and mixing texture with height dependent color, and adds ambient light |

| 22 | shaders.qrc | Specify shader filenames |

| 23 | textures.qrc | Specify texture file (2D block which is linearly interpolated in fragment shader, using position arguments provided by vertex shader) |

| 24 | qt-opencv-multithreaded.pro | Top level QT make file: phi |

| 25 | ImageProcessingSettingsDialog.ui | User interface definition file for modification of algorithm parameters. |

| 26 | CameraView.ui | Camera view user interface definition file - right click mouse action brings up image processing algorithm selection |

| 27 | CameraConnectDialog.ui | |

| 28 | MainWindow.ui |

Performance

The hand gesture detection/wave surface simulation/wave surface rendering demo pipeline runs at 18-20 fps. For other algorithms (e.g. smoothing, canny) the pipeline runs at 33-35 fps.

Licensing

The demo code is provided under BSD/MIT License

FAQ/Known Issues

- Brighter lighting conditions are necessary for noise-free camera input, to allow good contour detection. In poor lighting conditions, there would be false or no detection.

- OpenCV 3.1 version shows low FPS rate for Camera Capture. Hence, a custom solution based on direct V4L2 ioctl() calls is adopted (cameraGrab.cpp file) to boost the FPS

5.3.3. DLP 3D Scanner¶

This demo demonstrates an embedded 3D scanner based on the structured light principle, with am57xx. More details can be found at https://www.ti.com/tool/tidep0076

5.3.4. People Tracking¶

This demo demonstrates the capability of people tracking and detection with TI’s ToF (Time-of-Flight) sensor. More details can be found at https://www.ti.com/lit/pdf/tidud06

5.3.5. Barcode Reader¶

Introduction

Detecting 1D and 2D barcodes on an image, and decoding those barcodes are important use cases for the Machine-Vision. Processor SDK Linux has integrated the following open source components, and examples to demonstrate both of these features.

- Barcode detection: OpenCV

- Barcode Decoder/Reader: Zbar Library

OpenCV includes python wrapper to allow quick and easy prototyping. It also includes support for OpenCL offload on devices with C66 DSP core (currently OpenCV T-API cannot be used with python wrapper).

Zbar Barcode Decoder/Reader

Recipes for zbar barcode reader have been added to build the zbar library and test binary. Zbar is standalone library, which does not depend on OpenCV. Current release is not accelerated via OpenCL dispatch (obvious candidates are zbar_scan_y() and calc_tresh() functions consuming >50% of CPU resources).

Command to run zbar test binary:

barcode_zbar [barcode_image_name]

Barcode Region Of Interest (ROI) Detection with OpenCV and Python

Detecting Barcodes in Images using Python and OpenCV provides python scripts which run with OpenCV 2.4.x. For use with Process SDK Linux which has OpenCV 3.1, modifications have been made to the original python scripts so that they can run with OpenCV 3.1. Below please find the modified python scripts detect_barcode.py.

# import the necessary packages

import numpy as np

import argparse

import cv2

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", required = True, help = "path to the image file")

args = vars(ap.parse_args())

# load the image and convert it to grayscale

image = cv2.imread(args["image"])

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# compute the Scharr gradient magnitude representation of the images

# in both the x and y direction

gradX = cv2.Sobel(gray, ddepth = cv2.CV_32F, dx = 1, dy = 0, ksize = -1)

gradY = cv2.Sobel(gray, ddepth = cv2.CV_32F, dx = 0, dy = 1, ksize = -1)

# subtract the y-gradient from the x-gradient

gradient = cv2.subtract(gradX, gradY)

gradient = cv2.convertScaleAbs(gradient)

# blur and threshold the image

blurred = cv2.blur(gradient, (9, 9))

(_, thresh) = cv2.threshold(blurred, 225, 255, cv2.THRESH_BINARY)

# construct a closing kernel and apply it to the thresholded image

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (21, 7))

closed = cv2.morphologyEx(thresh, cv2.MORPH_CLOSE, kernel)

# perform a series of erosions and dilations

closed = cv2.erode(closed, None, iterations = 4)

closed = cv2.dilate(closed, None, iterations = 4)

# find the contours in the thresholded image, then sort the contours

# by their area, keeping only the largest one

(_, cnts, _) = cv2.findContours(closed.copy(), cv2.RETR_EXTERNAL,cv2.CHAIN_APPROX_SIMPLE)

c = sorted(cnts, key = cv2.contourArea, reverse = True)[0]

# compute the rotated bounding box of the largest contour

rect = cv2.minAreaRect(c)

box = np.int0(cv2.boxPoints(rect))

# draw a bounding box arounded the detected barcode and display the

# image

cv2.drawContours(image, [box], -1, (0, 255, 0), 3)

cv2.imshow("Image", image)

cv2.waitKey(0)

python detect_barcode.py --image [barcode_image_name]

Barcode Region Of Interest (ROI) Detection with OpenCV and CPP implementation

Current version of OpenCV (3.1) Python wrapper does not support T-API which is needed for OpenCL dispatch. So Processor SDK Linux is including the same algorithm implemented in CPP (https://git.ti.com/apps/barcode-roi-detection), which can be executed on ARM platform only, or with DSP acceleration. CPP example includes more options for various types of input and output, and run-time control of OpenCL dispatch.

This example allows multiple command line options:

- Using static image (JPG or PNG) as input

- Live display or static image output (JPG or PNG)

- Use OpenCL offload or not

Target filesystem includes detect_barcode in “/usr/bin”, and test vector in “/usr/share/ti/image” folder. Again, after successful detection image with barcode in green bounding box is displayed or written to output file. Below are various use cases of detect_barcode.

- Static image input, no opencl dispatch, live display: detect_barcode sample_barcode.jpg 0 1

- Static image input, opencl ON, live display: detect_barcode sample_barcode.jpg 1 1

- Static image input, opencl ON, file output: detect_barcode sample_barcode.jpg 1 image_det.png

Majority of workload is in following lines:

ocl::setUseOpenCL(ocl_acc_flag); /* Turn ON or OFF OpenCL dispatch */

cvtColor(im_rgb,im_gray,CV_RGB2GRAY);

im_gray.copyTo(img_gray);

Sobel( img_gray, gradX, CV_16S, 1, 0, -1, 1, 0, BORDER_DEFAULT ); /* Input is 8-bit unsigned, output is 16-bit signed */

Sobel( img_gray, gradY, CV_16S, 0, 1, -1, 1, 0, BORDER_DEFAULT ); /* Input is 8-bit unsigned, output is 16-bit signed */

subtract(gradX, gradY, gradient);

convertScaleAbs(gradient, abs_gradient);

// blur and threshold the image

//GaussianBlur( abs_gradient, blurredImg, Size(7,7), 0, 0, BORDER_DEFAULT );

GaussianBlur( abs_gradient, blurredImg, Size(3,3), 0, 0, BORDER_DEFAULT ); /* 3x3 kernel */

threshold(blurredImg, threshImg, 225, 255, THRESH_BINARY);

Mat elementKernel = getStructuringElement( MORPH_RECT, Size( 2*10+1, 2*3+1 ), Point(10, 3));

ocl::setUseOpenCL(false); /* Turn OFF OpenCL dispatch */

morphologyEx( threshImg, closedImg, MORPH_CLOSE, elementKernel );

ocl::setUseOpenCL(ocl_acc_flag); /* Turn ON or OFF OpenCL dispatch */

erode(closedImg, img_final, UMat(), Point(-1, -1), 4); /* erode, 4 iterations */

dilate(img_final, img_ocl, UMat(), Point(-1, -1), 4); /* dilate, 4 iteration */

ocl::setUseOpenCL(false); /* Turn OFF OpenCL dispatch */

Not all OpenCV kernels can be dispatched to DSP via OpenCL. Please refer to OpenCV#OpenCL_C_C66_DSP_kernels for the list of kernels which are currently DSP accelerated.

In order to use OpenCL dispatch, it is necessary to:

- Enable OpenCL use (by setting environment variables, and invoking ocl::setUseOpenCL(ocl_acc_flag))

- Use T-API: e.g. replace Mat types with UMat types

5.3.6. EVSE Demos¶

This demo showcases Human Machine Interface (HMI) for Electric Vehicle Supply Equipment(EVSE) Charging Stations. More details can be found at https://www.ti.com/tool/TIDEP-0087

5.3.7. Protection Relay Demo¶

Matrix UI provides out of box demo to showcase Human Machine Interface (HMI) for Protection Relays. More details can be found at https://www.ti.com/tool/TIDEP-0102

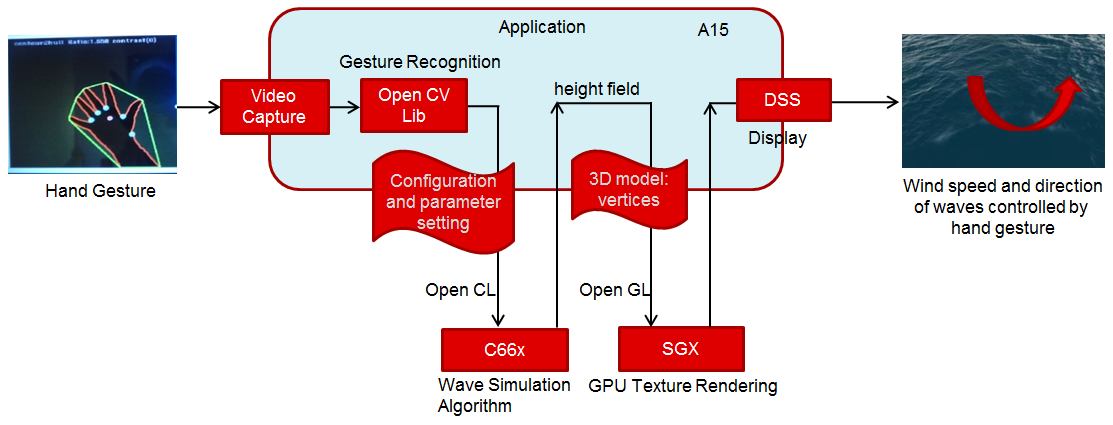

5.3.8. Qt5 Thermostat HMI Demo¶

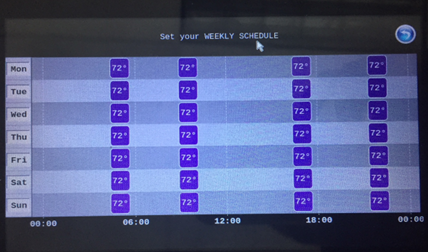

Qt-based Thermostat HMI demo

The morphing of devices like the basic thermostat into a breed of power smart thermostats has shown how the appliances in residences today must adapt, re-imagine and, in some cases, reinvent their role in the connected home of the future or risk being left behind. Thermostats in particular have come a long way since they were first created. There was a time when the mechanical dial thermostat was the only option. It was simple and intuitive to operate because what you saw was what you got. The user simply set the temperature and walked away. Unfortunately, it was not efficient. Energy and money could be wasted since its settings would only change when someone manually turned the dial to a new temperature. Modern thermostats provide a much richer Graphical Interface, with several features. Processor SDK now includes a Qt5 based thermostat with the following key features - that should easily enable customers to use as a starting point for further innovation.

- Display three-day weather forecast, daily temperature range for the selected city from openweathermap.org

- Display and adjust room temperature

- Pop-up menu to select city, temperature format and set network proxy

- Weekly temperature schedule

- Away Energy saving mode

Fig. 5.1 Figure1: three-day weather forecast

Fig. 5.2 Figure2: Select City for weather forecasts

Fig. 5.3 Figure3: Set Proxy for Internet connectivity

Fig. 5.4 Figure4: Weekly temperature schedule

Fig. 5.5 Figure5: Inside temperature, Away energy saving settings

The demo is hosted at https://git.ti.com/apps/thermostat-demo, and also the sources are located at

<SDK-install-dir>/example-applications/qt-tstat-2.0/

The code can be compiled, installed using top-level SDK Makefile

make qt-tstat-clean

make qt-tstat

make qt-tstat-install

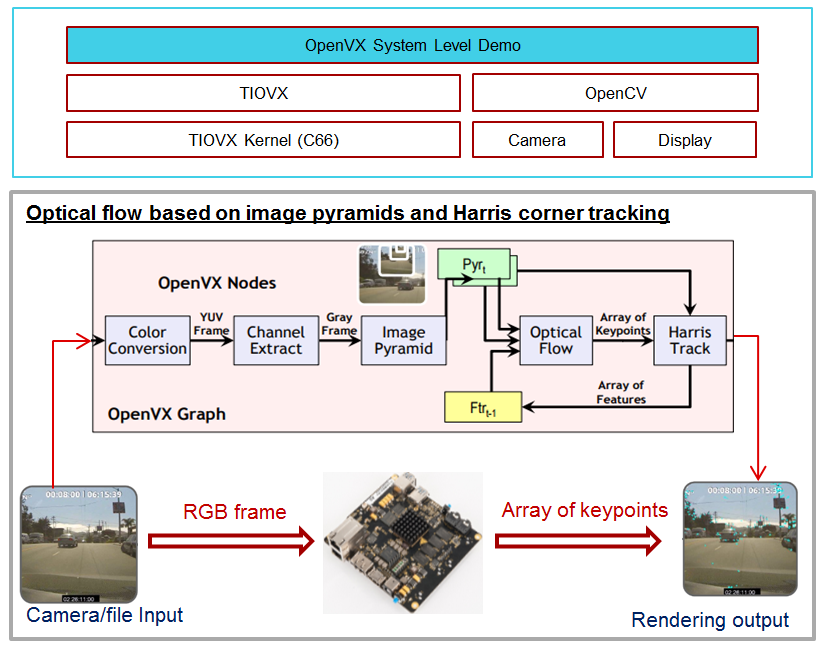

5.3.9. Optical Flow with OpenVX¶

5.3.9.1. OpenVX Example Block Diagram¶

OpenVx tutorial example demontrates the optical flow based on image pyramids and Harris corner tracking. It builds upon TIOVX, and utilizes OpenCV for reading the input (from file or camera) and rending the output for display. Input frame from OpenCV invokes OpenVX graph, and the processing is done once per input frame. OpenVX defines the graph topology and all configuration details. All resources are allocated and initialzied before processing.

5.3.9.2. Run OpenVx Tutorial Example¶

The binary for OpenVX tutorial example is located at /usr/bin/tiovx-opticalflow. It is a statically linked Linux application running on Arm.

Before running the tutorial example, download the test clip and copy it to file system (e.g. ~/tiovx). Then, execute the commands below to load OpenVx firmware and run the optical flow example.

reload-dsp-fw.sh tiovx # load openvx firmware and restart dsps

tiovx-opticalflow /home/root/tiovx/PETS09-S1-L1-View001.avi # Run tutorial example

Screen capture after running the optical flow example:

Logs:

root@am57xx-evm:~# tiovx-opticalflow /home/root/PETS09-S1-L1-View001.avi

VX_ZONE_INIT:Enabled

VX_ZONE_ERROR:Enabled

VX_ZONE_WARNING:Enabled

VSPRINTF_DBG:SYSTEM NOTIFY_INIT: starting

VSPRINTF_DBG: SYSTEM: IPC: Notify init in progress !!!

VSPRINTF_DBG:[0] DUMP ALL PROCS[3]=DSP1

VSPRINTF_DBG:[1] DUMP ALL PROCS[4]=DSP2

VSPRINTF_DBG:Next rx queue to open:QUE_RX_HOST

VSPRINTF_DBG:Just created MsgQue

VSPRINTF_DBG:Created RX task

VSPRINTF_DBG:Next tx queue to open:QUE_RX_DSP1, procId=3

VSPRINTF_DBG:Next tx queue to open:QUE_RX_DSP2, procId=4

VSPRINTF_DBG:Dump all TX queues: procId=3 name=QUE_RX_DSP1 queId=262272, msgSize=68, heapId=0

VSPRINTF_DBG:Dump all TX queues: procId=4 name=QUE_RX_DSP2 queId=196736, msgSize=68, heapId=0

VSPRINTF_DBG:SYSTEM: IPC: SentCfgMsg, procId=3 queuId=262272

VSPRINTF_DBG:SYSTEM: IPC: SentCfgMsg, procId=4 queuId=196736

VSPRINTF_DBG: SYSTEM: IPC: Notify init DONE !!!

VSPRINTF_DBG:>>>> ipcNotifyInit returned: 0

VSPRINTF_DBG:SYSTEM NOTIFY_INIT: done

OK: FILE /home/root/PETS09-S1-L1-View001.avi 768x480

init done

Using Wayland-EGL

wlpvr: PVR Services Initialised

LOG: [ status = -1 ] Hello there!

Run the optical flow example with camera input:

reload-dsp-fw.sh tiovx # load openvx firmware and restart dsps

tiovx-opticalflow # Run tutorial example with camera input

Logs:

root@am57xx-evm:~# tiovx-opticalflow

VX_ZONE_INIT:Enabled

VX_ZONE_ERROR:Enabled

VX_ZONE_WARNING:Enabled

VSPRINTF_DBG:SYSTEM NOTIFY_INIT: starting

VSPRINTF_DBG: SYSTEM: IPC: Notify init in progress !!!

VSPRINTF_DBG:[0] DUMP ALL PROCS[3]=DSP1

VSPRINTF_DBG:[1] DUMP ALL PROCS[4]=DSP2

VSPRINTF_DBG:Next rx queue to open:QUE_RX_HOST

VSPRINTF_DBG:Just created MsgQue

VSPRINTF_DBG:Created RX task

VSPRINTF_DBG:Next tx queue to open:QUE_RX_DSP1, procId=3

VSPRINTF_DBG:Next tx queue to open:QUE_RX_DSP2, procId=4

VSPRINTF_DBG:Dump all TX queues: procId=3 name=QUE_RX_DSP1 queId=262272, msgSize=68, heapId=0

VSPRINTF_DBG:Dump all TX queues: procId=4 name=QUE_RX_DSP2 queId=196736, msgSize=68, heapId=0

VSPRINTF_DBG:SYSTEM: IPC: SentCfgMsg, procId=3 queuId=262272

VSPRINTF_DBG:SYSTEM: IPC: SentCfgMsg, procId=4 queuId=196736

VSPRINTF_DBG: SYSTEM: IPC: Notify init DONE !!!

VSPRINTF_DBG:>>>> ipcNotifyInit returned: 0

VSPRINTF_DBG:SYSTEM NOTIFY_INIT: done

OK: CAMERA#1 640x480

init done

Using Wayland-EGL

wlpvr: PVR Services Initialised

LOG: [ status = -1 ] Hello there!

After finishing running the OpenVX tutorial example, switch the firmware back to the default for OpenCL:

reload-dsp-fw.sh opencl # load opencl firmware and restart dsps

5.3.10. ROS and Radar¶

5.3.10.1. Introduction¶

The ROS is meta-ros running on top of Linux. It is a collection of software libraries and packages to help you write robotic applications. Both mobile platforms and static manipulators can leverage wide colelction of open-source drivers and tools. ROS framework is mainly based on publisher-subscriber model, and in some cases server-client mode.

It is frequently the case that ROS based applications require sensors enabling interaction with the environment. Various types of sensors can be used: cameras, ultrasound, time-of-flight RGBD cameras, lidars, ...

In case of 3D sensors, typical output is point cloud. Consumer of this information (e.g. for navigation) is decoupled from point cloud producer since format is well defined. Due to modular nature of ROS it is easy to replace one sensor with the other as long as format of information (in this case point cloud) is the same.

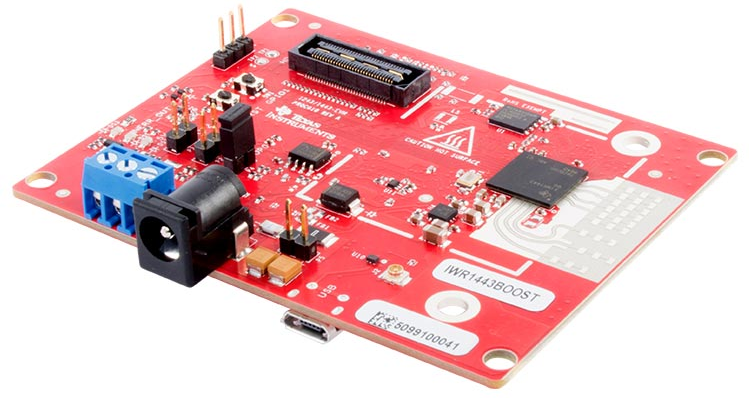

An important type of 3D sensor, especially suited for outdoor use cases is mmWave radar (77-81GHz). For this demo IWR/AWR-1443 or 1642 EVM is needed. It is connected to Sitara device over USB connection creating virtual UART.

Optionally to make this platform movable, Kobuki mobile base can be added. Sitara EVM and Radar EVM would be attached to Kobuki, and Sitara EVM running ROS would control Kobuki movement via USB connection. Please note that mobile base is not essentail for verification of ROS on Sitara plus Radar EVM operation.

It is desirable to have Ubuntu Linux box (typically Linux PC desktop or laptop) with Ubuntu 14.04LTS and ROS indigo installed (please note that ROS Indigo is natively supported in Ubuntu 14.04).

5.3.10.2. HW Setup¶

- USB to microUSB cable (connecting USB connector on Sitara side with microUSB connector on Radar EVM

- [optional] Kobuki mobile base (as used by Turtlebot2), http://kobuki.yujinrobot.com/

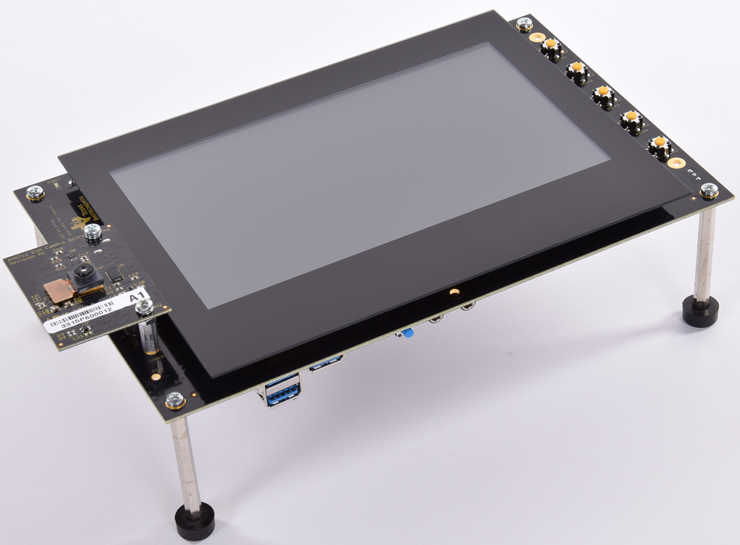

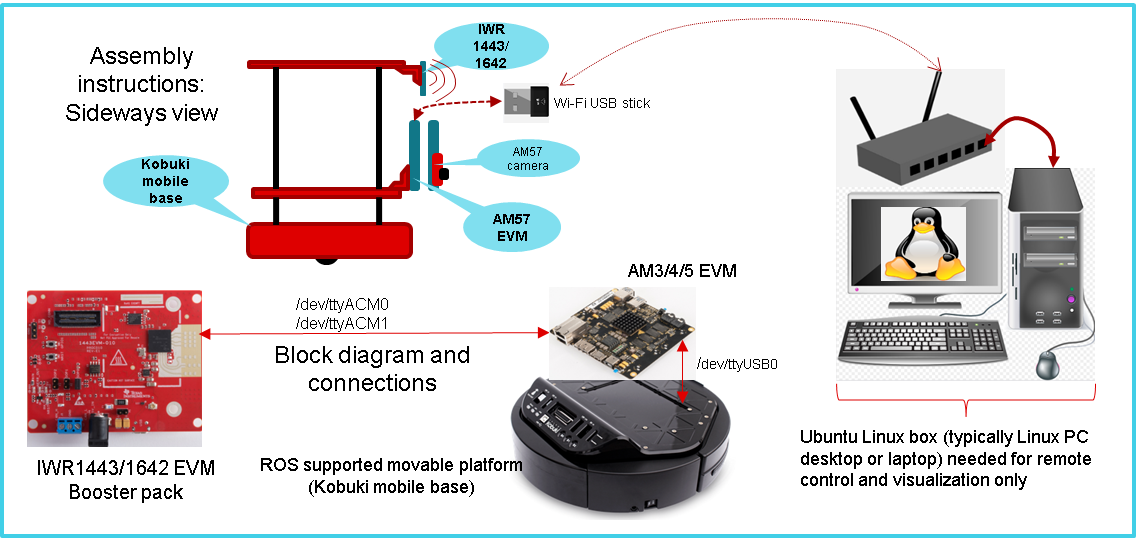

Fig. 5.6 Kobuki mobile base with Sitara, Radar EVMs and monitoring Ubuntu Linux box (PC desktop or laptop)

Compatibility

The ti_mmwave_rospkg ROS driver package on Sitara is tested with Processor Linux SDK which includes meta-ros layer, indigo, (from https://github.com/bmwcarit/meta-ros).

For visualization we use ROS indigo distro on Ubuntu Linux box, preffered for compatibility reasons. Run the commands below to install ROS indigo on Ubuntu Linux box. More details can be found from https://wiki.ros.org/indigo/Installation/Ubuntu.

sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu trusty main" > /etc/apt/sources.list.d/ros-latest.list'

wget https://raw.githubusercontent.com/ros/rosdistro/master/ros.key -O - | sudo apt-key add -

sudo apt-get update

sudo apt-get install ros-indigo-desktop-full

For this demo, IWR EVM requires mmWave SDK firmware. If different firmware is used on Radar EVM, please follow procedure using UniFlash tool to install mmWave SDK.

5.3.10.3. ROS configuration verification¶

ROS is part of PLSDK 4.3 target filesystem, including mmWave ROS driver, so no additional installation steps are required. ROS is installed in /opt/ros/indigo folder. Only setting up configuration related to specific IP address of target EVM, and Ubuntu Linux box host IP address is needed. ROS is distributed meta-ros, with single ROS host acting as a broker for all internode transcations. It runs roscore node and in this case roscore is executed on Sitara. Ubuntu Linux box will only run ROS RViz node since RViz requires OpenGL desktop support (Sitara only supports OpenGL ES 2.0).

Note

If visualization using RViz is not needed, Ubuntu Linux box is not necessary for this demo (except to start multiple SSH terminals).

Reconfigure PLSDK for Python3

PLSDK includes ROS packages from meta-ros layer, compiled with Python3 support (build/conf/local.conf : ROS_USE_PYTHON3 = “yes”) As PLSDK default python setting is Python 2.7, filesystem update is required for ROS tests to run:

root@am57xx-evm:/usr/bin# ln -sf python3 python.python

root@am57xx-evm:/usr/bin# ln -sf python3-config python-config.python

5.3.10.4. ROS between distributed nodes (Sitara and Ubuntu Linux box)¶

1st SSH terminal, to Sitara EVM

Modify /opt/ros/indigo/setup.bash

export ROS_ROOT=/opt/ros/indigo

export PATH=$PATH:/opt/ros/indigo/bin

export LD_LIBRARY_PATH=/opt/ros/indigo/lib

export PYTHONPATH=/usr/lib/python3.5/site-packages:/opt/ros/indigo/lib/python3.5/site-packages

export ROS_MASTER_URI=http://$SITARA_IP_ADDR:11311

export ROS_IP=$SITARA_IP_ADDR

export CMAKE_PREFIX_PATH=/opt/ros/indigo

export ROS_PACKAGE_PATH=/opt/ros/indigo/share

touch /opt/ros/indigo/.catkin

Then, execute

source /opt/ros/indigo/setup.bash

roscore

2nd SSH terminal, to Sitara EVM

source /opt/ros/indigo/setup.bash

rosrun roscpp_tutorials talker

You will see log similar to following:

....[ INFO] [1516637959.231163685]: hello world 5295

[ INFO] [1516637959.331163994]: hello world 5296

[ INFO] [1516637959.431165605]: hello world 5297

[ INFO] [1516637959.531161359]: hello world 5298

[ INFO] [1516637959.631162807]: hello world 5299

[ INFO] [1516637959.731166207]: hello world 5300

[ INFO] [1516637959.831215641]: hello world 5301

[ INFO] [1516637959.931165361]: hello world 5302

[ INFO] [1516637960.031165019]: hello world 5303

[ INFO] [1516637960.131164027]: hello world 5304

3rd SSH terminal, to Linux BOX (Optional)

export ROS_MASTER_URI=http://$SITARA_IP_ADDR:11311

export ROS_IP=$LINUXBOX_IP_ADDR

source /opt/ros/indigo/setup.bash

rosrun roscpp_tutorials listener

You will see log similar to following:

...

data: hello world 5338

---

data: hello world 5339

---

data: hello world 5340

---

data: hello world 5341

---

data: hello world 5342

---

data: hello world 5343

---

data: hello world 5344

5.3.10.5. mmWave ROS node on Sitara and RViz on Ubuntu Linux box¶

1st SSH terminal, to Sitara EVM

Start roscore, only if it is not already started

source /opt/ros/indigo/setup.bash roscore

2nd SSH terminal, to Sitara EVM

source /opt/ros/indigo/setup.bash

roslaunch ti_mmwave_rospkg rviz_1443_3d.launch

Change "rviz_1443_3d.launch to "rviz_1642_2d.launch", based on Radar EVM type (1443 or 1642).

If Kobuki mobile is available, use the command below instead:

roslaunch ti_mmwave_rospkg plsdk_rviz_1443_3d.launch

Sample log is included:

source /opt/ros/indigo/setup.bash

roslaunch ti_mmwave_rospkg plsdk_rviz_1443_3d.launch

... logging to /home/root/.ros/log/97dfe396-2711-11e8-bd4a-a0f6fdc25c34/roslaunch-am57xx-evm-7487.log

Checking log directory for disk usage. This may take awhile.

Press Ctrl-C to interrupt

Done checking log file disk usage. Usage is <1GB.

started roslaunch server http://192.168.0.222:35481/

SUMMARY

========

PARAMETERS

* /fake_localization/use_map_topic: True

* /mmWave_Manager/command_port: /dev/ttyACM0

* /mmWave_Manager/command_rate: 115200

* /mmWave_Manager/data_port: /dev/ttyACM1

* /mmWave_Manager/data_rate: 921600

* /mmWave_Manager/max_allowed_azimuth_angle_deg: 90

* /mmWave_Manager/max_allowed_elevation_angle_deg: 90

* /rosdistro: b'<unknown>\n'

* /rosversion: b'1.11.21\n'

NODES

/

fake_localization (fake_localization/fake_localization)

mmWaveQuickConfig (ti_mmwave_rospkg/mmWaveQuickConfig)

mmWave_Manager (ti_mmwave_rospkg/ti_mmwave_rospkg)

octomap_server (octomap_server/octomap_server_node)

static_tf_map_to_base_radar_link (tf/static_transform_publisher)

static_tf_map_to_odom (tf/static_transform_publisher)

ROS_MASTER_URI=http://192.168.0.222:11311

core service [/rosout] found

process[mmWave_Manager-1]: started with pid [7505]

process[mmWaveQuickConfig-2]: started with pid [7506]

process[static_tf_map_to_odom-3]: started with pid [7507]

process[static_tf_map_to_base_radar_link-4]: started with pid [7508]

[ INFO] [1520981858.224293205]: mmWaveQuickConfig: Configuring mmWave device using config file: /opt/ros/indigo/share/ti_mmwave_rospkg/cfg/1443_3d.cfg

process[octomap_server-5]: started with pid [7509]

process[fake_localization-6]: started with pid [7517]

[ INFO] [1520981858.367713151]: waitForService: Service [/mmWaveCommSrv/mmWaveCLI] has not been advertised, waiting...

[ INFO] [1520981858.436009564]: Initializing nodelet with 2 worker threads.

[ INFO] [1520981858.480256524]: mmWaveCommSrv: command_port = /dev/ttyACM0

[ INFO] [1520981858.480407967]: mmWaveCommSrv: command_rate = 115200

[ INFO] [1520981858.497923263]: waitForService: Service [/mmWaveCommSrv/mmWaveCLI] is now available.

[ INFO] [1520981858.498667137]: mmWaveQuickConfig: Ignored blank or comment line: '% ***************************************************************'

[ INFO] [1520981858.499059815]: mmWaveQuickConfig: Ignored blank or comment line: '% Created for SDK ver:01.01'

[ INFO] [1520981858.499462577]: mmWaveQuickConfig: Ignored blank or comment line: '% Created using Visualizer ver:1.1.0.1'

[ INFO] [1520981858.505357942]: mmWaveQuickConfig: Ignored blank or comment line: '% Frequency:77'

[ INFO] [1520981858.506164932]: mmWaveQuickConfig: Ignored blank or comment line: '% Platform:xWR14xx'

[ INFO] [1520981858.506843089]: mmWaveQuickConfig: Ignored blank or comment line: '% Scene Classifier:best_range_res'

[ INFO] [1520981858.507514414]: mmWaveQuickConfig: Ignored blank or comment line: '% Azimuth Resolution(deg):15 + Elevation'

[ INFO] [1520981858.508289684]: mmWaveQuickConfig: Ignored blank or comment line: '% Range Resolution(m):0.044'

[ INFO] [1520981858.508999398]: mmWaveQuickConfig: Ignored blank or comment line: '% Maximum unambiguous Range(m):9.01'

[ INFO] [1520981858.509816310]: mmWaveQuickConfig: Ignored blank or comment line: '% Maximum Radial Velocity(m/s):5.06'

[ INFO] [1520981858.510520982]: mmWaveQuickConfig: Ignored blank or comment line: '% Radial velocity resolution(m/s):0.64'

[ INFO] [1520981858.518476684]: mmWaveQuickConfig: Ignored blank or comment line: '% Frame Duration(msec):33.333'

[ INFO] [1520981858.519262364]: mmWaveQuickConfig: Ignored blank or comment line: '% Range Detection Threshold (dB):9'

[ INFO] [1520981858.519957764]: mmWaveQuickConfig: Ignored blank or comment line: '% Range Peak Grouping:disabled'

[ INFO] [1520981858.520157681]: mmWaveDataHdl: data_port = /dev/ttyACM1

[ INFO] [1520981858.520252841]: mmWaveDataHdl: data_rate = 921600

[ INFO] [1520981858.520315142]: mmWaveDataHdl: max_allowed_elevation_angle_deg = 90

[ INFO] [1520981858.520375654]: mmWaveDataHdl: max_allowed_azimuth_angle_deg = 90

[ INFO] [1520981858.520943849]: mmWaveQuickConfig: Ignored blank or comment line: '% Doppler Peak Grouping:disabled'

[ INFO] [1520981858.521671945]: mmWaveQuickConfig: Ignored blank or comment line: '% Static clutter removal:disabled'

[ INFO] [1520981858.522412729]: mmWaveQuickConfig: Ignored blank or comment line: '% ***************************************************************'

[ INFO] [1520981858.523396537]: mmWaveQuickConfig: Sending command: 'sensorStop'

[ INFO] [1520981858.533674630]: mmWaveCommSrv: Sending command to sensor: 'sensorStop'

[ INFO] [1520981858.536083724]: DataUARTHandler Read Thread: Port is open

[ INFO] [1520981858.548926257]: mmWaveCommSrv: Received response from sensor: 'sensorStop

Done

mmwDemo:/>'

[ INFO] [1520981858.550875817]: mmWaveQuickConfig: Command successful (mmWave sensor responded with 'Done')

[ INFO] [1520981858.551745758]: mmWaveQuickConfig: Sending command: 'flushCfg'

[ INFO] [1520981858.559882020]: mmWaveCommSrv: Sending command to sensor: 'flushCfg'

[ INFO] [1520981858.562726084]: mmWaveCommSrv: Received response from sensor: 'flushCfg

Done

mmwDemo:/>'

[ INFO] [1520981858.564378289]: mmWaveQuickConfig: Command successful (mmWave sensor responded with 'Done')

[ INFO] [1520981858.565240748]: mmWaveQuickConfig: Sending command: 'dfeDataOutputMode 1'

[ INFO] [1520981858.573026625]: mmWaveCommSrv: Sending command to sensor: 'dfeDataOutputMode 1'

[ INFO] [1520981858.576915985]: mmWaveCommSrv: Received response from sensor: 'dfeDataOutputMode 1

Done

mmwDemo:/>'

...

mmwDemo:/>'

[ INFO] [1520981858.776118886]: mmWaveQuickConfig: Command successful (mmWave sensor responded with 'Done')

[ INFO] [1520981858.776938726]: mmWaveQuickConfig: Sending command: 'compRangeBiasAndRxChanPhase 0.0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0'

[ INFO] [1520981858.782736816]: mmWaveCommSrv: Sending command to sensor: 'compRangeBiasAndRxChanPhase 0.0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0'

[ INFO] [1520981858.792102024]: mmWaveCommSrv: Received response from sensor: 'compRangeBiasAndRxChanPhase 0.0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0

Done

mmwDemo:/>'

[ INFO] [1520981858.793846462]: mmWaveQuickConfig: Command successful (mmWave sensor responded with 'Done')

[ INFO] [1520981858.794657355]: mmWaveQuickConfig: Sending command: 'measureRangeBiasAndRxChanPhase 0 1.5 0.2'

[ INFO] [1520981858.800233568]: mmWaveCommSrv: Sending command to sensor: 'measureRangeBiasAndRxChanPhase 0 1.5 0.2'

[ INFO] [1520981858.806256139]: mmWaveCommSrv: Received response from sensor: 'measureRangeBiasAndRxChanPhase 0 1.5 0.2

Done

mmwDemo:/>'

[ INFO] [1520981858.807890614]: mmWaveQuickConfig: Command successful (mmWave sensor responded with 'Done')

[ INFO] [1520981858.808687680]: mmWaveQuickConfig: Sending command: 'sensorStart'

[ INFO] [1520981858.814534734]: mmWaveCommSrv: Sending command to sensor: 'sensorStart'

[ INFO] [1520981858.822598283]: mmWaveCommSrv: Received response from sensor: 'sensorStart

Done

mmwDemo:/>'

[ INFO] [1520981858.824211611]: mmWaveQuickConfig: Command successful (mmWave sensor responded with 'Done')

[ INFO] [1520981858.824545077]: mmWaveQuickConfig: mmWaveQuickConfig will now terminate. Done configuring mmWave device using config file: /opt/ros/indigo/share/ti_mmwave_rospkg/cfg/1443_3d.cfg

[mmWaveQuickConfig-2] process has finished cleanly

3rd SSH terminal, to Sitara EVM

Bring up all ROS components for communicting and controlling Kobuki

source /opt/ros/indigo/setup.bash

roslaunch kobuki_node minimal.launch

4th SSH terminal, to Sitara EVM

Start Kobuki teleop console (remotely control Kobuki movement using keyboard)

source /opt/ros/indigo/setup.bash

roslaunch kobuki_keyop safe_keyop.launch

Operating kobuki from keyboard:

Forward/back arrows : linear velocity incr/decr.

Right/left arrows : angular velocity incr/decr.

Spacebar : reset linear/angular velocities.

d : disable motors.

e : enable motors.

q : quit.

5th SSH terminal, to Ubuntu Linux box

First, install ROS Indigo distribution on Ubuntu Linux box if it has not been done before.

Setup ROS variables on Ubuntu Linux box (to enable communication with ROS host on Sitara) then start RViz

export ROS_MASTER_URI=http://$SITARA_IP_ADDR:11311 (IP address of Sitara EVM, modify as needed)

export ROS_IP=$LINUX_BOX_IP_ADDR (IP address of Ubuntu Linux box, modify as needed)

source /opt/ros/indigo/setup.bash

rosrun rviz rviz

Alternatively, in order to get Kobuki avatar on the screen, install kobuki_description on Ubuntu Linux box and start RViz by launching view_model from kobuki_description.

git clone https://github.com/yujinrobot/kobuki

cd kobuki

cp -r kobuki_description /opt/ros/indigo/share

roslaunch kobuki_description view_model.launch

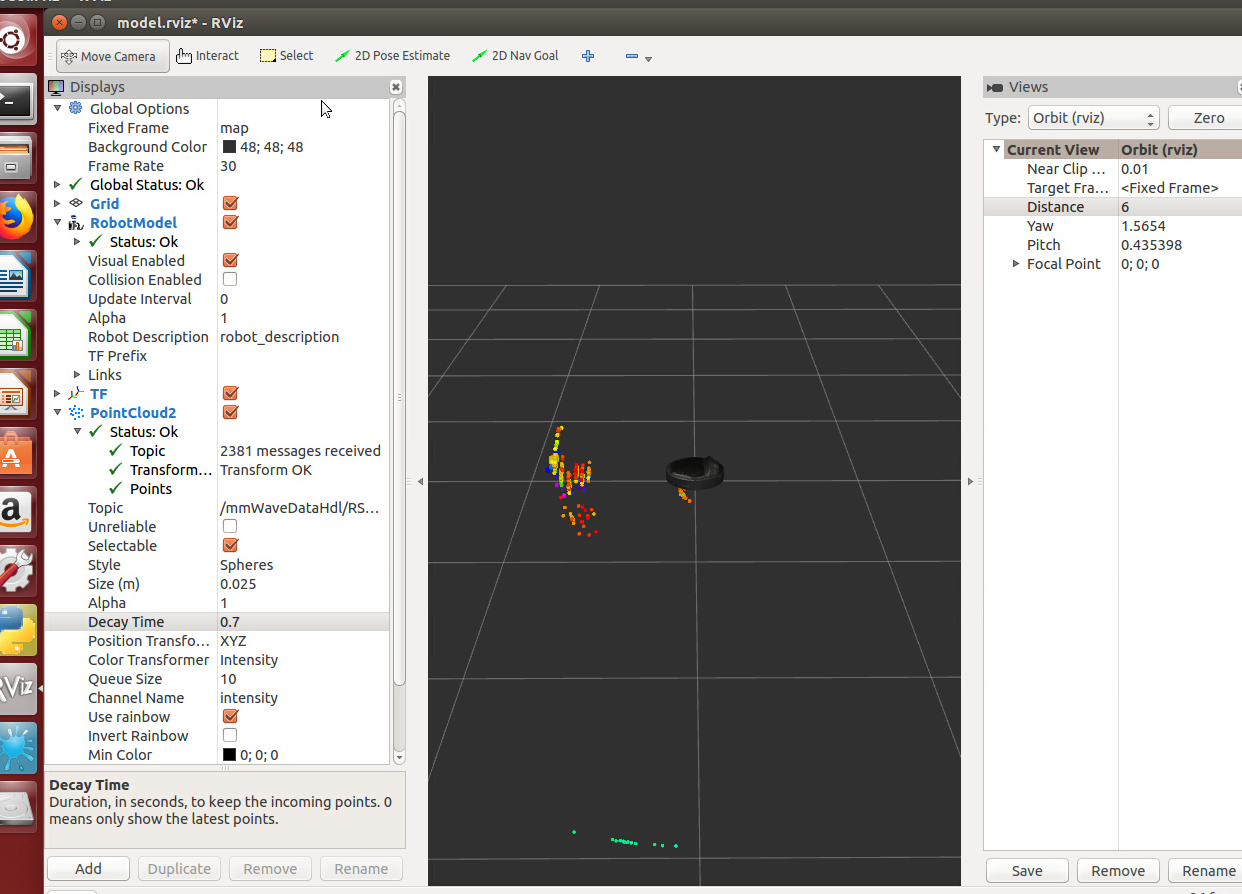

In RViz add point cloud from mmWave radar:

- Click Add->PointCloud2

- Select /mmWaveDataHdl/RScan from the Topic field dropdown for the PointCloud2 on the left hand panel

- Increase Size to 0.03, increase Decay Time to 0.25, and Select Style as “Spheres”.

- In rviz, select map for Fixed Frame in Global Options.

- If Kobuki is also started, set Reference Frame (left panel) to “map”.

You should see a point cloud image:

More information can be found in ROS driver document in chapters: “Visualizating the data”, “Reconfiguring the chirp profile”, and “How it works”

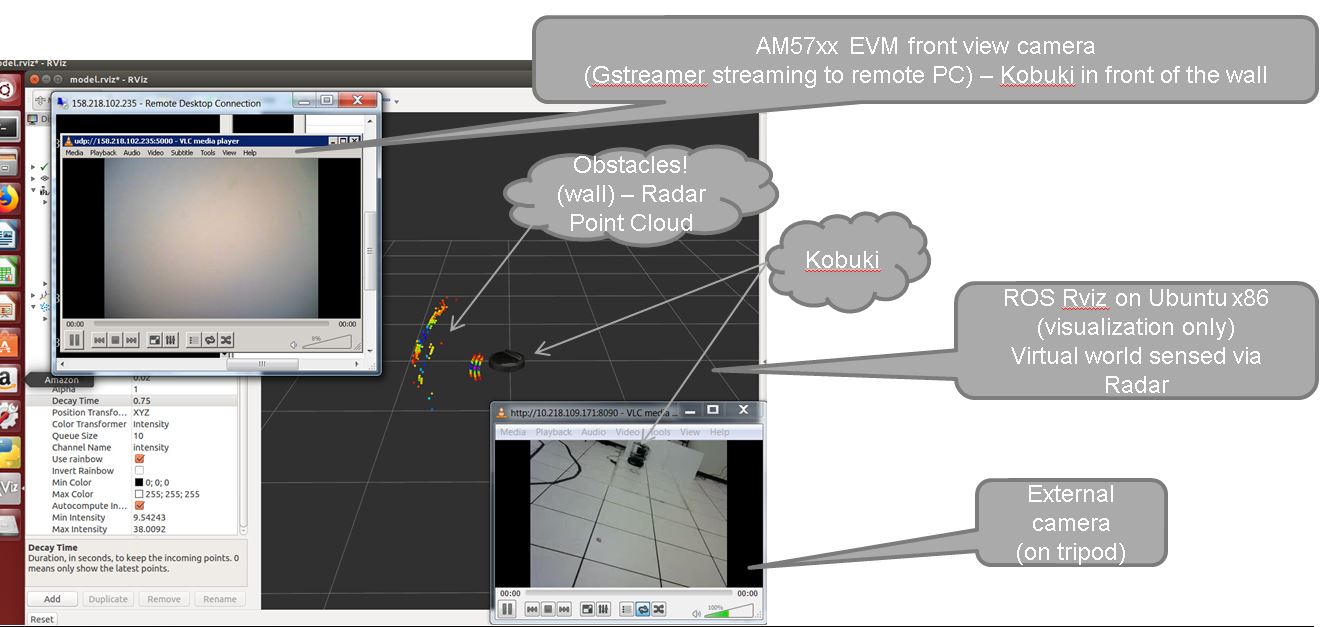

Starting GStreamer pipeline for streaming front view camer

It is possible to start GStreamer pipeline on Sitara and receive front-camera view on Ubuntu Linux box or Windows PC using VLC.

gst-launch-1.0 -e v4l2src device=/dev/video1 io-mode=5 ! 'video/x-raw, \

format=(string)NV12, width=(int)640, height=(int)480, framerate=(fraction)30/1' ! ducatih264enc bitrate=1000 ! queue ! h264parse config-interval=1 ! mpegtsmux ! udpsink host=192.168.0.100 sync=false port=5000

E.g. on Windows PC (192.168.0.100), you can watch the stream using: “Program Files (x86)VideoLANVLCvlc.exe” udp://@192.168.0.100:5000

Fig. 5.7 Multiple windows on Ubuntu Linux box showing ROS RViz, front camera view and external camera view

5.3.10.6. Sense and Avoid Demo with mmWave and Sitara¶

This section shows a complete sense and avoid navigation demo which runs on AM572x EVM with mmWave sensors. It will be available as a TI-design (TIDEP-01006) in the coming quarter.

5.3.10.6.1. Preparation on Ubuntu¶

First, install ROS Indigo distribution on Ubuntu Linux box if it has not been done before.

In order to run the RViz for this navigation demo, rviz_plugin_covariance is needed on Ubuntu Linux box. Follow instructions below to create ROS workspace, and then clone and build rviz_plugin_covariance.

Create ROS workspace

source /opt/ros/indigo/setup.bash

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/

catkin_make

Clone and build rviz_plugin_covariance

cd ~/catkin_ws/src

git clone https://github.com/laas/rviz_plugin_covariance

cd ~/catkin_ws/

catkin_make

At the end of catkin_make, there should be:

[100%] Built target rviz_plugin_covariance

After the plugin is successfully built, set up ROS variables on Ubuntu Linux box by adding the following two lines to ~/.bashrc

source /opt/ros/indigo/setup.bash

source ~/catkin_ws/devel/setup.bash

The last step of Ubuntu prepartation is copying the navigation demo packages from Sitara target filesystem (located under /opt/ros/indigo/share/) to Ubuntu Linux box (destitation is also /opt/ros/indigo/share/). The packages to be copied include:

/opt/ros/indigo/share/turtlebot_description/

/opt/ros/indigo/share/turtlebot_bringup/

/opt/ros/indigo/share/turtlebot_mmwave_launchers/

5.3.11. TIDL Classification Demo¶

Refer to various TIDL demos documented at Examples and Demos

5.3.12. PRU-ADC Demo¶

5.3.12.1. Introduction¶

This demo shows flexible interface for Simultaneous, Coherent DAQ Using Multiple ADCs via PRU-ICSS. More details can be found at https://www.ti.com/tool/TIDA-01555

5.3.12.2. Hardware Needed¶

Please refer to the TI Design to set up the hardware needed to run this demo.

- Beaglebone Black

- TIDA-01555 adapter card

- TIDA-01555 ADC board

- Desktop power supply to provide 5.5 volts to the ADC board

- Desktop signal generator to produce a 45-55Hz sine wave as an input

5.3.12.3. Steps to Run the Demo¶

Below are the steps to run the demo with Processor SDK Linux RT build for AM335x.

First, use /boot/am335x-boneblack-pru-adc.dtb as the default dtb:

cd /boot

cp am335x-boneblack.dtb am335x-boneblack.dtb.orig

cp am335x-boneblack-pru-adc.dtb am335x-boneblack.dtb

Then, link am335x-pru*_fw to the PRU-ADC firmware binaries:

cd /lib/firmware

ln -sf /lib/firmware/pru/PRU_ADS8688_Controller.out am335x-pru0-fw

ln -sf /lib/firmware/pru/PRU_ADS8688_Interface.out am335x-pru1-fw

After that, reboot the EVM, and then execute the ARM binary:

run-pru-adc.sh