5. Examples and Demos

Applications available by development platform

The Processor SDK for Linux provides a number of example applications. The table below lists the applications available on each platform and the User’s Guide associated with each component.

Note

The example applications below assume that you are using the default pinmux/profile configuration that the board ships with, unless otherwise noted in the individual application’s User’s Guide.

| Applications | AM335x EVM | AM335x ICE | AM335x SK | BeagleBone Black | AM437x EVM | AM437x Starter Kit | AM437x IDK | AM572x EVM | AM572x IDK | AM571x IDK | 66AK2Hx EVM & K2K EVM | K2Ex EVM | 66AK2L06 EVM | K2G EVM | OMAP-L138 LCDK | User’s Guide | Description |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Matrix GUI | X | X | X | X | X | X | X | X | X | X | X | X | X | X | X | Matrix User’s Guide | Provides an overview and details of the graphical user interface (GUI) implementation of the application launcher provided in the Sitara Linux SDK |

| Power & Clocks | X | X | X | X | X | X | X | X | X | X | X | X | X | X | X | Sitara Power Management User Guide | Provides details of power management features for all supported platforms. |

| Multimedia | X | X | X | X | X | X | X | X | X | Multimedia User’s Guide | Provides details on implementing ARM/Neon based multimedia using GStreamer pipelines and FFMPEG open source codecs. | ||||||

| Accelerated Multimedia | X | X | X | X | X | X | X | Multimedia Training | Provides details on hardware accelerated (IVAHD/VPE/DSP) multimedia processing using GStreamer pipelines. | ||||||||

| Graphics | X | X | X | X | X | X | X | X | X | Graphics Getting Started Guide | Provides details on hardware accelerated 3D graphics demos. | ||||||

| OpenCL | X | X | X | X | X | X | X | OpenCL Examples | Provides OpenCL example descriptions. Matrix GUI provides two out of box OpenCL demos: Vector Addition and Floating Point Computation. | ||||||||

| Camera | X | X | X | X | X | X | Camera User’s Guide | Provides details on how to support a smart camera sensor using the Media Controller Framework. | |||||||||

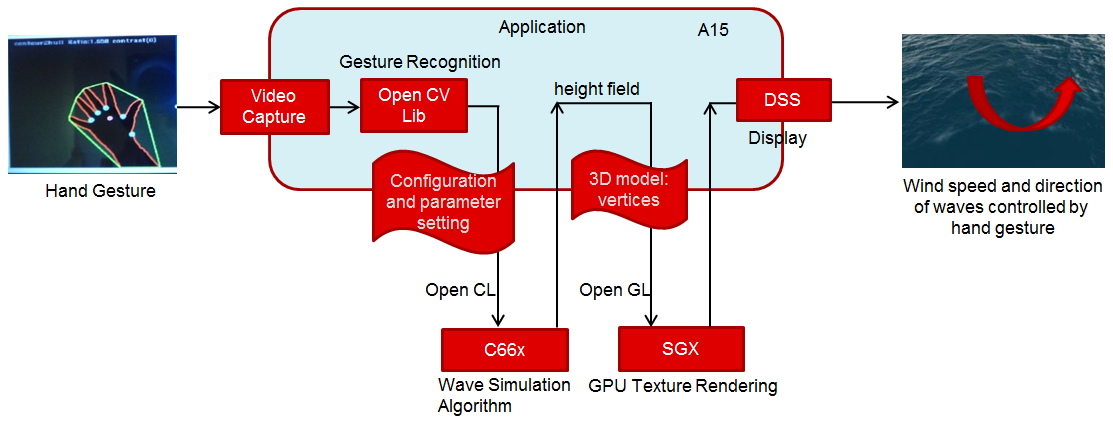

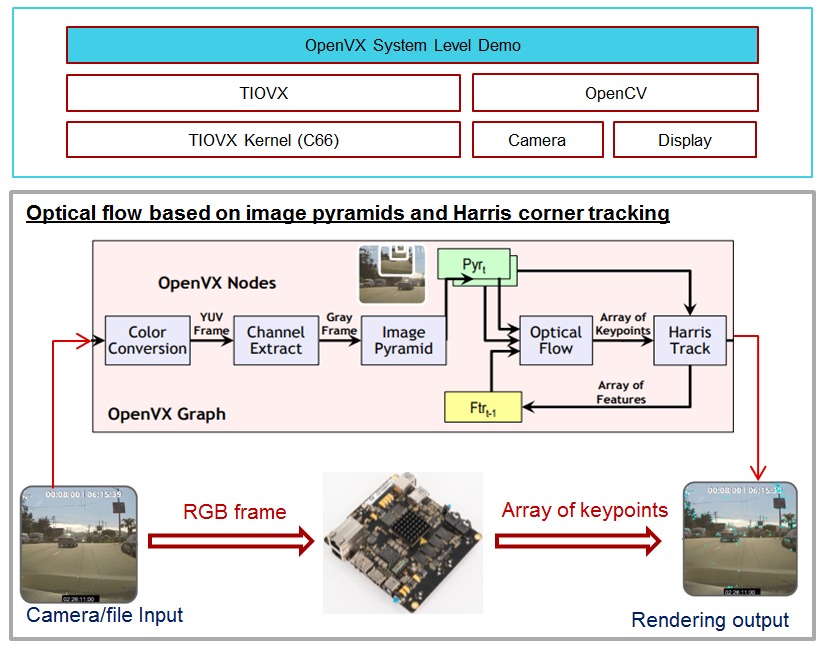

| Video Analytics | X | X | X | Video Analytics Demo | Demonstrates the capability of AM57x for video analytics. It builds on Qt and utilizes various IP blocks on AM57x. | ||||||||||||

| DLP 3D Scanner | X | X | X | 3D Machine Vision Reference Design | Demonstrates the capability of AM57x for DLP 3D scanning. | ||||||||||||

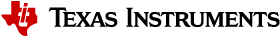

| Simple People Tracking | X | X | X | X | X | X | X | X | X | 3D TOF Reference Design | Demonstrates the capability of people tracking and detection with TI’s ToF (Time-of-Flight) sensor | ||||||

| Barcode Reader | X | X | X | X | X | X | X | X | X | X | X | X | X | X | Barcode Reader | Demonstrates the capability of detecting and decoding barcodes | |

| USB Profiler | X | X | X | X | X | X | X | X | X | X | X | X | X | X | X | NA | |

| ARM Benchmarks | X | X | X | X | X | X | X | X | X | X | X | X | X | X | X | NA | |

| Display | X | X | X | X | X | X | X | NA | |||||||||

| WLAN and Bluetooth | X | X | X | X | X | WL127x WLAN and Bluetooth Demos | Provides details on how to enable the WL1271 daughtercard which is connected to the EVM | ||||||||||

| QT Demos | X | X | X | X | X | X | X | X | X | Hands on with QT | Provides out of box Qt5.4 demos from Matrix GUI, including Calculator, Web Browser, Deform (shows vector deformation in the shape of a lens), and Animated Tiles. | ||||||

| Web Browser | X | X | X | X | X | X | X | X | X | NA | |||||||

| System Settings | X | X | X | X | X | X | X | X | X | X | X | X | X | X | X | NA | |

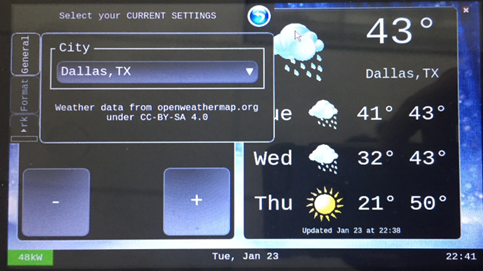

| EVSE Demo | X | X | X | X | X | X | X | X | X | HMI for EV charging infrastructure | Provides an out-of-box demo showcasing a Human Machine Interface (HMI) for Electric Vehicle Supply Equipment (EVSE) charging stations. | ||||||

| Protection Relay Demo | X | X | X | HMI for Protection Relay Demo | Matrix UI provides out of box demo to showcase Human Machine Interface (HMI) for Protection Relays. | ||||||||||||

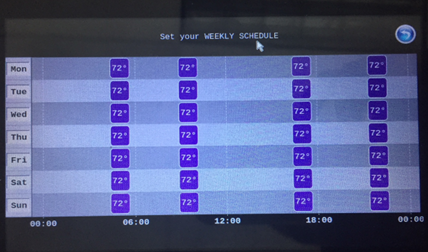

| Qt5 Thermostat HMI Demo | X | X | X | X | X | X | X | X | X | Qt5 Thermostat HMI Demo | Provides out of box Qt5-based HMI for Thermostat |

5.1. Matrix User Guide

Important Note

This guide is for the latest version of Matrix, which is included in Processor SDK Linux. If you are looking for information about the old Matrix, see **Previous Version of Matrix**.

Supported Platforms

This version of Matrix supports all Sitara devices, as well as K2H/K2K, K2E, and K2L platforms.

Initial Boot Up

When you first boot a target system that has a display device attached (e.g., AM335x, AM437x, and AM57x platforms), Matrix starts automatically. Matrix can be operated with either a touchscreen or a mouse; the default for most SDK platforms is touchscreen. If you encounter any problems, see **Matrix Startup Debug** for tips on getting everything running smoothly.

When you boot a target system without a display (e.g., K2H/K2K, K2E, and K2L platforms), Matrix is not started automatically, and only Remote Matrix is available after boot.

Overview

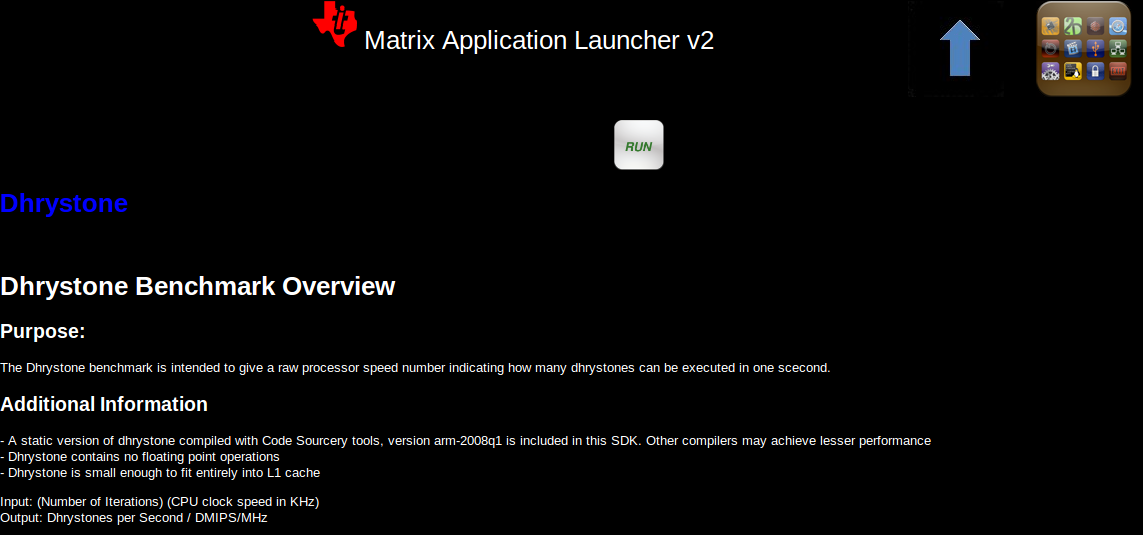

Matrix is an HTML5-based application launcher created to highlight the applications and demos provided in new Software Development Kits. There are two forms of Matrix: local and remote. All of the example applications and demos are available from either version. The local version launches by default when the target system boots and uses the target system’s touchscreen for user input. Depending on the display resolution, Matrix shows its icons as a 4x3 or a 4x2 grid.

Local and Remote Matrix

Local Matrix

Local Matrix refers to Matrix displayed on a display device attached to the target system. The launcher for Matrix is a simple Qt application that displays a WebKit-based browser pointed at the URL http://localhost:80.

NOTE: Local Matrix is not available on platforms without display support, nor on Keystone-2 platforms such as K2H/K2K, K2E, K2L, and K2G.

Remote Matrix

Remote Matrix refers to Matrix running in any modern web browser that is not located on the target system.

The URL for Remote Matrix is http://<target system’s IP address>

You can find the target’s IP address in local Matrix by clicking the Settings icon and then the Network Settings icon. Alternatively, in a terminal logged in to the target system, enter the following command:

ifconfig

In the output, look in the section that starts with eth0. The IP address appears right after “inet addr”. Use this IP address for Remote Matrix.
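If you need the Remote Matrix URL from a script, you can automate the lookup instead of reading the output yourself. The following minimal POSIX shell sketch assumes `ifconfig` and `awk` are available on the target; BusyBox provides both. `parse_inet_addr` and `remote_matrix_url` are hypothetical helper names and are not part of the SDK. The parser accepts the `inet addr:192.168.1.10` form described above and the `inet 192.168.1.10` form printed by newer ifconfig builds.

```shell
# Print the first IPv4 address found in ifconfig output read from stdin.
# Handles "inet addr:192.168.1.10" (BusyBox / older net-tools) and
# "inet 192.168.1.10" (newer net-tools). "inet6" lines are ignored.
parse_inet_addr() {
    awk '
        $1 == "inet" {
            addr = $2
            sub(/^addr:/, "", addr)   # drop the "addr:" prefix if present
            print addr
            exit
        }
    '
}

# Print the Remote Matrix URL for an interface (eth0 by default).
remote_matrix_url() {
    iface=${1:-eth0}
    addr=$(ifconfig "$iface" 2>/dev/null | parse_inet_addr)
    if [ -z "$addr" ]; then
        echo "no IPv4 address found on $iface" >&2
        return 1
    fi
    echo "http://$addr"
}
```

For example, running `remote_matrix_url eth0` on the target prints the URL to open in your browser. It returns a non-zero status if eth0 has no IPv4 address.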

With Remote Matrix you can interact with Matrix from your PC, cellphone, tablet, or any device with a modern web browser. You can launch text-based applications or scripts and have their output streamed back to your web browser. GUI applications launched from Matrix still appear on the display device connected to the target system.

Matrix Project Webpage

The official website for Matrix is located at **gforge.ti.com/gf/project/matrix-gui-v2/**. Post any comments or bug reports for Matrix there.

How to Use the Matrix

Matrix is based on HTML5 and is designed to be easily customizable. All applications and submenus for Matrix can be found in the target system’s /usr/share/matrix-gui-2.0/apps/ directory. Matrix uses the .desktop standard, along with some additional parameters, so that applications and directories can easily be added, modified, or removed.

Matrix Components

Below is a summary of all the Matrix web pages:

- Contains all the directories or applications that belong to each directory level.

Application Description

- Optional and associated with a particular application.

- Provides additional information about the application that may not be obvious.

- Displayed when the associated application icon is pressed.

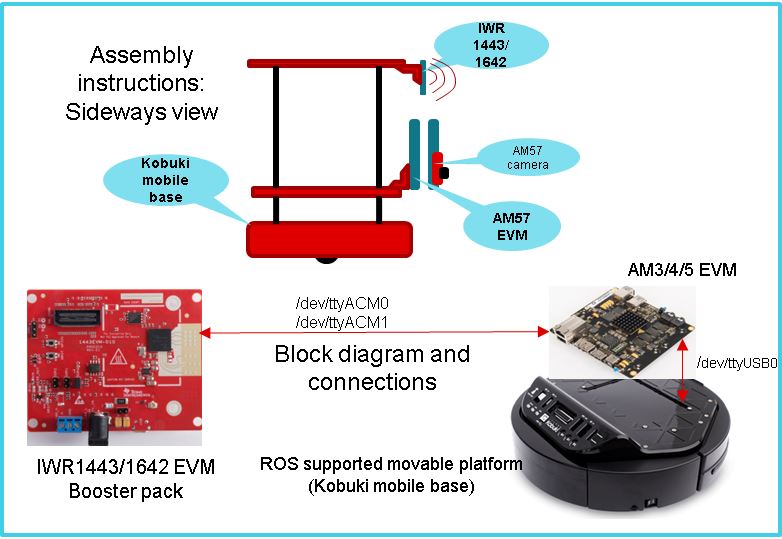

Example Application Description Page

Below is an example application description page. Description pages can be used to add additional information that may not be obvious.

Coming Soon Page

- Displayed for Matrix directories that do not contain any applications.

Application/Script Execution Page

- For console-based applications, displays the text output of the application.

Icons

- 96x96 PNG image files associated with a submenu or an application.

- Can be reused by many applications.
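Icons are expected to be 96x96 PNG files, so checking a new icon before copying it to the target can save a refresh cycle. This minimal POSIX shell sketch uses only `od` and `awk`. `png_size` and `check_matrix_icon` are hypothetical helpers. The check relies on the PNG file layout, not on anything Matrix provides: every PNG starts with an 8-byte signature followed by the IHDR chunk, and the image width and height are 4-byte big-endian integers at byte offsets 16 and 20.

```shell
# Print "WIDTHxHEIGHT" for a PNG file; return non-zero if it is not a PNG.
png_size() {
    # Dump the first 24 bytes (signature, IHDR length/type, width, height)
    # as unsigned decimal values and decode them with awk.
    od -An -tu1 -N24 "$1" | awk '
        { for (i = 1; i <= NF; i++) b[n++] = $i }
        END {
            if (n < 24) exit 1
            # Every PNG starts with the bytes 137 80 78 71 13 10 26 10.
            split("137 80 78 71 13 10 26 10", sig, " ")
            for (i = 0; i < 8; i++) if (b[i] + 0 != sig[i + 1] + 0) exit 1
            # Width and height: big-endian 32-bit values at offsets 16 and 20.
            w = ((b[16] * 256 + b[17]) * 256 + b[18]) * 256 + b[19]
            h = ((b[20] * 256 + b[21]) * 256 + b[22]) * 256 + b[23]
            printf "%dx%d\n", w, h
        }'
}

# Succeed only for a 96x96 PNG, the icon size Matrix expects.
check_matrix_icon() {
    size=$(png_size "$1") || { echo "$1: not a PNG file" >&2; return 1; }
    if [ "$size" != "96x96" ]; then
        echo "$1: icon is $size, expected 96x96" >&2
        return 1
    fi
}
```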

Applications

- Matrix can launch any application.

- Local Matrix uses the graphics display layer. If a launched application also uses the graphics display layer, the two will conflict.

Updating Matrix

Matrix 2 uses a caching system that caches the information read from the .desktop files as well as the HTML generated by the various PHP pages. While this provides a substantial performance boost, developers must be aware that any change to the Matrix apps folder (adding, deleting, or modifying files) can cause problems in Matrix. To update Matrix with the latest information, you must clear Matrix’s caches.

Automatically Clearing Matrix Cache

The simplest way to clear Matrix’s cache is to use the Refresh Matrix application in Matrix’s Settings submenu. Running this application makes Matrix clear all the cached files and regenerate the .desktop cache file. Once the application finishes, Matrix is updated with the latest information from the apps folder.

Manually Clearing Matrix Cache

Matrix’s caching system consists of one file and one directory. Matrix’s root directory contains a file called json.txt. This JSON file contains information gathered from all the .desktop files in the apps directory, and it is generated by executing generate.php.

Matrix’s root directory also contains a folder called cache. This folder contains all of the HTML files cached from the various dynamic PHP webpages.

To clear Matrix’s caches, perform the following two steps:

1. Execute the generate.php file.

In a terminal on the target system, change to Matrix’s root directory and run generate.php:

cd /usr/share/matrix-gui-2.0

php generate.php

or

In a browser, enter the following URL, replacing <target ip> with the IP address of the target system:

http://<target ip>:80/generate.php

Viewing generate.php in the browser displays a blank page. This page produces no visual output.

2. Clear the files in Matrix’s cache folder by entering the following command:

rm -rf /usr/share/matrix-gui-2.0/cache/*

Once the above steps are completed, Matrix will be updated.
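The two manual steps can be combined into one function. This is a convenience sketch under stated assumptions, not an SDK tool. `clear_matrix_cache` is a hypothetical name. `MATRIX_ROOT` defaults to /usr/share/matrix-gui-2.0, the directory that holds generate.php and the cache folder. `PHP` lets you substitute a different PHP interpreter command. The function runs generate.php from Matrix’s root directory and then empties the cache folder using an absolute path.

```shell
# Hypothetical helper: MATRIX_ROOT and PHP are overridable assumptions.
clear_matrix_cache() {
    root=${MATRIX_ROOT:-/usr/share/matrix-gui-2.0}
    php_cmd=${PHP:-php}

    # Step 1: regenerate json.txt by running generate.php from Matrix's root.
    if ! (cd "$root" && "$php_cmd" generate.php); then
        echo "generate.php failed; cache left untouched" >&2
        return 1
    fi

    # Step 2: delete the cached HTML pages. Using the absolute path means a
    # failed "cd" can never make rm delete files in some other directory.
    rm -rf "$root/cache/"*
}
```

If generate.php fails, the function stops before step 2 and leaves the cache folder as it was. This mirrors the order of the manual procedure.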

Launching Matrix

Use the following shell script in the target’s terminal window to run Matrix as a background task:

/etc/init.d/matrix-gui-2.0 start

This script ensures that the touchscreen has been calibrated and that the Qt Window server is running.

Alternatively, Matrix can be launched manually with this full syntax:

matrix_browser -qws http://localhost:80

The “-qws” parameter is required to start the Qt window server if this is the first (or only) Qt application running on the system.

The last argument is the URL that the application’s web browser opens. http://localhost:80 points to the web server on the target system that hosts Matrix.

Matrix Startup Debug

The following topics cover debugging Matrix issues at startup and disabling Matrix at startup.

Touchscreen not working

Please see this wiki page to recalibrate the touch screen: **How to Recalibrate the Touchscreen**

Matrix is running but I don’t want it running

- Exit Matrix by going to the Settings submenu and running the Exit Matrix application. Note that exiting Matrix only shuts down local Matrix. Remote Matrix can still be used.

- Or, if the touchscreen is not working, type the following from the console:

/etc/init.d/matrix-gui-2.0 stop

I don’t want Matrix to run on boot up

From the console type the following commands:

cd /etc/rc5.d

mv S97matrix-gui-2.0 K97matrix-gui-2.0
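You can wrap the rename above in a function so that autostart is easy to turn back on later. This minimal sketch assumes the SysV-style /etc/rc5.d layout shown above. `matrix_autostart` and the `RC_DIR` override are hypothetical names. Renaming K97 back to S97 to re-enable autostart is the inverse of the documented step; it is not a separately documented procedure.

```shell
# Hypothetical helper: "matrix_autostart off" renames S97 -> K97 (Matrix is
# no longer started when entering runlevel 5), "matrix_autostart on" reverses it.
link_exists() {
    # A dangling symlink fails -e, so also test -L.
    [ -e "$1" ] || [ -L "$1" ]
}

matrix_autostart() {
    rc_dir=${RC_DIR:-/etc/rc5.d}
    start_link="$rc_dir/S97matrix-gui-2.0"
    stop_link="$rc_dir/K97matrix-gui-2.0"
    case "$1" in
        off) from=$start_link; to=$stop_link ;;
        on)  from=$stop_link;  to=$start_link ;;
        *)   echo "usage: matrix_autostart on|off" >&2; return 2 ;;
    esac
    if link_exists "$to"; then
        echo "Matrix autostart is already $1" >&2
        return 0
    fi
    if ! link_exists "$from"; then
        echo "$from not found" >&2
        return 1
    fi
    mv "$from" "$to"    # renames the link itself, not its target
}
```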

How to Enable Mouse Instead of Touchscreen for the Matrix

To enable a mouse, see **How to Enable Mouse for the Matrix GUI**.

How to Switch Display from LCD to DVI out for the Matrix

To switch the display output, see **How to Switch Display Output for the Matrix GUI**.

Adding a New Application/Directory to Matrix

Below are step-by-step instructions.

- Create a new folder on your target file system at /usr/share/matrix-gui-2.0/apps/. The name should describe the application or directory, and it must differ from any existing folder at that location.

- Create a .desktop file based on the parameters discussed below. The .desktop filename should match the name of the newly created folder, must not contain white space, and must have the .desktop suffix. Set the .desktop parameters according to whether you are adding a new application or a new directory to Matrix; in particular, the Type field must be set accordingly.

- Set the Icon field in the .desktop file to reference any existing icon in the /usr/share/matrix-gui-2.0 directory or its subdirectories. Alternatively, add a new 96x96 PNG image to your newly created folder.

- Optionally, for applications, add an HTML file containing the application description to your newly created directory. If you add a description page, set the X-Matrix-Description field in the .desktop file to its path.

- Refresh Matrix using the application “Refresh Matrix” located in the Settings submenu.

Run your new application from Matrix. For reference, see the **Examples** below.

Blank template icons for Matrix can be found here: **gforge.ti.com/gf/download/frsrelease/712/5167/blank_icons_1.1.tar.gz**
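Steps 1 to 4 can be scripted when you create several entries. In the following minimal sketch, `new_matrix_app`, `APPS_DIR`, and the `example-icon.png` file name are illustrative assumptions. The function refuses folder names that contain white space or that already exist, and it writes a console-type application .desktop file modeled on the Creating a New Application example. You still need to copy the icon into the folder and then run Refresh Matrix.

```shell
# Hypothetical helper: create <apps>/<name>/ and <name>.desktop for a
# console application. APPS_DIR and the icon file name are assumptions.
new_matrix_app() {
    name=$1       # folder name, reused as the .desktop file name
    title=$2      # value of the Name field shown under the icon
    exec_cmd=$3   # value of the Exec field
    apps_dir=${APPS_DIR:-/usr/share/matrix-gui-2.0/apps}

    # The .desktop file name must not contain white space.
    case "$name" in
        ""|*[[:space:]]*|*/*)
            echo "invalid name: '$name'" >&2
            return 1 ;;
    esac
    # The folder name must differ from any existing folder.
    if [ -e "$apps_dir/$name" ]; then
        echo "$apps_dir/$name already exists" >&2
        return 1
    fi

    mkdir -p "$apps_dir/$name" || return 1
    cat > "$apps_dir/$name/$name.desktop" <<EOF
#!/usr/bin/env xdg-open
[Desktop Entry]
Name=$title
Icon=$apps_dir/$name/example-icon.png
Exec=$exec_cmd
Type=Application
ProgramType=console
EOF
}
```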

Creating the .Desktop File

The .desktop file is based on the standard specified at **standards.freedesktop.org/desktop-entry-spec/latest/**. Additional fields unique to Matrix have been added.

Format for each parameter:

<Field>=<Value>

The fields and values are case sensitive.
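Fields and values are case sensitive, so a quick way to check what Matrix will read is to extract a field exactly as written. This POSIX shell sketch is illustrative only; `desktop_field` is a hypothetical helper. It matches `<Field>=` literally, so `name=` does not match `Name=`. It also rejects files with Windows (CRLF) line endings, because .desktop files written on Windows must use Unix-style EOL characters.

```shell
# Hypothetical helper: print the value of one <Field>=<Value> entry.
# Returns 1 if the field is absent, 2 if the file has CRLF line endings.
desktop_field() {
    field=$1
    file=$2
    # A carriage return anywhere means the file was saved with Windows EOLs.
    if grep -q "$(printf '\r')" "$file"; then
        echo "$file: contains Windows (CRLF) line endings" >&2
        return 2
    fi
    # index() compares literally and case-sensitively (no regex, no folding).
    awk -v key="$field" '
        index($0, key "=") == 1 { print substr($0, length(key) + 2); found = 1; exit }
        END { exit !found }
    ' "$file"
}
```

For example, `desktop_field Type hello_world_dir.desktop` prints `Directory` for the directory example that follows.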

Examples

Creating a New Matrix Directory

All the files discussed below, including the image, are available in the following file: **Ex_directory.tar.gz**

Create a directory called ex_directory

Create a new file named hello_world_dir.desktop

Fill the contents of the file with the text shown below:

#!/usr/bin/env xdg-open

[Desktop Entry]

Name=Ex Demo

Icon=/usr/share/matrix-gui-2.0/apps/ex_directory/example-icon.png

Type=Directory

X-MATRIX-CategoryTarget=ex_dir

X-MATRIX-DisplayPriority=5

Because Type=Directory, the .desktop file above tells Matrix to create a new directory. The directory is named “Ex Demo” and uses the icon in the ex_directory directory. It is displayed in the Matrix Main Menu as the 5th icon, as long as no other .desktop file specifies X-MATRIX-DisplayPriority=5. Any application that should be displayed in this directory must have the Categories parameter in its .desktop file set to ex_dir.

- Note that Linux sometimes renames the .desktop file to the name specified in the Name field. If this occurs, there is no need to force it to use the file name you specified.

- If you are writing these files in Windows, be sure to use Unix-style EOL characters.

Now move the .desktop file and image into the ex_directory directory that was created.

Moving the Newly Created Directory to the Target’s File System

Open the Linux terminal and go to the directory that contains the ex_directory.

Enter the following command to copy ex_directory to the /usr/share/matrix-gui-2.0/apps/ directory in the target’s file system. Depending on the targetNFS directory permissions, you might have to prefix the cp command with sudo.

host $ cp -r ex_directory ~/ti-processor-sdk-linux-[platformName]-evm-xx.xx.xx.xx/targetNFS/usr/share/matrix-gui-2.0/apps/

If you are not using NFS, copy ex_directory to the /usr/share/matrix-gui-2.0/apps/ directory in the target’s file system.

Updating Matrix

In either local or remote Matrix, go to the Settings directory and run the Refresh Matrix application. This deletes all the cache files that Matrix generates and regenerates all the needed files, picking up any updates you have made.

If you now go back to Matrix’s Main Menu, the 5th icon should be the icon for your Ex Demo directory.

Creating a New Application

This example is assuming that you completed the **Creating a New Matrix Directory** example.

All the files discussed below, including the image, are available in the following file: **Ex_application.tar.gz**

Create a new directory called ex_application

Create a file named test.desktop

Fill the contents of the file with the below text:

#!/usr/bin/env xdg-open

[Desktop Entry]

Name=Test App

Icon=/usr/share/matrix-gui-2.0/apps/ex_application/example-icon.png

Exec=/usr/share/matrix-gui-2.0/apps/ex_application/test_script.sh

Type=Application

ProgramType=console

Categories=ex_dir

X-Matrix-Description=/usr/share/matrix-gui-2.0/apps/ex_application/app_desc.html

X-Matrix-Lock=test_app_lock

Type=Application tells Matrix that this .desktop file is for an application. The application is named “Test App”, and its icon, example-icon.png, is in the ex_application directory. The command to execute is a shell script, also located in ex_application. The script simply prints text to the terminal, so ProgramType is set to console. The application should be added to the Ex Demo directory from the previous example, so Categories is set to ex_dir, the same value as X-MATRIX-CategoryTarget. Alternatively, you could remove the Categories field to display the application in Matrix’s Main Menu. The application also has a description page, an HTML file located in the ex_application directory. Finally, a lock is used, so no two applications with the same lock can run simultaneously, including two instances of this application.

Create a file named test_script.sh with the following contents:

#!/bin/sh

echo "You are now running your first newly created application in Matrix"

echo "I am about to go to sleep for 30 seconds so you can test out the lock feature if you want"

sleep 30

echo "I am finally awake!"

Then make the script executable:

host $ chmod 755 test_script.sh

Create a new file called app_desc.html

<h1>Test Application Overview</h1>

<h2>Purpose:</h2>

<p>The purpose of this application is to demonstrate the ease in adding a new application to Matrix.</p>

Now move the .desktop file, the script file, the PNG image from the Ex_application.tar.gz file, and the HTML file into the ex_application folder.

Moving the Newly Created Directory to the Target System

Open the Linux terminal and go to the directory that contains the ex_application directory.

Enter the following command to copy the ex_application directory to /usr/share/matrix-gui-2.0/apps/ in the target’s file system. Depending on the targetNFS directory permissions, you might have to prefix the cp command with sudo.

host $ cp -r ex_application ~/ti-processor-sdk-linux-[platformName]-evm-xx.xx.xx.xx/targetNFS/usr/share/matrix-gui-2.0/apps/

If you are not using NFS but an SD card instead, copy ex_application into the /usr/share/matrix-gui-2.0/apps/ directory in the target’s file system.

Updating Matrix

In either local or remote Matrix, go to the Settings directory and run the Refresh Matrix application. This deletes all the cache files that Matrix generates and regenerates all the needed files, picking up any updates you have made.

If you now go back to Matrix’s Main Menu and click the Ex Demo directory, you should see your newly created application. Click the application’s icon to see its description page, then click the Run button to execute the application. If you try to run two instances of this application simultaneously through local and remote Matrix, you will get a message saying that the program can’t run because a lock exists. Because X-Matrix-Lock is set to test_app_lock, Matrix does not run two programs that share this lock at the same time. You can run the application again once the previous instance has finished.

You have just successfully added a new application to Matrix using all the possible parameters!

5.2. Sub-system Demos

5.2.1. Power Management

Overview

This page is the top-level page for Power Management topics related to Sitara devices.

Please follow the appropriate link below to find information specific to your device.

Supported Devices

AM335x Power Management User Guide

- Note: BeagleBone users click here.

5.2.1.1. AM335x Power Management User Guide

Overview

This article describes the example applications on the Power page of the Matrix application that comes with the Sitara SDK. This page is labeled “Power” in the top-level Matrix GUI. Depending on screen size, the location of the Power icon in the main Matrix app list may differ from what is shown here. (Screenshots from SDK 06.00)

Power Examples

Several power examples let users dynamically switch the CPU clock frequency. The frequencies shown are those available on your system. After you make a selection, a confirmation page is displayed, and its “BogoMIPS” readout confirms the new clock frequency. Note that the readout differs slightly from the intended frequency. For example, if you select 1GHz, you may see a number like 998.84 (in MHz). This is normal. After reviewing the confirmation page, press the Close button to return to normal Matrix operation.

Other power examples are provided that may be useful for power management developers and power users. They are included in Matrix partly to make users aware that these valuable debugging tools exist, and partly for the convenience of running each application from the GUI. In-depth descriptions of each application follow. Similar descriptions are also available on description pages in Matrix, which are displayed when you click the button. Where appropriate, the documentation points out the corresponding command line operation.

The Suspend/Resume button demonstrates the ability to put the machine into a suspended state. See below for complete documentation of this feature.

Note that the order of applications on your screen may differ from the picture below, because of different device screen sizes and differences between Matrix versions. The screenshot is from SDK 06.00.

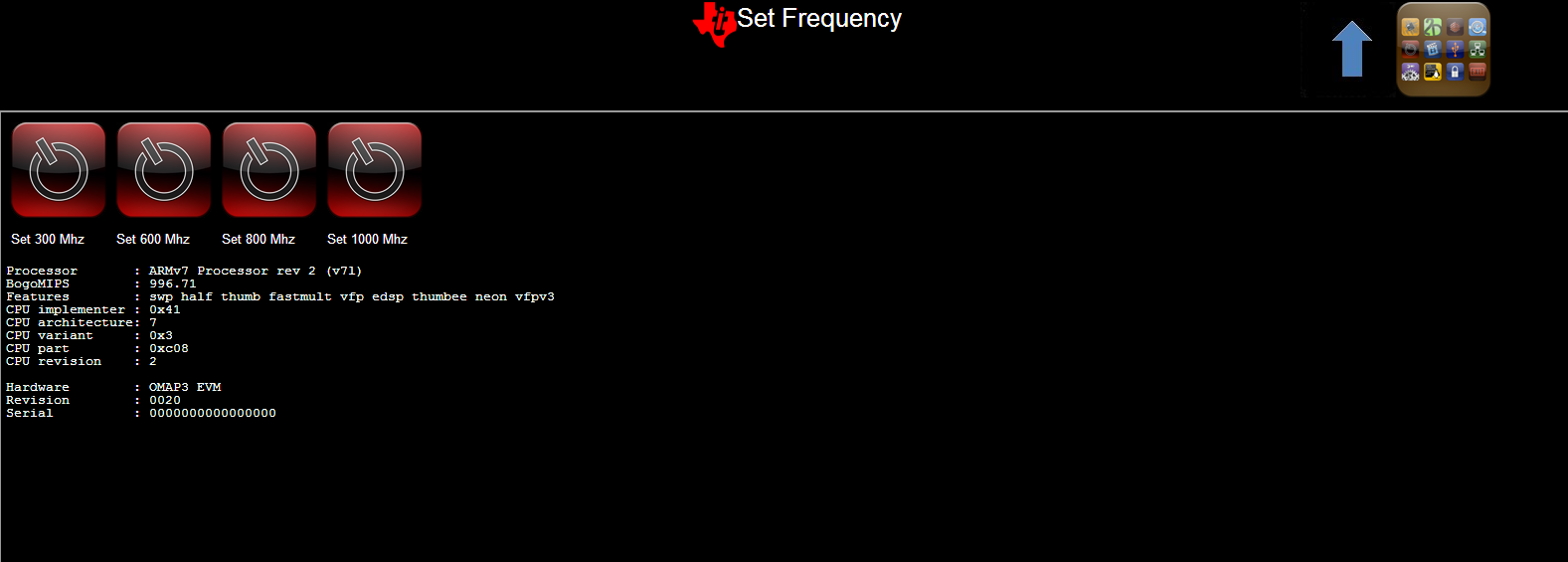

Set Frequency

This command opens another screen from which you choose one of the frequencies available on the board. Here is a picture of the screen you will see:

The following are the Linux command line equivalents for selecting the operating frequency. Note that changing the frequency also changes the MPU voltage accordingly. The commands are part of the “cpufreq” kernel interface for selecting the OPP (operating performance point). Cpufreq can save power by scaling voltage and frequency based on the current CPU load.

(command line equivalent)

cat /sys/devices/system/cpu/cpu0/cpufreq/scaling_available_frequencies

(view options, select one for next step)

echo <selected frequency, in kHz> > /sys/devices/system/cpu/cpu0/cpufreq/scaling_setspeed

cat /proc/cpuinfo
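The same steps can be wrapped in a small function that converts MHz to the kHz units used by cpufreq and refuses frequencies that are not listed as available. This is a sketch under stated assumptions: `set_freq_mhz`, `list_freqs_khz`, and the `CPUFREQ_DIR` override are hypothetical names. The function selects the `userspace` governor first, because the kernel only accepts writes to scaling_setspeed under that governor. The Matrix application may handle the governor differently.

```shell
# Hypothetical helpers; CPUFREQ_DIR defaults to cpu0's cpufreq directory.
list_freqs_khz() {
    dir=${CPUFREQ_DIR:-/sys/devices/system/cpu/cpu0/cpufreq}
    cat "$dir/scaling_available_frequencies"
}

# Usage: set_freq_mhz 300   (writes 300000 kHz to scaling_setspeed)
set_freq_mhz() {
    dir=${CPUFREQ_DIR:-/sys/devices/system/cpu/cpu0/cpufreq}
    case "$1" in
        ''|*[!0-9]*) echo "usage: set_freq_mhz <MHz>" >&2; return 2 ;;
    esac
    khz=$(( $1 * 1000 ))    # cpufreq sysfs files use kHz
    # Only accept a frequency listed in scaling_available_frequencies.
    avail=" $(list_freqs_khz | tr '\n' ' ') "
    case "$avail" in
        *" $khz "*) ;;
        *) echo "$1 MHz is not an available frequency" >&2; return 1 ;;
    esac
    # scaling_setspeed is only honored by the "userspace" governor.
    echo userspace > "$dir/scaling_governor" || return 1
    echo "$khz" > "$dir/scaling_setspeed" || return 1
    # Report the frequency the CPU is now running at (kHz).
    cat "$dir/scaling_cur_freq"
}
```

For example, `set_freq_mhz 300` writes 300000 to scaling_setspeed and then prints scaling_cur_freq. This gives a direct kHz readout in addition to the BogoMIPS figure in /proc/cpuinfo.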

Suspend/Resume

(command line equivalent)

mkdir /debug

mount -t debugfs debugfs /debug

echo mem > /sys/power/state
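A guarded version of the final step checks that the kernel actually offers the requested state before writing it. In this minimal sketch, `enter_sleep_state` and the `POWER_STATE` override are hypothetical. The function defaults to `mem`, as above, and accepts any other state name the kernel lists in /sys/power/state (for example `standby`). It covers only the write to /sys/power/state, not the debugfs mount shown above.

```shell
# Hypothetical helper: enter_sleep_state [state]   (default: mem)
enter_sleep_state() {
    mode=${1:-mem}
    state_file=${POWER_STATE:-/sys/power/state}
    # /sys/power/state lists the supported states, e.g. "freeze standby mem".
    case " $(cat "$state_file") " in
        *" $mode "*) ;;
        *) echo "sleep state '$mode' is not supported by this kernel" >&2; return 1 ;;
    esac
    sync                            # flush file system buffers first
    echo "$mode" > "$state_file"    # on real hardware this returns after resume
}
```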

SmartReflex

SmartReflex is an active power management technique which optimizes voltage based on silicon process (“hot” vs. “cold” silicon), temperature, and silicon degradation effects. In most cases, SmartReflex provides significant power savings by lowering operating voltage.

On AM335x, SmartReflex is enabled by default in Sitara SDK releases since 05.05.00.00. Please note that the kernel configuration menu presents two options: “AM33XX SmartReflex support” and “SmartReflex support”. For AM33XX SmartReflex, you must select “AM33XX SmartReflex support”, and ensure that the “SmartReflex support” option is disabled. The latter option is intended for AM37x and OMAP3 class devices.

The SmartReflex driver requires the use of either the TPS65217 or TPS65910 PMIC. Furthermore, SmartReflex is currently supported only on the ZCZ package. Please note that SmartReflex may not operate on AM335x sample devices which were not programmed with voltage targets. To disable SmartReflex, type the following commands at the target terminal:

mkdir /debug

mount -t debugfs debugfs /debug

cd /debug/smartreflex

(NOTE: If you have early silicon, you may not see the 'smartreflex' node. In this case SmartReflex operation is not possible.)

echo 0 > autocomp

(Performing "echo 1 > autocomp" will re-enable SmartReflex)
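The SmartReflex commands can be wrapped so that the missing-node case on early silicon produces a clear error. This sketch assumes the /debug mount point and the smartreflex/autocomp path used above. `smartreflex_autocomp` and the `DEBUGFS` override are hypothetical names.

```shell
# Hypothetical helper: "smartreflex_autocomp 0" disables SmartReflex,
# "smartreflex_autocomp 1" re-enables it. DEBUGFS defaults to /debug.
smartreflex_autocomp() {
    debugfs=${DEBUGFS:-/debug}
    case "$1" in
        0|1) ;;
        *) echo "usage: smartreflex_autocomp 0|1" >&2; return 2 ;;
    esac
    # Mount debugfs only if no smartreflex directory is visible and
    # debugfs is not already mounted at $debugfs.
    if [ ! -d "$debugfs/smartreflex" ] &&
       ! grep -qs " $debugfs debugfs " /proc/mounts; then
        mkdir -p "$debugfs" && mount -t debugfs debugfs "$debugfs" || return 1
    fi
    # Early silicon has no smartreflex node, so SmartReflex cannot be used.
    if [ ! -e "$debugfs/smartreflex/autocomp" ]; then
        echo "no smartreflex/autocomp node: SmartReflex not available" >&2
        return 1
    fi
    echo "$1" > "$debugfs/smartreflex/autocomp"
}
```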

On AM335x, to compile SmartReflex support out of the kernel, follow this procedure to modify the kernel configuration:

cd <kernel source directory>

make ARCH=arm CROSS_COMPILE=arm-linux-gnueabihf- menuconfig

<the menuconfig interface should appear>

Select "System Type"

Select "TI OMAP Common Features"

Deselect "AM33xx SmartReflex Support"

Select "Exit" until you are prompted to save the configuration changes, and save them.

Rebuild the kernel.

Dynamic Frequency Scaling

This feature, which can be enabled by applying a patch to the SDK, allows the frequency to be scaled independently of the voltage. It is also referred to as DFS (DVFS without the ‘V’).

Media:0001-Introduce-dynamic-frequency-scaling.patch

Discussion

Certain systems cannot scale voltage, either because they use a fixed voltage regulator or because they use the ZCE package of AM335x. On such systems the power savings enabled by DVFS are lost, because the current version of the omap-cpufreq driver requires a valid MPU voltage regulator in order to operate. The purpose of this DFS feature is to enable additional power savings on systems with these limitations.

When using the ZCE package of AM335x, the CORE and MPU voltage domains are tied together. Because of Advisory 1.0.22, the CORE frequency/voltage must not be modified dynamically, since the EMIF cannot support it. However, to achieve maximum power savings, it may still be desirable to use a PMIC that supports dynamic voltage scaling, in order to use Adaptive Voltage Scaling (also known as SmartReflex or AVS). This implementation of DFS does not affect the ability of AVS to optimize the voltage and save additional power.

Using the patch

The patch presented here has been developed for and tested on the SDK 05.07. It modifies the omap-cpufreq driver to operate without requiring a valid MPU voltage regulator. From a user perspective, changing frequency via cpufreq is accomplished with exactly the same commands as typical DVFS. For example, switching to 300 MHz is accomplished with the following command:

echo 300000 > /sys/devices/system/cpu/cpu0/cpufreq/scaling_setspeed

After applying the patch, the user must modify the kernel defconfig in order to enable the DFS feature. You should also configure the “Maximum supported DFS voltage” (shown below) to whatever the fixed voltage level is for your system, in microvolts. For example, use the value 1100000 to signify 1.1V. The software will use the voltage level that you specify to automatically disable any Operating Performance Points (OPPs) which have voltages above that level.
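The sketch below makes the effect of the “Maximum supported DFS voltage” setting concrete by reproducing the described rule: any OPP whose voltage is above the configured maximum is disabled. It is an illustration only, not code from the DFS patch. `usable_opps` and `mv_to_uv` are hypothetical helpers. The OPP table is supplied on stdin because the actual AM335x OPP voltages are not listed here.

```shell
# Illustration only: read "<frequency_kHz> <voltage_uV>" pairs on stdin and
# print the frequencies whose voltage does not exceed the maximum DFS
# voltage given in microvolts, i.e. the OPPs that would stay enabled.
usable_opps() {
    awk -v max="$1" '$2 + 0 <= max + 0 { print $1 }'
}

# Convert millivolts to the microvolt value the config option expects
# (for example 1100 mV -> 1100000).
mv_to_uv() {
    echo $(( $1 * 1000 ))
}
```

For example, piping a table of `<kHz> <uV>` lines into `usable_opps "$(mv_to_uv 1100)"` prints only the frequencies whose voltage is at most 1.1V.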

On AM335x, first apply the patch, then follow this procedure to modify the kernel configuration:

cd <kernel source directory>

make ARCH=arm CROSS_COMPILE=arm-linux-gnueabihf- menuconfig

<the menuconfig interface should appear>

Select "System Type"

Select "TI OMAP Common Features"

Select "Dynamic Frequency Scaling"

Configure "Maximum supported DFS voltage (in microvolts)" (default is 1100000, or 1.1V)

Select "Exit" until you are prompted to save the configuration changes, and save them.

Rebuild the kernel.

Power Savings

- Tested on a rev 1.2 EVM, running Linux at idle.

- The delta between power consumption at 300MHz and 600MHz, with voltage unchanged, is approximately 75mW.

Static CORE OPP 50

Configuring the AM335x system to CORE OPP50 frequency and voltage is an advanced power savings method that can be used, provided that you understand the tradeoffs involved.

This patch, which was developed against the u-boot source tree from the SDK 05.07, configures the bootloader to statically program the system to CORE OPP50 voltage (0.95V) and frequencies. It also configures the MPU to OPP50 voltage (0.95V) and frequency (300MHz). DDR2 is configured with optimized timings to run at 125MHz.

Apply the following patch to your u-boot source tree and rebuild both MLO and u-boot.img. (Refer to AM335x_U-Boot_User’s_Guide#Building_U-Boot)

Media:0001-Static-CORE-OPP50-w-DDR2-125MHz-MPU-300MHz.patch

Caveats

- According to section 5.5.1 of the AM335x datasheet, operation of the Ethernet MAC and switch (CPSW) is NOT supported for CORE OPP50.

- Note that MPU OPP50 operation is not supported for the 1.0 silicon revision (silicon errata Advisory 1.0.15).

- Also be aware of Advisory 1.0.24, which states that boot may not be reliable because OPP100 frequencies are used by ROM at OPP50 voltages.

- DDR2 memory must be used (as on the AM335x EVM up to rev 1.2). DDR2 memory timings must be modified to operate at 125MHz.

Power Savings

- On an EVM (rev 1.2), active power consumption when Linux is idle for CORE and MPU rails was measured at 150mW. Using the out-of-the-box SDK at OPP100 (MPU and CORE), the comparable figure is 334mW.

- Further savings are possible by disabling the Ethernet drivers in the Linux defconfig. Refer to AM335x_CPSW_(Ethernet)_Driver’s_Guide#Driver_Configuration and disable “Ethernet driver support” to achieve additional power savings.
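As a worked comparison of the two EVM measurements above, static CORE OPP50 reduces idle power on the CORE and MPU rails by the following amount. This is simple arithmetic on the quoted figures, not an additional measurement.

$$
P_{\text{OPP100}} - P_{\text{OPP50}} = 334\ \text{mW} - 150\ \text{mW} = 184\ \text{mW},
\qquad
\frac{184\ \text{mW}}{334\ \text{mW}} \approx 55\%
$$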

Power Management Reference

Refer to this page for Linux specific information on AM335x devices.

The Power Estimation Tool (PET) provides users the ability to gain insight into the power consumption of select Sitara processors.

This document discusses the power consumption for common system application usage scenarios for the AM335x ARM® Cortex™-A8 Microprocessors (MPUs).

Standby for AM335x is an inactive (system suspended) power-saving mode. It achieves smaller power savings than DeepSleep0 mode, but with lower resume latency and additional wake-up sources.

5.2.2. ARM Multimedia Users Guide

Overview

Multimedia codecs on ARM-based platforms can be optimized for better performance using the tightly coupled **Neon** co-processor. Neon has its own independent pipeline and register file. Neon technology is a 128-bit SIMD architecture extension for ARM Cortex-A series processors, designed to accelerate multimedia applications.

Supported Platforms

- AM37x

- Beagleboard-xM

- AM35x

- AM335x EVM

- AM437x GP EVM

- AM57xx GP EVM

Multimedia on AM57xx Processor

On the AM57xx processor, the ARM offloads H.264, VC-1, MPEG-4, MPEG-2, and MJPEG codec processing to the IVA-HD hardware accelerator. Refer to the AM57xx Multimedia Training guide to learn more about AM57xx multimedia capabilities, demos, software stack, GStreamer plugins, and pipelines. Also refer to the AM57xx Graphics Display Getting Started Guide to learn about the AM57xx graphics software architecture, demos, tools, and display applications.

Multimedia on Cortex-A8

Cortex-A8 Features and Benefits

- Supports ARMv7 with Advanced SIMD (NEON)

- Supports hierarchical cache memory

- Up to 1 MB L2 cache

- Up to 128-bit memory bandwidth

- 13-stage pipeline and enhanced branch prediction engine

- Dual-issue of instructions

Neon Features and Benefits

- Independent HW block to support advanced SIMD instructions

- Comprehensive instruction set with support of 8, 16 & 32-bit signed & unsigned data types

- 256 byte register file (dual 32x64/16x128 view) with hybrid 32/64/128 bit modes

- Large register file enables efficient data handling and minimizes memory accesses, thus enhancing data throughput

- The processor can finish its work and sleep sooner, which leads to an overall dynamic power saving

- Independent 10-stage pipeline

- Dual-issue of limited instruction pairs

- Significant code size reduction

Neon support in the open source community

NEON is currently supported in the following Open Source projects.

- ffmpeg/libav

  - LGPL multimedia framework used in many Linux distros

  - NEON video: MPEG-4 ASP, H.264 (AVC), VC-1, VP3, Theora

  - NEON audio: AAC, Vorbis, WMA

- x264 (Google Summer of Code 2009)

  - GPL H.264 encoder, e.g. for video conferencing

- BlueZ (official Linux Bluetooth protocol stack)

  - NEON SBC audio encoder

- Pixman (part of the cairo 2D graphics library)

  - Compositing/alpha blending

  - Used by X.Org, Mozilla Firefox, Fennec and WebKit browsers

  - e.g. fbCompositeSolidMask_nx8x0565neon is 8x faster using NEON

- Ubuntu 09.04 and 09.10 fully support NEON

  - NEON versions of critical shared libraries

- Android NEON optimizations

  - Skia library: S32A_D565_Opaque is 5x faster using NEON

  - Available in the Google Skia tree from 03-Aug-2009

For additional details, please refer to the **NEON - ARM website**.

SDK Example Applications

This application can be executed by selecting the “Multimedia” icon at the top-level matrix.

NOTE

The very first GStreamer launch takes some time to initialize outputs or set up decoders.

Codec portfolio

Processor SDK includes ARM-based multimedia using open source GPLv2+ FFmpeg/Libav codecs. The codec portfolio includes MPEG-4 and H.264 for video at VGA/WQVGA/480p resolution, and the AAC codec for audio. The codec portfolio for Processor SDK on the AM57xx device is listed here.

The script that launches the multimedia demo detects which display is enabled and decodes a VGA or 480p video accordingly. On the AM37x platform, the VGA clip is decoded when the LCD is enabled and the 480p clip is decoded when DVI out is enabled. Scripts in the “Settings” menu can be used to switch between these two displays.
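The display detection described above might look roughly like the sketch below. It is not the SDK's actual launch script. The omapdss sysfs layout (`displayN/enabled` and `displayN/name`), the display names `lcd` and `dvi`, and both clip paths are assumptions to check against your kernel and filesystem.

```shell
#!/usr/bin/env bash
# Sketch only: these paths and names are assumptions, not the SDK script.
DSS_ROOT="${DSS_ROOT:-/sys/devices/platform/omapdss}"   # omapdss sysfs (assumed layout)

# Print the name of the first enabled display, e.g. "lcd" or "dvi".
active_display() {
    local d
    for d in "$DSS_ROOT"/display*; do
        [ -r "$d/enabled" ] || continue
        if [ "$(cat "$d/enabled")" = "1" ]; then
            cat "$d/name"
            return 0
        fi
    done
    return 1
}

# Map the active display to a clip (hypothetical file paths).
select_clip() {
    case "$(active_display)" in
        lcd) echo "/usr/share/ti/video/demo_vga.mp4" ;;    # VGA clip for the LCD
        dvi) echo "/usr/share/ti/video/demo_480p.mp4" ;;   # 480p clip for DVI out
        *)   return 1 ;;
    esac
}

# Example:
#   filename=$(select_clip) && echo "decoding $filename"
```

The selected path can then be passed as `$filename` to any of the decode pipelines in this section.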

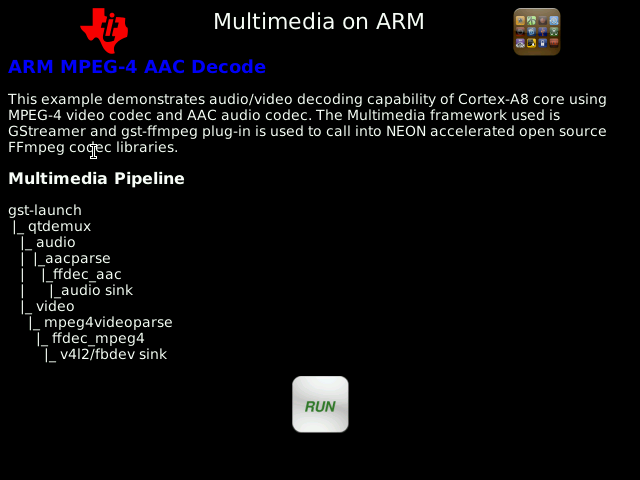

MPEG4 + AAC Decode

The MPEG-4 + AAC decode example application demonstrates use of the MPEG-4 video and AAC audio codecs, as mentioned in the description page below.

The multimedia pipeline is constructed using gst-launch. GStreamer elements such as qtdemux are used to demultiplex the A/V content. Parsers are elements with a single source pad; they can be used to cut streams into buffers and do not otherwise modify the data.

gst-launch-0.10 filesrc location=$filename ! qtdemux name=demux demux.audio_00 ! queue ! ffdec_aac ! alsasink sync=false demux.video_00 ! queue ! ffdec_mpeg4 ! ffmpegcolorspace ! fbdevsink device=/dev/fb0

“filename” is defined based on the selected display device, which could be LCD or DVI.

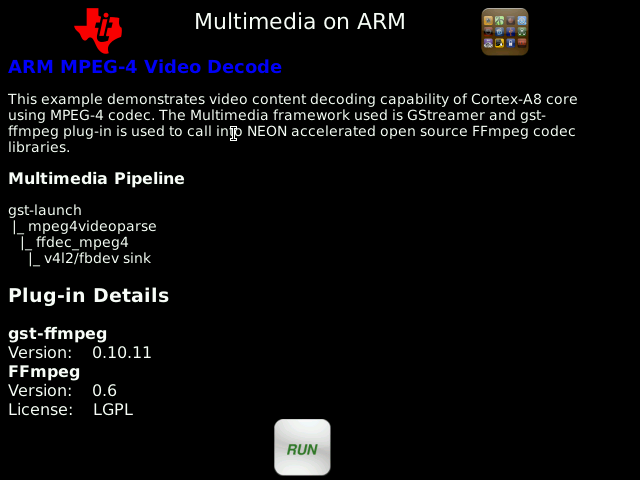

MPEG4 Decode

MPEG-4 decode example application demonstrates use of MPEG-4 video codec as mentioned in the description page below.

gst-launch-0.10 filesrc location=$filename ! mpeg4videoparse ! ffdec_mpeg4 ! ffmpegcolorspace ! fbdevsink device=/dev/fb0

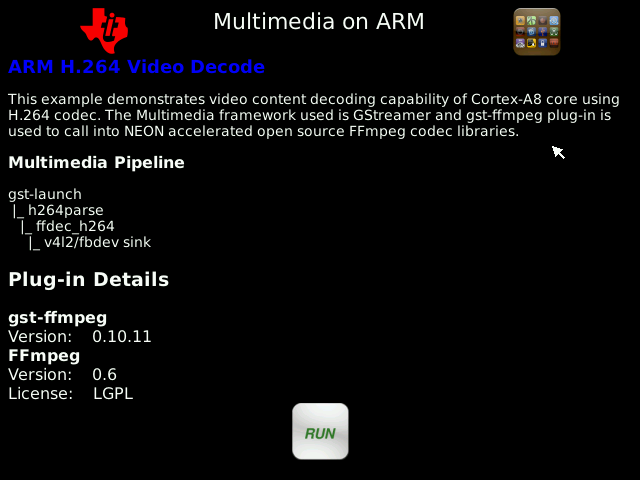

H.264 Decode

H.264 decode example application demonstrates use of H.264 video codec as mentioned in the description page below.

gst-launch-0.10 filesrc location=$filename ! h264parse ! ffdec_h264 ! ffmpegcolorspace ! fbdevsink device=/dev/fb0

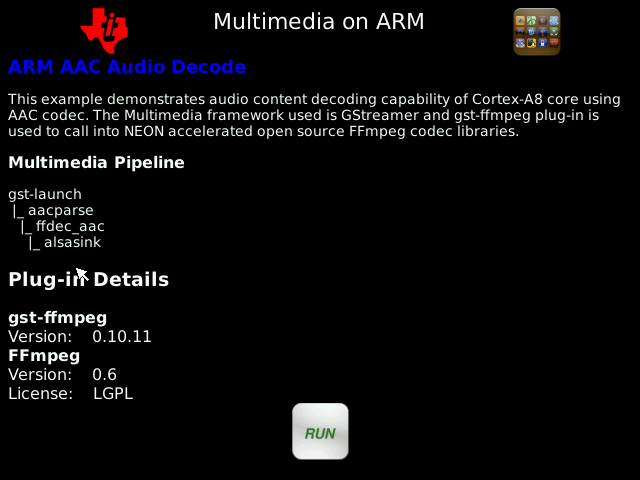

AAC Decode

The AAC decode example application demonstrates use of the AAC audio codec, as mentioned in the description page below.

gst-launch-0.10 filesrc location=$filename ! aacparse ! faad ! alsasink

Streaming

Audio/video data can be streamed from a server using souphttpsrc. For example, to stream audio content, set up an Apache server on your host machine; you can then stream the audio file HistoryOfTI.aac located in the server's files directory using the pipeline:

gst-launch souphttpsrc location=http://<ip address>/files/HistoryOfTI.aac ! aacparse ! faad ! alsasink
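A sketch of the host and target sides of this setup follows. The Apache document root `/var/www/html` is an assumption, because it varies between Ubuntu releases. `stream_aac_pipeline` is a hypothetical helper that only prints the pipeline shown above for a given server address.

```shell
#!/usr/bin/env bash
# Host side (assumption: Apache on Ubuntu, document root /var/www/html):
#   sudo mkdir -p /var/www/html/files
#   sudo cp HistoryOfTI.aac /var/www/html/files/

# Hypothetical helper: print the streaming pipeline for a given server IP.
stream_aac_pipeline() {
    local server_ip="$1" file="${2:-HistoryOfTI.aac}"
    echo "souphttpsrc location=http://${server_ip}/files/${file} ! aacparse ! faad ! alsasink"
}

# Target side (word splitting of the output into arguments is intended):
#   gst-launch $(stream_aac_pipeline 192.168.1.10)
```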

Multimedia Peripheral Examples

Examples of how to use several different multimedia peripherals can be found on the ARM Multimedia Peripheral Examples page.

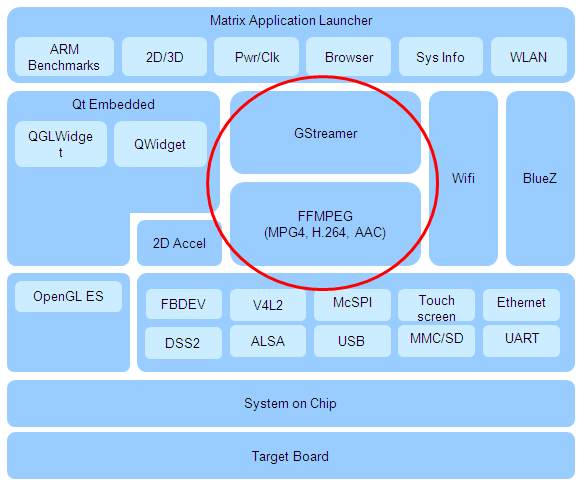

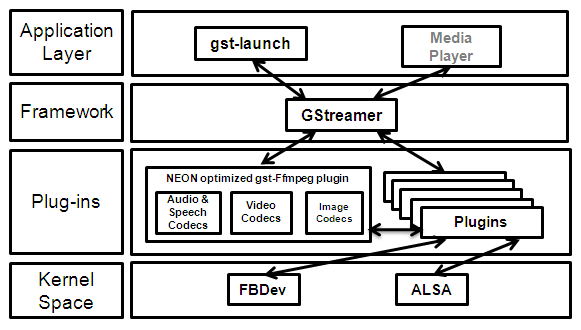

SDK Multimedia Framework

The multimedia framework for the Cortex-A8 SDK leverages the GStreamer multimedia stack with the gst-ffmpeg plug-ins to support GPLv2+ FFmpeg/libav library code.

gst-launch is used to build and run basic multimedia pipelines that demonstrate audio/video decoding.

GStreamer

- Multimedia processing library

- Provides uniform framework across platforms

- Includes parsing & A/V sync support

- Modular with flexibility to add new functionality via plugins

- Easy bindings to other frameworks

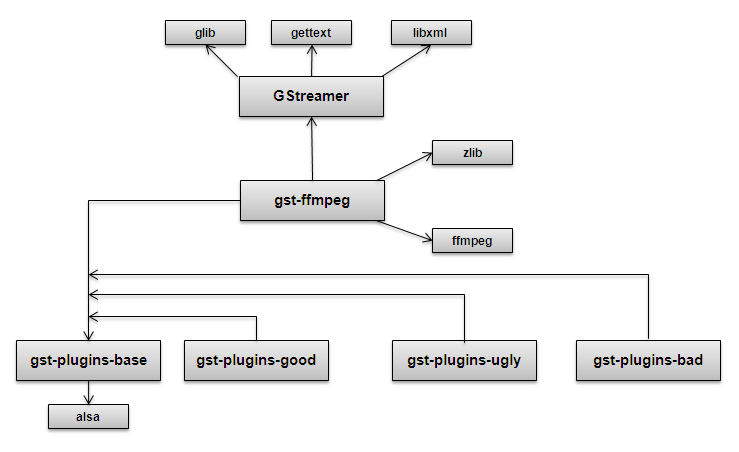

Some of the build dependencies for GStreamer are shown here:

Open Source FFmpeg Codecs

**FFmpeg** is an open source project which provides a cross platform multimedia solution.

- Free audio and video decoder/encoder code licensed under GPLv2+ (GPLv3-licensed codecs can be built separately)

- A comprehensive suite of standard compliant multimedia codecs

- Codec software package

- Codec libraries with standard C based API

- Audio/Video parsers that support popular multimedia content

- Uses SIMD/NEON instructions of the **Cortex-A8 NEON architecture**

- NEON provides a 1.6x-2.5x performance improvement on complex video codecs

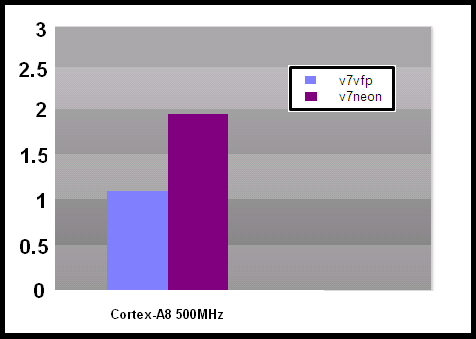

Multimedia Neon Benchmark

Test Parameters:

- Sep 21 2009 snapshot of gst-ffmpeg.org

- Real silicon measurements on an OMAP3 BeagleBoard

| Parameter | Value |

|---|---|

| Resolution | 480x270 |

| Frame Rate | 30 fps |

| Audio Sampling Rate | 44.1 kHz |

| Video Codec | H.264 |

| Audio Codec | AAC |

- Benchmarks released by ARM demonstrate an overall performance improvement of ~2x.

FFmpeg Codecs List

FFmpeg Codec Licensing

FFmpeg libraries include LGPL, GPLv2, GPLv3 and other license-based codecs; enabling GPLv3 codecs subjects the entire framework to the GPLv3 license. In the Sitara SDK, GPLv2+ licensed codecs are enabled. Additional details on the **legal and license** terms of these codecs can be found on the FFmpeg/libav webpage.

GPLv2+ codecs list

| Codec | Description |

|---|---|

| ffenc_a64multi | FFmpeg Multicolor charset for Commodore 64 encoder |

| ffenc_a64multi5 | FFmpeg Multicolor charset for Commodore 64, extended with 5th color (colram) encoder |

| ffenc_asv1 | FFmpeg ASUS V1 encoder |

| ffenc_asv2 | FFmpeg ASUS V2 encoder |

| ffenc_bmp | FFmpeg BMP image encoder |

| ffenc_dnxhd | FFmpeg VC3/DNxHD encoder |

| ffenc_dvvideo | FFmpeg DV (Digital Video) encoder |

| ffenc_ffv1 | FFmpeg FFmpeg video codec #1 encoder |

| ffenc_ffvhuff | FFmpeg Huffyuv FFmpeg variant encoder |

| ffenc_flashsv | FFmpeg Flash Screen Video encoder |

| ffenc_flv | FFmpeg Flash Video (FLV) / Sorenson Spark / Sorenson H.263 encoder |

| ffenc_h261 | FFmpeg H.261 encoder |

| ffenc_h263 | FFmpeg H.263 / H.263-1996 encoder |

| ffenc_h263p | FFmpeg H.263+ / H.263-1998 / H.263 version 2 encoder |

| ffenc_huffyuv | FFmpeg Huffyuv / HuffYUV encoder |

| ffenc_jpegls | FFmpeg JPEG-LS encoder |

| ffenc_ljpeg | FFmpeg Lossless JPEG encoder |

| ffenc_mjpeg | FFmpeg MJPEG (Motion JPEG) encoder |

| ffenc_mpeg1video | FFmpeg MPEG-1 video encoder |

| ffenc_mpeg4 | FFmpeg MPEG-4 part 2 encoder |

| ffenc_msmpeg4v1 | FFmpeg MPEG-4 part 2 Microsoft variant version 1 encoder |

| ffenc_msmpeg4v2 | FFmpeg MPEG-4 part 2 Microsoft variant version 2 encoder |

| ffenc_msmpeg4 | FFmpeg MPEG-4 part 2 Microsoft variant version 3 encoder |

| ffenc_pam | FFmpeg PAM (Portable AnyMap) image encoder |

| ffenc_pbm | FFmpeg PBM (Portable BitMap) image encoder |

| ffenc_pcx | FFmpeg PC Paintbrush PCX image encoder |

| ffenc_pgm | FFmpeg PGM (Portable GrayMap) image encoder |

| ffenc_pgmyuv | FFmpeg PGMYUV (Portable GrayMap YUV) image encoder |

| ffenc_png | FFmpeg PNG image encoder |

| ffenc_ppm | FFmpeg PPM (Portable PixelMap) image encoder |

| ffenc_qtrle | FFmpeg QuickTime Animation (RLE) video encoder |

| ffenc_roqvideo | FFmpeg id RoQ video encoder |

| ffenc_rv10 | FFmpeg RealVideo 1.0 encoder |

| ffenc_rv20 | FFmpeg RealVideo 2.0 encoder |

| ffenc_sgi | FFmpeg SGI image encoder |

| ffenc_snow | FFmpeg Snow encoder |

| ffenc_svq1 | FFmpeg Sorenson Vector Quantizer 1 / Sorenson Video 1 / SVQ1 encoder |

| ffenc_targa | FFmpeg Truevision Targa image encoder |

| ffenc_tiff | FFmpeg TIFF image encoder |

| ffenc_wmv1 | FFmpeg Windows Media Video 7 encoder |

| ffenc_wmv2 | FFmpeg Windows Media Video 8 encoder |

| ffenc_zmbv | FFmpeg Zip Motion Blocks Video encoder |

| ffenc_aac | FFmpeg Advanced Audio Coding encoder |

| ffenc_ac3 | FFmpeg ATSC A/52A (AC-3) encoder |

| ffenc_alac | FFmpeg ALAC (Apple Lossless Audio Codec) encoder |

| ffenc_mp2 | FFmpeg MP2 (MPEG audio layer 2) encoder |

| ffenc_nellymoser | FFmpeg Nellymoser Asao encoder |

| ffenc_real_144 | FFmpeg RealAudio 1.0 (14.4K) encoder |

| ffenc_sonic | FFmpeg Sonic encoder |

| ffenc_sonicls | FFmpeg Sonic lossless encoder |

| ffenc_wmav1 | FFmpeg Windows Media Audio 1 encoder |

| ffenc_wmav2 | FFmpeg Windows Media Audio 2 encoder |

| ffenc_roq_dpcm | FFmpeg id RoQ DPCM encoder |

| ffenc_adpcm_adx | FFmpeg SEGA CRI ADX ADPCM encoder |

| ffenc_g722 | FFmpeg G.722 ADPCM encoder |

| ffenc_g726 | FFmpeg G.726 ADPCM encoder |

| ffenc_adpcm_ima_qt | FFmpeg ADPCM IMA QuickTime encoder |

| ffenc_adpcm_ima_wav | FFmpeg ADPCM IMA WAV encoder |

| ffenc_adpcm_ms | FFmpeg ADPCM Microsoft encoder |

| ffenc_adpcm_swf | FFmpeg ADPCM Shockwave Flash encoder |

| ffenc_adpcm_yamaha | FFmpeg ADPCM Yamaha encoder |

| ffenc_ass | FFmpeg Advanced SubStation Alpha subtitle encoder |

| ffenc_dvbsub | FFmpeg DVB subtitles encoder |

| ffenc_dvdsub | FFmpeg DVD subtitles encoder |

| ffenc_xsub | FFmpeg DivX subtitles (XSUB) encoder |

| ffdec_aasc | FFmpeg Autodesk RLE decoder |

| ffdec_amv | FFmpeg AMV Video decoder |

| ffdec_anm | FFmpeg Deluxe Paint Animation decoder |

| ffdec_ansi | FFmpeg ASCII/ANSI art decoder |

| ffdec_asv1 | FFmpeg ASUS V1 decoder |

| ffdec_asv2 | FFmpeg ASUS V2 decoder |

| ffdec_aura | FFmpeg Auravision AURA decoder |

| ffdec_aura2 | FFmpeg Auravision Aura 2 decoder |

| ffdec_avs | FFmpeg AVS (Audio Video Standard) video decoder |

| ffdec_bethsoftvid | FFmpeg Bethesda VID video decoder |

| ffdec_bfi | FFmpeg Brute Force & Ignorance decoder |

| ffdec_binkvideo | FFmpeg Bink video decoder |

| ffdec_bmp | FFmpeg BMP image decoder |

| ffdec_c93 | FFmpeg Interplay C93 decoder |

| ffdec_cavs | FFmpeg Chinese AVS video (AVS1-P2, JiZhun profile) decoder |

| ffdec_cdgraphics | FFmpeg CD Graphics video decoder |

| ffdec_cinepak | FFmpeg Cinepak decoder |

| ffdec_cljr | FFmpeg Cirrus Logic AccuPak decoder |

| ffdec_camstudio | FFmpeg CamStudio decoder |

| ffdec_cyuv | FFmpeg Creative YUV (CYUV) decoder |

| ffdec_dnxhd | FFmpeg VC3/DNxHD decoder |

| ffdec_dpx | FFmpeg DPX image decoder |

| ffdec_dsicinvideo | FFmpeg Delphine Software International CIN video decoder |

| ffdec_dvvideo | FFmpeg DV (Digital Video) decoder |

| ffdec_dxa | FFmpeg Feeble Files/ScummVM DXA decoder |

| ffdec_eacmv | FFmpeg Electronic Arts CMV video decoder |

| ffdec_eamad | FFmpeg Electronic Arts Madcow Video decoder |

| ffdec_eatgq | FFmpeg Electronic Arts TGQ video decoder |

| ffdec_eatgv | FFmpeg Electronic Arts TGV video decoder |

| ffdec_eatqi | FFmpeg Electronic Arts TQI Video decoder |

| ffdec_8bps | FFmpeg QuickTime 8BPS video decoder |

| ffdec_8svx_exp | FFmpeg 8SVX exponential decoder |

| ffdec_8svx_fib | FFmpeg 8SVX fibonacci decoder |

| ffdec_escape124 | FFmpeg Escape 124 decoder |

| ffdec_ffv1 | FFmpeg FFmpeg video codec #1 decoder |

| ffdec_ffvhuff | FFmpeg Huffyuv FFmpeg variant decoder |

| ffdec_flashsv | FFmpeg Flash Screen Video v1 decoder |

| ffdec_flic | FFmpeg Autodesk Animator Flic video decoder |

| ffdec_flv | FFmpeg Flash Video (FLV) / Sorenson Spark / Sorenson H.263 decoder |

| ffdec_4xm | FFmpeg 4X Movie decoder |

| ffdec_fraps | FFmpeg Fraps decoder |

| ffdec_FRWU | FFmpeg Forward Uncompressed decoder |

| ffdec_h261 | FFmpeg H.261 decoder |

| ffdec_h263 | FFmpeg H.263 / H.263-1996, H.263+ / H.263-1998 / H.263 version 2 decoder |

| ffdec_h263i | FFmpeg Intel H.263 decoder |

| ffdec_h264 | FFmpeg H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10 decoder |

| ffdec_huffyuv | FFmpeg Huffyuv / HuffYUV decoder |

| ffdec_idcinvideo | FFmpeg id Quake II CIN video decoder |

| ffdec_iff_byterun1 | FFmpeg IFF ByteRun1 decoder |

| ffdec_iff_ilbm | FFmpeg IFF ILBM decoder |

| ffdec_indeo2 | FFmpeg Intel Indeo 2 decoder |

| ffdec_indeo3 | FFmpeg Intel Indeo 3 decoder |

| ffdec_indeo5 | FFmpeg Intel Indeo Video Interactive 5 decoder |

| ffdec_interplayvideo | FFmpeg Interplay MVE video decoder |

| ffdec_jpegls | FFmpeg JPEG-LS decoder |

| ffdec_kgv1 | FFmpeg Kega Game Video decoder |

| ffdec_kmvc | FFmpeg Karl Morton’s video codec decoder |

| ffdec_loco | FFmpeg LOCO decoder |

| ffdec_mdec | FFmpeg Sony PlayStation MDEC (Motion DECoder) decoder |

| ffdec_mimic | FFmpeg Mimic decoder |

| ffdec_mjpeg | FFmpeg MJPEG (Motion JPEG) decoder |

| ffdec_mjpegb | FFmpeg Apple MJPEG-B decoder |

| ffdec_mmvideo | FFmpeg American Laser Games MM Video decoder |

| ffdec_motionpixels | FFmpeg Motion Pixels video decoder |

| ffdec_mpeg4 | FFmpeg MPEG-4 part 2 decoder |

| ffdec_mpegvideo | FFmpeg MPEG-1 video decoder |

| ffdec_msmpeg4v1 | FFmpeg MPEG-4 part 2 Microsoft variant version 1 decoder |

| ffdec_msmpeg4v2 | FFmpeg MPEG-4 part 2 Microsoft variant version 2 decoder |

| ffdec_msmpeg4 | FFmpeg MPEG-4 part 2 Microsoft variant version 3 decoder |

| ffdec_msrle | FFmpeg Microsoft RLE decoder |

| ffdec_msvideo1 | FFmpeg Microsoft Video 1 decoder |

| ffdec_mszh | FFmpeg LCL (LossLess Codec Library) MSZH decoder |

| ffdec_nuv | FFmpeg NuppelVideo/RTJPEG decoder |

| ffdec_pam | FFmpeg PAM (Portable AnyMap) image decoder |

| ffdec_pbm | FFmpeg PBM (Portable BitMap) image decoder |

| ffdec_pcx | FFmpeg PC Paintbrush PCX image decoder |

| ffdec_pgm | FFmpeg PGM (Portable GrayMap) image decoder |

| ffdec_pgmyuv | FFmpeg PGMYUV (Portable GrayMap YUV) image decoder |

| ffdec_pictor | FFmpeg Pictor/PC Paint decoder |

| ffdec_png | FFmpeg PNG image decoder |

| ffdec_ppm | FFmpeg PPM (Portable PixelMap) image decoder |

| ffdec_ptx | FFmpeg V.Flash PTX image decoder |

| ffdec_qdraw | FFmpeg Apple QuickDraw decoder |

| ffdec_qpeg | FFmpeg Q-team QPEG decoder |

| ffdec_qtrle | FFmpeg QuickTime Animation (RLE) video decoder |

| ffdec_r10k | FFmpeg AJA Kona 10-bit RGB Codec decoder |

| ffdec_rl2 | FFmpeg RL2 video decoder |

| ffdec_roqvideo | FFmpeg id RoQ video decoder |

| ffdec_rpza | FFmpeg QuickTime video (RPZA) decoder |

| ffdec_rv10 | FFmpeg RealVideo 1.0 decoder |

| ffdec_rv20 | FFmpeg RealVideo 2.0 decoder |

| ffdec_rv30 | FFmpeg RealVideo 3.0 decoder |

| ffdec_rv40 | FFmpeg RealVideo 4.0 decoder |

| ffdec_sgi | FFmpeg SGI image decoder |

| ffdec_smackvid | FFmpeg Smacker video decoder |

| ffdec_smc | FFmpeg QuickTime Graphics (SMC) decoder |

| ffdec_snow | FFmpeg Snow decoder |

| ffdec_sp5x | FFmpeg Sunplus JPEG (SP5X) decoder |

| ffdec_sunrast | FFmpeg Sun Rasterfile image decoder |

| ffdec_svq1 | FFmpeg Sorenson Vector Quantizer 1 / Sorenson Video 1 / SVQ1 decoder |

| ffdec_svq3 | FFmpeg Sorenson Vector Quantizer 3 / Sorenson Video 3 / SVQ3 decoder |

| ffdec_targa | FFmpeg Truevision Targa image decoder |

| ffdec_thp | FFmpeg Nintendo Gamecube THP video decoder |

| ffdec_tiertexseqvideo | FFmpeg Tiertex Limited SEQ video decoder |

| ffdec_tiff | FFmpeg TIFF image decoder |

| ffdec_tmv | FFmpeg 8088flex TMV decoder |

| ffdec_truemotion1 | FFmpeg Duck TrueMotion 1.0 decoder |

| ffdec_truemotion2 | FFmpeg Duck TrueMotion 2.0 decoder |

| ffdec_camtasia | FFmpeg TechSmith Screen Capture Codec decoder |

| ffdec_txd | FFmpeg Renderware TXD (TeXture Dictionary) image decoder |

| ffdec_ultimotion | FFmpeg IBM UltiMotion decoder |

| ffdec_vb | FFmpeg Beam Software VB decoder |

| ffdec_vc1 | FFmpeg SMPTE VC-1 decoder |

| ffdec_vcr1 | FFmpeg ATI VCR1 decoder |

| ffdec_vmdvideo | FFmpeg Sierra VMD video decoder |

| ffdec_vmnc | FFmpeg VMware Screen Codec / VMware Video decoder |

| ffdec_vp3 | FFmpeg On2 VP3 decoder |

| ffdec_vp5 | FFmpeg On2 VP5 decoder |

| ffdec_vp6 | FFmpeg On2 VP6 decoder |

| ffdec_vp6a | FFmpeg On2 VP6 (Flash version, with alpha channel) decoder |

| ffdec_vp6f | FFmpeg On2 VP6 (Flash version) decoder |

| ffdec_vp8 | FFmpeg On2 VP8 decoder |

| ffdec_vqavideo | FFmpeg Westwood Studios VQA (Vector Quantized Animation) video decoder |

| ffdec_wmv1 | FFmpeg Windows Media Video 7 decoder |

| ffdec_wmv2 | FFmpeg Windows Media Video 8 decoder |

| ffdec_wmv3 | FFmpeg Windows Media Video 9 decoder |

| ffdec_wnv1 | FFmpeg Winnov WNV1 decoder |

| ffdec_xan_wc3 | FFmpeg Wing Commander III / Xan decoder |

| ffdec_xl | FFmpeg Miro VideoXL decoder |

| ffdec_yop | FFmpeg Psygnosis YOP Video decoder |

| ffdec_zlib | FFmpeg LCL (LossLess Codec Library) ZLIB decoder |

| ffdec_zmbv | FFmpeg Zip Motion Blocks Video decoder |

| ffdec_aac | FFmpeg Advanced Audio Coding decoder |

| ffdec_aac_latm | FFmpeg AAC LATM (Advanced Audio Codec LATM syntax) decoder |

| ffdec_ac3 | FFmpeg ATSC A/52A (AC-3) decoder |

| ffdec_alac | FFmpeg ALAC (Apple Lossless Audio Codec) decoder |

| ffdec_als | FFmpeg MPEG-4 Audio Lossless Coding (ALS) decoder |

| ffdec_amrnb | FFmpeg Adaptive Multi-Rate NarrowBand decoder |

| ffdec_ape | FFmpeg Monkey’s Audio decoder |

| ffdec_atrac1 | FFmpeg Atrac 1 (Adaptive TRansform Acoustic Coding) decoder |

| ffdec_atrac3 | FFmpeg Atrac 3 (Adaptive TRansform Acoustic Coding 3) decoder |

| ffdec_binkaudio_dct | FFmpeg Bink Audio (DCT) decoder |

| ffdec_binkaudio_rdft | FFmpeg Bink Audio (RDFT) decoder |

| ffdec_cook | FFmpeg COOK decoder |

| ffdec_dca | FFmpeg DCA (DTS Coherent Acoustics) decoder |

| ffdec_dsicinaudio | FFmpeg Delphine Software International CIN audio decoder |

| ffdec_eac3 | FFmpeg ATSC A/52B (AC-3, E-AC-3) decoder |

| ffdec_flac | FFmpeg FLAC (Free Lossless Audio Codec) decoder |

| ffdec_gsm | FFmpeg GSM decoder |

| ffdec_gsm_ms | FFmpeg GSM Microsoft variant decoder |

| ffdec_imc | FFmpeg IMC (Intel Music Coder) decoder |

| ffdec_mace3 | FFmpeg MACE (Macintosh Audio Compression/Expansion) 3 decoder |

| ffdec_mace6 | FFmpeg MACE (Macintosh Audio Compression/Expansion) 6 decoder |

| ffdec_mlp | FFmpeg MLP (Meridian Lossless Packing) decoder |

| ffdec_mp1float | FFmpeg MP1 (MPEG audio layer 1) decoder |

| ffdec_mp2float | FFmpeg MP2 (MPEG audio layer 2) decoder |

| ffdec_mpc7 | FFmpeg Musepack SV7 decoder |

| ffdec_mpc8 | FFmpeg Musepack SV8 decoder |

| ffdec_nellymoser | FFmpeg Nellymoser Asao decoder |

| ffdec_qcelp | FFmpeg QCELP / PureVoice decoder |

| ffdec_qdm2 | FFmpeg QDesign Music Codec 2 decoder |

| ffdec_real_144 | FFmpeg RealAudio 1.0 (14.4K) decoder |

| ffdec_real_288 | FFmpeg RealAudio 2.0 (28.8K) decoder |

| ffdec_shorten | FFmpeg Shorten decoder |

| ffdec_sipr | FFmpeg RealAudio SIPR / ACELP.NET decoder |

| ffdec_smackaud | FFmpeg Smacker audio decoder |

| ffdec_sonic | FFmpeg Sonic decoder |

| ffdec_truehd | FFmpeg TrueHD decoder |

| ffdec_truespeech | FFmpeg DSP Group TrueSpeech decoder |

| ffdec_tta | FFmpeg True Audio (TTA) decoder |

| ffdec_twinvq | FFmpeg VQF TwinVQ decoder |

| ffdec_vmdaudio | FFmpeg Sierra VMD audio decoder |

| ffdec_wmapro | FFmpeg Windows Media Audio 9 Professional decoder |

| ffdec_wmav1 | FFmpeg Windows Media Audio 1 decoder |

| ffdec_wmav2 | FFmpeg Windows Media Audio 2 decoder |

| ffdec_wmavoice | FFmpeg Windows Media Audio Voice decoder |

| ffdec_ws_snd1 | FFmpeg Westwood Audio (SND1) decoder |

| ffdec_pcm_lxf | FFmpeg PCM signed 20-bit little-endian planar decoder |

| ffdec_interplay_dpcm | FFmpeg DPCM Interplay decoder |

| ffdec_roq_dpcm | FFmpeg DPCM id RoQ decoder |

| ffdec_sol_dpcm | FFmpeg DPCM Sol decoder |

| ffdec_xan_dpcm | FFmpeg DPCM Xan decoder |

| ffdec_adpcm_4xm | FFmpeg ADPCM 4X Movie decoder |

| ffdec_adpcm_adx | FFmpeg SEGA CRI ADX ADPCM decoder |

| ffdec_adpcm_ct | FFmpeg ADPCM Creative Technology decoder |

| ffdec_adpcm_ea | FFmpeg ADPCM Electronic Arts decoder |

| ffdec_adpcm_ea_maxis_xa | FFmpeg ADPCM Electronic Arts Maxis CDROM XA decoder |

| ffdec_adpcm_ea_r1 | FFmpeg ADPCM Electronic Arts R1 decoder |

| ffdec_adpcm_ea_r2 | FFmpeg ADPCM Electronic Arts R2 decoder |

| ffdec_adpcm_ea_r3 | FFmpeg ADPCM Electronic Arts R3 decoder |

| ffdec_adpcm_ea_xas | FFmpeg ADPCM Electronic Arts XAS decoder |

| ffdec_g722 | FFmpeg G.722 ADPCM decoder |

| ffdec_g726 | FFmpeg G.726 ADPCM decoder |

| ffdec_adpcm_ima_amv | FFmpeg ADPCM IMA AMV decoder |

| ffdec_adpcm_ima_dk3 | FFmpeg ADPCM IMA Duck DK3 decoder |

| ffdec_adpcm_ima_dk4 | FFmpeg ADPCM IMA Duck DK4 decoder |

| ffdec_adpcm_ima_ea_eacs | FFmpeg ADPCM IMA Electronic Arts EACS decoder |

| ffdec_adpcm_ima_ea_sead | FFmpeg ADPCM IMA Electronic Arts SEAD decoder |

| ffdec_adpcm_ima_iss | FFmpeg ADPCM IMA Funcom ISS decoder |

| ffdec_adpcm_ima_qt | FFmpeg ADPCM IMA QuickTime decoder |

| ffdec_adpcm_ima_smjpeg | FFmpeg ADPCM IMA Loki SDL MJPEG decoder |

| ffdec_adpcm_ima_wav | FFmpeg ADPCM IMA WAV decoder |

| ffdec_adpcm_ima_ws | FFmpeg ADPCM IMA Westwood decoder |

| ffdec_adpcm_ms | FFmpeg ADPCM Microsoft decoder |

| ffdec_adpcm_sbpro_2 | FFmpeg ADPCM Sound Blaster Pro 2-bit decoder |

| ffdec_adpcm_sbpro_3 | FFmpeg ADPCM Sound Blaster Pro 2.6-bit decoder |

| ffdec_adpcm_sbpro_4 | FFmpeg ADPCM Sound Blaster Pro 4-bit decoder |

| ffdec_adpcm_swf | FFmpeg ADPCM Shockwave Flash decoder |

| ffdec_adpcm_thp | FFmpeg ADPCM Nintendo Gamecube THP decoder |

| ffdec_adpcm_xa | FFmpeg ADPCM CDROM XA decoder |

| ffdec_adpcm_yamaha | FFmpeg ADPCM Yamaha decoder |

| ffdec_ass | FFmpeg Advanced SubStation Alpha subtitle decoder |

| ffdec_dvbsub | FFmpeg DVB subtitles decoder |

| ffdec_dvdsub | FFmpeg DVD subtitles decoder |

| ffdec_pgssub | FFmpeg HDMV Presentation Graphic Stream subtitles decoder |

| ffdec_xsub | FFmpeg XSUB decoder |
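Which of the elements in this table are actually registered depends on how gst-ffmpeg was built for the target. As a sketch, the output of `gst-inspect-0.10` (run without arguments) can be filtered for them. This assumes each element is listed as `plugin:  element: description` and that the gst-ffmpeg plug-in is named `ffmpeg`. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read gst-inspect output on stdin and print the
# ffenc_/ffdec_ element names registered by the "ffmpeg" plug-in, sorted.
list_ffmpeg_elements() {
    # Split each "plugin:  element: description" line on ":" plus spaces.
    awk -F': *' '$1 == "ffmpeg" && $2 ~ /^ff(enc|dec)_/ { print $2 }' | sort
}

# Example (on the target):
#   gst-inspect-0.10 | list_ffmpeg_elements
#   gst-inspect-0.10 | list_ffmpeg_elements | grep -c '^ffdec_'   # count decoders
```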

Third Party Solutions

Third parties such as Ittiam and VisualOn provide highly optimized ARM-only codecs for Linux, WinCE and Android.

Software Components & Dependencies

Dependencies: required packages to build GStreamer on Ubuntu:

sudo apt-get install automake autoconf libtool docbook-xml docbook-xsl fop libxml2 gnome-doc-utils

- build-essential

- libtool

- automake

- autoconf

- git-core

- svn

- liboil0.3-dev

- libxml2-dev

- libglib2.0-dev

- gettext

- corkscrew

- socket

- libfaad-dev

- libfaac-dev
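You can install the packages in the list above in one step with a sketch like the following. Package names are copied from the list except `svn`, whose Ubuntu package is `subversion`. Some of these packages, for example `liboil0.3-dev` and `git-core`, may be missing or renamed on newer Ubuntu releases. The array and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Packages from the list above ("svn" is packaged as "subversion" on Ubuntu).
GST_BUILD_DEPS=(
    build-essential libtool automake autoconf git-core subversion
    liboil0.3-dev libxml2-dev libglib2.0-dev gettext corkscrew socket
    libfaad-dev libfaac-dev
)

# Hypothetical helper; set DRY_RUN=1 to print the command instead of running it.
install_gst_build_deps() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "sudo apt-get install -y ${GST_BUILD_DEPS[*]}"
    else
        sudo apt-get install -y "${GST_BUILD_DEPS[@]}"
    fi
}
```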

Software components for Sitara SDK Release:

- glib

- gstreamer

- liboil

- gst-plugins-good

- gst-ffmpeg

- gst-plugins-bad

- gst-plugins-base

Re-enabling MP3 and MPEG-2 decode in the Processor SDK

Starting with version 05.05.01.00, the MP3 and MPEG-2 codecs are no longer distributed as part of the SDK. The end user can re-enable these plugins by rebuilding the gst-plugins-ugly package. The following instructions have been tested with gst-plugins-ugly-0.10.19, which can be found at **gstreamer.freedesktop.org**. These instructions also work for any of the GStreamer plugin packages found in the SDK.

- Source environment-setup at the terminal

- Navigate into the example-applications path under the SDK install directory

- Extract the GStreamer plug-in source archive

- Navigate into the folder that was created

- On the command line type ./configure --host=arm-arago-linux-gnueabi --prefix=/usr

- Notice that some components are not built because they have dependencies that are not part of our SDK

- Run make to build the plugins.

- Run make install DESTDIR=<PATH TO TARGET ROOT>
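The steps above can be collected into one script. In this sketch, `SDK_DIR`, the `linux-devkit/environment-setup` location, the tarball name and `TARGET_ROOT` are assumptions to adapt to your installation. Setting `DRY_RUN=1` prints each command instead of running it.

```shell
#!/usr/bin/env bash
# All of these values are assumptions; adjust them to your setup.
SDK_DIR="${SDK_DIR:-$HOME/ti-processor-sdk}"           # SDK install directory
TARGET_ROOT="${TARGET_ROOT:-$HOME/targetfs}"           # target root filesystem
PKG="gst-plugins-ugly-0.10.19"
TARBALL="${TARBALL:-$PKG.tar.bz2}"                     # downloaded source archive

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

rebuild_ugly_plugins() {
    run source "$SDK_DIR/linux-devkit/environment-setup" || return 1  # cross toolchain env
    run cd "$SDK_DIR/example-applications" || return 1
    run tar xf "$TARBALL" || return 1
    run cd "$PKG" || return 1
    # Plug-ins whose dependencies are not in the SDK are skipped by configure.
    run ./configure --host=arm-arago-linux-gnueabi --prefix=/usr || return 1
    run make || return 1
    run make install DESTDIR="$TARGET_ROOT"
}
```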

5.2.3. Accelerated Multimedia

Refer to the GStreamer pipelines documented in the Multimedia chapter.

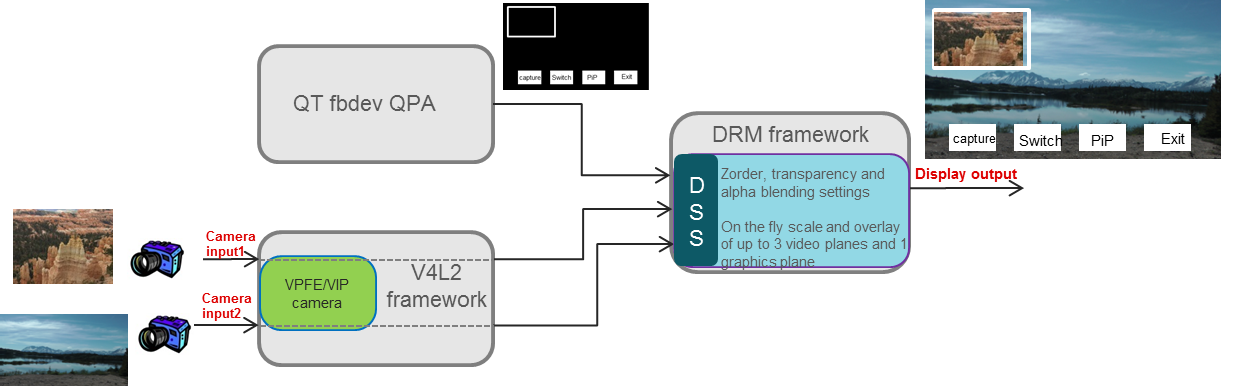

5.2.4. Graphics and Display

Refer to the SGX 3D demos and other graphics applications in the Graphics & Display chapter.

5.2.5. DSP offload with OpenCL

See https://software-dl.ti.com/mctools/esd/docs/opencl/examples/index.html

5.2.6. Camera Users Guide

Introduction

This user's guide provides an overview of the camera loopback example integrated into the Matrix application on the AM37x platform. This application can be executed by selecting the “Camera” icon at the top-level matrix.

Supported Platforms

- AM37x

Camera Loopback Example

The camera example application demonstrates streaming of YUV 4:2:2 parallel data from the Leopard Imaging LI-3M02 daughter card (MT9T111 sensor) on the AM37x EVM. Driver-allocated buffers are used to capture data from the sensor and display it on any active display device (LCD/DVI/TV) at VGA resolution (640x480).

Because the LCD has an inverted (portrait, 480x640) VGA resolution, the video buffer is rotated by 90 degrees. Rotation is performed by the hardware rotation engine called the Virtual Rotated Frame Buffer (VRFB), which provides four virtual frame buffers for 0, 90, 180 and 270 degrees. For statically compiled drivers, the VRFB buffer can be declared from the bootloader. In this example, data is displayed through the video1 pipeline and the “omap_vout.vid1_static_vrfb_alloc=y” boot argument is included in the bootargs.
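To verify that the boot argument actually reached the kernel, you can check `/proc/cmdline`. The U-Boot lines in the comment only illustrate one way of appending the argument; your board's environment may build `bootargs` differently. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# In U-Boot, the argument could be appended with something like:
#   setenv bootargs ${bootargs} omap_vout.vid1_static_vrfb_alloc=y
#   saveenv
# Hypothetical helper: succeed if <arg> appears as a whole word on the kernel
# command line (an optional second argument replaces /proc/cmdline).
has_bootarg() {
    local arg="$1" cmdline="${2:-/proc/cmdline}"
    tr ' ' '\n' < "$cmdline" | grep -qxF "$arg"
}

# Example:
#   has_bootarg omap_vout.vid1_static_vrfb_alloc=y || echo "VRFB buffers not reserved at boot"
```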

Sensor Daughter card

Smart sensor modules implement control algorithms such as auto exposure (AE), auto white balance (AWB) and auto focus (AF) in the module itself. Third parties like Leopard Imaging and E-Con Systems provide smart sensor solutions for the OMAP35x/AM37x Rev G EVM and BeagleBoard. SDK 5.02 integrates support for the LI-3M02 daughter card, whose MT9T111 sensor is configured in 8-bit YUV4:2:2 mode. The LI-3M02 daughter card can be ordered from the Leopard Imaging website.

For additional support on daughter cards or sensor details, please contact the respective third parties.

Contact: support@leopardimaging.com

Contact: Harishankkar

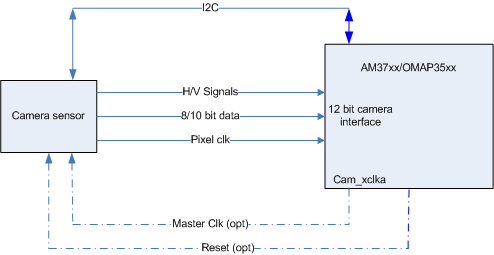

Connectivity

| OMAP35xx/AM/DM37xx Signal | Signal Description | Comment | IO Type |

|---|---|---|---|

| cam_hs | Camera Horizontal Sync | | IO |

| cam_vs | Camera Vertical Sync | | IO |

| cam_xclka | Camera Clock Output a | Optional | O |

| cam_xclkb | Camera Clock Output b | Optional | O |

| cam_[d0:12] | Camera/Video Digital Image Data | | I |

| cam_fld | Camera Field ID | Optional | IO |

| cam_pclk | Camera Pixel Clock | | I |

| cam_wen | Camera Write Enable | Optional | I |

| cam_strobe | Flash Strobe Control Signal | Optional | O |

| cam_global_reset | Global Reset - Strobe Synchronization | Optional | IO |

| cam_shutter | Mechanical Shutter Control Signal | Optional | O |

The LI-3M02 daughter card is connected to the 26-pin J31 connector on the EVM. A 24 MHz oscillator on the daughter card drives the sensor; alternatively, the camera clock output generated by the processor's ISP block can be used to drive the sensor clock. The horizontal sync (HS) and vertical sync (VS) lines are used to detect the end of a line and of a field, respectively. Sensor data lines CMOS D4:D11 are connected to processor data lines D4:D11.

Connectivity of LI-3M02 daughter card to AM/DM37x

- Note: H/V signal connectivity is not required for ITU-R BT.656 input, since the synchronization signals are extracted from the BT.656 bit stream

Media Controller Framework

SDK 5.02 includes support for the media controller framework. For additional details of the media controller framework and its usage, please refer to the PSP capture driver user's guide.

To enable streaming, the link between each pair of entities in the path must be established. Currently only the MT9T111/TVP5146 => CCDC => memory path has been validated.
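Outside the loopback application, the same MT9T111 => CCDC => memory path could be set up with the `media-ctl` utility from v4l-utils. This sketch takes the entity names and pad numbers from the enumeration log that follows (`[16:0]===>[5:0]` and `[5:1]===>[6:0]`). The device node `/dev/media0` and the helper name are assumptions. Pad formats (640x480) also have to be configured, for example with `media-ctl -V`. The format syntax differs between media-ctl versions, so it is not shown here.

```shell
#!/usr/bin/env bash
# Links taken from the enumeration log: [16:0]->[5:0] and [5:1]->[6:0].
CCDC_LINKS='"mt9t111 2-003c":0->"OMAP3 ISP CCDC":0[1], "OMAP3 ISP CCDC":1->"OMAP3 ISP CCDC output":0[1]'

# Hypothetical helper: reset all links, then enable sensor -> CCDC -> memory.
setup_ccdc_links() {
    local media_dev="${1:-/dev/media0}"   # assumed media device node
    media-ctl -d "$media_dev" -r || return 1
    media-ctl -d "$media_dev" -l "$CCDC_LINKS"
}
```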

Media: Opened Media Device

Enumerating media entities

[1]:OMAP3 ISP CCP2

[2]:OMAP3 ISP CCP2 input

[3]:OMAP3 ISP CSI2a

[4]:OMAP3 ISP CSI2a output

[5]:OMAP3 ISP CCDC

[6]:OMAP3 ISP CCDC output

[7]:OMAP3 ISP preview

[8]:OMAP3 ISP preview input

[9]:OMAP3 ISP preview output

[10]:OMAP3 ISP resizer

[11]:OMAP3 ISP resizer input

[12]:OMAP3 ISP resizer output

[13]:OMAP3 ISP AEWB

[14]:OMAP3 ISP AF

[15]:OMAP3 ISP histogram

[16]:mt9t111 2-003c

[17]:tvp514x 3-005c

Total number of entities: 17

Enumerating links/pads for entities

pads for entity 1=(0 INPUT) (1 OUTPUT)

[1:1]===>[5:0] INACTIVE

pads for entity 2=(0 OUTPUT)

[2:0]===>[1:0] INACTIVE

pads for entity 3=(0 INPUT) (1 OUTPUT)

[3:1]===>[4:0] INACTIVE

[3:1]===>[5:0] INACTIVE

pads for entity 4=(0 INPUT)

pads for entity 5=(0 INPUT) (1 OUTPUT) (2 OUTPUT)

[5:1]===>[6:0] ACTIVE

[5:2]===>[7:0] INACTIVE

[5:1]===>[10:0] INACTIVE

[5:2]===>[13:0] ACTIVE

[5:2]===>[14:0] ACTIVE

[5:2]===>[15:0] ACTIVE

pads for entity 6=(0 INPUT)

pads for entity 7=(0 INPUT) (1 OUTPUT)

[7:1]===>[9:0] INACTIVE

[7:1]===>[10:0] INACTIVE

pads for entity 8=(0 OUTPUT)

[8:0]===>[7:0] INACTIVE

pads for entity 9=(0 INPUT)

pads for entity 10=(0 INPUT) (1 OUTPUT)

[10:1]===>[12:0] INACTIVE

pads for entity 11=(0 OUTPUT)

[11:0]===>[10:0] INACTIVE

pads for entity 12=(0 INPUT)

pads for entity 13=(0 INPUT)

pads for entity 14=(0 INPUT)

pads for entity 15=(0 INPUT)

pads for entity 16=(0 OUTPUT)

[16:0]===>[5:0] ACTIVE

pads for entity 17=(0 OUTPUT)

[17:0]===>[5:0] INACTIVE

Enabling link [MT9T111]===>[ccdc]

[MT9T111]===>[ccdc] enabled

Enabling link [ccdc]===>[video_node]

[ccdc]===>[video_node] enabled

Capture: Opened Channel

successfully format is set on all pad [WxH] - [640x480]

Capture: Capable of streaming

Capture: Number of requested buffers = 3

Capture: Init done successfully

Display: Opened Channel

Display: Capable of streaming

Display: Number of requested buffers = 3

Display: Init done successfully

Display: Stream on...

Capture: Stream on...

AM/DM37x ISP Configuration

The ISP CCDC block must be configured to accept 8-bit YUV4:2:2 parallel data input; the registers below show the ISP and CCDC register values in this mode.

ISP Registers:

| Register | Address | Value (hex) |

|---|---|---|

| ISP_CTRL | 0x480BC040 | 29C14C |

| ISP_SYSCONFIG | 0x480BC004 | 2000 |

| ISP_SYSSTATUS | 0x480BC008 | 1 |

| ISP_IRQ0ENABLE | 0x480BC00C | 811B33F9 |

| ISP_IRQ0STATUS | 0x480BC010 | 0 |

| ISP_IRQ1ENABLE | 0x480BC014 | 0 |

| ISP_IRQ1STATUS | 0x480BC018 | 80000300 |

CCDC Registers:

| Register | Address | Value (hex) |

|---|---|---|

| CCDC_PID | 0x480BC600 | 1FE01 |

| CCDC_PCR | 0x480BC604 | 0 |

| CCDC_SYN_MODE | 0x480BC608 | 31000 |

| CCDC_HD_VD_WID | 0x480BC60C | 0 |

| CCDC_PIX_LINES | 0x480BC610 | 0 |

| CCDC_HORZ_INFO | 0x480BC614 | 27F |

| CCDC_VERT_START | 0x480BC618 | 0 |

| CCDC_VERT_LINES | 0x480BC61C | 1DF |

| CCDC_CULLING | 0x480BC620 | FFFF00FF |

| CCDC_HSIZE_OFF | 0x480BC624 | 500 |

| CCDC_SDOFST | 0x480BC628 | 0 |

| CCDC_SDR_ADDR | 0x480BC62C | 1C5000 |

| CCDC_CLAMP | 0x480BC630 | 10 |

| CCDC_DCSUB | 0x480BC634 | 40 |

| CCDC_COLPTN | 0x480BC638 | 0 |

| CCDC_BLKCMP | 0x480BC63C | 0 |

| CCDC_FPC | 0x480BC640 | 0 |

| CCDC_FPC_ADDR | 0x480BC644 | 0 |

| CCDC_VDINT | 0x480BC648 | 1DE0140 |

| CCDC_ALAW | 0x480BC64C | 0 |

| CCDC_REC | 0x480BC650 | 0 |

| CCDC_CFG | 0x480BC654 | 8800 |

| CCDC_FMTCFG | 0x480BC658 | 0 |

| CCDC_FMT_HORZ | 0x480BC65C | 280 |

| CCDC_FMT_VERT | 0x480BC660 | 1E0 |

| CCDC_PRGEVEN0 | 0x480BC684 | 0 |

| CCDC_PRGEVEN1 | 0x480BC688 | 0 |

| CCDC_PRGODD0 | 0x480BC68C | 0 |

| CCDC_PRGODD1 | 0x480BC690 | 0 |

| CCDC_VP_OUT | 0x480BC694 | 3BE2800 |

| CCDC_LSC_CONFIG | 0x480BC698 | 6600 |

| CCDC_LSC_INITIAL | 0x480BC69C | 0 |

| CCDC_LSC_TABLE_BA | 0x480BC6A0 | 0 |

| CCDC_LSC_TABLE_OF | 0x480BC6A4 | 0 |
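As a worked check, several of the CCDC values above can be decoded against the 640x480 YUV4:2:2 format, which uses 2 bytes per pixel. The field positions used here are our reading of the AM/DM37x TRM and should be verified there: NPH and NLV in bits [14:0] store the count minus one, LNOFST in bits [15:0] is the line offset in bytes, and FMTLNH and FMTLNV in bits [12:0] store the counts directly. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: decode CCDC_HORZ_INFO, CCDC_VERT_LINES, CCDC_HSIZE_OFF,
# CCDC_FMT_HORZ and CCDC_FMT_VERT (hex arguments such as 0x27F are accepted).
decode_ccdc() {
    local horz_info=$1 vert_lines=$2 hsize_off=$3 fmt_horz=$4 fmt_vert=$5
    local width=$(( (horz_info & 0x7FFF) + 1 ))    # NPH holds pixels per line - 1
    local height=$(( (vert_lines & 0x7FFF) + 1 ))  # NLV holds lines - 1
    local line_bytes=$(( hsize_off & 0xFFFF ))     # LNOFST: bytes between lines in memory
    echo "width=$width height=$height line_bytes=$line_bytes" \
         "bytes_per_pixel=$(( line_bytes / width ))" \
         "fmt=$(( fmt_horz & 0x1FFF ))x$(( fmt_vert & 0x1FFF ))"
}
```

With the values from the dump, `decode_ccdc 0x27F 0x1DF 0x500 0x280 0x1E0` prints `width=640 height=480 line_bytes=1280 bytes_per_pixel=2 fmt=640x480`. This is consistent with the 640x480 format reported in the capture log.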

5.2.7. WLAN and Bluetooth

Introduction

This page is a landing page for the entire set of WLAN and Bluetooth Demos available for the WL127x. Many of the demos are platform-agnostic, others apply specifically to a single platform.

The WL127x’s dual mode 802.11 b/g/n and Bluetooth transceiver gives users a robust selection of applications. A list of some basic use cases preloaded on the EVMs can be seen below:

| Scenario | Description |

|---|---|

| Bluetooth A2DP profile | Play a *.wav music file from the EVM on a stereo headset |

| Bluetooth OPP profile | Send a *.jpg image from the EVM to a cellular phone via OPP profile |

| Bluetooth FTP profile | Sends a text file from the EVM to a PC via FTP profile |

| Wireless LAN ping | Connect to an Access Point and perform a ping test |

| Wireless LAN throughput | Test UDP downstream throughput using the iPerf tool |

| Web browsing through the WLAN | Browse the web over WLAN using a PC connected to the EVM Ethernet port |

| Bluetooth and WLAN coexistence | Play a *.wav music file from the EVM on a stereo headset while browsing the web over WLAN |

Bluetooth Demos

Classic Bluetooth

- Demos using GUI

  - SPP

  - HID

  - A2DP

- Bluetooth Demos using Command Line in Linux

- Bluetooth Demos using Command Line in Windows

Bluetooth Low-Energy (BLE)

WLAN Demos

First Time

If you are running the WLAN demos on an EVM for the first time, it is recommended that you first complete the two steps below:

- Step 1: Calibration – Calibration is a one-time procedure performed before any WLAN operation. It is performed once after board assembly, or whenever the 12xx connectivity daughtercard or the EVM is replaced (refer to Calibration Process).

Refer to the Linux Wireless Calibrator page for more instructions.

- Step 2: MAC address settings - This is a one-time procedure, done after calibration and before any WLAN operation is performed (refer to Modifying WLAN MAC Address).

WLAN Station Demos

- Connect to AP (Ping Test)

  - QT GUI

  - Command line - IW commands

  - Command line - configuration file

  - Command line - WPA supplicant scripts

- WLAN Throughput Test Utility (Command line)

  - WLAN Throughput Test

- Web Browsing on Matrix UI and Remote (PC-Based) Web Browser (QT GUI)

  - QT Web Browser - Connect to AP using Command line

  - QT Web Browser - Connect to AP using QT GUI

- WLAN Low Power Use Case Demo (a magic packet visibly wakes up the system)

  - AM37x platform:

    - Shut Down Mode

    - WoWLAN (Wake on Wireless LAN) Mode

  - AM18x platform:

    - Shut Down Mode

  - AM335x platform:

    - Currently Not Supported

WLAN Soft AP Demos

- Soft AP - no security Demo (Command Line)

- Soft AP Configuration Utility (QT GUI)

- Soft AP Wireless Gateway

WLAN - WiFi Direct Demos

- WiFi Direct Demos (Command line)

  - Generating P2P Group using Push Button

  - Generating Autonomous P2P Group - Push Button

  - Generating P2P Group using PIN Code

- WiFi Direct Battleship Demo

Miscellaneous WLAN Demos

- [WLAN Code Descriptions and Examples](https://processors.wiki.ti.com/index.php/OMAP35x_Wireless_Connectivity_Code_Descriptions_and_Examples)

- Enabling Multiple WLAN Roles on the WL127x

- How-to configure Ethernet to WLAN Gateway

- How-to configure Ethernet to WLAN Bridge

Miscellaneous Demos

- Setting Host CPU clock

- AM37x WLAN Throughput Measurement

- Suspend Resume Operation

- How to work with A band

Regulatory Domain

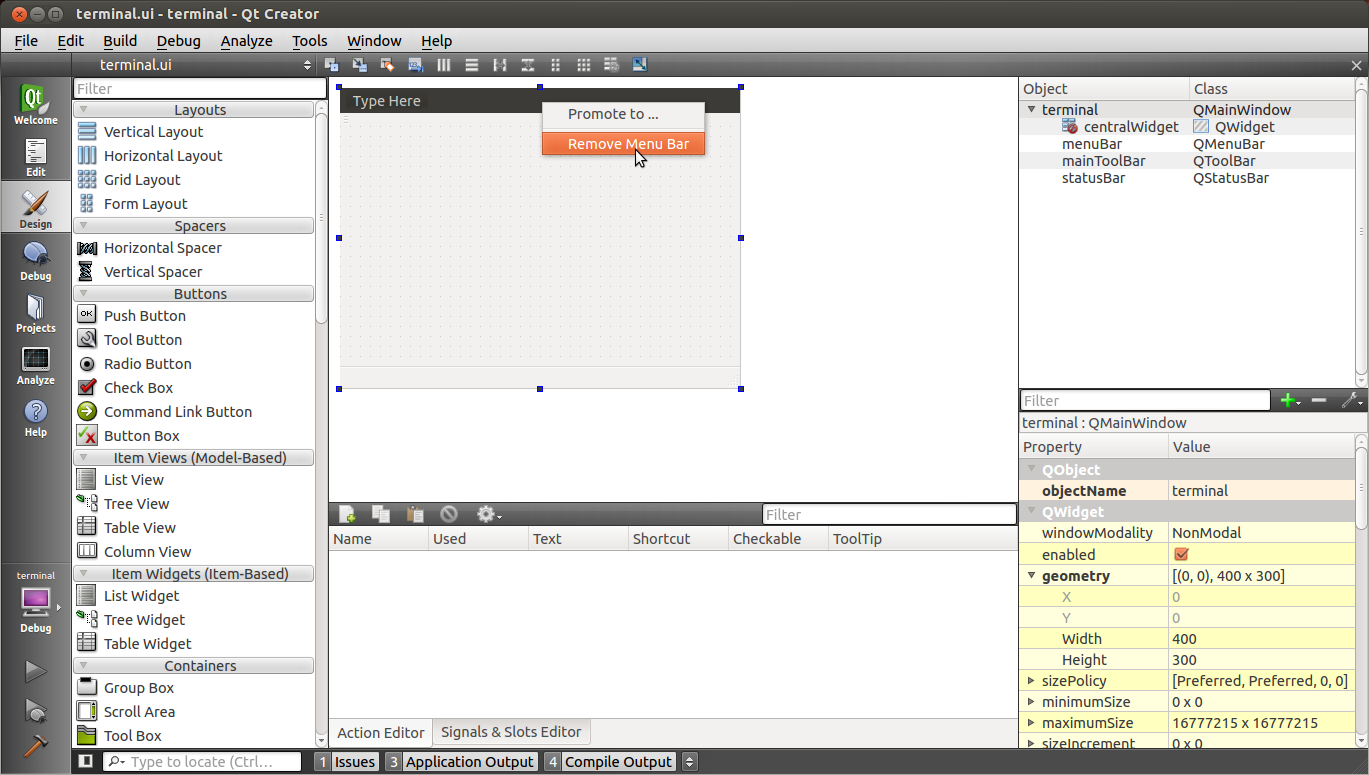

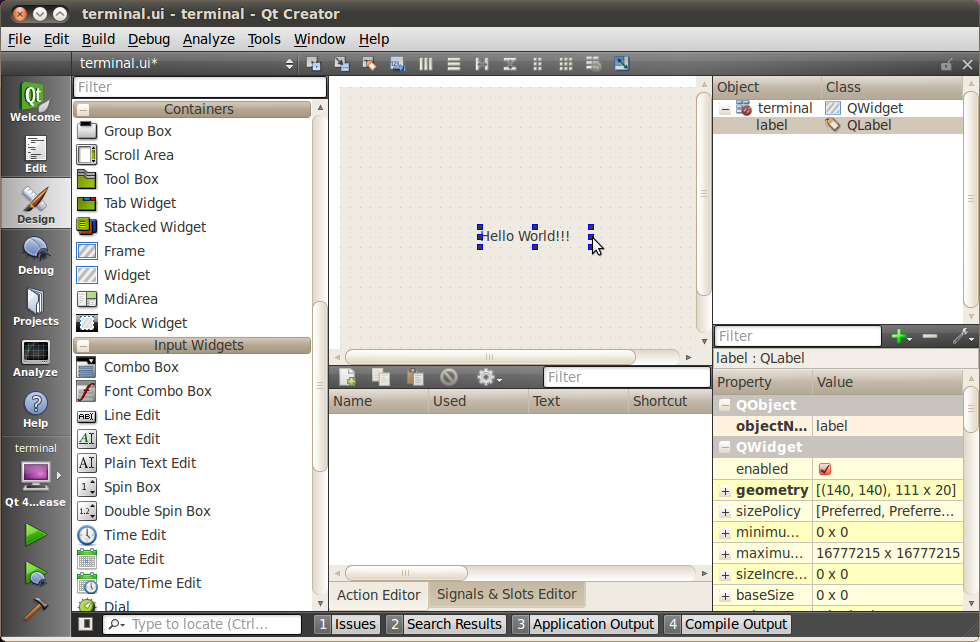

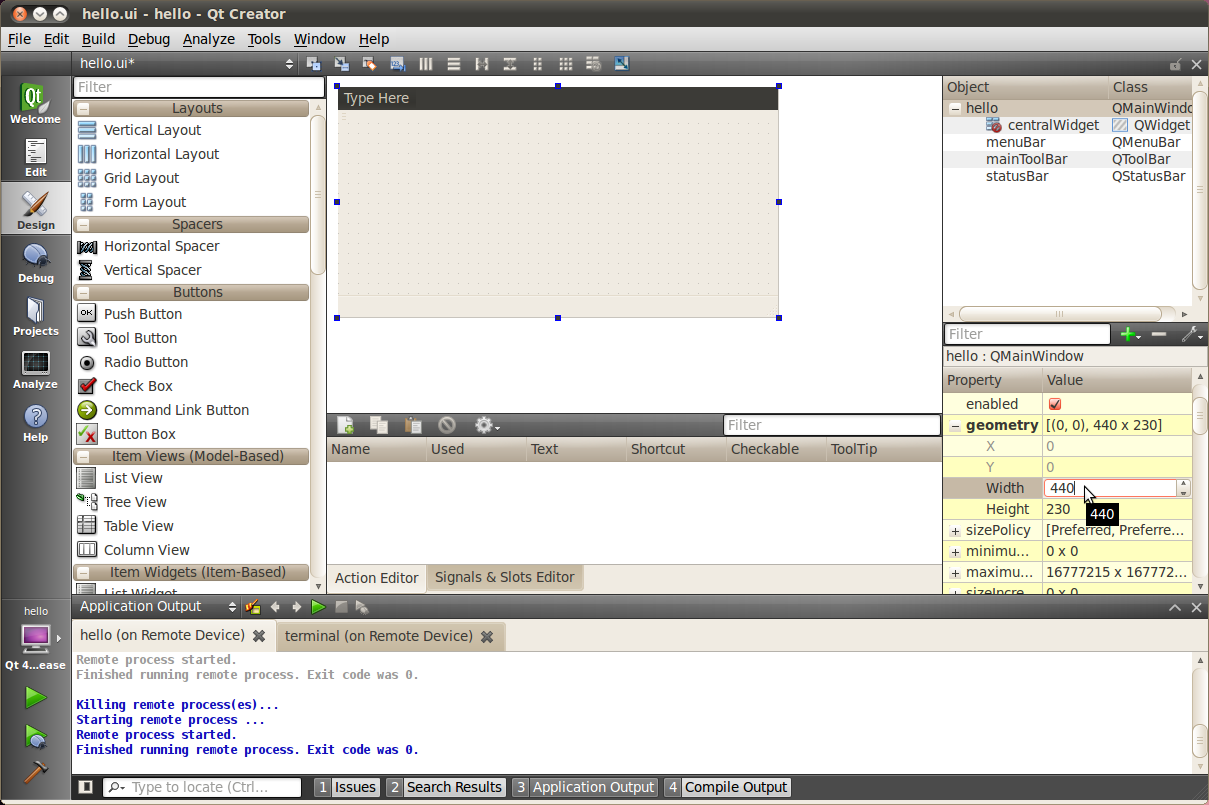

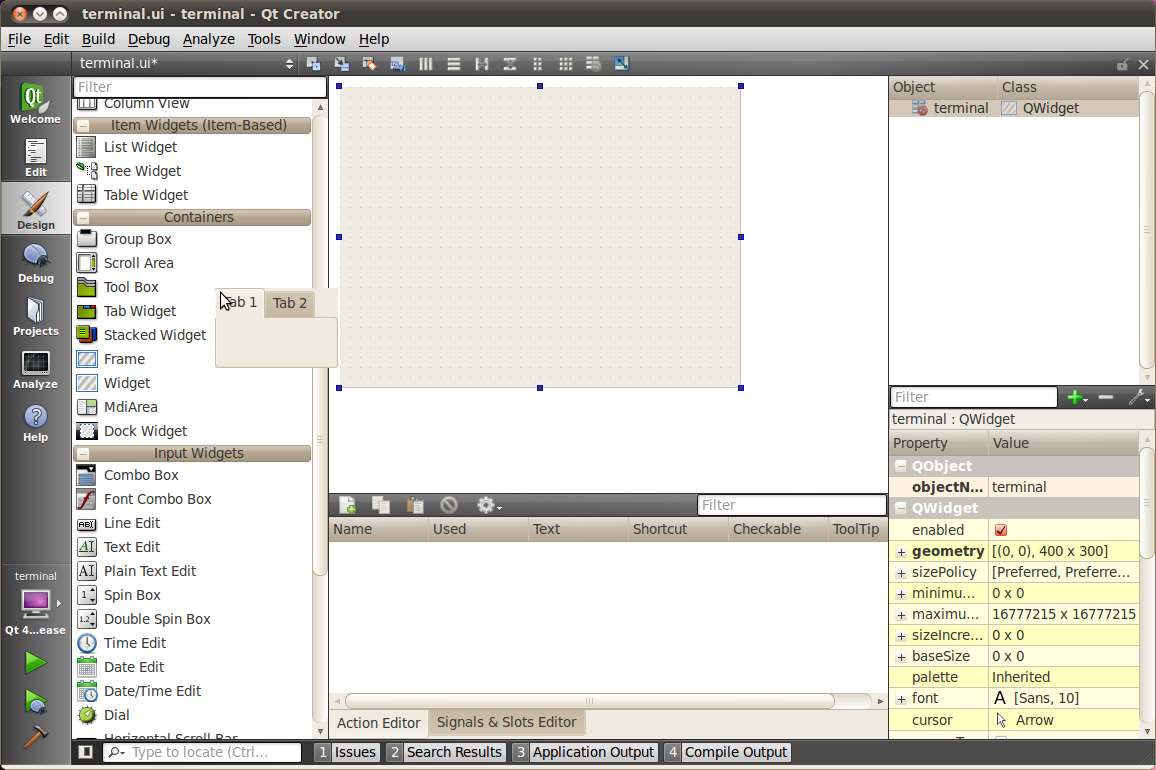

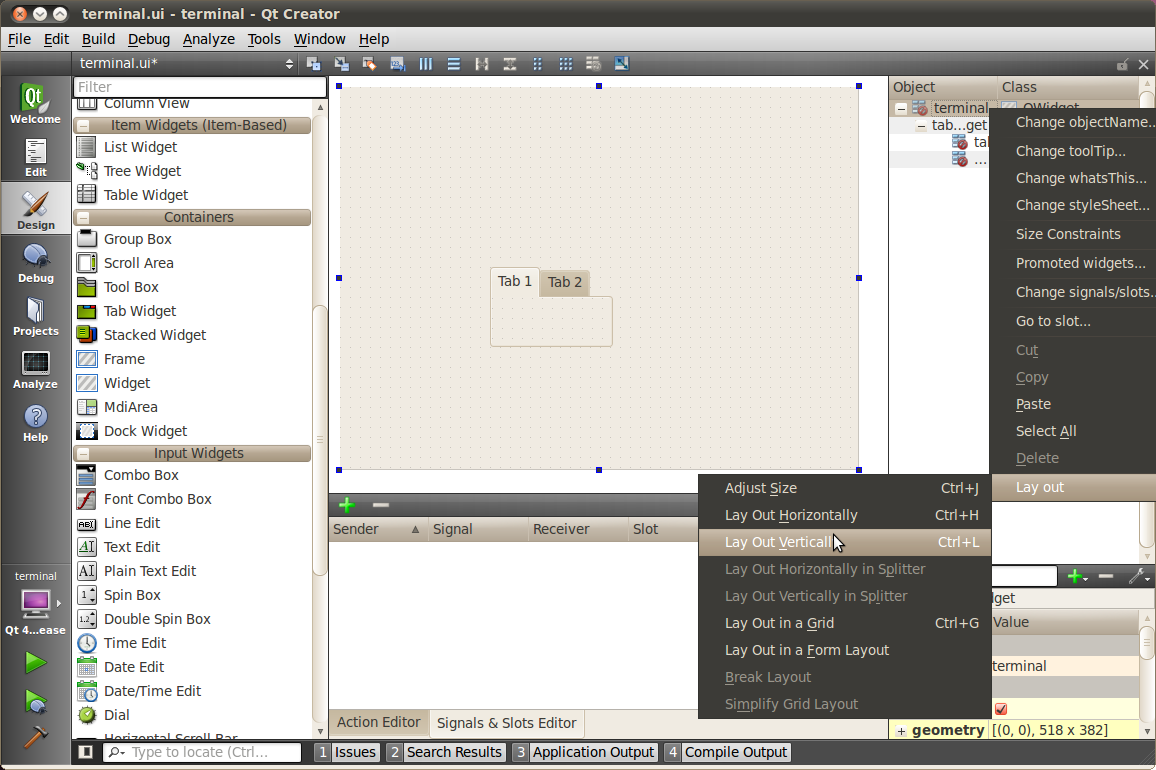

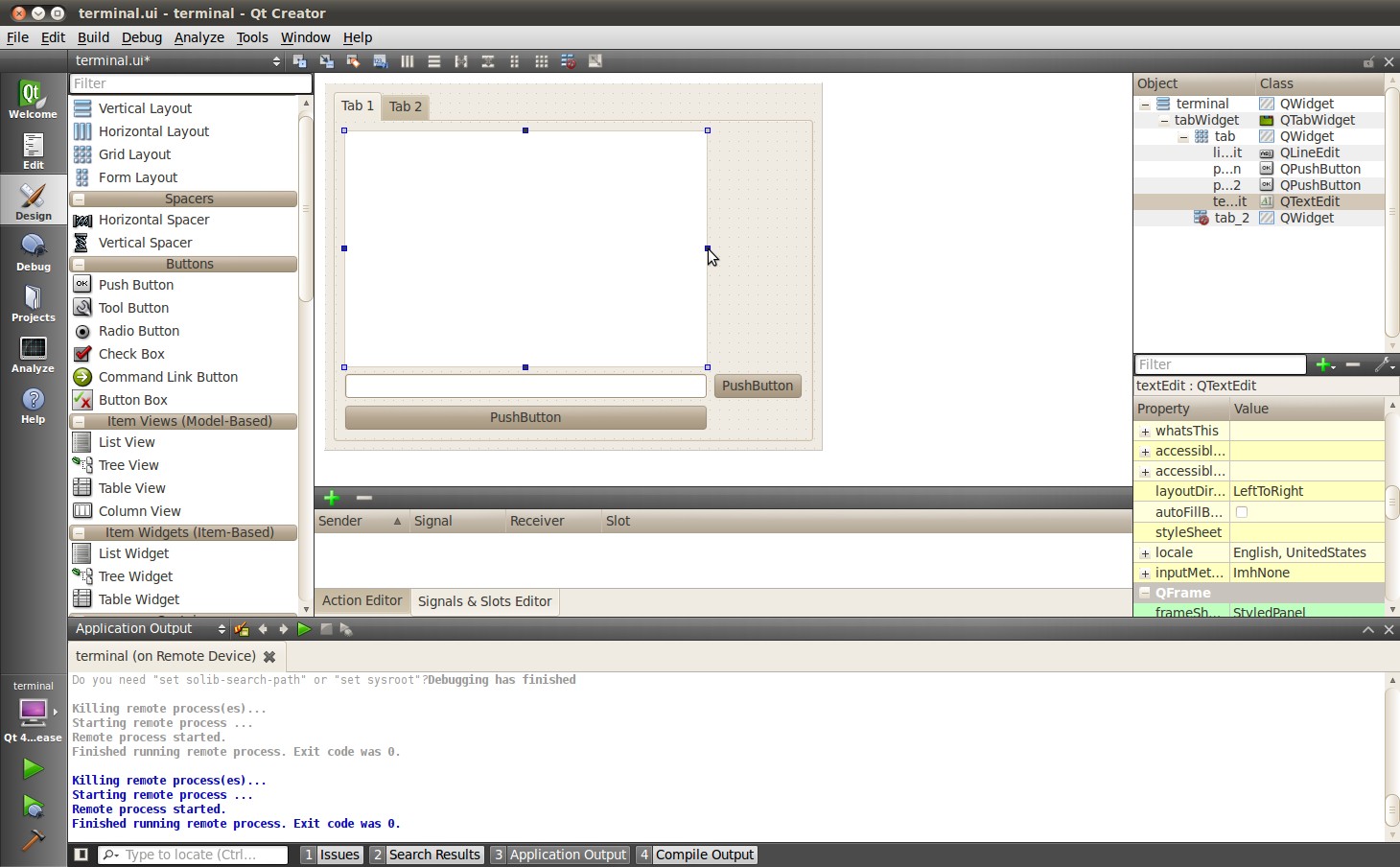

5.2.8. Hands on with QT

Introduction

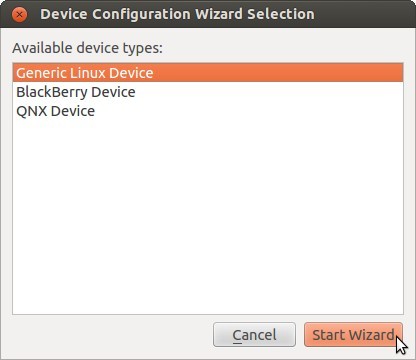

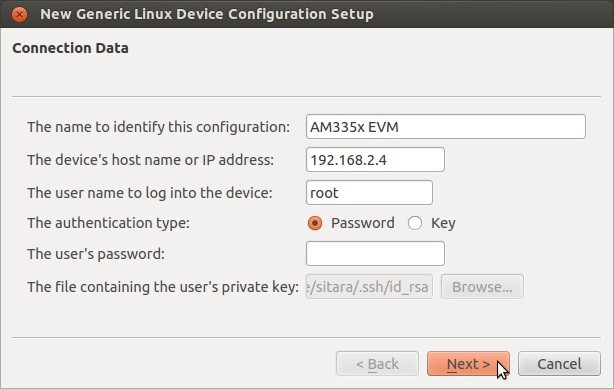

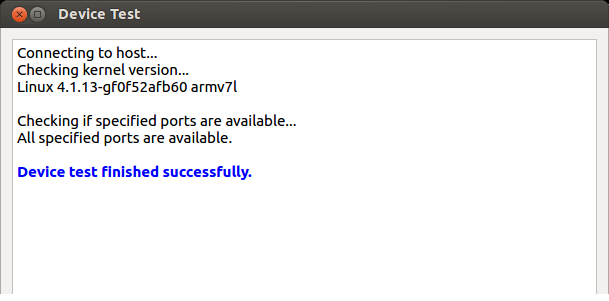

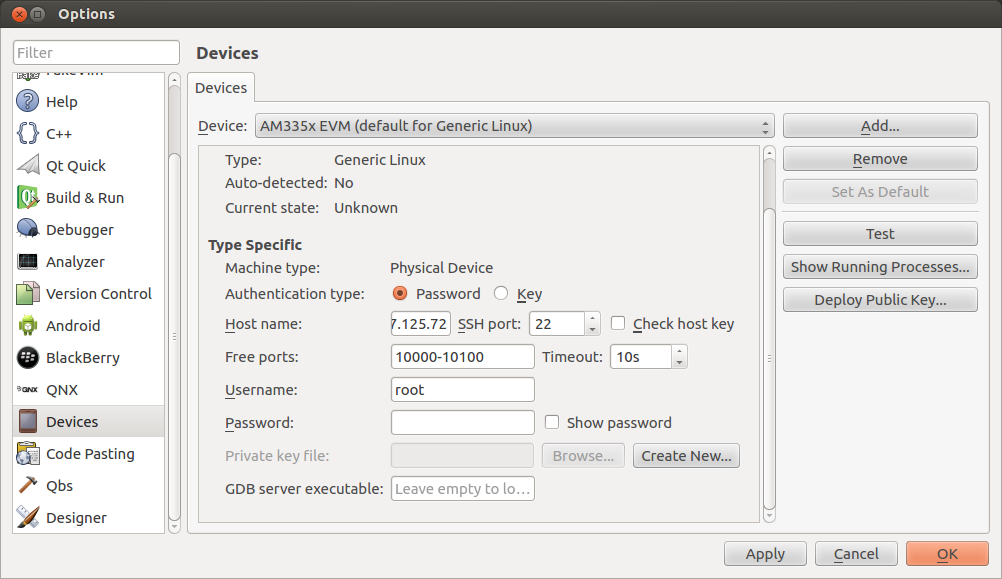

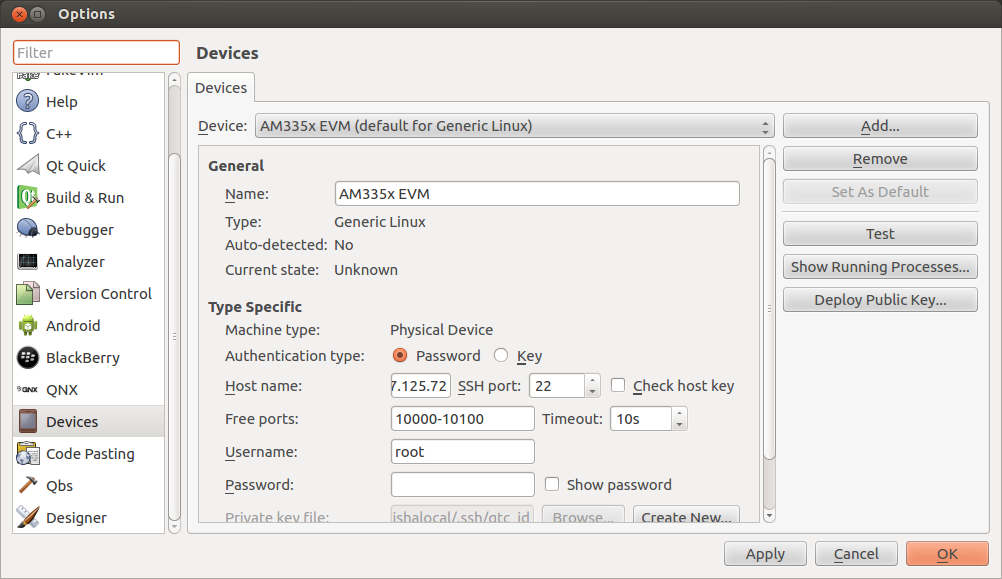

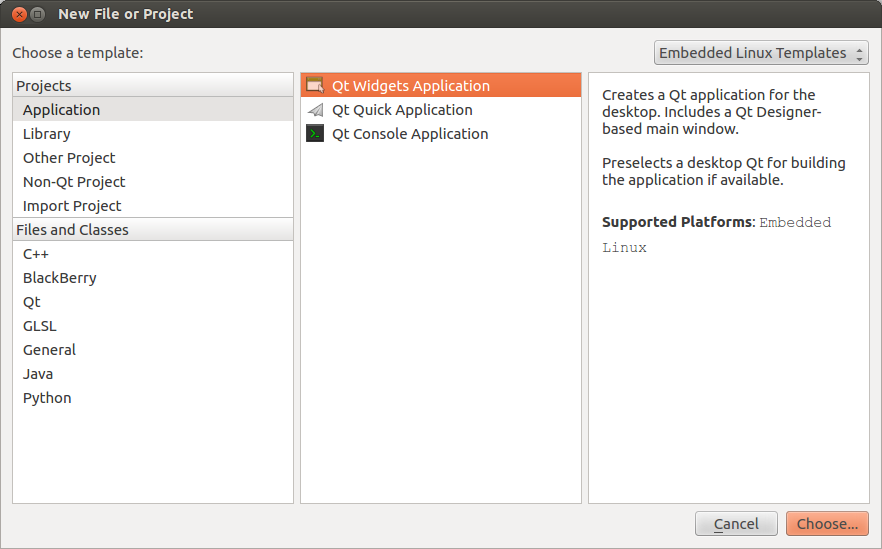

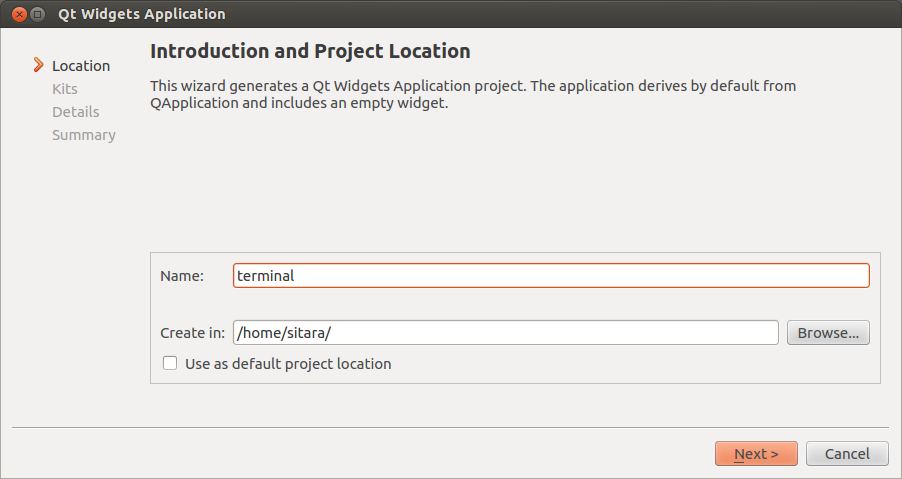

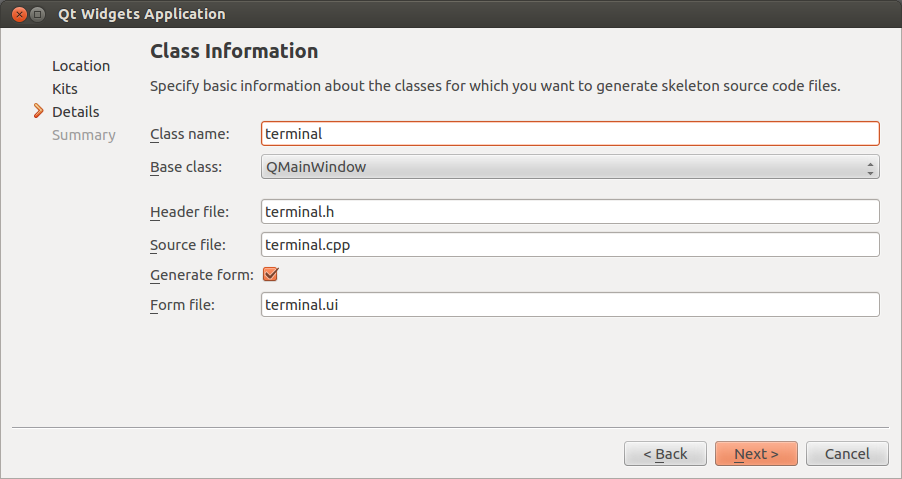

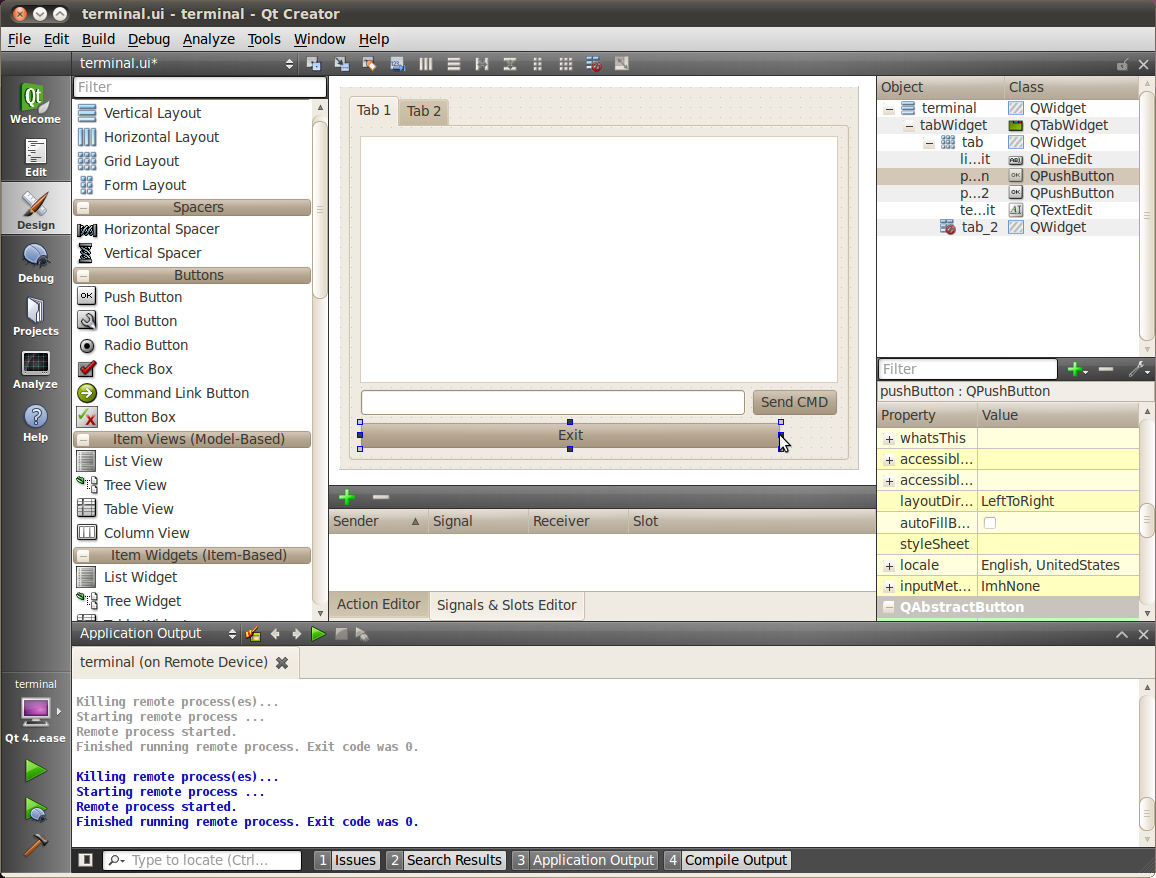

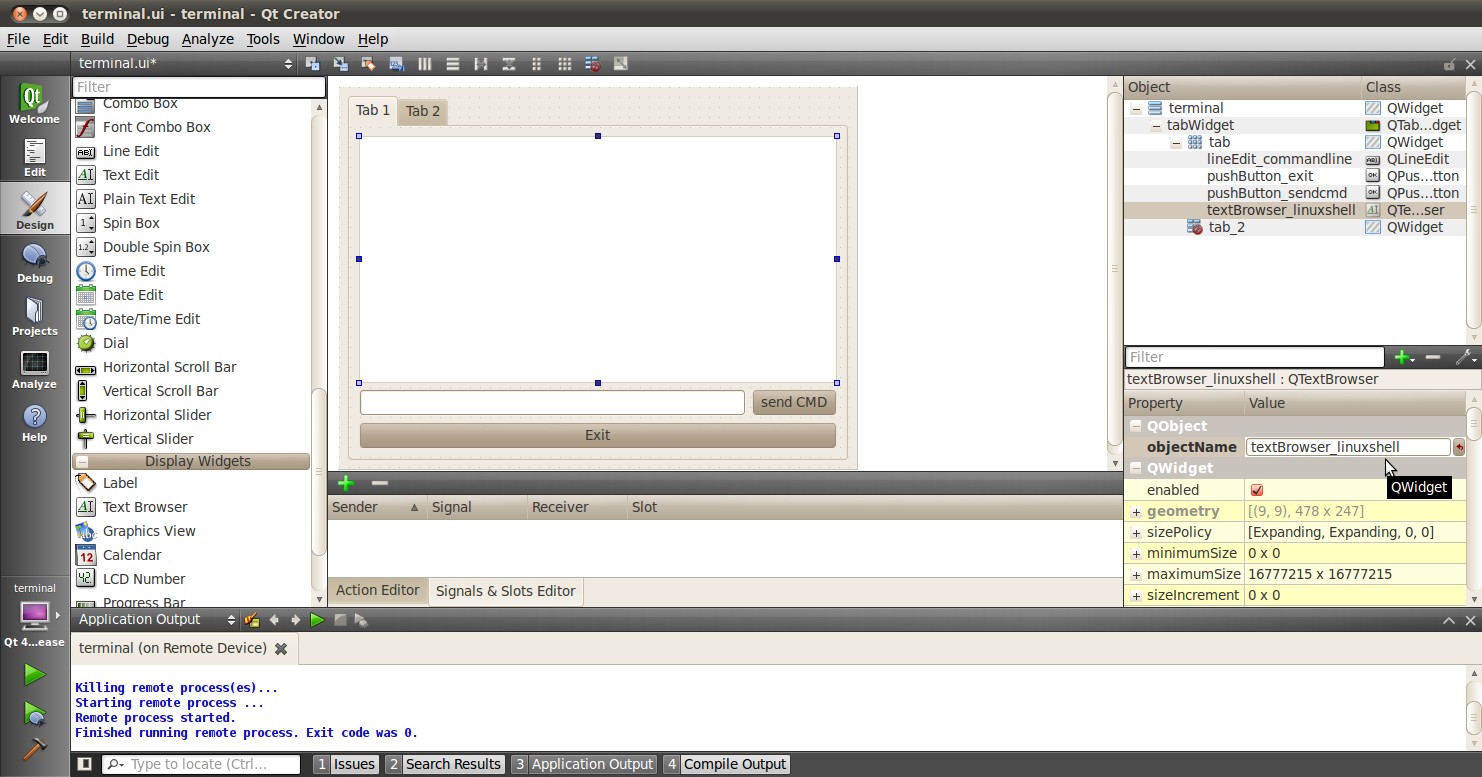

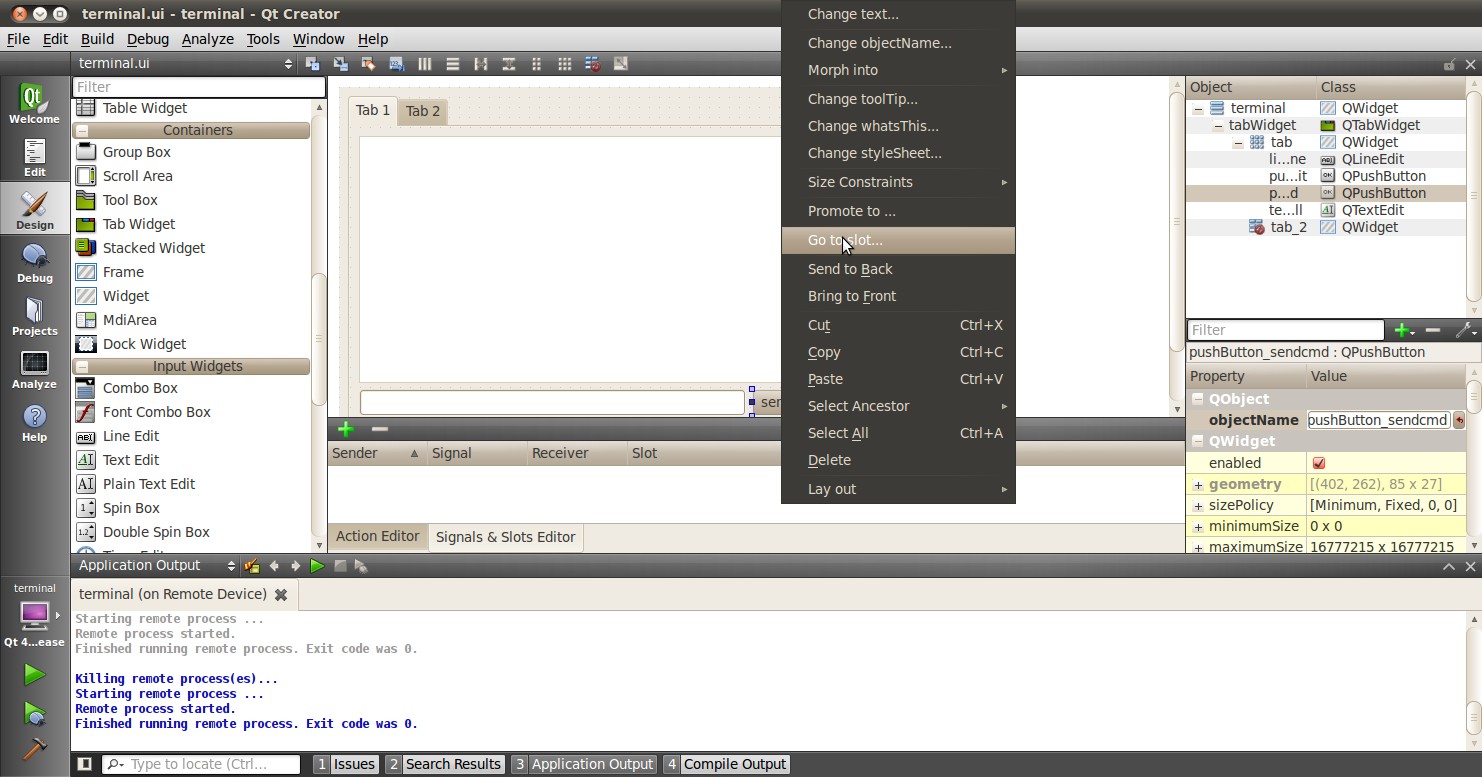

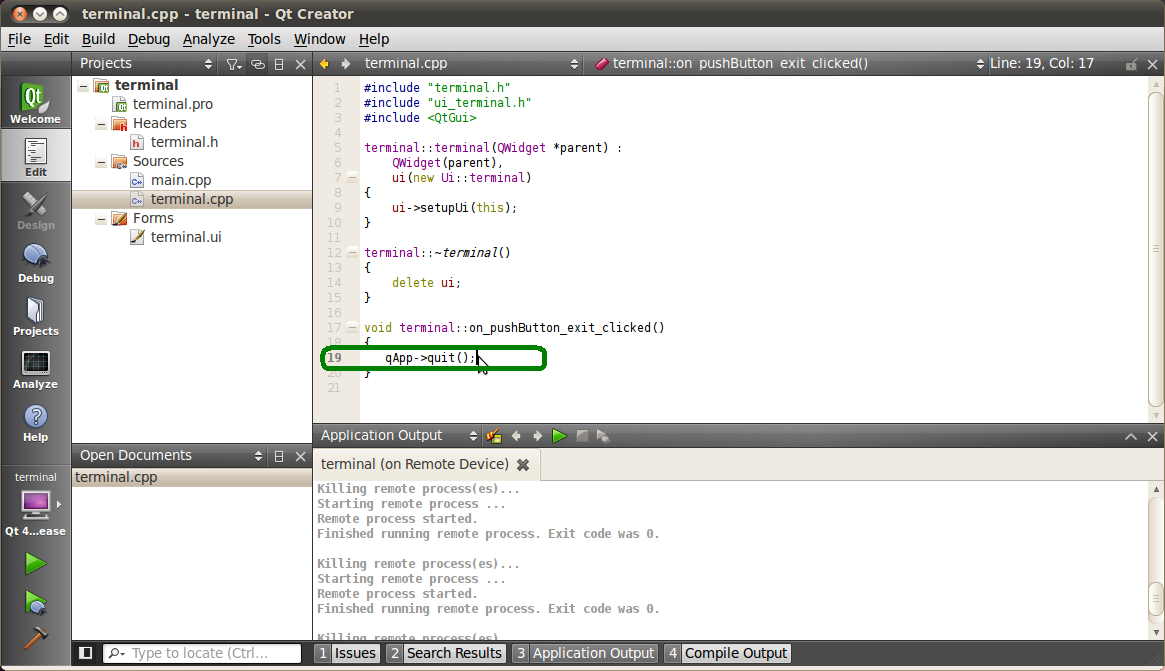

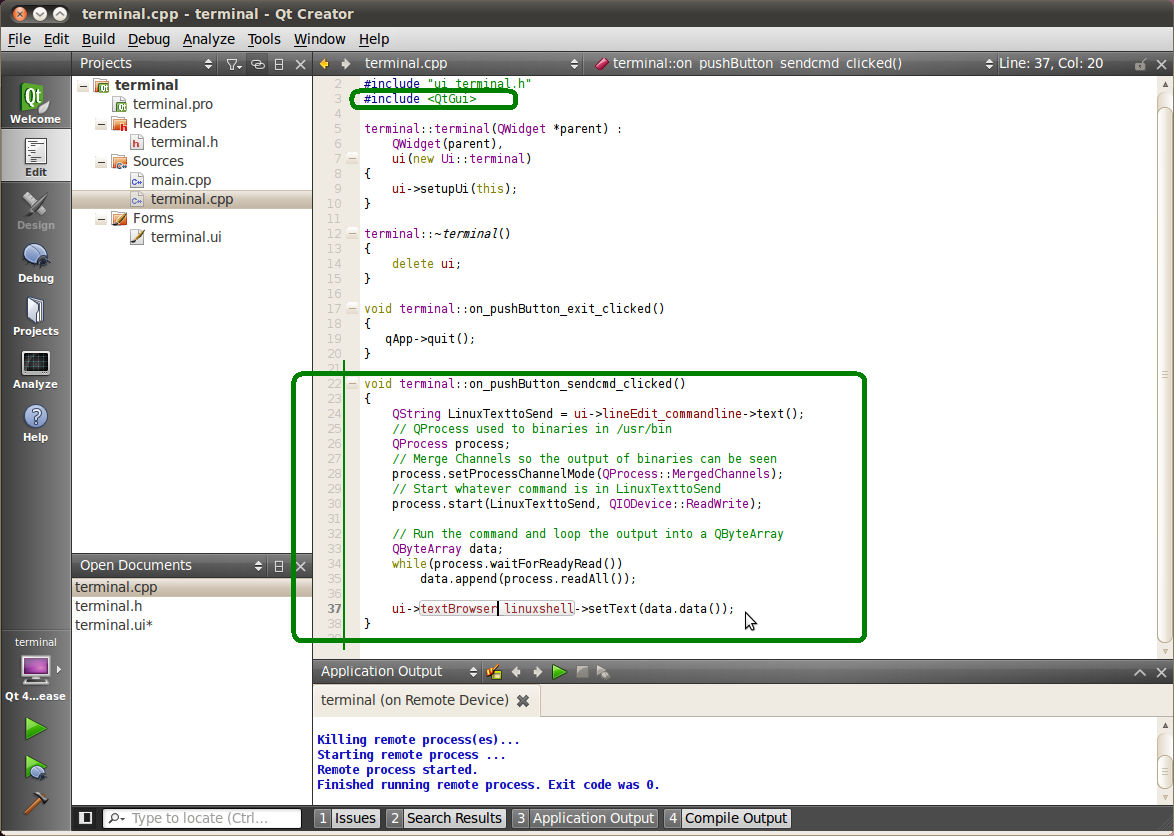

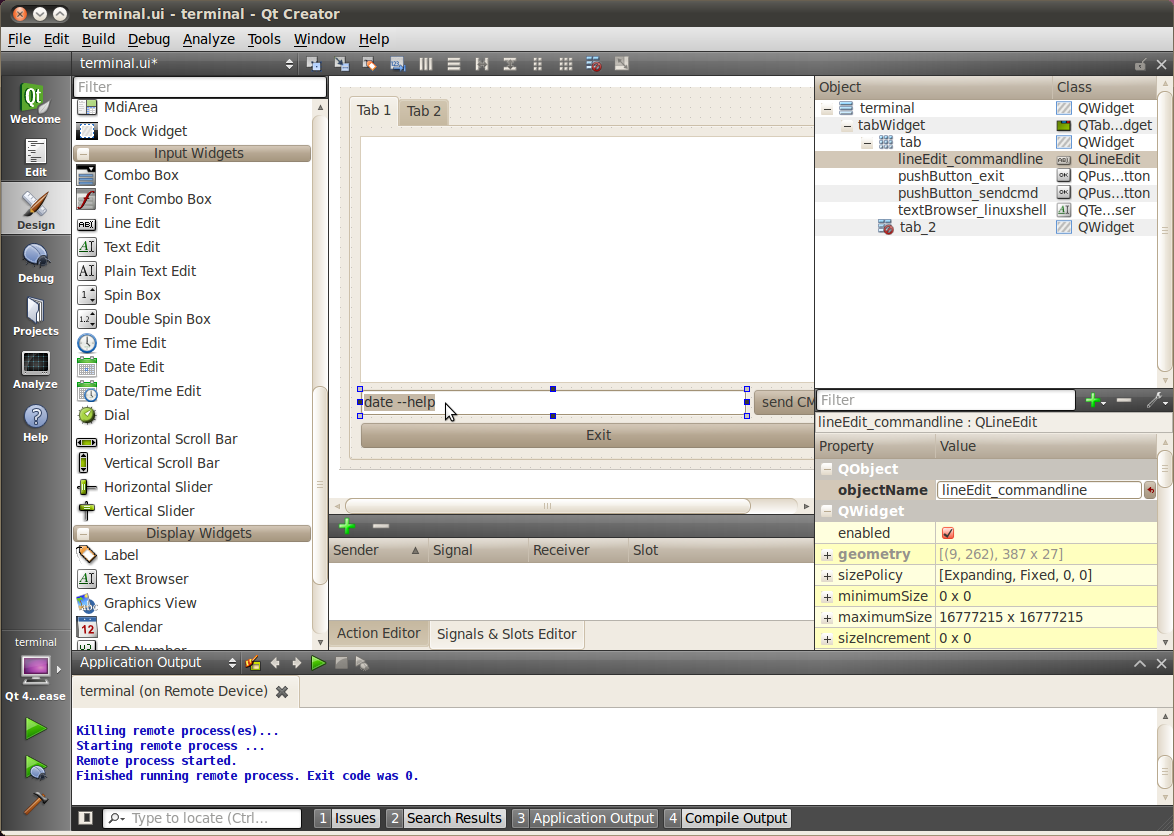

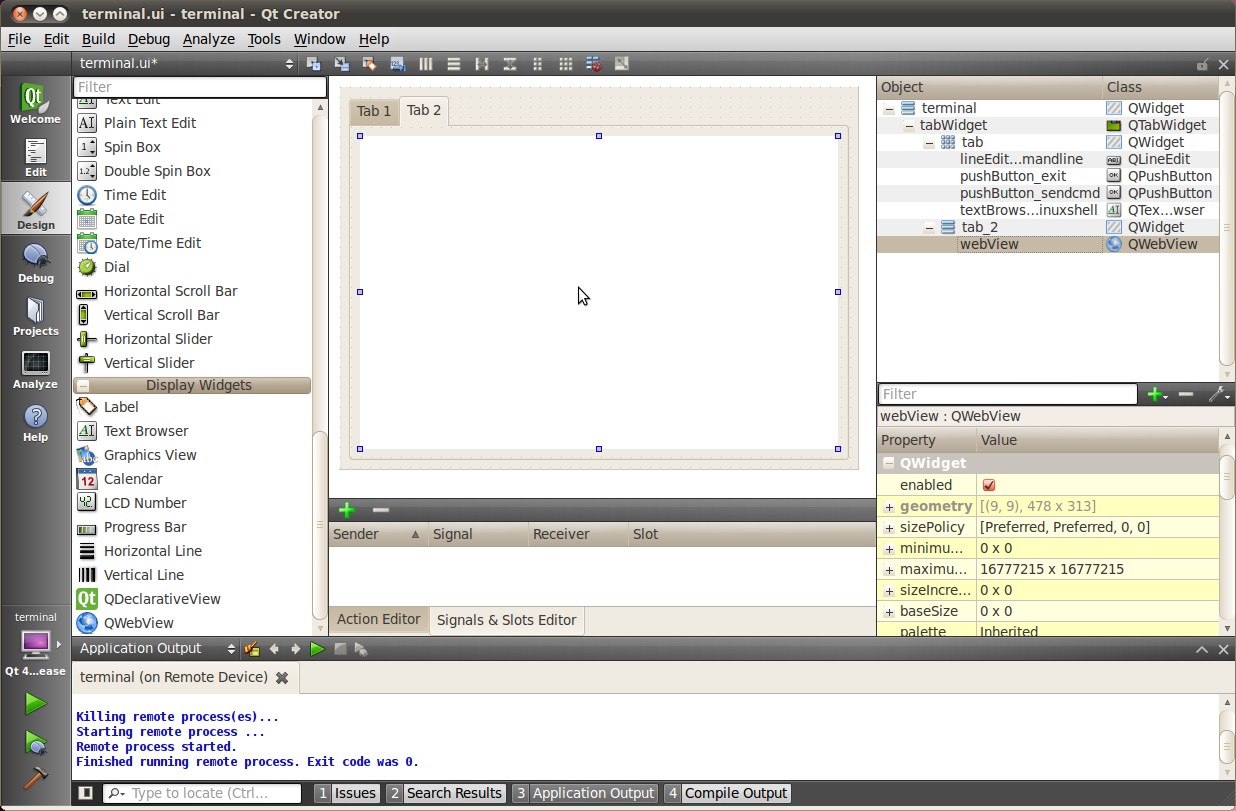

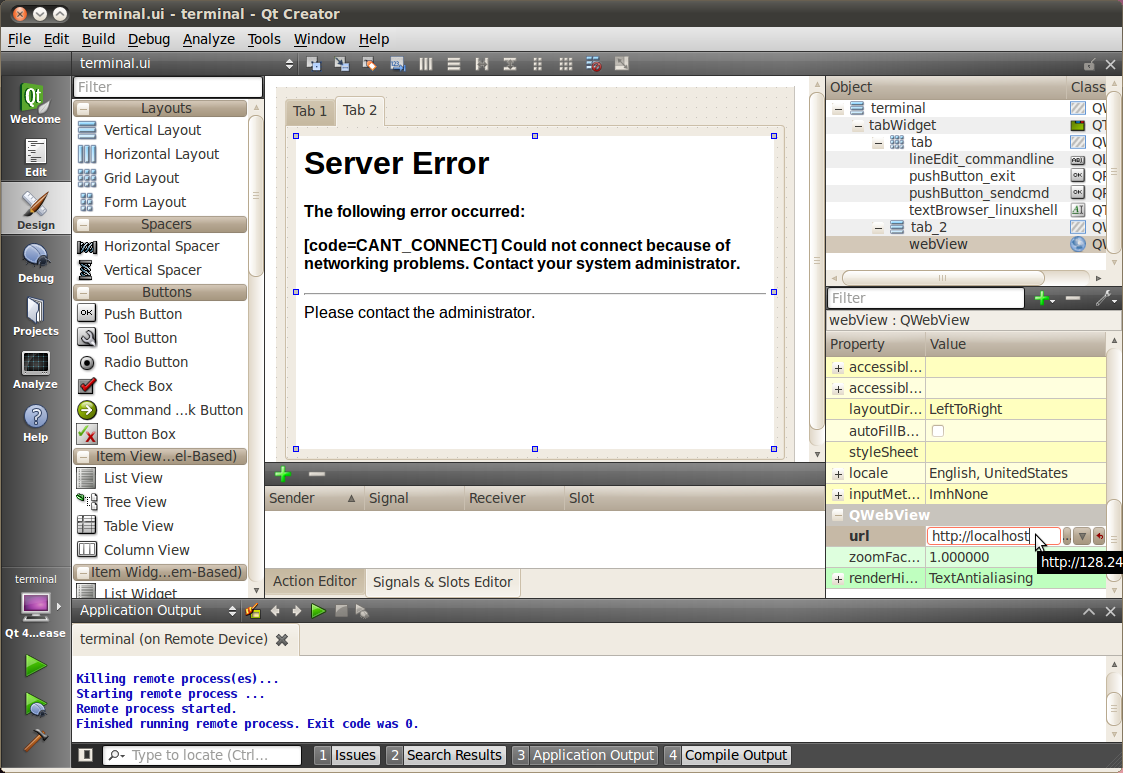

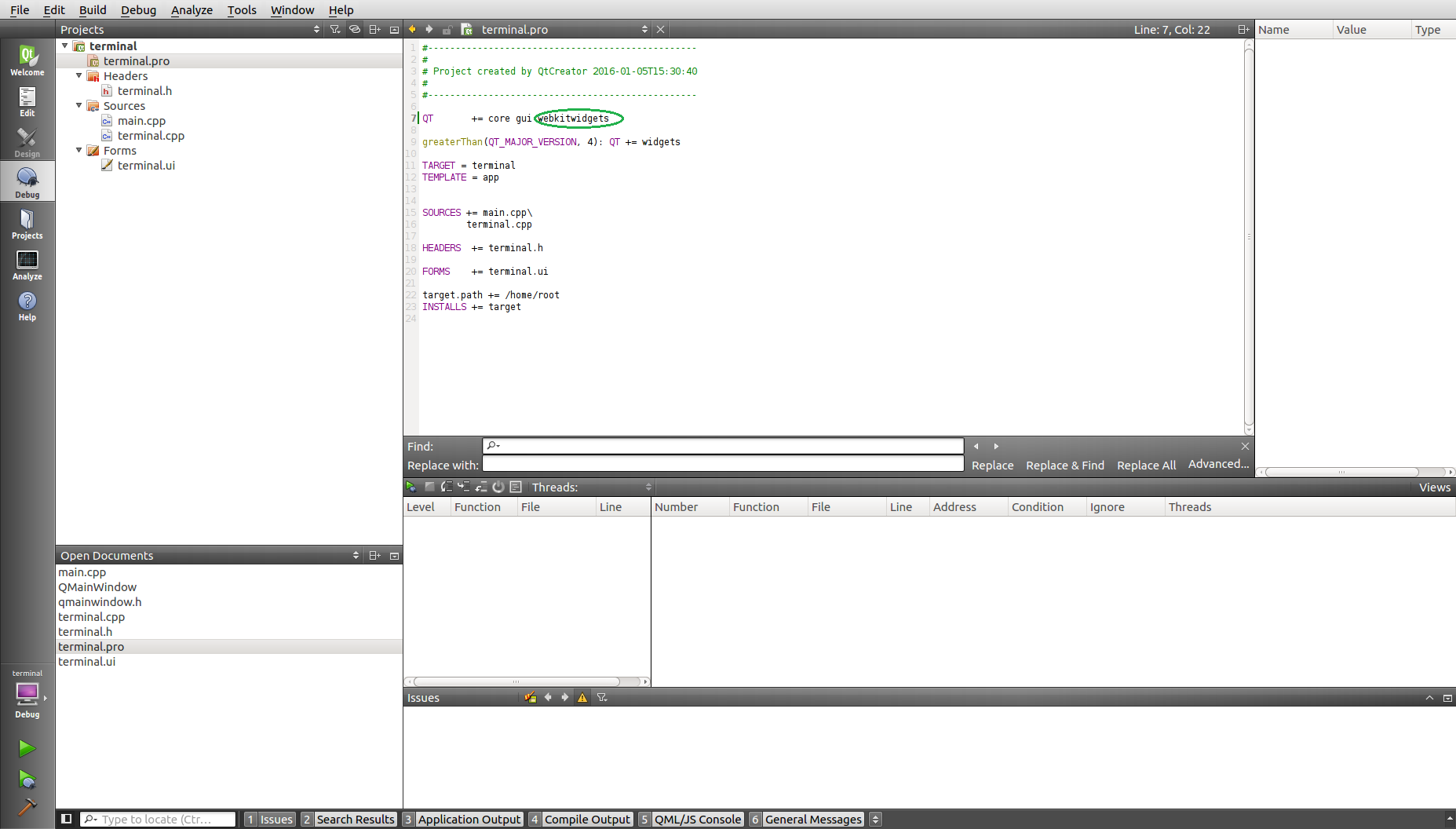

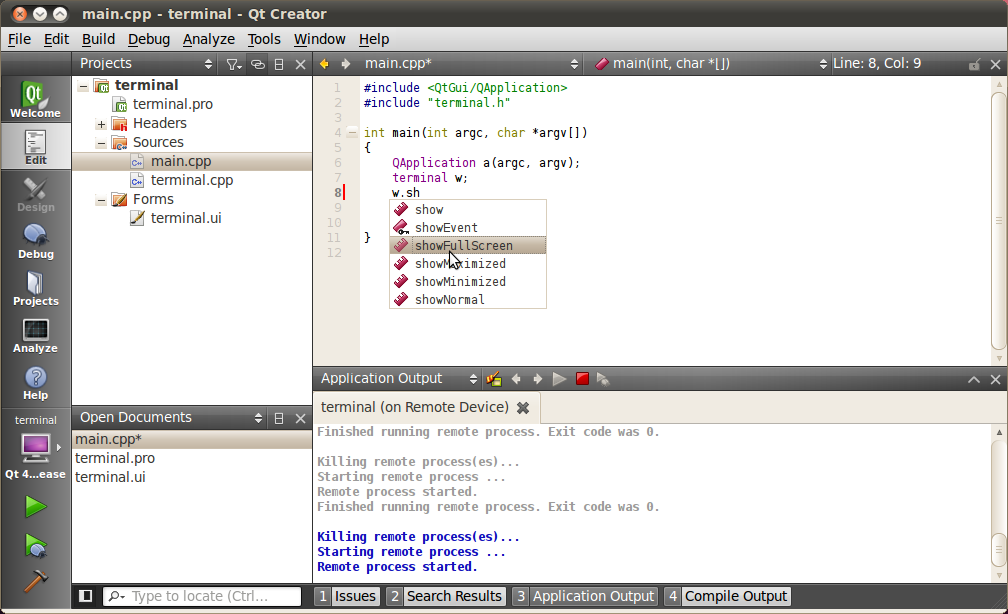

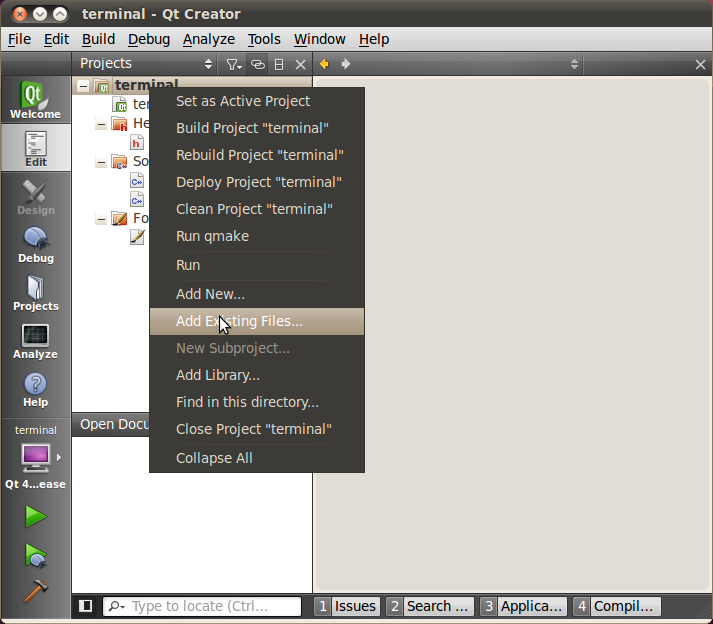

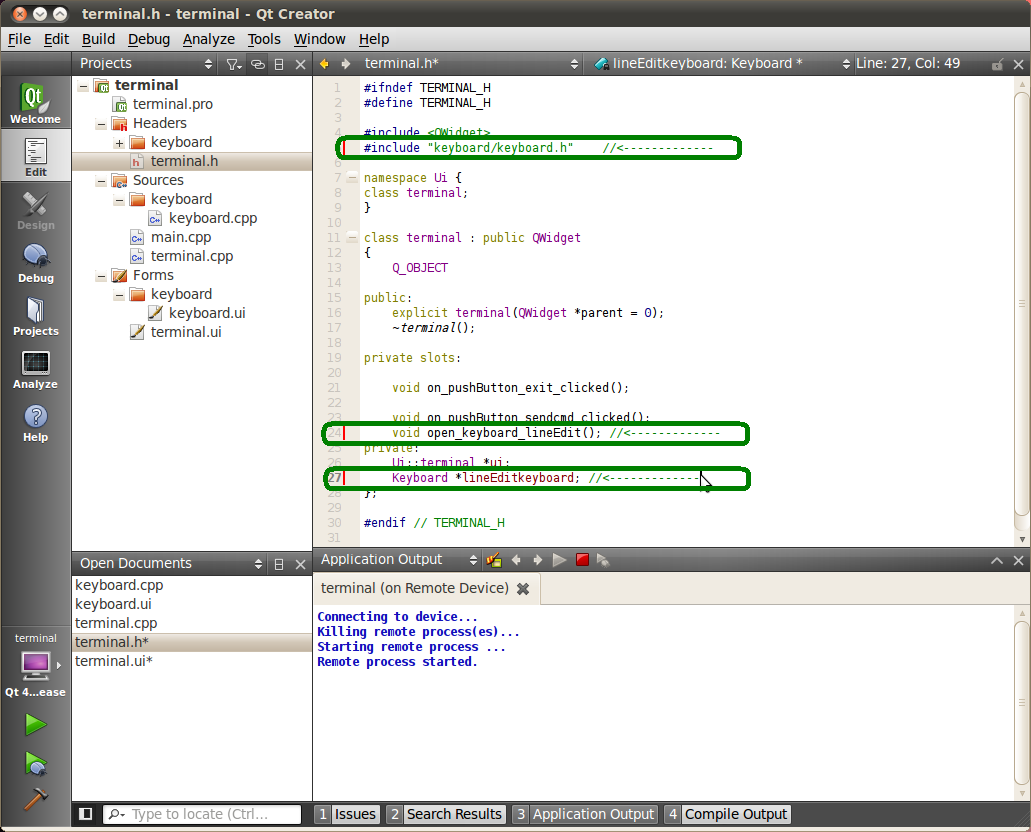

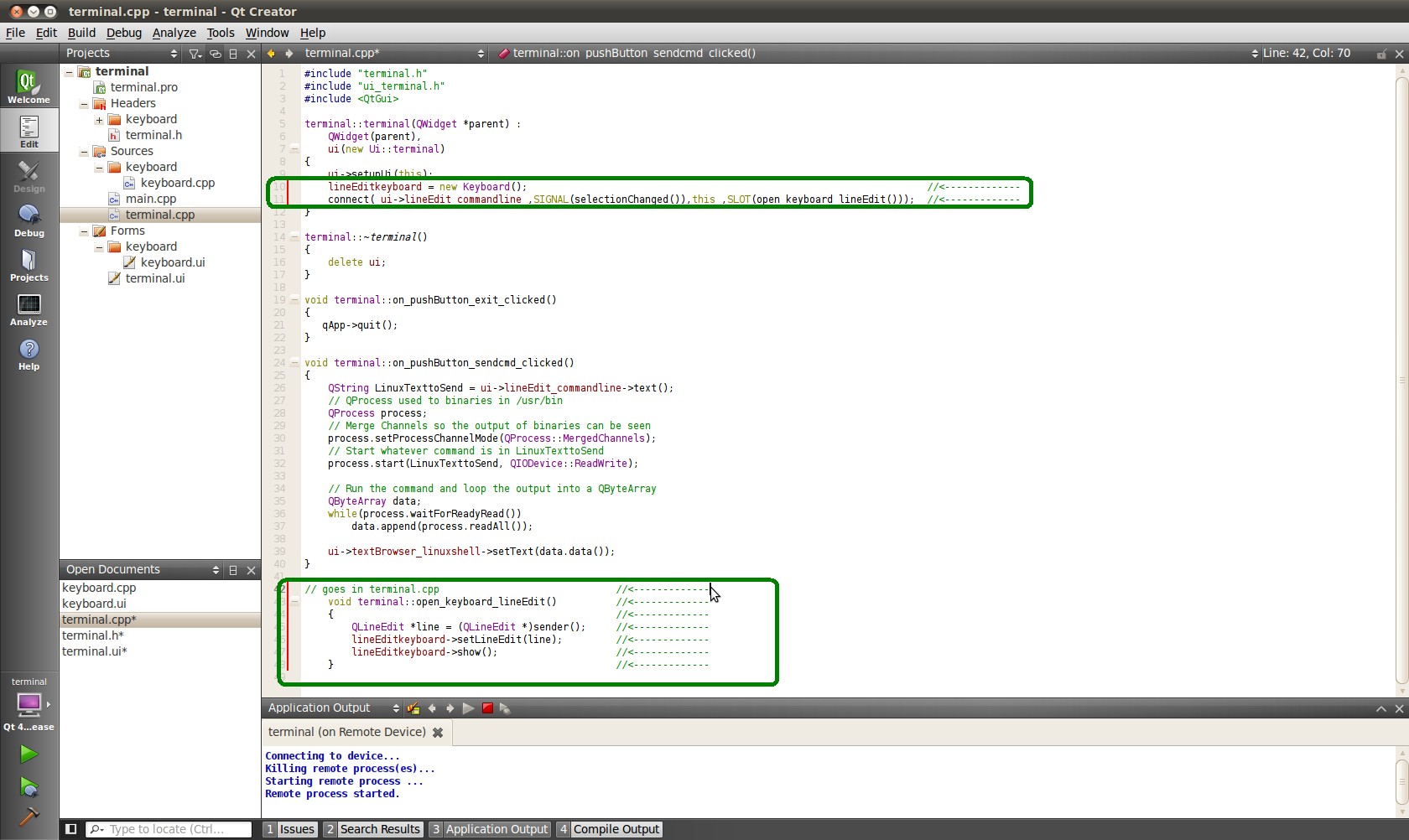

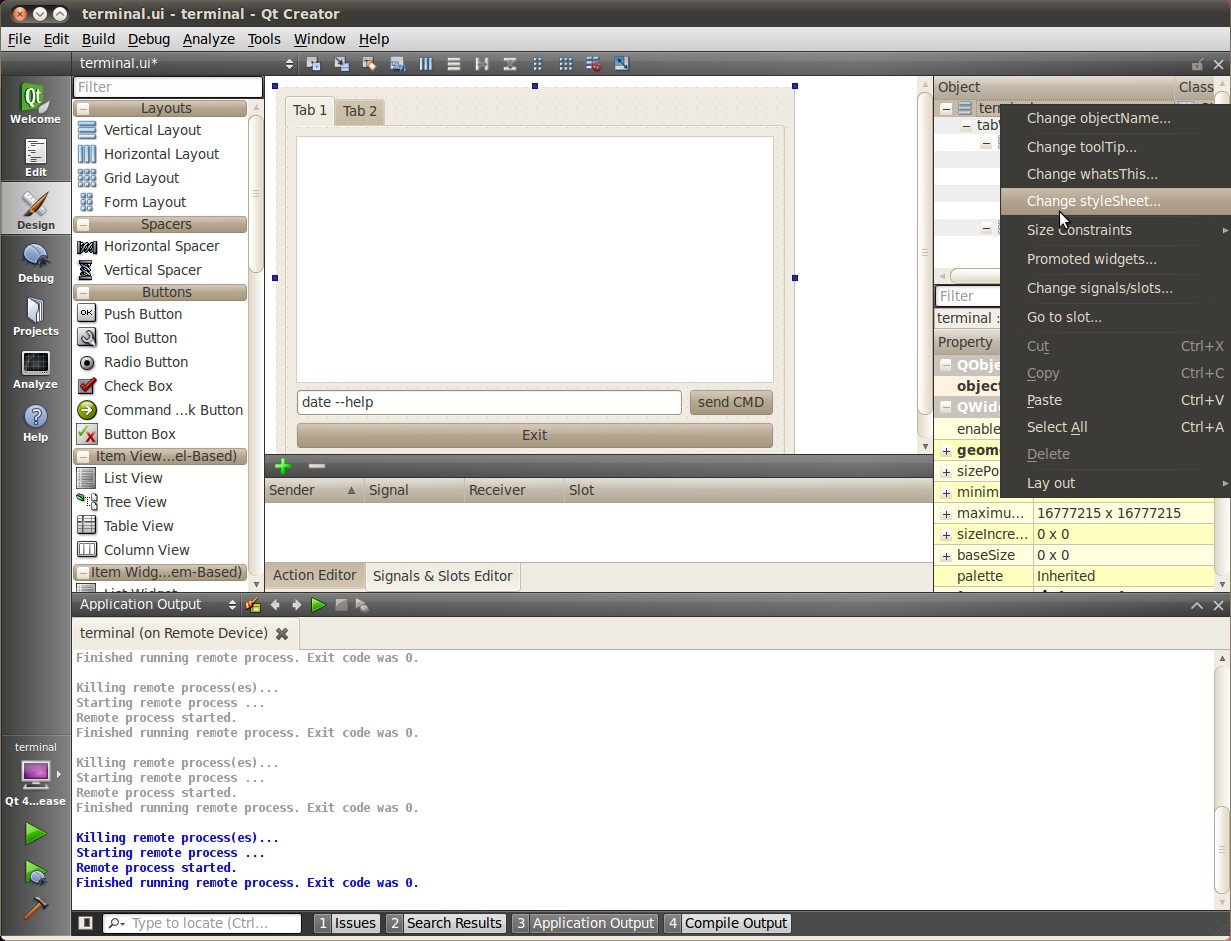

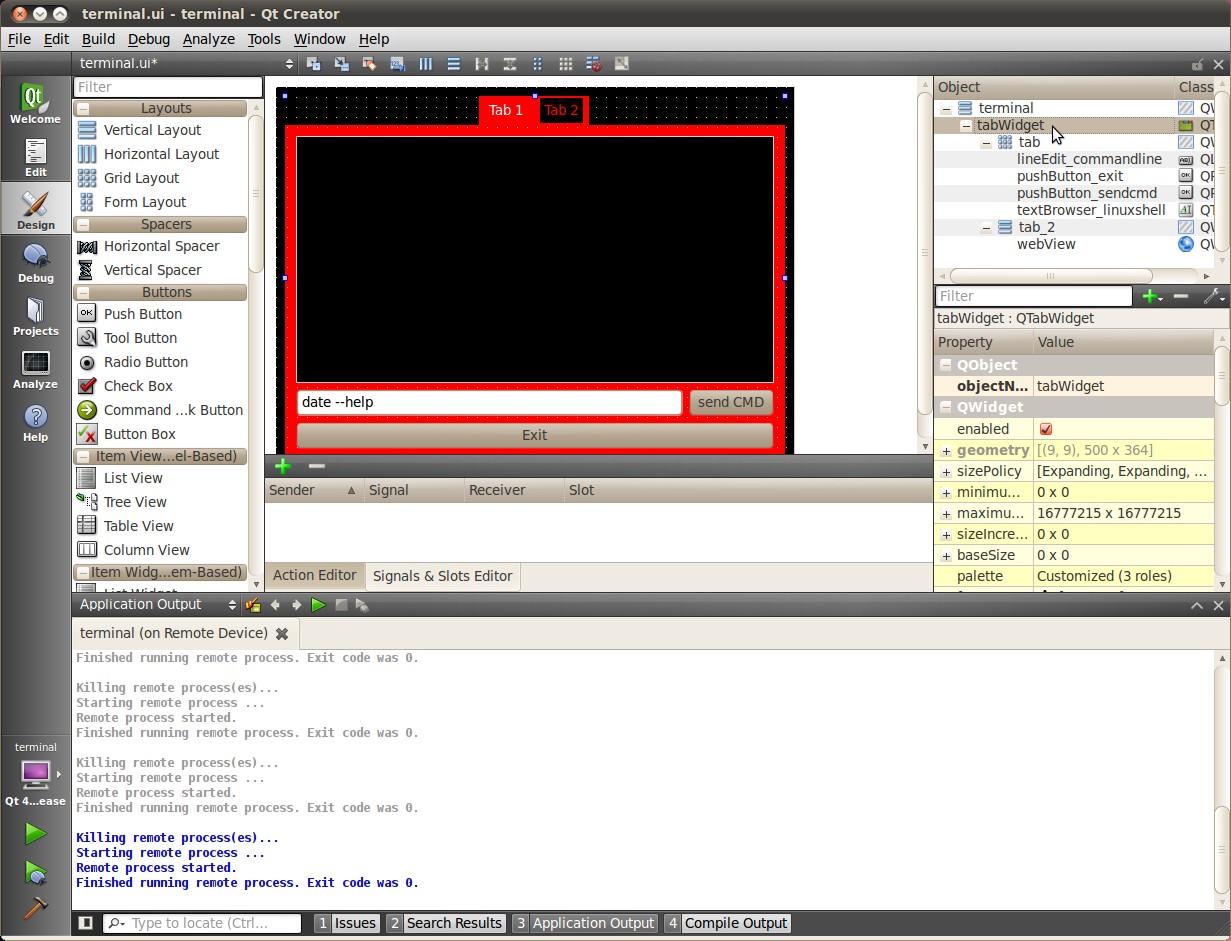

This lab gives you a hands-on tutorial on QT, the GUI development tool included as a component of the Sitara SDK. Each of the following sections walks you through a particular lab exercise, the key points to take away, and the step-by-step instructions to complete the lab. The labs in this section use both a command-line build approach and an IDE approach using QT Creator.

Training: The necessary code segments are embedded in this article. You can copy and paste them from the wiki as needed.

Hands-On Session: The VMware image contains cheater files in /home/sitara/QT_Lab. You can copy and paste sections of code from these files rather than typing them in. A copy is also available as File:QT Lab.tar.gz (right-click and select “Save Target As”).

NOTE: In this guide, the commands to be executed in each step are marked in BOLD.

Lab Configuration

The following are the hardware and software configurations for this lab. The steps in this lab are written against this configuration. The concepts of the lab will apply to other configurations but will need to be adapted accordingly.

Hardware

AM335x EVM-SK (TMDSSK3358)

NOTE: All Sitara boards with an LCD display or HDMI/DVI output can be used for these labs, but the steps below related to serial and Ethernet connections may differ.

Router connecting AM335x EVM-SK and Linux Host

USB cable connection between AM335x EVM-SK and Linux Host using the micro-USB connector (J3/USB0)

NOTE: The AM335x EVM uses a standard DB9 connector and serial cable. Newer Windows 7 based host PCs may require a USB-to-serial cable, since many newer laptops do not have serial ports. Because a serial connection is not required for these labs, you can also telnet or ssh into the target over Ethernet instead of using a serial terminal.

5V power supply (typically provided with the AM335x EVM-SK)

Software

- A Linux host PC configured as per the Linux Host Configuration page

- **PLEASE NOTE:** The Linux Host Configuration page is currently under revision. Download Ubuntu 14.04 rather than Ubuntu 12.04 from https://releases.ubuntu.com/14.04/

- Sitara Linux SDK installed. This lab assumes the latest Sitara Linux SDK is installed in /home/sitara. If you use a different location, modify the steps below accordingly.

- SD card with Sitara Linux SDK installed.

- For help creating a 2-partition SD card with the SDK content, see the create_sdcard.sh script page

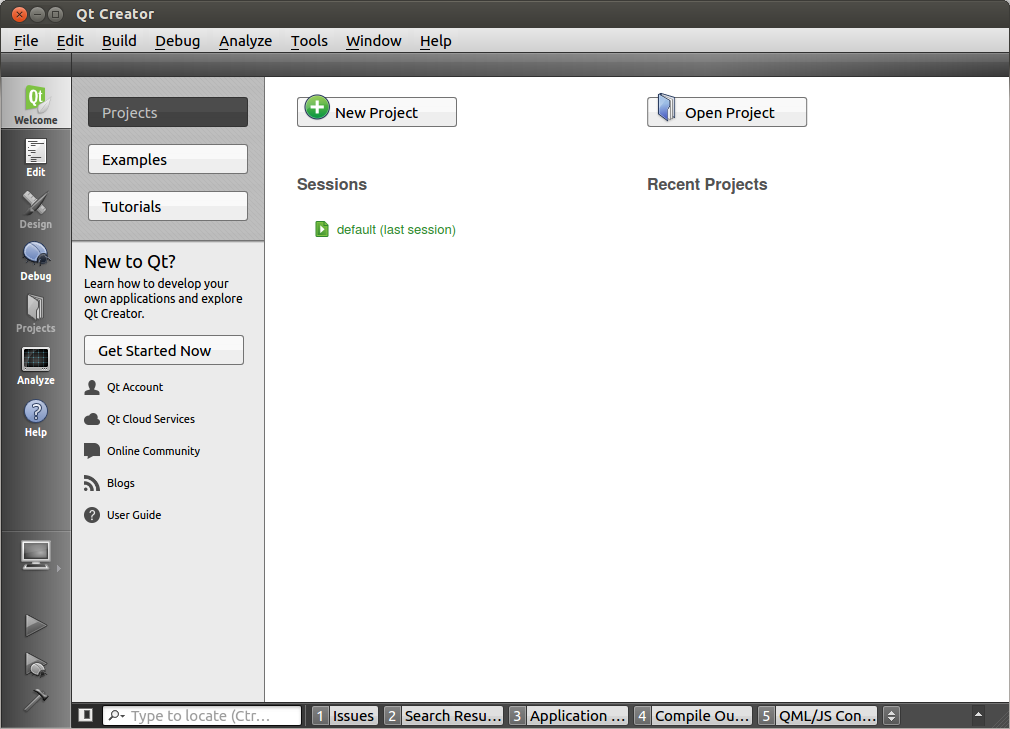

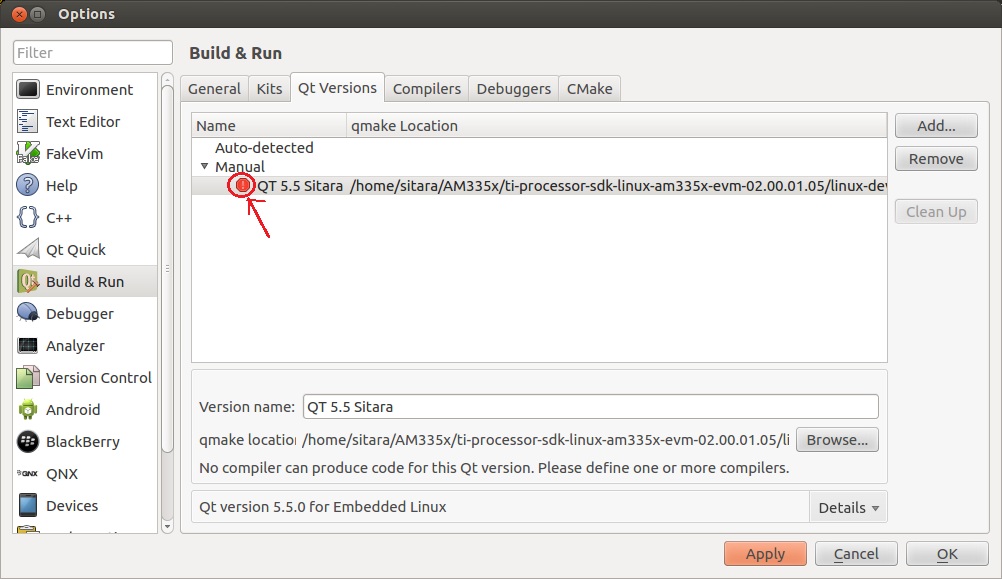

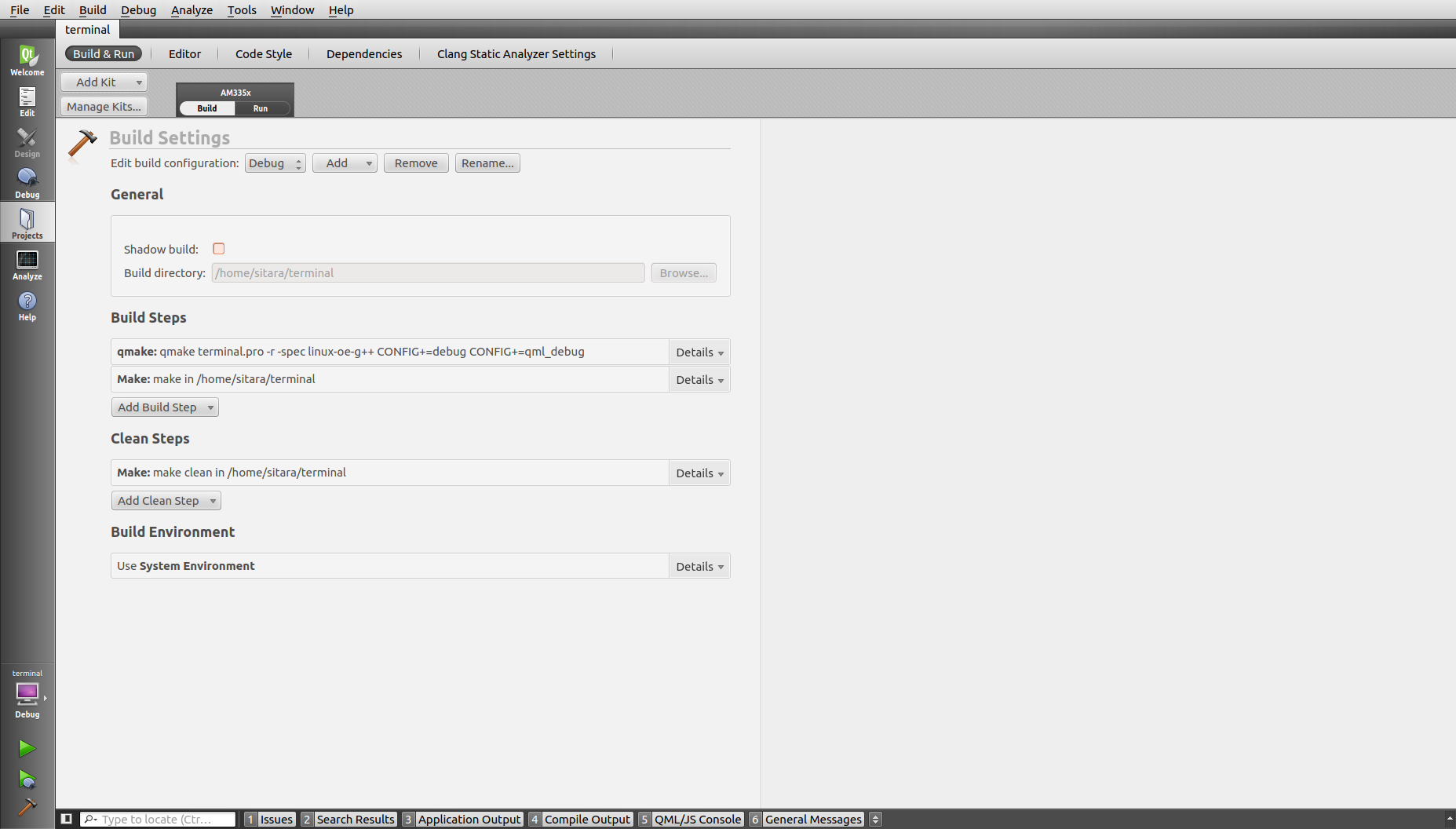

- Qt 5.7.0 (which includes Qt Creator) installed on your Linux host.

- You can download Qt Creator as part of the open-source distribution of Qt at https://download.qt.io/official_releases/qt/5.7/5.7.0/

- Qt downloads as a .run file. Make the file executable with chmod +x <qtfile>, then run ./<qtfile>. These steps launch the Qt installer.

- After the installer extracts the package, Qt Creator will be under the Tools/Qt5.7.0 folder. In some cases it may instead be under /opt; run cd /opt/Qt5.7.0 to check.

- The labs on this wiki page were validated with Ubuntu 14.04 on a 64-bit machine.

Supported Platforms and revisions

The following platforms are system tested to verify proper operation.

- am335x

- am37x

- am35x

- am180x

- BeagleBoard XM

This version of the lab has been validated on ti-processor-sdk version 02.00.01.07 using QT Creator 3.6.0.

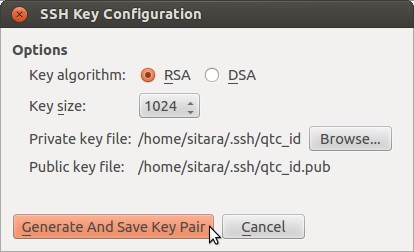

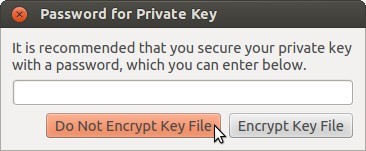

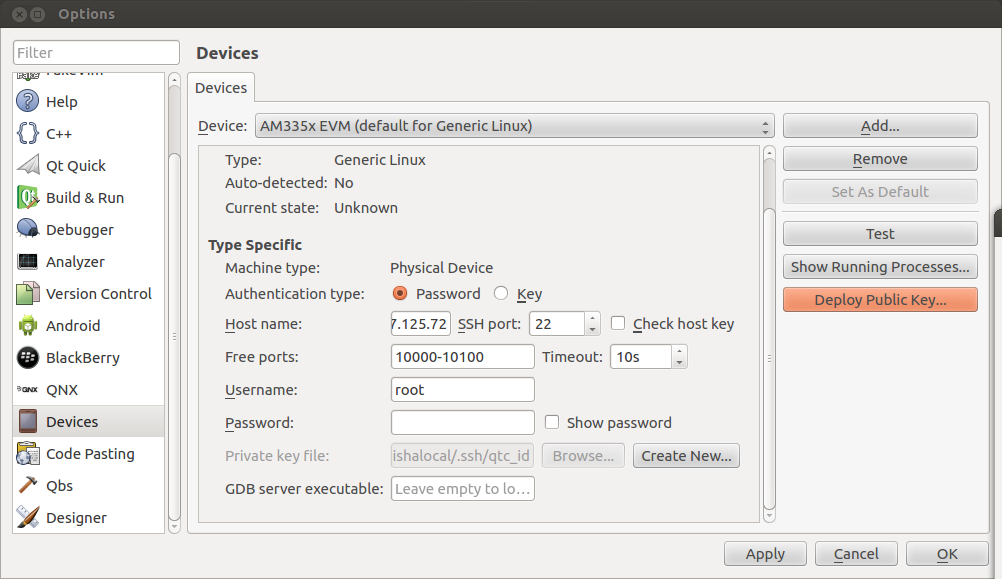

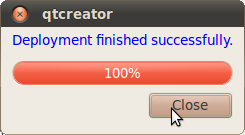

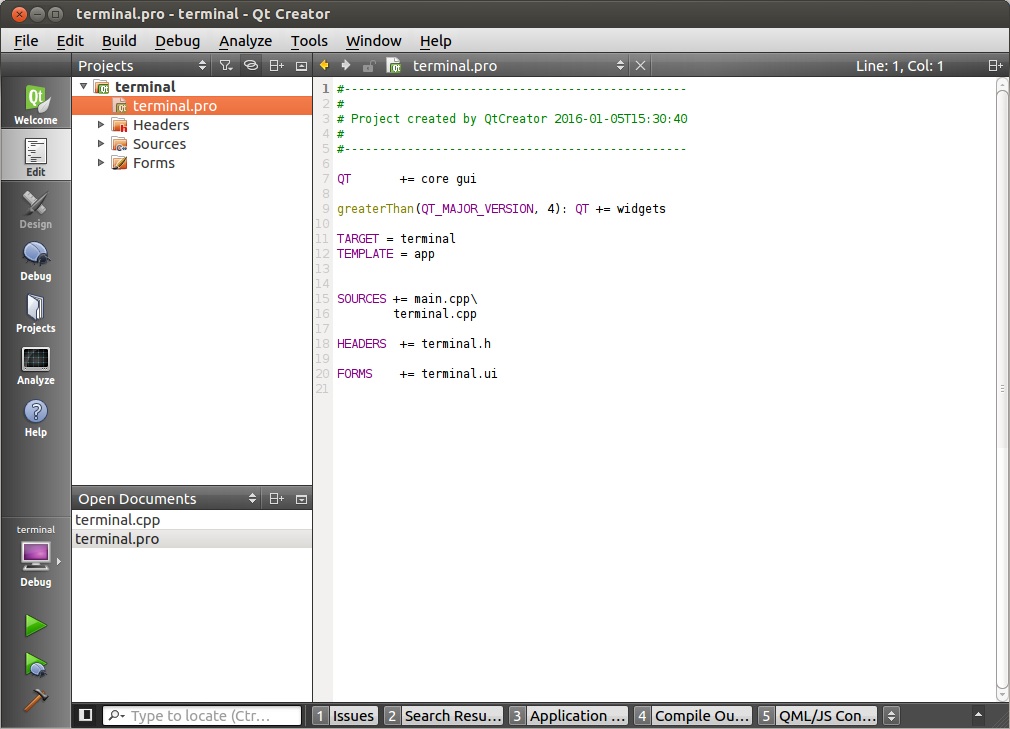

LAB 1: Hello World Command Line

Description

This lab is optional. It introduces where to find the QT components and build tools in the Sitara SDK. Approximate time to complete this lab: 15 minutes. This section covers the following topics:

- Introduction to build tools

- Environment setup script

- The QT component of the Sitara SDK

- Where to find things in the Sitara SDK

Key Points

- Where in the SDK to find the build tools

- Where in the SDK to find the QT components

- How to set up your build environment

- How to utilize the above points to create a Hello World application.

Lab Steps

Connect the cables to the EVM. For details on where to connect these cables see the Quick Start Guide that came with your EVM.

- Connect the Serial cable to provide access to the console.

- Connect the network cable

- Insert the SD card into the SD connector

- Insert the power cable into the 5V power jack

Power on the EVM and allow the boot process to finish. The boot process has finished when the Matrix application launcher appears on the LCD screen.

NOTE: You may be asked to calibrate the touchscreen. If so, follow the on-screen instructions.

Open a terminal window on your Linux host by double-clicking the Terminal icon on the desktop.

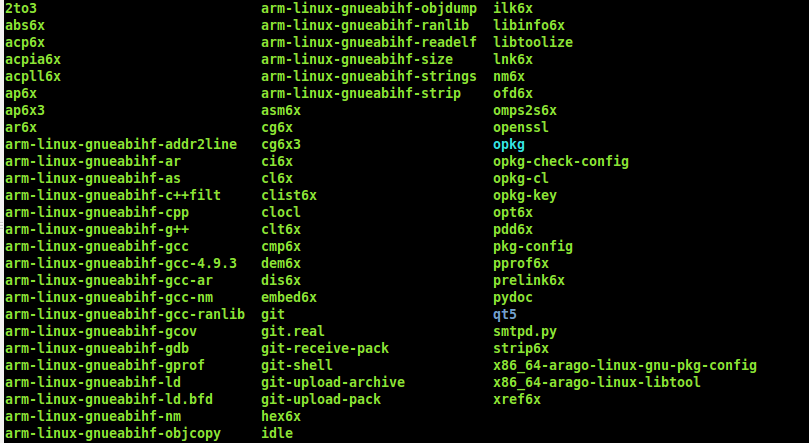

The cross-compiler is located in the linux-devkit/sysroots/x86_64-arago-linux/usr/bin directory of the SDK installation directory. In the terminal window, enter the following commands, replacing the <machine> and <sdk version> fields with your target machine and the installed SDK version.

NOTE: You can use Tab completion to help with this.

- cd /home/sitara/AM335x/ti-processor-sdk-linux-<machine>-<sdk version>/linux-devkit/sysroots/x86_64-arago-linux/usr/bin

- ls

You should see a listing of the cross-compile tools available like the one below.

- To locate the pre-built ARM libraries perform the following commands:

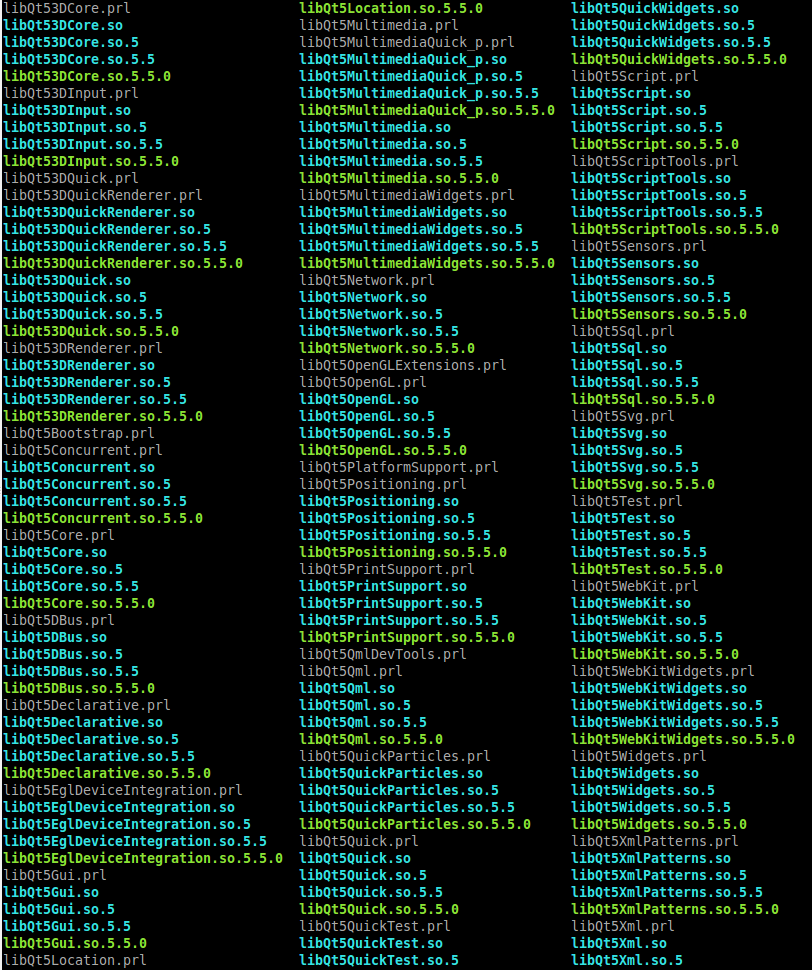

- cd /home/sitara/AM335x/ti-processor-sdk-linux-<machine>-<sdk version>/linux-devkit/sysroots/cortexa8hf-vfp-neon-linux-gnueabi/usr/lib

- ls

- You should now see a listing of all the libraries (some are contained within their individual sub-directories) available as pre-built packages within the SDK.

- Now list only the QT libraries in the same directory by listing all libraries whose names start with libQt.

- ls libQt*

- You should see a listing of QT related libraries that can be used to build and run QT projects.

- You can also find out where the QT header files are located. The directory below contains subdirectories full of QT header files.

- cd /home/sitara/AM335x/ti-processor-sdk-linux-<machine>-<sdk version>/linux-devkit/sysroots/cortexa8hf-vfp-neon-linux-gnueabi/usr/include/qt5

- ls

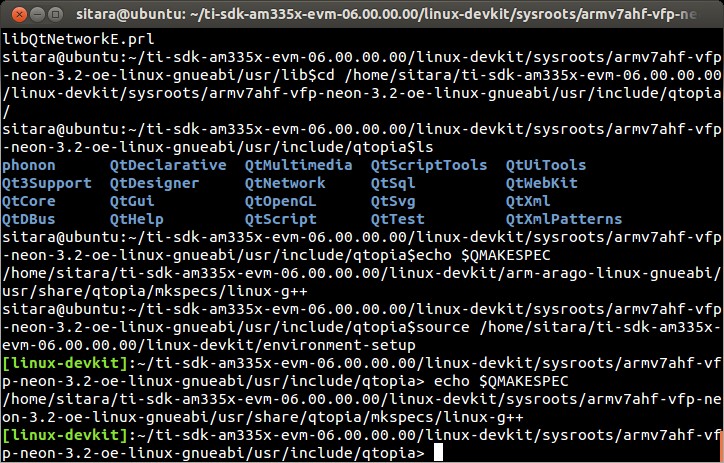

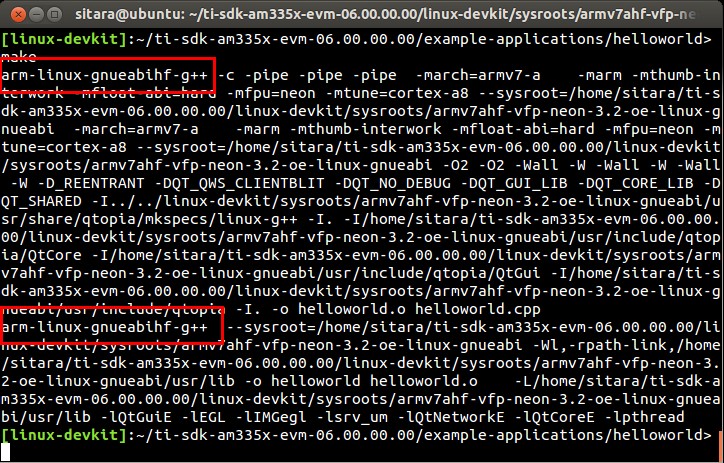

- To make cross-compilation easier, and to ensure that you link against the proper cross-compiled libraries instead of the host system libraries, the SDK provides the environment-setup script in the linux-devkit directory. This script configures many standard variables, such as CC, to use the cross-compile toolchain; it also adds the toolchain to your PATH and configures paths for library locations. To use the settings provided by the environment-setup script, you need to source the script. Run the following commands to source the environment-setup script and observe the change in the QMAKESPEC variable:

- echo $QMAKESPEC

- source /home/sitara/AM335x/ti-processor-sdk-linux-<machine>-<sdk version>/linux-devkit/environment-setup

- echo $QMAKESPEC

- You should see the changes that were applied by executing the setup script.

You should observe that the QMAKESPEC variable now contains the path to the QMAKESPEC files. Additionally, the cross-compile tools were added to your PATH. Your prompt also changed from sitara@ubuntu to [linux-devkit], which makes it easy to identify when the environment-setup script has been sourced. This matters because there are times when you DO NOT want to source the environment-setup script. A perfect example is building the Linux kernel. During the kernel build, some applications are compiled that are meant to run on the host to assist in the build process. If the environment-setup script has been sourced, the standard CC variable causes these applications to be built for ARM, so they fail to execute on the x86 host system.
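To see exactly which variables sourcing changes, the sketch below compares the exported environment before and after sourcing a setup script, without altering the current shell. It is a minimal example that assumes you point it at the SDK's linux-devkit/environment-setup; the `env_changes` function name is hypothetical. Shell-only variables that are not exported, such as the prompt, may not appear in its output.

```shell
#!/usr/bin/env bash
# Print the exported NAME=VALUE pairs that a setup script adds or changes.
# The current shell is not modified: the script is sourced in a subshell.

# env_changes SETUP_SCRIPT
env_changes() {
  local setup=$1 before after
  before=$(mktemp)
  after=$(mktemp)
  env | sort > "$before"
  # ( ... ) is a subshell, so nothing sourced here leaks into the caller.
  ( . "$setup" > /dev/null 2>&1; env | sort ) > "$after"
  # comm -13 keeps only lines unique to the second (post-source) snapshot.
  comm -13 "$before" "$after"
  rm -f "$before" "$after"
}
```

For example, `env_changes /home/sitara/AM335x/ti-processor-sdk-linux-<machine>-<sdk version>/linux-devkit/environment-setup | grep QMAKESPEC` shows the new QMAKESPEC value. Each printed line is either a new variable or the new value of an existing one, such as PATH.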

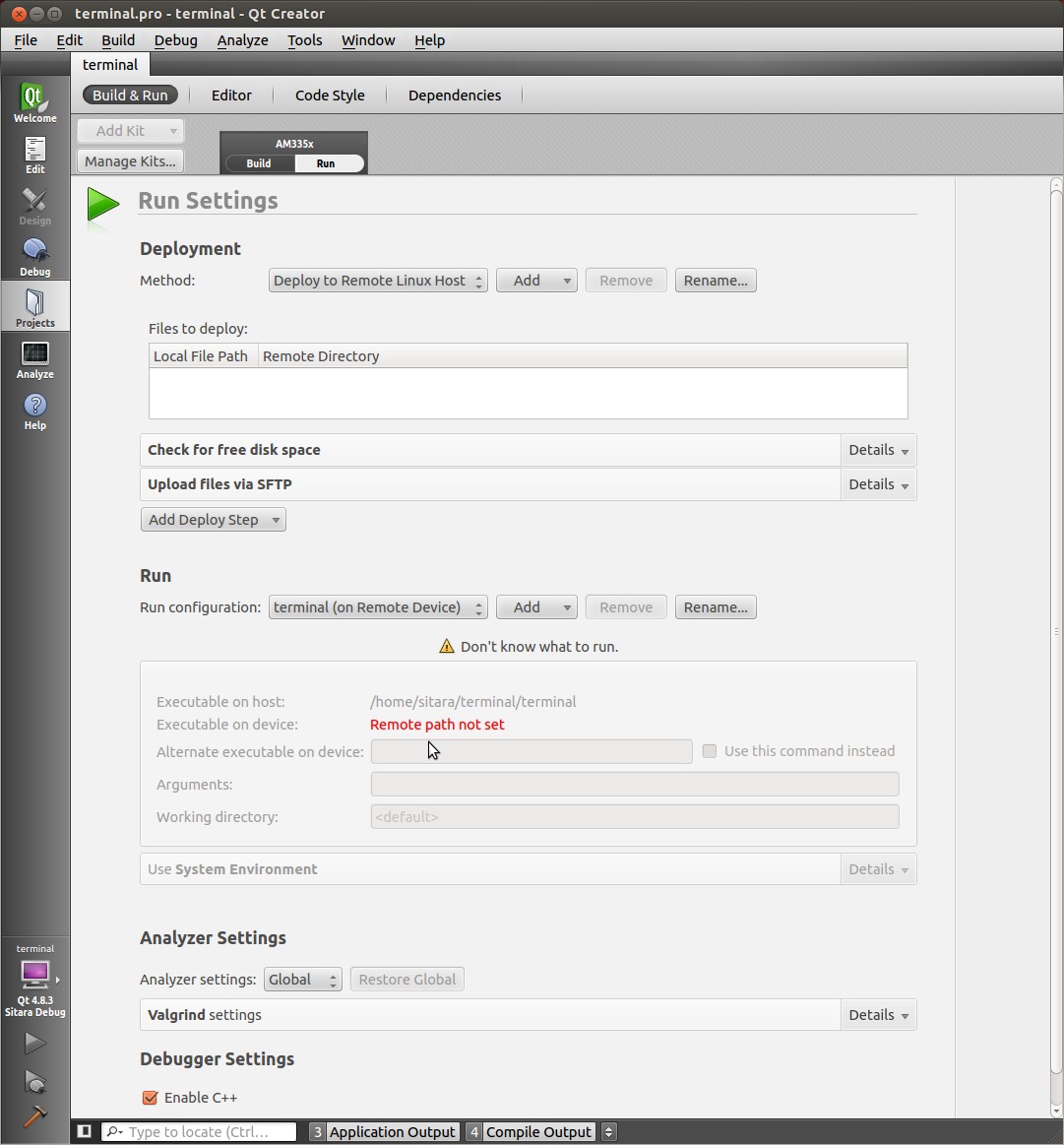

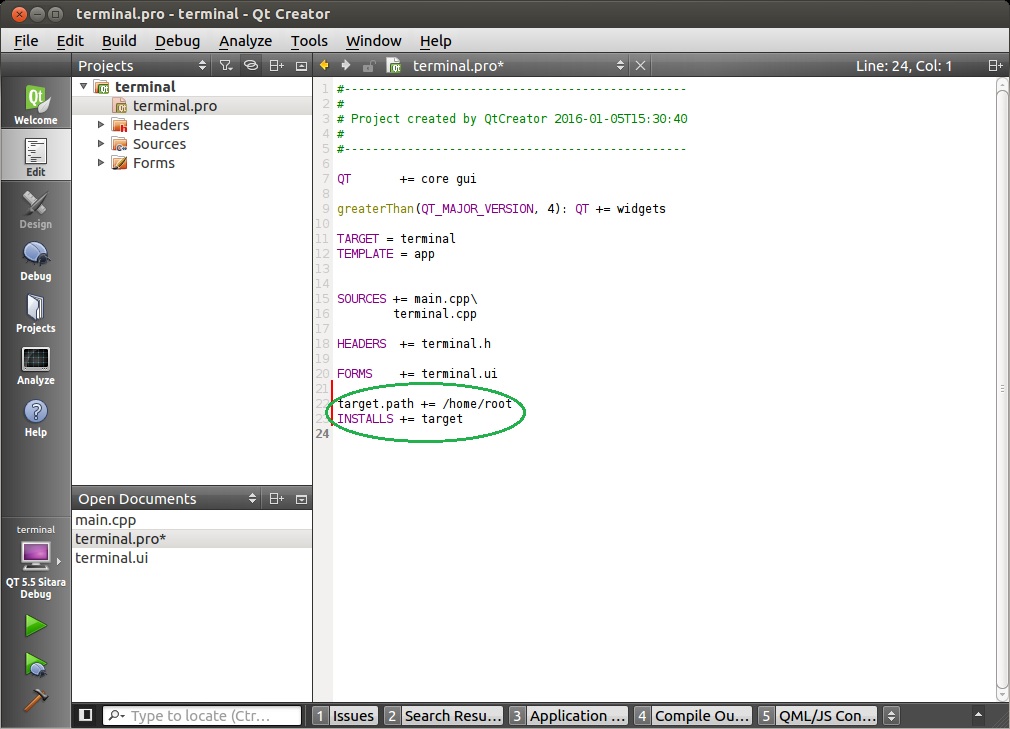

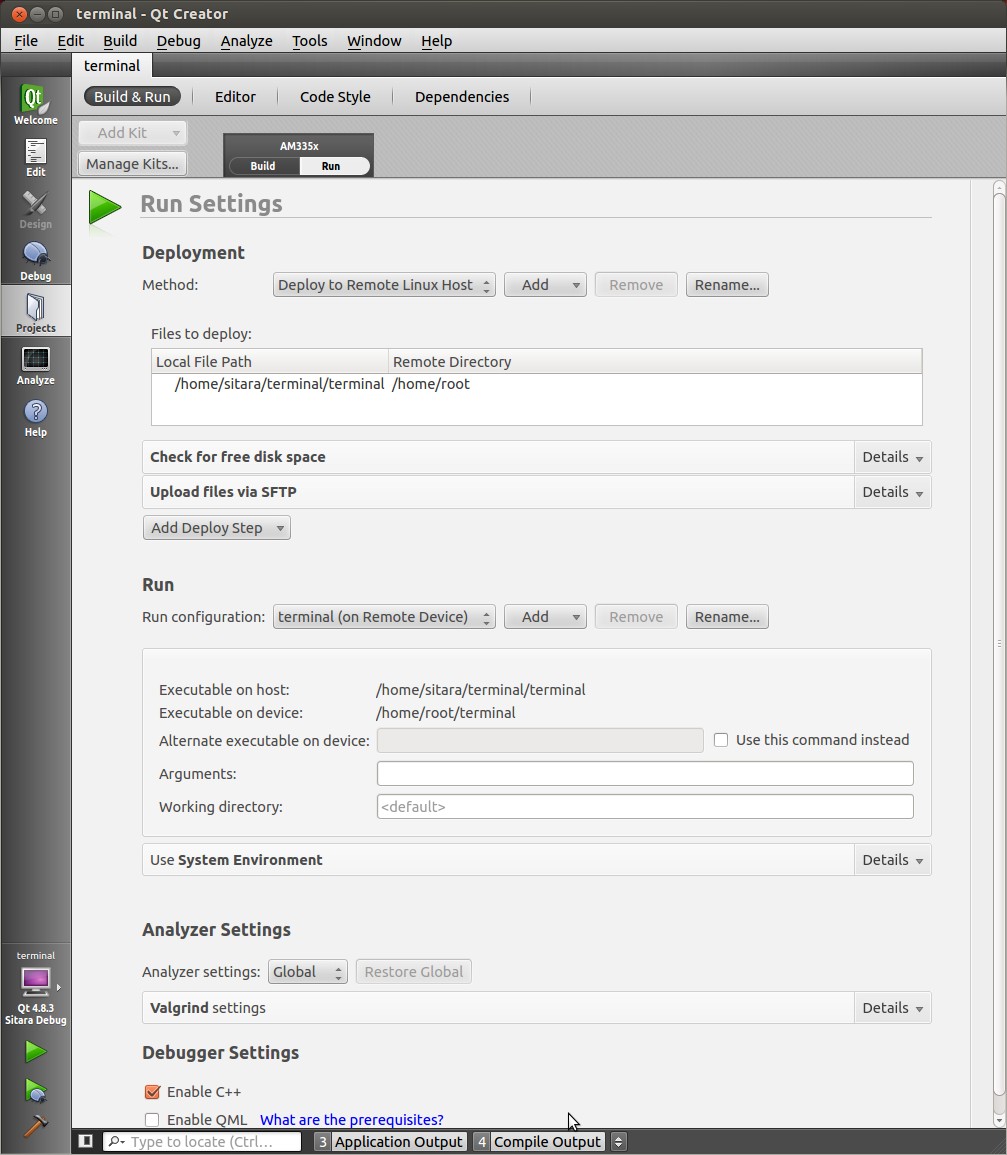

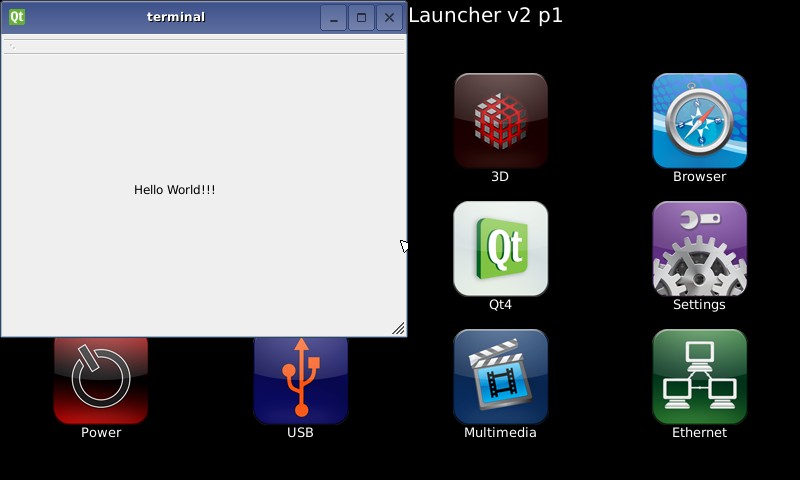

As mentioned above, sometimes it is not appropriate to source the environment-setup script, or you may want to source it only for a particular build without affecting your default environment. The SDK handles this by sourcing the environment-setup script inside the project Makefile, so that it is used only during the build process.
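A shell-level version of the same idea is to source the script in a subshell that exists for a single command only. The sketch below shows this under the assumption that the setup script only exports variables; `with_env` is a hypothetical helper, not something the SDK provides.

```shell
#!/usr/bin/env bash
# Run one command with a setup script sourced, leaving the calling
# shell's environment exactly as it was.

# with_env SETUP_SCRIPT COMMAND [ARGS...]
with_env() {
  local setup=$1
  shift
  # The parentheses create a subshell; any variables exported by the
  # sourced script disappear when the subshell exits.
  ( . "$setup" && "$@" )
}
```

For example, `with_env /home/sitara/AM335x/ti-processor-sdk-linux-<machine>-<sdk version>/linux-devkit/environment-setup make` builds a project with the cross toolchain, while a kernel build started later from the same terminal still sees the host defaults. Inside a Makefile, the equivalent is to put the `.` command and the build command on a single recipe line joined with `&&`, because GNU make runs each recipe line in its own /bin/sh shell by default, where `.` is the portable spelling of `source`.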