TI Deep Learning Product User Guide


TIDL is a comprehensive software product for accelerating Deep Neural Networks (DNNs) on TI's embedded devices. It supports heterogeneous execution of DNNs across Cortex-A based MPUs, TI's latest-generation C7x DSP, and TI's DNN accelerator (MMA). TIDL is released as part of TI's Software Development Kit (SDK), along with additional computer vision functions and optimized libraries, including OpenCV. TIDL is available on a variety of embedded devices from Texas Instruments, as shown below:

| Device | SDK |
|---|---|
| TDA4VM | Processor SDK RTOS, Processor SDK Linux for Edge AI |

TIDL is a fundamental software component of TI's Edge AI solution. TI's Edge AI solution simplifies the whole product life cycle of DNN development and deployment by providing a rich set of tools and optimized libraries. DNN-based product development requires two main streams of expertise:

TI's Edge AI solution provides the right set of tools for both of these categories:

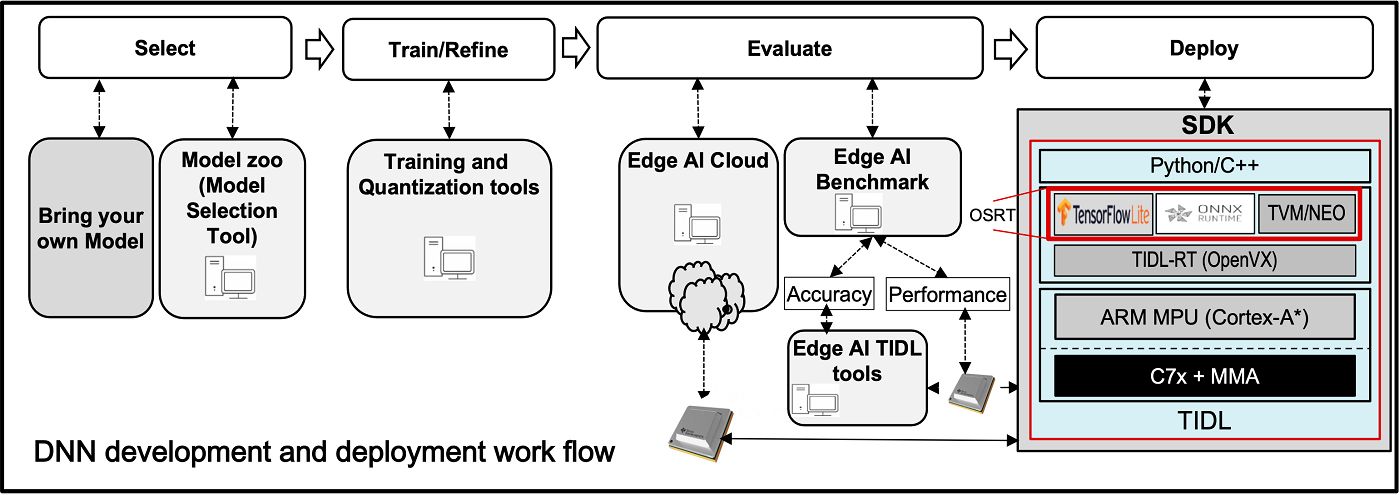

The figure below illustrates the workflow of DNN development and deployment on TI devices:

TIDL provides multiple deployment options with industry-defined inference engines, as listed below. These inference engines are referred to as Open Source Runtimes (OSRT) in this document.

TIDL also provides an OpenVX-based inference solution, referred to as TIDL-RT in this document. TIDL-RT supports execution of DNNs only on C7x-MMA, and the DNNs have to be constructed using the operators that TIDL-RT supports. OSRT uses TIDL-RT as part of its backend to offload subgraph(s) to C7x-MMA.
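The idea of offloading subgraphs can be illustrated with a minimal sketch. This is a toy model, not TIDL's actual partitioning logic: it assumes a purely linear operator sequence, and the operator names, the `ACCELERATED_OPS` set, and the `partition` helper are all hypothetical. It only shows how a runtime might group consecutive accelerator-supported operators into one subgraph and leave the rest on the CPU.

```python
from typing import List, Set, Tuple

# Hypothetical set of operators the accelerator backend can execute.
# The real supported-operator list is defined by TIDL-RT, not by this sketch.
ACCELERATED_OPS: Set[str] = {"Conv", "Relu", "MaxPool", "Add"}


def partition(ops: List[str],
              accelerated: Set[str] = ACCELERATED_OPS) -> List[Tuple[str, List[str]]]:
    """Group a linear operator sequence into maximal runs per execution target."""
    segments: List[Tuple[str, List[str]]] = []
    for op in ops:
        target = "accelerator" if op in accelerated else "cpu"
        if segments and segments[-1][0] == target:
            # Same target as the previous operator: extend the current subgraph.
            segments[-1][1].append(op)
        else:
            # Target changed: start a new subgraph.
            segments.append((target, [op]))
    return segments


if __name__ == "__main__":
    model = ["Conv", "Relu", "MaxPool", "NonMaxSuppression", "Conv", "Add"]
    for target, run in partition(model):
        print(target, run)
```

In this toy model, a network built entirely from supported operators yields a single accelerator subgraph, while each unsupported operator splits the graph and adds a hand-off between targets.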

We recommend that users use OSRT for a better user experience and richer coverage of neural networks. A comparison table with more criteria is provided below:

| Criteria | TIDL-RT | OSRT |
|---|---|---|
| Operator Coverage | ~40 accelerated operators | All the operators supported by TFLite and ONNX |
| Inference Speed | Best | Similar to TIDL-RT if entire DNN is offloaded to C7x-MMA |
| Application Interface | C/C++ | C/C++ and Python |
| Ease of Use | Good | Better than TIDL-RT due to (A) Higher operator coverage (B) Industry standard APIs (C) Python support |
| Portability | Portable to any TI SOC with TIDL product support | TI SOC, non TI SOC with OSRT support enabled |
| Operating system | Linux (OOB offering), Minimal dependency on other HLOS so easy to migrate | Linux (OOB offering), Requires porting of open source run time engines (e.g. TFLiteRT, ONNXRT etc) to 3P OS from OS vendor |
| Safety | Suitable | Depends on (A) Proven in use for OSRT components and (B) Availability of these components by 3P OS vendors for safe OS |

** TDA4VM has a Cortex-A72 as its MPU. Refer to the device TRM to find out which Cortex-A MPU a device contains.