TI Deep Learning Product User Guide

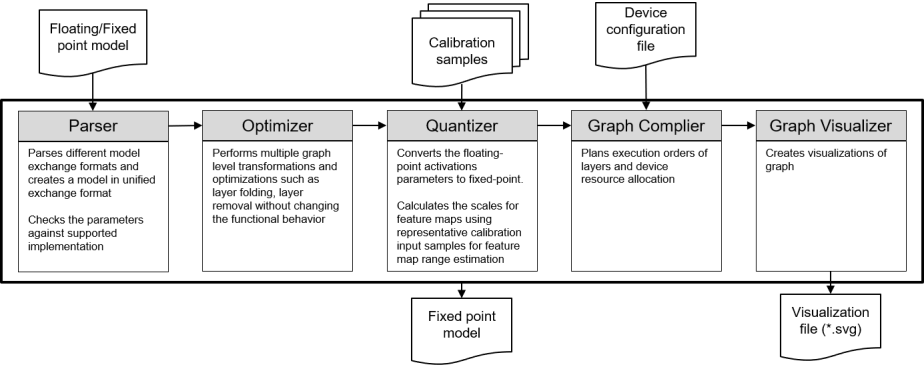

The TIDL-RT import (translation) tool accepts a pre-trained floating-point or fixed-point model trained with BVLC Caffe, caffe-jacinto, TensorFlow, or TFLite, or a model exported to ONNX format. Internally, it runs several processing steps and produces a model that can be run on the TIDL-RT inference engine.

The figure above shows the key processing blocks of this tool and their flow.

The tool is available in "ti_dl/utils/tidlModelImport/out" and can be used to import user-trained models with the command below:

Note: Run the import tool only from the "ti_dl/utils/tidlModelImport" directory. We recommend using file paths as given in the example import configuration file. If you face any issues with file paths, try absolute paths.

Note: The trailing options override parameters in the import configuration file. In the example above, resizeWidth and resizeHeight may have been 128 and 128, respectively, in the configuration file (tidl_import_jacintonet11v2.txt), but with the override options the import tool runs with the values given in the command.
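
The override behavior described in the note can be pictured as a simple merge in which command-line values take precedence over values from the file. The sketch below is only an illustration: `apply_overrides` is a hypothetical helper, the `--name value` option syntax is an assumption, and the override values (256) are made up for the example. It is not the importer's actual parsing code.

```python
def apply_overrides(config, trailing_args):
    """Return a copy of config with '--name value' pairs applied on top."""
    if len(trailing_args) % 2 != 0:
        raise ValueError("overrides must come in '--name value' pairs")
    merged = dict(config)  # leave the values read from the file untouched
    for flag, value in zip(trailing_args[0::2], trailing_args[1::2]):
        if not flag.startswith("--"):
            raise ValueError("expected an option starting with '--', got " + repr(flag))
        merged[flag[2:]] = value  # the command-line value wins over the file value
    return merged


# Values as they might appear in the configuration file (128 x 128, as in the note).
file_config = {"modelType": "0", "resizeWidth": "128", "resizeHeight": "128"}

# Hypothetical trailing options; the real command line may use other values.
overrides = ["--resizeWidth", "256", "--resizeHeight", "256"]

effective = apply_overrides(file_config, overrides)
print(effective)
```

Parameters that are not named in the trailing options (here `modelType`) keep the value from the configuration file.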

Proto files from the versions below are used for validating pre-trained models. In most cases, models from newer versions should also work, since basic operations such as convolution and pooling do not change. If required, see TIDL-RT Importer Design for more information on migrating to the latest version.

Since TensorFlow 2.0 plans to drop support for frozen buffers, we recommend that users migrate to the TFLite model format for TensorFlow 1.x.x as well. The TFLite model format is supported in both TF 1.x.x and TF 2.x.

Fixed-point models are supported only for TFLite, and they still need calibration images as input.

The TIDL-RT importer accepts multiple configuration parameters, all of which can be supplied via a configuration file; see the examples on this page. Most of the parameters are optional and intended for advanced users. This section explains the purpose of each parameter in logically grouped subsections; however, the importer accepts all of them via a single file.

| Parameter | Default | Description |

|---|---|---|

| modelType | 0: Caffe | Integer (0, 1, 2 or 3) indicating which model type is being imported. The following types are currently supported: 0: Caffe (.caffemodel and .prototxt files); 1: TensorFlow (.pb files); 2: ONNX (.onnx files); 3: TFLite (.tflite files) |

| inputNetFile | MUST SET | Path to the neural network file from the training framework, for example "deploy.prototxt" from Caffe or the frozen binary protobuf with parameters from TensorFlow |

| inputParamsFile | MUST SET if Caffe model | Path to neural network parameter file (caffemodel) from BVLC caffe training framework. Not applicable for TensorFlow, TFLite and ONNX |

| outputNetFile | MUST SET | Path to the neural network file produced by the TIDL-RT importer, containing the network structure and parameters |

| outputParamsFile | MUST SET | Path to the neural network input and output buffer descriptor file produced by the TIDL-RT importer. Refer to TIDL-RT: Input and Output Tensors Format for more details |

| numParamBits | 8: 8-bit | Bit depth for model parameters such as kernel coefficients and bias. The following values are currently supported: 8: 8-bit fixed-point; 16: 16-bit fixed-point; 32: 32-bit floating-point. If this value is set to 32, TIDL-RT runs inference in floating point. This feature is supported only in host emulation mode; models imported with numParamBits = 32 cannot be run on the development board. |

| numFeatureBits | 8: 8-bit | Bit depth for the feature maps of the DNN. The following values are currently supported: 8: 8-bit fixed-point; 16: 16-bit fixed-point |

| quantizationStyle | 2: TIDL_QuantStyleNP2Fixed | Quantization method used by the quantization module of the TIDL importer. The following values are currently supported: 0: TIDL_QuantStyleFixed; 1: TIDL_QuantStyleDynamic; 2: TIDL_QuantStyleNP2Fixed; 3: TIDL_QuantStyleP2Dynamic. Refer to the TIDL-RT API Guide for more details |

| inQuantFactor | 1 | List of scale factors, one per input tensor. If the input range used in training is [0:1] and the same input is passed to TIDL-RT with a tensor range of [0:255], this parameter shall be 255. It shall not be set in the config file when inDataNorm is 1 |

| inElementType | 0 (if numFeatureBits == 8), 2 (if numFeatureBits > 8) | List of element types, one per input tensor. Refer to TIDL Element Type in the TIDL-RT API Guide for more details. Supported values are 0: 8-bit unsigned, 1: 8-bit signed, 2: 16-bit unsigned, 3: 16-bit signed, 6: single-precision float |

| inWidth | | List of widths, one per input tensor. If set, this value overrides the width from the neural net. If not set, the importer uses the original size from the neural network. Note: For reliable behavior, the neural net is expected to support resolutions other than the one it was trained for |

| inHeight | | List of heights, one per input tensor. If set, this value overrides the height from the neural net. If not set, the importer uses the original size from the neural network. Note: For reliable behavior, the neural net is expected to support resolutions other than the one it was trained for |

| inNumChannels | | List of channel counts, one per input tensor. If set, this value overrides the number of channels from the neural net. If not set, the importer uses the original size from the neural network. Note: For reliable behavior, the neural net is expected to support resolutions other than the one it was trained for |

| numBatches | 1 | Number of batches to be processed together; it can take values >= 1. This parameter can improve throughput at the cost of higher latency. Note: Use this parameter carefully, as a higher number of batches does not always yield higher throughput |

| inDataNamesList | | List of input tensor names in the network to be imported (use space, comma or tab as separator). It is useful for importing a portion of the network. If not set, the importer searches the input net file for the default inputs. |

| outDataNamesList | | List of output tensor names in the network to be imported (use space, comma or tab as separator). It is useful for importing a portion of the network. If not set, the importer searches the input net file for the default outputs. |
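
As a rough illustration of how the rules in this table interact, the following sketch fills in the documented defaults and reports missing mandatory parameters. It is a hypothetical helper written for this page, not part of the importer; the function name `check_import_config` and the output file names are assumptions made for the example.

```python
SUPPORTED_MODEL_TYPES = {0: "Caffe", 1: "TensorFlow", 2: "ONNX", 3: "TFLite"}
SUPPORTED_PARAM_BITS = (8, 16, 32)
SUPPORTED_FEATURE_BITS = (8, 16)
ALWAYS_REQUIRED = ("inputNetFile", "outputNetFile", "outputParamsFile")


def check_import_config(cfg):
    """Fill in documented defaults and collect problems for a parameter dict.

    Hypothetical helper: it mirrors the table above, not the importer's code.
    """
    resolved = {"modelType": 0, "numParamBits": 8, "numFeatureBits": 8}
    resolved.update(cfg)
    problems = []

    if resolved["modelType"] not in SUPPORTED_MODEL_TYPES:
        problems.append("modelType must be 0, 1, 2 or 3")
    if resolved["numParamBits"] not in SUPPORTED_PARAM_BITS:
        problems.append("numParamBits must be 8, 16 or 32")
    if resolved["numFeatureBits"] not in SUPPORTED_FEATURE_BITS:
        problems.append("numFeatureBits must be 8 or 16")

    for name in ALWAYS_REQUIRED:
        if not resolved.get(name):
            problems.append(name + " must be set")
    # inputParamsFile is required only for Caffe models (modelType 0).
    if resolved["modelType"] == 0 and not resolved.get("inputParamsFile"):
        problems.append("inputParamsFile must be set for Caffe models")

    # Documented default: 0 (8-bit unsigned) for 8-bit features, else 2 (16-bit unsigned).
    resolved.setdefault("inElementType", 0 if resolved["numFeatureBits"] == 8 else 2)
    return resolved, problems


example = {
    "modelType": 0,
    "inputNetFile": "deploy.prototxt",
    "outputNetFile": "tidl_net.bin",   # hypothetical output name
    "outputParamsFile": "tidl_io_",    # hypothetical output name
    "numFeatureBits": 16,
}
resolved, problems = check_import_config(example)
print(resolved["inElementType"])
print(problems)
```

In this example the Caffe parameter file is missing, so one problem is reported, and inElementType falls back to 2 because numFeatureBits is 16.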

In most cases, the input to the network is processed by an image transform function, for example mean subtraction, scaling by a standard deviation, or cropping. The parameters listed below are embedded in TIDL-RT's model file and are used during inference.

| Parameter | Default | Description |

|---|---|---|

| inDataNorm | 0: Disable | Enable/disable normalization of the input tensor. Scale and mean values are applicable only if this is enabled. Refer to the Input Normalization page to understand its usage. Note: This parameter adds a batchnorm layer to the TIDL network, which can potentially be merged into the following convolution layer if foldPreBnConv2D = 1. If this batchnorm layer is merged into the convolution layer, the operation has no impact on processing time |

| inMean | | List: mean value to be subtracted for each channel of all input tensors. Only applicable when inDataNorm = 1. Refer to the Input Normalization page to understand its usage |

| inScale | | List: scale value to be multiplied after mean subtraction for each channel of all input tensors. Only applicable when inDataNorm = 1. Refer to the Input Normalization page to understand its usage |

| inDataPadInTIDL | 0: Disable | Enables or disables padding of the input tensor in TIDL-RT inference. When enabled, a pad layer is inserted at the beginning of the neural net if required. |

| inDataFormat | 1: RGB planar | List: input tensor color format. 0: BGR planar, 1: RGB planar. Refer to the TIDL API guide for the list of supported formats |

| inResizeType | 0: Normal | Image resize type. 0: normal resize, 1: keep aspect ratio. Refer to the TIDL API guide for the list of supported formats. Note: This resize is not part of TIDL-RT inference; it is done in the test bench code. Users are expected to perform this resize in their final application before giving the input to TIDL-RT. |

| resizeWidth | | If resizeWidth is set and resizeWidth >= inWidth, the input image is first resized to resizeWidth, and then a center crop of inWidth is taken from the resized image to get the data for inference. resizeWidth < inWidth is not a valid configuration. Note: This resize is not part of TIDL-RT inference; it is done in the test bench code. Users are expected to perform this resize in their final application before giving the input to TIDL-RT. |

| resizeHeight | | If resizeHeight is set and resizeHeight >= inHeight, the input image is first resized to resizeHeight, and then a center crop of inHeight is taken from the resized image to get the data for inference. resizeHeight < inHeight is not a valid configuration. Note: This resize is not part of TIDL-RT inference; it is done in the test bench code. Users are expected to perform this resize in their final application before giving the input to TIDL-RT. |

| outputFeature16bitNamesList | | This parameter is useful for mixed-precision inference. It is a list of output layer names (separated by comma, space or tab), as in the original model, whose feature/activation output the user wants in 16 bit. Note: If the feature/activation of a given layer is in 16 bit, its parameters automatically become 16 bit, and the user need not list the layer in params16bitNamesList. Since the TIDL-RT importer merges certain layers, this list should correspond to the last layer after merging. To find this mapping, run the importer once without this parameter; this generates <outputNetFile>.layer_info.txt, where outputNetFile is the value given in the import config file. The *.layer_info.txt file contains three columns: the first is the layer number, the second is a unique data id, and the third is the name as given in the original network's model format. The third column gives the name that will be present in the TIDL imported network after merging any layers. Use this value for outputFeature16bitNamesList. |

| params16bitNamesList | | This parameter is useful for mixed-precision inference. It is a list of output layer names (separated by comma, space or tab), as in the original model, whose parameters the user wants in 16 bit. This is not the name of a layer's parameter; it is expected to be the output name of the layer. Note: If the feature/activation of a given layer is in 16 bit, its parameters automatically become 16 bit even if the layer is not part of this list. Since the TIDL-RT importer merges certain layers, this list should correspond to the last layer in the merged output. To find this mapping, run the import tool once without this parameter; this generates <outputNetFile>.layer_info.txt, where outputNetFile is the value given in the import config file. The *.layer_info.txt file contains three columns: the first is the layer number, the second is a unique data id, and the third is the name as given in the original network's model format. The third column gives the name that will be present in the TIDL imported network after merging any layers. Use this value for params16bitNamesList. |

| inYuvFormat | -1: Not used | This parameter is useful when the network is trained with RGB input but the input available during inference is in YUV format. The following values are currently supported: -1: no format conversion is performed and the input is used directly for inference; 0 (TIDL_inYuvFormatYuv420_NV12): YUV to RGB conversion is performed, assuming the input is in YUV 4:2:0 NV12 format |

| foldPreBnConv2D | 1: Enable | Controls folding of a batch norm layer into the following convolution layer. The following values are currently supported: 0: disable; 1: enable, which improves run-time performance but may result in a slight accuracy drop |

| metaArchType | -1: Not used | Applicable to object detection networks. It specifies the underlying architecture used for post-processing of object detection. Refer to the TIDL-RT API Guide for the supported meta architectures. If no meta architecture is used, leave this parameter at its default. Refer to Meta Architectures Support for more details. |

| metaLayersNamesList | | Configuration file describing the details of the meta architecture, for example pipeline.config for TF SSD. Refer to Meta Architectures Support for more details. |
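
The center-crop rule for resizeWidth/resizeHeight and the mean/scale normalization for inMean/inScale can be written out as small helper functions. This is a minimal sketch in plain Python with hypothetical function names; it assumes the crop offset is rounded down when the difference is odd, which the text above does not specify, and it is not the test bench implementation.

```python
def center_crop_window(resize_size, in_size):
    """Return (start, stop) of the centered window of length in_size.

    Applies to one dimension: (resizeWidth, inWidth) or (resizeHeight, inHeight).
    """
    if resize_size < in_size:
        raise ValueError("resize size smaller than input size is not a valid configuration")
    start = (resize_size - in_size) // 2  # assumption: round down for odd differences
    return start, start + in_size


def normalize_pixel(channels, in_mean, in_scale):
    """Subtract the per-channel mean, then multiply by the per-channel scale."""
    return [(value - mean) * scale
            for value, mean, scale in zip(channels, in_mean, in_scale)]


# A 256-wide resized image cropped to an inWidth of 224 keeps columns 16..239.
print(center_crop_window(256, 224))

# One RGB pixel with example mean and scale values (illustrative numbers only).
print(normalize_pixel([128, 64, 255], [128, 128, 128], [0.5, 0.5, 0.5]))
```

Because the resize and crop happen in the test bench code, an application would need to perform the equivalent steps itself before handing the input to TIDL-RT, while the mean/scale step is carried in the model when inDataNorm = 1.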

As explained earlier, the importer is the front-end utility through which users perform all processing that happens during the compile stage. Internally, it calls other modules that are independent executables. The parser, optimizer and quantizer are part of the importer, whereas the graph compiler and graph visualizer are independent modules used by the importer. The quantizer module also uses the TIDL-RT inference engine for statistics collection and therefore needs its path.

| Parameter | Default | Description |

|---|---|---|

| tidlStatsTool | ../../test/PC_dsp_test_dl_algo.out(.exe) | Path of the TIDL-RT inference host emulation executable. It is used by the quantizer module for range collection. If not specified, the default location is used |

| perfSimTool | ../../utils/perfsim/ti_cnnperfsim.out(.exe) | Path of the graph compiler executable. If not specified, the default location is used |

| graphVizTool | ../../utils/tidlModelGraphviz/out/tidl_graphVisualiser.out(.exe) | Path of the tool that generates the graphical visual output. If not specified, the default location is used |

| modelDumpTool | ../../utils/tidlModelDump/out/tidl_dump.out(.exe) | Path of the model dump tool. Like the graphViz tool, it provides complete information about the neural net after the importer has finished; the only difference is that the information is textual instead of visual. This parameter is optional |

As explained in the previous section, the quantization module of the importer uses the host emulation mode of TIDL-RT. The importer therefore also accepts the parameters related to TIDL-RT inference and prepares the configuration file for TIDL-RT host emulation inference. These parameters are described in this section.

| Parameter | Default | Description |

|---|---|---|

| inData | | Refer to the TIDL Sample Application doc |

| inFileFormat | | Refer to the TIDL Sample Application doc |

| numFrames | | Refer to the TIDL Sample Application doc |

| postProcType | | Refer to the TIDL Sample Application doc |

| postProcDataId | | Refer to the TIDL Sample Application doc |

| quantRangeUpdateFactor | | Refer to the TIDL Sample Application doc |

| debugTraceLevel | 0 | Refer to the TIDL Sample Application doc |

| writeTraceLevel | 0 | Refer to the TIDL Sample Application doc |

| rawDataInElementType | same as inElementType | Refer to the TIDL Sample Application doc |

The quantizer is one of the critical modules of the importer, and a dedicated page explains it in more detail. Users are encouraged to read that page to better understand the control parameters related to quantization.

| Parameter | Default | Description |

|---|---|---|

| calibrationOption | 0 | Refer to Quantizer |

| activationRangeMethod | 0 : TIDL_ActivationRangeMethodHistogram | [Optional parameter] Integer indicating which activation range method to use. The following types are currently supported: 0: TIDL_ActivationRangeMethodHistogram. This option is applicable only if calibrationOption is set to TIDL_CalibOptionActivationRange. The default value is 0 |

| weightRangeMethod | 1 : TIDL_WeightRangeMethodMedian | Integer indicating which weight range method to use. The following types are currently supported: 0: TIDL_WeightRangeMethodHistogram; 1: TIDL_WeightRangeMethodMedian. This option is applicable only if calibrationOption is set to TIDL_CalibOptionWeightRange. |

| percentileActRangeShrink | 0.01 | Refer to Quantizer |

| percentileWtRangeShrink | 0.01 | [Optional parameter] Applicable only when weightRangeMethod is TIDL_WeightRangeMethodHistogram. This is the percentile of the total number of elements in a weight filter that is discarded from both sides of the weight distribution. If the input is unsigned, it is applied to only one side of the weight distribution. For example, percentileWtRangeShrink = 0.01 means discarding 1/10000 of the elements from both sides, or from one side, of the weight distribution. |

| biasCalibrationFactor | 0.05 | Refer to Quantizer |

| biasCalibrationIterations | | Refer to Quantizer |

| numFramesBiasCalibration | | Refer to Quantizer |

| quantRangeExpansionFactor | 1.0 | Margin to be applied to the feature map range. For example, 1.2 applies a 20% margin to the range values |
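
The arithmetic behind percentileWtRangeShrink and quantRangeExpansionFactor can be illustrated with a short sketch. Both functions are hypothetical and rest on assumptions that the table does not state: the number of discarded elements is rounded down, the one-sided case for unsigned data trims the upper tail, and the expansion margin is applied by multiplying both range bounds by the factor. The actual quantizer may differ in all three respects.

```python
def shrunk_weight_range(weights, percentile, signed=True):
    """Discard `percentile` percent of elements from the tail(s); return (min, max)."""
    ordered = sorted(weights)
    drop = int(len(ordered) * percentile / 100.0)  # assumption: round down
    low = drop if signed else 0                    # assumption: unsigned trims the top only
    high = len(ordered) - drop
    kept = ordered[low:high]
    return kept[0], kept[-1]


def expand_range(range_min, range_max, factor):
    """Apply the expansion factor as a margin on both bounds (assumed interpretation)."""
    return range_min * factor, range_max * factor


# 10000 weights with percentile 0.01: 1/10000 of the elements, i.e. one element, per side.
signed_weights = list(range(-5000, 5000))
print(shrunk_weight_range(signed_weights, 0.01))

unsigned_weights = list(range(10000))
print(shrunk_weight_range(unsigned_weights, 0.01, signed=False))

# A factor of 1.2 widens the range by 20%.
print(expand_range(-2.0, 4.0, 1.2))
```

With a factor of 1.0 (the default) the range is left unchanged.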

| Parameter | Default | Description |

|---|---|---|

| executeNetworkCompiler | 1 | Enables (1) or disables (0) execution of the graph compiler. The graph compiler can be disabled only for the host emulation reference flow, to speed up execution; this flag must remain enabled for execution on hardware |

| compileConstraintsFlag | | Control flag for the internal behavior of the graph compiler, created to apply workarounds in case the user faces any issue. Users are not expected to change it without guidance from TI |

| perfSimConfig | ../../test/testvecs/config/import/device_base.cfg | Graph compiler configuration file that provides device-specific information. This file describes additional control parameters that are not described here and are not accepted by the importer |

| msmcSize | -1 | Size of the L3 (MSMC) memory, in KB, that TIDL-RT can use. With -1 (default), the size is taken from the perfSimConfig file. Any other value overrides the value given in the perfSimConfig file |

TIDL-RT natively supports only NCHW layout and fixed-point inference, but to allow integration with other runtimes it can also accept input tensors in floating point and in NHWC layout. Similarly, on the output side, TIDL-RT can produce floating-point tensors and NHWC layout. This is achieved by inserting a format conversion layer at the appropriate end. The table below describes the configuration parameters.

| Parameter | Default | Description |

|---|---|---|

| addDataConvertToNet | 0 | Bit-field parameter: setting bit 0 adds a data convert layer at the input, and setting bit 1 adds one at the output |

| inZeroPoint | 0 | List of zero points, one per fixed-point input tensor |

| inLayout | 0: TIDL_LT_NCHW | List of data layouts, one per input tensor. The following types are currently supported: 0: TIDL_LT_NCHW; 1: TIDL_LT_NHWC |

| inTensorScale | 1.0 | List of scales (float), one per input tensor. This is an alias for inQuantFactor |

| outElementType | 0: 8bit unsigned | List of element types, one per output tensor. Refer to TIDL Element Type in the TIDL-RT API Guide for more details. Supported values are 0: 8-bit unsigned, 1: 8-bit signed, 2: 16-bit unsigned, 3: 16-bit signed, 6: single-precision float |

| outZeroPoint | 0 | List of zero points, one per fixed-point output tensor |

| outLayout | 0: TIDL_LT_NCHW | List of data layouts, one per output tensor. The following types are currently supported: 0: TIDL_LT_NCHW; 1: TIDL_LT_NHWC |

| outTensorScale | 1.0 | List of scales (float), one per output tensor |
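
To make the addDataConvertToNet bit field and the two layouts concrete, the sketch below decodes the bit field and reorders a small tensor from NCHW to NHWC. Both functions are hypothetical illustrations using nested Python lists; they do not reflect how the format conversion layer is implemented inside TIDL-RT.

```python
def decode_add_data_convert(value):
    """Split the addDataConvertToNet bit field into (at_input, at_output)."""
    at_input = bool(value & 0b01)   # bit 0: data convert layer at the input
    at_output = bool(value & 0b10)  # bit 1: data convert layer at the output
    return at_input, at_output


def nchw_to_nhwc(tensor):
    """Convert a nested list indexed [n][c][h][w] into one indexed [n][h][w][c]."""
    return [
        [
            [[image[c][h][w] for c in range(len(image))]
             for w in range(len(image[0][0]))]
            for h in range(len(image[0]))
        ]
        for image in tensor
    ]


# 0: no conversion, 1: input only, 2: output only, 3: both ends.
for value in range(4):
    print(value, decode_add_data_convert(value))

# One image, two channels, height 1, width 2.
nchw = [[[[1, 2]], [[3, 4]]]]
print(nchw_to_nhwc(nchw))
```

In NHWC order the channel values of each pixel sit next to each other, so the two channel planes `[1, 2]` and `[3, 4]` become the pixels `[1, 3]` and `[2, 4]`.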

Configuration files used for validation of models are provided in the "ti_dl/test/testvecs/config/import/" folder for reference. A few example configuration files are given below: