9. SDK Components

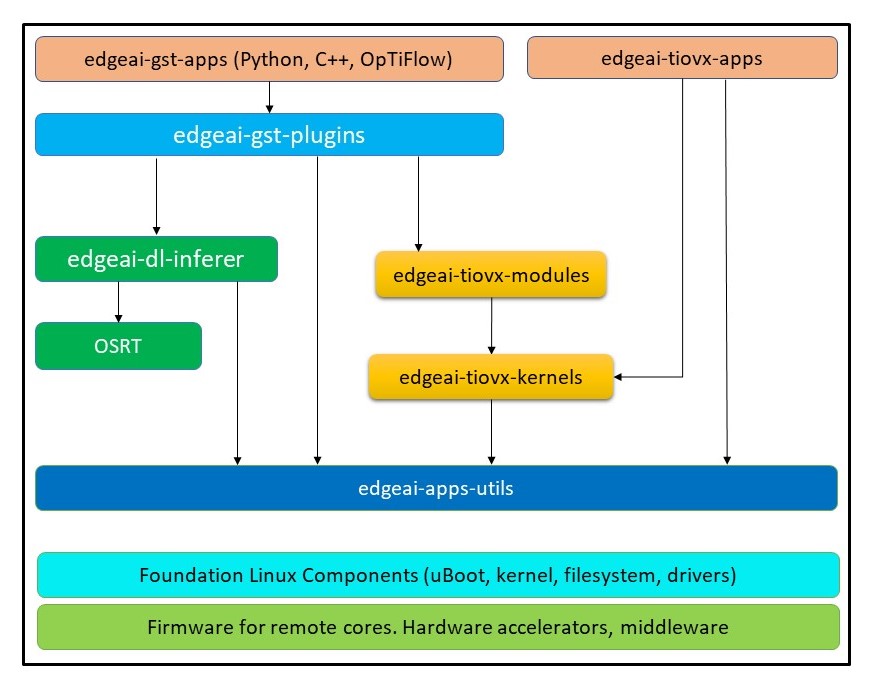

The Processor SDK Linux Edge AI for AM68A comprises three main layers:

Edge AI application stack

Linux foundations

Firmware builder

9.1. Edge AI application stack

The Edge AI applications are designed to let users quickly evaluate various deep learning networks with real-time inputs on TI SoCs. Users can evaluate pre-imported inference models or build a custom network for deployment on the device. Once a network meets its performance and accuracy targets, it can be integrated into a GStreamer-based application for rapid prototyping and deployment. The Edge AI application stack is split into the components below, which integrate with the underlying foundational Linux components and interact with remote core firmware for acceleration.

The entire Edge AI application stack can be downloaded on a PC and cross-compiled for the desired target. For more details on the setup, build, and install steps, refer to edgeai-app-stack on GitHub.

9.1.1. edgeai-gst-apps

These are plug-and-play deep learning applications which support running open source runtime frameworks such as TFLite, ONNX and NeoAI-DLR with a variety of input and output configurations.
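As a rough illustration of what "a variety of input and output configurations" can mean in practice, the sketch below models a demo configuration as named inputs, models, and outputs tied together by flows, and checks that every flow refers to entries that exist. The key names (`inputs`, `models`, `outputs`, `flows`), the device path, the model path, and the sink name are assumptions made for illustration only; consult the edgeai-gst-apps repository for the actual configuration schema.

```python
# Hypothetical configuration layout; key names and values are illustrative only
# and are not taken from the edgeai-gst-apps documentation.
config = {
    "inputs": {"input0": {"source": "/dev/video2", "width": 1280, "height": 720}},
    "models": {"model0": {"model_path": "/path/to/model", "runtime": "tflite"}},
    "outputs": {"output0": {"sink": "display", "width": 1920, "height": 1080}},
    # Each flow wires one input through one model to one output.
    "flows": {"flow0": ["input0", "model0", "output0"]},
}


def validate_flows(cfg):
    """Return a list of error strings for flows that reference undefined entries."""
    errors = []
    for name, (inp, model, out) in cfg["flows"].items():
        for section, key in (("inputs", inp), ("models", model), ("outputs", out)):
            if key not in cfg[section]:
                # section[:-1] turns "models" into "model" for the message.
                errors.append(f"{name}: unknown {section[:-1]} '{key}'")
    return errors
```

Calling `validate_flows(config)` on the dictionary above returns an empty list; a flow that names a missing model yields one error string per bad reference.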

The latest source code with fixes can be pulled from TI Edge AI GStreamer apps.

9.1.2. edgeai-dl-inferer

This repo provides an interface to the TI OSRT library, whose APIs can be used standalone or with an application such as edgeai-gst-apps. It also provides the source of the NV12 post-processing library and utilities that are used by some of the custom GStreamer plugins.

The latest source code with fixes can be pulled from TI Edge AI DL Inferer.

9.1.3. edgeai-gst-plugins

This repo provides the source of custom GStreamer plugins that offload tasks to the hardware accelerators with the help of edgeai-tiovx-modules.

Source code and documentation: TI Edge AI GStreamer plugins

9.1.4. edgeai-app-utils

This repo provides utility APIs for NV12 drawing and font rendering and for reporting MPU and DDR performance, as well as ARM Neon optimized kernels for color conversion, pre-processing, and scaling.
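Because several of these utilities operate on NV12 frames, a short sketch of that format's memory layout may help. NV12 stores a full-resolution Y (luma) plane followed by a half-height plane of interleaved U/V (chroma) samples, one U/V pair per 2x2 block of pixels, for an average of 12 bits per pixel. The function names below are hypothetical and are not part of edgeai-app-utils; the sketch also assumes even dimensions and a row stride equal to the width, which real buffers may not satisfy.

```python
def nv12_layout(width, height):
    """Plane offsets and sizes (in bytes) for a tightly packed NV12 frame."""
    if width % 2 or height % 2:
        raise ValueError("this sketch assumes even width and height")
    y_size = width * height          # one luma byte per pixel
    uv_size = width * height // 2    # one U/V byte pair per 2x2 pixel block
    return {
        "y_offset": 0,
        "y_size": y_size,
        "uv_offset": y_size,         # chroma plane directly follows luma
        "uv_size": uv_size,
        "total": y_size + uv_size,
    }


def nv12_sample_offsets(width, height, x, y):
    """Byte offsets of the Y, U and V samples that cover pixel (x, y)."""
    layout = nv12_layout(width, height)
    y_off = y * width + x
    # Each chroma row is `width` bytes long and serves two luma rows;
    # U comes first in each interleaved pair, V second.
    u_off = layout["uv_offset"] + (y // 2) * width + (x // 2) * 2
    return y_off, u_off, u_off + 1
```

For a 1280x720 frame this gives a 921600-byte Y plane and a 460800-byte UV plane. A drawing routine that colors a single pixel therefore has to write one luma byte and the shared chroma pair, which is why NV12 drawing is usually done on 2x2 aligned blocks.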

Source code and documentation: TI Edge AI Apps utils

9.1.5. edgeai-tiovx-modules

This repo provides OpenVX modules that help access the underlying hardware accelerators in the SoC and serve as a bridge between the custom GStreamer elements and the underlying custom OpenVX kernels.

Source code and documentation: TI Edge AI TIOVX modules

9.1.6. edgeai-tiovx-kernels

This repo provides OpenVX kernels that accelerate color conversion, DL pre-processing, and DL post-processing using the ARMv8 NEON accelerator.

Source code and documentation: TI Edge AI TIOVX kernels

9.1.7. edgeai-tiovx-apps

This repo provides a layer on top of OpenVX that makes it easy to create an OpenVX graph and connect it to V4L2 blocks to realize complex use cases.
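Conceptually, an OpenVX graph is a directed acyclic graph of processing nodes that the framework runs in dependency order. The sketch below illustrates only that idea in plain Python; it does not use the OpenVX or edgeai-tiovx-apps APIs, and the node names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Map each (hypothetical) node to the set of nodes whose output it consumes.
pipeline = {
    "capture": set(),
    "scaler": {"capture"},
    "preproc": {"scaler"},
    "inference": {"preproc"},
    "display": {"scaler", "inference"},
}


def execution_order(graph):
    """Return one valid order in which the nodes can run."""
    # static_order() raises graphlib.CycleError if the graph has a cycle.
    return list(TopologicalSorter(graph).static_order())
```

Here `display` consumes both the scaled image and the inference result, so it can only run after both branches have produced their output.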

Source code and documentation: TI Edge AI TIOVX Apps

9.2. Foundation Linux

The Edge AI app stack is built on top of foundation Linux components, which include U-Boot, the Linux kernel, device drivers, multimedia codecs, GPU drivers, and more. Foundation Linux is built using the Yocto Project, and the sources are publicly available so that the entire image can be built from scratch. We also provide an installer that packages a pre-built Linux filesystem, a board support package, and tools to customize the Linux layers of the software stack.

Click AM68A Linux Foundation components to explore more!

9.3. Firmware builder

The AM68A firmware builder package is required only when working with low-level software components, such as remote core firmware, drivers for hardware accelerators, or system memory map changes. It is not required for user-space application development.

Access to FIRMWARE-BUILDER-AM68A is provided via MySecureSW and requires a login.

Click AM68A REQUEST LINK to request access.