4. Deep learning models

4.1. Model Downloader Tool

TI Edge AI Model Zoo is a large collection of deep learning models validated to work on TI processors for Edge AI. It hosts several pre-compiled model artifacts for TI hardware.

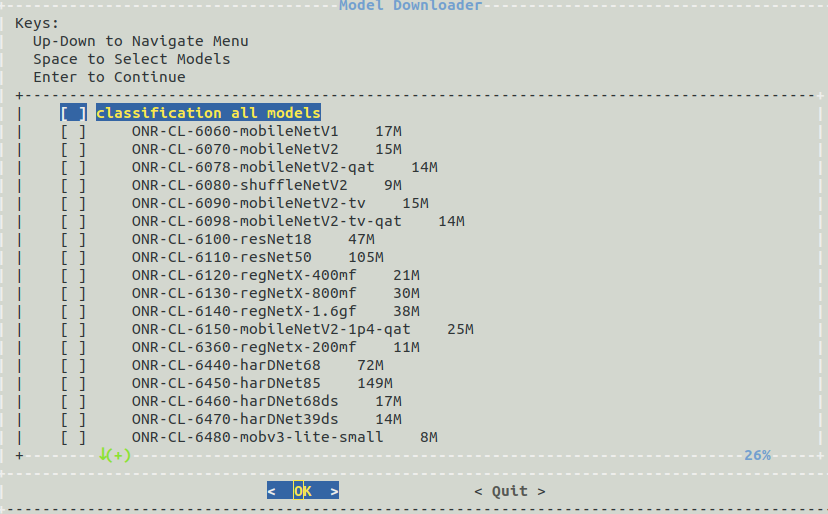

Use the Model Downloader Tool to download more models on target as shown,

/opt/edgeai-gst-apps# ./download_models.sh

The script will launch an interactive menu showing the list of available,

pre-imported models for download. The downloaded models will be placed

under /opt/model_zoo/ directory

Fig. 4.1 Model downloader tool menu option to download models¶
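To check what has been downloaded, the minimal Python sketch below lists the model directories under /opt/model_zoo/. It assumes that each downloaded model lives in its own subdirectory; the helper name `list_models` is hypothetical and is not part of the SDK.

```python
from pathlib import Path


def list_models(model_zoo: str = "/opt/model_zoo") -> list[str]:
    """Return the sorted names of model directories under model_zoo.

    Assumption: every downloaded model lives in its own subdirectory.
    Plain files are ignored, and a missing model_zoo yields an empty list.
    """
    root = Path(model_zoo)
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.iterdir() if p.is_dir())


if __name__ == "__main__":
    for name in list_models():
        print(name)
```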

The script can also be run non-interactively; run it with --help to see its usage:

/opt/edgeai-gst-apps# ./download_models.sh --help

4.2. Model Development Tools

Edge AI Studio Model Composer is an integrated environment for end-to-end development of AI applications, covering all the necessary stages: data collection, annotation, training, compilation, and deployment of machine learning models. It is bundled with optimal models from the model zoo, from which users can select at different performance points. It supports a bring-your-own-data (BYOD) development flow with TI-recommended models.

Note

Currently, object detection and classification tasks are supported. Model Composer uses EdgeAI-ModelMaker as the backend tool for training and compiling models.

EdgeAI-ModelMaker is an end-to-end model development tool that integrates dataset handling, model training, and model compilation, and provides a simple, beginner-friendly config file interface. The model training and model compilation tools are installed automatically as part of the ModelMaker setup. ModelMaker does not currently include an integrated annotation feature, but it can accept a dataset annotated with another tool, as explained in its documentation. The output of a ModelMaker run is a trained and compiled DNN model artifact package that contains all the required side information and is ready to be consumed by this SDK. ModelMaker is our recommended model development tool for beginners.

Note

Use the model compilation tools described below directly only if you choose not to use ModelMaker or are working on a task type that ModelMaker does not support.

4.3. Import Custom Models

The Processor SDK Linux for AM62Ax also supports importing pre-trained custom models to run inference on the target. For custom models, we recommend validating the entire flow with the simple file-based examples provided in Edge AI TIDL Tools.

The SDK uses pre-compiled DNN (Deep Neural Network) models and performs inference using one of several OSRTs (open source runtimes): TFLite Runtime, ONNX Runtime, and Neo AI-DLR. To run inference on a DNN, the SDK expects the DNN and its associated artifacts in the directory structure below.

TFL-OD-2010-ssd-mobV2-coco-mlperf-300x300
│
├── param.yaml
│
├── dataset.yaml
│
├── artifacts
│   ├── 264_tidl_io_1.bin
│   ├── 264_tidl_net.bin
│   ├── 264_tidl_net.bin.layer_info.txt
│   ├── 264_tidl_net.bin_netLog.txt
│   ├── 264_tidl_net.bin.svg
│   ├── allowedNode.txt
│   └── runtimes_visualization.svg
│
└── model
    └── ssd_mobilenet_v2_300_float.tflite

4.3.1. DNN directory structure

Each DNN must have the following 4 components:

model: This directory contains the DNN to be inferred.

artifacts: This directory contains the artifacts generated by compiling the DNN for the SDK. These artifacts can be generated and validated with the simple file-based examples provided in Edge AI TIDL Tools.

param.yaml: A configuration file in YAML format that provides basic information about the DNN and its associated pre- and post-processing parameters.

dataset.yaml: A configuration file in YAML format, needed only for classification and detection, that maps output keys to the actual labels.
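The layout rules above can be checked before copying a model directory to the target. The following Python sketch is a hypothetical helper, not an SDK tool: it only checks that the expected files and directories exist (not that their contents are valid), and it treats dataset.yaml as required only when the caller passes a task name of "classification" or "detection". Those task strings are assumptions of this sketch.

```python
from pathlib import Path

# Tasks for which dataset.yaml (output key -> label mapping) is expected.
TASKS_NEEDING_DATASET = {"classification", "detection"}


def missing_components(model_dir: str, task: str) -> list[str]:
    """Return the components missing from model_dir (empty list if complete).

    Hypothetical helper: checks existence only, not file contents.
    """
    root = Path(model_dir)
    missing = []
    # Directories holding the DNN itself and its compiled artifacts.
    for name in ("model", "artifacts"):
        if not (root / name).is_dir():
            missing.append(name)
    # param.yaml is always required.
    if not (root / "param.yaml").is_file():
        missing.append("param.yaml")
    # dataset.yaml is needed only for classification and detection.
    if task in TASKS_NEEDING_DATASET and not (root / "dataset.yaml").is_file():
        missing.append("dataset.yaml")
    return missing
```

For example, `missing_components("/opt/model_zoo/TFL-OD-2010-ssd-mobV2-coco-mlperf-300x300", "detection")` should return an empty list for a complete detection model laid out as in the tree above.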

4.3.2. Param file format

Each DNN has its own pre-processing, inference, and post-processing parameters that are needed to get the correct output. This information is typically available in the training software used to train the model. To convey this information to the SDK in a standardized way, we have defined a set of parameters that describe these operations. These parameters are stored in the param.yaml file.

See the sample YAML files for various tasks, such as image classification, semantic segmentation, and object detection, in the edgeai-benchmark examples; the YAML files also describe the various parameters. Users who want to bring their own model to the SDK need to prepare this information offline and provide it to the SDK. The next section explains how to prepare this information.
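As an illustration of what a pre-processing parameter encodes, many image models expect each input pixel x to be normalized as (x - mean) * scale before inference. The Python sketch below applies that convention to one RGB pixel. The key names `mean` and `scale`, the per-channel layout, and the values 127.5 and 1/128 are assumptions for illustration only; check the sample param.yaml files in edgeai-benchmark for the actual keys and values of your model.

```python
# Hypothetical pre-processing parameters, shaped like a parsed YAML mapping.
# The key names and values are illustrative assumptions, not the SDK schema.
PREPROCESS = {
    "mean": [127.5, 127.5, 127.5],                 # per-channel mean (R, G, B)
    "scale": [0.0078125, 0.0078125, 0.0078125],    # per-channel scale (1/128)
}


def normalize_pixel(rgb, params=PREPROCESS):
    """Normalize one RGB pixel as (x - mean) * scale, channel by channel."""
    mean, scale = params["mean"], params["scale"]
    if not (len(rgb) == len(mean) == len(scale)):
        raise ValueError("pixel and parameter channel counts differ")
    return [(x - m) * s for x, m, s in zip(rgb, mean, scale)]


print(normalize_pixel([0, 127.5, 255]))  # → [-0.99609375, 0.0, 0.99609375]
```

If the parameters used at inference time differ from those used during training, the model still runs but its accuracy drops, which is why this information has to travel with the model.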

4.3.3. DNN compilation for SDK – Basic Instructions

The Processor SDK Linux for Edge AI supports three different runtimes for DNN inference, and users can choose a runtime depending on the format of the DNN. We recommend trying the different runtimes, comparing their performance, and selecting the one that performs best. The steps to generate the artifacts directory are described in Edge AI TIDL Tools.
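One way to follow this recommendation is to time the same model under each runtime and keep the fastest. The Python sketch below assumes you already have one zero-argument inference callable per runtime (for example, wrappers you write around TFLite Runtime, ONNX Runtime, and Neo AI-DLR sessions); those wrappers are not shown, and the names `measure` and `pick_fastest` are hypothetical. It compares median latencies so that a single slow run does not skew the result.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure(infer: Callable[[], object], runs: int = 20, warmup: int = 3) -> List[float]:
    """Time `runs` calls of infer() in milliseconds, after `warmup` untimed calls."""
    for _ in range(warmup):
        infer()  # warm-up calls are excluded from the measurement
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def pick_fastest(timings: Dict[str, List[float]]) -> str:
    """Return the runtime name whose median latency is lowest."""
    return min(timings, key=lambda name: statistics.median(timings[name]))


if __name__ == "__main__":
    # Hypothetical stand-ins for real per-runtime inference calls.
    candidates = {
        "tflite": lambda: sum(range(10_000)),
        "onnx": lambda: sum(range(50_000)),
    }
    results = {name: measure(fn) for name, fn in candidates.items()}
    print("fastest runtime:", pick_fastest(results))
```

Run the comparison on the target itself with representative inputs, since latency measured on a host PC does not reflect the offloaded execution on the TI processor.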

4.3.4. DNN compilation for SDK – Advanced Instructions

For beginners compiling models for the SDK, we recommend the basic instructions given in the previous section. However, DNNs vary widely, and some models may need different kinds of preprocessing or postprocessing operations. To help customers handle different kinds of models, we have prepared a model zoo in the edgeai-modelzoo repository.

For the DNNs that are part of TI’s model zoo, the compilation settings and pre-compiled model artifacts are available in the edgeai-benchmark repository, which also gives instructions for compiling custom models. When edgeai-benchmark is used for model compilation, the YAML file is generated automatically and the artifacts are packaged in the layout the SDK understands. Follow the instructions in the repository to get started.