
TI Deep Learning Product User Guide


The Processor SDK implements TIDL offload support using the TFLite Delegate runtime.

This heterogeneous execution enables:

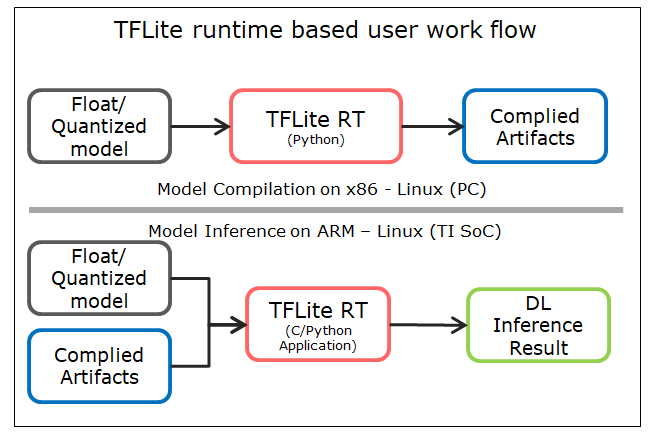

The diagram below illustrates the TFLite-based workflow. The user needs to run the model compilation (sub-graph creation and quantization) on a PC; the generated artifacts can then be used for inference on the device.

The Processor SDK package includes all the Python packages required for runtime support.

Prerequisite: PSDK RA should be installed on the host Ubuntu 18.04 machine, and it should be possible to run the pre-built demos on the EVM.

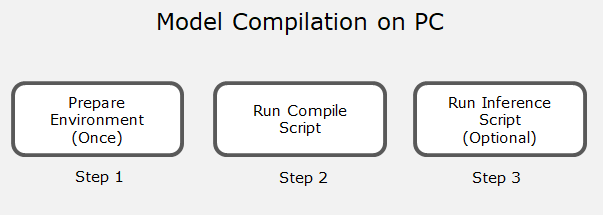

Follow the steps below. (Note: all the scripts below must be run from the ${PSDKRA_PATH}/tidl_xx_xx_xx_xx/ti_dl/test/tflrt/ folder.)

Note: If you observe any issue with pip, run the command below to update it:

```shell
python -m pip install --upgrade pip
```

The artifacts generated by the Python scripts in the above section can be used for inference with either the Python or the C/C++ APIs. The following steps are for running inference using the Python API. Refer to Link for usage of the C APIs.

Note: These scripts are only for basic functional testing and performance checks. Model accuracy can be benchmarked using the Python module released here: edgeai-benchmark

IPython notebooks for running inference on the EVM are also available. More details can be found here: Link

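An example call to the interpreter from the Python interface, using the delegate mechanism:

```python
import tflite_runtime.interpreter as tflite

# delegate_options is a dict of the TIDL options described below
interpreter = tflite.Interpreter(model_path='path_to_model',
                                 experimental_delegates=[tflite.load_delegate('libtidl_tfl_delegate.so.1.0', delegate_options)])
```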

The 'delegate_options' argument in the interpreter call comprises the options below (required and optional).

The following options must be specified by the user when creating the TFLite interpreter:

| Name | Value |
|---|---|
| tidl_tools_path | Path from which the TIDL-related tools are picked; set to ${PSDKRA_PATH}/tidl_xx_xx_xx_xx/tidl_tools/ |
| artifacts_folder | Folder where the user intends to store all the compilation artifacts |
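As a minimal sketch, the two required options can be collected into the delegate_options dictionary that is passed to load_delegate(). The concrete paths are placeholders (the elided tidl_xx_xx_xx_xx folder name is kept as-is, and the artifacts folder name is hypothetical), and check_required_options is a hypothetical helper, not part of the TIDL package.

```python
import os

# Options the user must always supply (from the table above).
REQUIRED_KEYS = ("tidl_tools_path", "artifacts_folder")


def check_required_options(options: dict) -> None:
    """Hypothetical helper: raise if a required TIDL delegate option is missing or empty."""
    missing = [key for key in REQUIRED_KEYS if not options.get(key)]
    if missing:
        raise ValueError(f"missing required delegate options: {', '.join(missing)}")


# Placeholder paths: replace tidl_xx_xx_xx_xx with the actual TIDL folder name.
psdkra_path = os.environ.get("PSDKRA_PATH", "/path/to/psdkra")
delegate_options = {
    "tidl_tools_path": os.path.join(psdkra_path, "tidl_xx_xx_xx_xx", "tidl_tools"),
    "artifacts_folder": "./model-artifacts/mobilenet_v1",  # hypothetical folder name
}
check_required_options(delegate_options)
```

The same dictionary is later extended with any of the optional settings listed next.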

The following options are set to default values and need to be specified only if the user wants to change them. These optional arguments are specific to model compilation and do not apply to inference, except for 'debug_level'.

| Name | Description | Default values |
|---|---|---|
| platform | Target platform: "J7" | "J7" |
| version | TIDL version; open-source runtimes are supported from version 7.2 onwards | (7,3) |
| tensor_bits | Number of bits for TIDL tensors and weights: 8 or 16 | 8 |
| debug_level | 0 - no debug, 1 - rt debug prints, >=2 - increasing levels of debug and trace dump | 0 |
| max_num_subgraphs | Maximum number of TIDL subgraphs to offload | 16 |
| deny_list | Force-disable offload of particular operators to TIDL [^2] | "" - Empty list |
| accuracy_level | 0 - basic calibration, 1 - higher accuracy (advanced bias calibration), 9 - user defined [^3] | 1 |
| advanced_options:calibration_frames | Number of frames used for calibration; a minimum of 10 frames is recommended | 20 |
| advanced_options:calibration_iterations | Number of bias calibration iterations [^1] | 50 |
| advanced_options:output_feature_16bit_names_list | List of names of the layers (comma-separated string), as in the original model, whose feature/activation output should be 16 bit [^1] [^4] | "" |
| advanced_options:params_16bit_names_list | List of output names of the layers (separated by commas, spaces, or tabs), as in the original model, whose parameters should be 16 bit [^1] [^5] | "" |
| advanced_options:quantization_scale_type | 0 for non-power-of-2, 1 for power-of-2 | 0 |
| advanced_options:high_resolution_optimization | 0 for disable, 1 for enable | 0 |
| advanced_options:pre_batchnorm_fold | Fold the batchnorm layer into the following convolution layer; 0 for disable, 1 for enable | 1 |
| advanced_options:add_data_convert_ops | Adds the input and output format conversions to the model and performs them on the DSP instead of the ARM. This is currently an experimental feature. | 0 |
| object_detection:confidence_threshold | Overrides the "nms_score_threshold" parameter of the TFLite detection post-processing layer | Read from model |
| object_detection:nms_threshold | Overrides the "nms_iou_threshold" parameter of the TFLite detection post-processing layer | Read from model |
| object_detection:top_k | Overrides the "detections_per_class" parameter of the TFLite detection post-processing layer | Read from model |
| object_detection:keep_top_k | Overrides the "max_detections" parameter of the TFLite detection post-processing layer | Read from model |
| ti_internal_nc_flag | Internal use only | - |
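Because the optional arguments above apply only to model compilation (except 'debug_level'), one way to reuse a single configuration is to derive the inference-time options from the compilation-time ones. This sketch assumes the option keys are passed exactly as named in the table (for example "advanced_options:calibration_frames"); inference_options is a hypothetical helper and the values shown are illustrative.

```python
# Keys still meaningful at inference time: the two required options plus debug_level.
INFERENCE_KEYS = {"tidl_tools_path", "artifacts_folder", "debug_level"}


def inference_options(compile_options: dict) -> dict:
    """Hypothetical helper: keep only the options that still apply at inference."""
    return {key: value for key, value in compile_options.items() if key in INFERENCE_KEYS}


# Illustrative compilation options using the names from the table above.
compile_options = {
    "tidl_tools_path": "/path/to/tidl_xx_xx_xx_xx/tidl_tools",
    "artifacts_folder": "./artifacts",
    "tensor_bits": 8,
    "debug_level": 1,
    "accuracy_level": 1,
    "deny_list": "",
    "advanced_options:calibration_frames": 20,
    "advanced_options:calibration_iterations": 50,
}

runtime_options = inference_options(compile_options)
```

Keeping one dictionary and filtering it avoids the compile and inference configurations drifting apart on the two required paths.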

The options below take effect only when accuracy_level = 9; otherwise they are discarded. With accuracy_level = 9, the specified options override their defaults, and the rest are set to the default values. For accuracy_level = 0 or 1, these options are preset internally.

| Name | Description | Default values |
|---|---|---|
| advanced_options:activation_clipping | 0 for disable, 1 for enable [^1] | 1 |
| advanced_options:weight_clipping | 0 for disable, 1 for enable [^1] | 1 |
| advanced_options:bias_calibration | 0 for disable, 1 for enable [^1] | 1 |
| advanced_options:channel_wise_quantization | 0 for disable, 1 for enable [^1] | 0 |
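The accuracy_level rule above can be expressed as a small function. This is a sketch of the documented behavior, not TIDL's implementation: with accuracy_level = 9, user-specified values override the table defaults; for levels 0 and 1 the values are preset internally, so the hypothetical effective_advanced_options helper returns None to signal that user values are ignored.

```python
# Defaults from the table above; they apply only when accuracy_level == 9.
ADVANCED_DEFAULTS = {
    "advanced_options:activation_clipping": 1,
    "advanced_options:weight_clipping": 1,
    "advanced_options:bias_calibration": 1,
    "advanced_options:channel_wise_quantization": 0,
}


def effective_advanced_options(options: dict):
    """Hypothetical helper: resolve the advanced calibration options per the documented rule."""
    # accuracy_level defaults to 1 (see the optional-arguments table).
    if options.get("accuracy_level", 1) != 9:
        return None  # levels 0/1: preset internally, user-specified values are discarded
    resolved = dict(ADVANCED_DEFAULTS)
    for key in ADVANCED_DEFAULTS:
        if key in options:
            resolved[key] = options[key]  # user value overrides the default
    return resolved


# Example: enable channel-wise quantization, keep the other defaults.
user_options = {"accuracy_level": 9, "advanced_options:channel_wise_quantization": 1}
resolved = effective_advanced_options(user_options)
```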

[^1]: Advanced calibration can help improve 8-bit quantization. Please see TIDL Quantization for details.

[^2]: The deny list is a string of comma-separated numbers that identify operators by their TFLite builtin operator codes (please refer to Tflite builtin ops). For example, deny_list = "1, 2" denies offloading of the 'AveragePool2d' and 'Concatenation' operators to TIDL.
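For example, the deny list string can be assembled from TFLite builtin operator codes. The mapping below covers only the first few entries of the BuiltinOperator enum in the TFLite schema (ADD = 0, AVERAGE_POOL_2D = 1, CONCATENATION = 2, CONV_2D = 3, DEPTHWISE_CONV_2D = 4); consult the schema for the full list. make_deny_list is a hypothetical helper.

```python
# Subset of the TFLite BuiltinOperator codes (see the TFLite schema for the full enum).
BUILTIN_OPS = {
    "ADD": 0,
    "AVERAGE_POOL_2D": 1,
    "CONCATENATION": 2,
    "CONV_2D": 3,
    "DEPTHWISE_CONV_2D": 4,
}


def make_deny_list(op_names) -> str:
    """Hypothetical helper: build the comma-separated deny_list string from operator names."""
    return ", ".join(str(BUILTIN_OPS[name]) for name in op_names)


# Deny offloading of average pooling and concatenation, as in the example above.
deny_list = make_deny_list(["AVERAGE_POOL_2D", "CONCATENATION"])
```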

[^3]: Advanced calibration options can be specified by setting accuracy_level = 9.

[^4]: Note that if the feature/activation output of a given layer is in 16 bit, its parameters automatically become 16 bit, and the user need not specify them in "advanced_options:params_16bit_names_list". Example format: "conv1_2, fire9/concat_1"

[^5]: This is not the name of the layer's parameter; it is expected to be the output name of the layer. Note that if a given layer's feature/activation output is in 16 bit, its parameters automatically become 16 bit even if the layer is not part of this list.
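The relationship described in the notes on 16-bit layers can be sketched as follows: layers in "advanced_options:output_feature_16bit_names_list" get 16-bit parameters implicitly, so the effective set of layers with 16-bit parameters is the union of both lists. The separators follow the option table (commas for the feature list; commas, spaces, or tabs for the parameter list). The helper names are hypothetical, and layer names are assumed to contain no commas, spaces, or tabs.

```python
import re


def parse_feature_list(value: str) -> set:
    """Split the comma-separated feature list, trimming surrounding whitespace."""
    return {name.strip() for name in value.split(",") if name.strip()}


def parse_params_list(value: str) -> set:
    """Split the parameter list on commas, spaces, or tabs."""
    return {name for name in re.split(r"[, \t]+", value) if name}


def layers_with_16bit_params(options: dict) -> set:
    """Hypothetical helper: a 16-bit feature output implies 16-bit parameters."""
    features = parse_feature_list(
        options.get("advanced_options:output_feature_16bit_names_list", ""))
    params = parse_params_list(
        options.get("advanced_options:params_16bit_names_list", ""))
    return features | params


options = {
    "advanced_options:output_feature_16bit_names_list": "conv1_2, fire9/concat_1",
    "advanced_options:params_16bit_names_list": "conv3\tconv4 conv1_2",
}
sixteen_bit_param_layers = layers_with_16bit_params(options)
```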

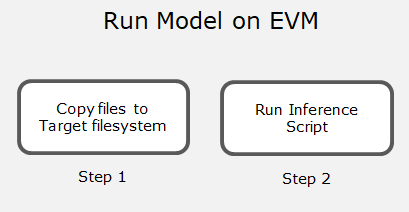

Prerequisite: compiled artifacts stored in the artifacts folder, as specified in step 2 of 'Model Compilation on PC' above.

The following steps are needed to build and run the C API based demo for the TFLite runtime:

```shell
# Fetch TensorFlow r2.4 and apply the TIDL patch
cd ${PSDKRA_PATH}
git clone --single-branch -b r2.4 https://github.com/tensorflow/tensorflow.git
cd tensorflow
git checkout 582c8d236cb079023657287c318ff26adb239002
git am ../tidl_j7_xx_xx_xx_xx/ti_dl/tfl_delegate/0001-tflite-interpreter-add-support-for-custom-data.patch
./tensorflow/lite/tools/make/download_dependencies.sh
cd ..
export PSDK_INSTALL_PATH=$(pwd)

# Create unversioned library symlinks in the target file system
cd targetfs/usr/lib/
ln -s libtbb.so.2 libtbb.so
ln -s libtiff.so.5 libtiff.so
ln -s libwebp.so.7 libwebp.so
ln -s libopencv_highgui.so.4.1 libopencv_highgui.so
ln -s libopencv_imgcodecs.so.4.1 libopencv_imgcodecs.so
ln -s libopencv_core.so.4.1.0 libopencv_core.so
ln -s libopencv_imgproc.so.4.1.0 libopencv_imgproc.so

# Build the TFLite demos
cd ${PSDK_INSTALL_PATH}/tidl_j7_xx_xx_xx_xx
make demos DIRECTORIES=tfl
```

To run on the EVM, mount ${PSDKRA_PATH} on the EVM, then run:

```shell
export LD_LIBRARY_PATH=/usr/lib
cd ${PSDKRA_PATH}/tidl_j7_xx_xx_xx_xx/ti_dl/demos/out/J7/A72/LINUX/release/
./tidl_tfl_classification.out -m ../../../../../../test/testvecs/models/public/tflite/mobilenet_v1_1.0_224.tflite -l ../../../../../../test/testvecs/input/labels.txt -i ../../../../../../test/testvecs/input/airshow.bmp -a 1
```
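The relative paths in the demo command climb six directories from the release/ output folder back to ti_dl/. As a hedged alternative for scripting, the same argument list can be built with paths anchored at ti_dl/. build_classification_cmd is a hypothetical helper, the /psdkra prefix is a placeholder, and the flags are copied from the command above (including -a 1, passed through unchanged).

```python
import posixpath


def build_classification_cmd(ti_dl_root: str) -> list:
    """Hypothetical helper: argv for tidl_tfl_classification.out with paths under ti_dl/."""
    testvecs = posixpath.join(ti_dl_root, "test", "testvecs")
    return [
        "./tidl_tfl_classification.out",
        "-m", posixpath.join(testvecs, "models", "public", "tflite", "mobilenet_v1_1.0_224.tflite"),
        "-l", posixpath.join(testvecs, "input", "labels.txt"),
        "-i", posixpath.join(testvecs, "input", "airshow.bmp"),
        "-a", "1",  # passed through as in the command above
    ]


# The six "../" steps from the demo output folder resolve to ti_dl/ itself.
release_dir = "/psdkra/tidl_j7_xx_xx_xx_xx/ti_dl/demos/out/J7/A72/LINUX/release"
ti_dl_root = posixpath.normpath(posixpath.join(release_dir, *[".."] * 6))
cmd = build_classification_cmd(ti_dl_root)
```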