
TI Deep Learning Library User Guide

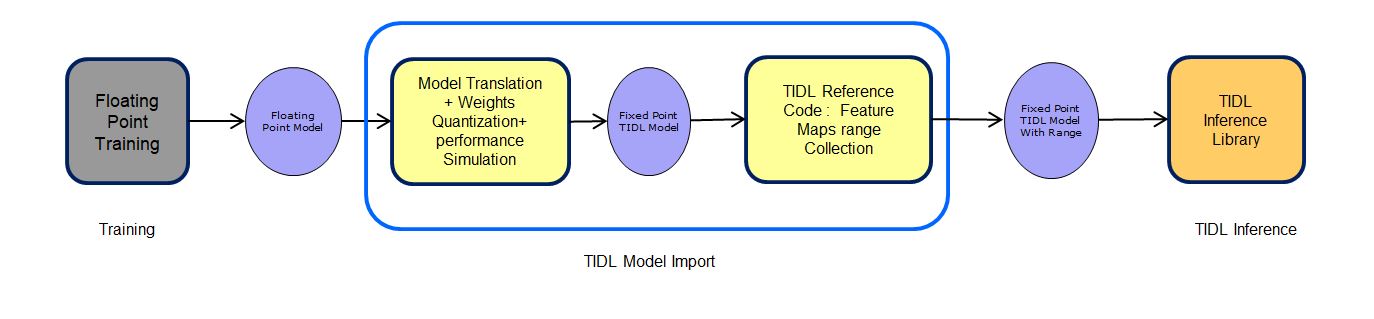

The TIDL Translation (Import) tool can accept a pre-trained floating-point model trained using caffe-jacinto, BVLC-caffe, or TensorFlow, or a model exported to ONNX format. The import step also generates output using the range collection tool. This output should be very similar to the final expected output of the inference library. Validate the import step by comparing this output with the expected result before running the imported model on the inference library.
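
As a rough aid for this validation step, the sketch below compares two flattened output tensors: one from the import step and one from a reference, such as the original floating-point framework. Loading the actual output files is not shown. The function name `compare_outputs` and the tolerance value are hypothetical choices and are not part of TIDL.

```python
def _argmax(values):
    """Index of the largest element (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def compare_outputs(imported, expected, atol):
    """Summarise how closely the import-step output matches a reference output.

    Both arguments are flattened float lists of equal length; atol is a
    user-chosen (hypothetical) absolute tolerance.
    """
    if not imported or len(imported) != len(expected):
        raise ValueError("outputs must be non-empty and of equal length")
    max_diff = max(abs(a - b) for a, b in zip(imported, expected))
    return {
        "max_abs_diff": max_diff,
        "top1_match": _argmax(imported) == _argmax(expected),
        "within_tol": max_diff <= atol,
    }


# Hypothetical 3-class outputs
report = compare_outputs([0.10, 0.70, 0.20], [0.12, 0.69, 0.19], atol=0.05)
print(report["top1_match"], report["within_tol"])  # True True
```

For classification-style outputs, the top-1 agreement is often more informative than the raw difference, because quantized outputs are not expected to match the float reference exactly.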

The tool available in "ti_dl/utils/tidlModelImport/out" can be used to import user-trained models with the command below.

Note: Run the import tool only from the "ti_dl/utils/tidlModelImport" directory. We recommend using file paths as given in the example import configuration files. If you face issues with any file path, try using absolute paths.

Note: Trailing options on the command line can be used to override parameters in the import configuration file. In the above example, the parameters resizeWidth and resizeHeight may be 128 and 128, respectively, in the file tidl_import_j11.txt, but the override runs the import with the values 512 and 512, respectively.
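
The override behaviour described in the note works like a dictionary merge in which command-line values win over file values. This is a minimal sketch that assumes a `key = value` line syntax with `#` comments for the configuration file. The helper names are hypothetical, and the real tool's parser and option syntax are not shown here.

```python
# Sketch only: assumes "key = value" lines with optional "#" comment lines.
# The real import tool's config syntax and override handling may differ.

def parse_import_config(text):
    """Parse 'key = value' lines into a dict of strings."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and malformed lines
        key, _, value = line.partition("=")
        params[key.strip()] = value.strip().strip('"')
    return params


def apply_overrides(params, overrides):
    """Return a copy of params in which every override replaces the file value."""
    merged = dict(params)
    merged.update(overrides)
    return merged


file_params = parse_import_config("""
modelType = 0
resizeWidth = 128
resizeHeight = 128
""")
effective = apply_overrides(file_params, {"resizeWidth": "512", "resizeHeight": "512"})
print(effective["resizeWidth"], effective["resizeHeight"])  # 512 512
```

Parameters that are not overridden, such as modelType here, keep their values from the file.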

These parameters are important for model translation/import.

| Parameter | Default | Description |
|---|---|---|
| modelType | 0: Caffe | 0: Caffe, 1: TensorFlow, 2: ONNX, 3: TFLite |
| inputNetFile | MUST SET | Net definition from the training framework, for example "deploy.prototxt" from Caffe or a frozen binary protobuf with parameters from TensorFlow |
| inputParamsFile | MUST SET if Caffe model | Binary file with the model parameters (the caffemodel from Caffe). Not applicable for TensorFlow, TFLite, and ONNX |
| outputNetFile | MUST SET | Output TIDL model with network and parameters |
| outputParamsFile | MUST SET | Input and output buffer descriptor file for the TIDL ivision interface |
| numParamBits | 8 | Bit depth for model parameters such as kernels and biases. If this value is set to 32, TIDL runs inference in floating point; this is supported only in the REF-only host emulation flow. |
| numFeatureBits | 8 | Bit depth for layer activations. The maximum supported value is 16. |
| quantizationStyle | 2: TIDL_QuantStyleNP2Fixed | Quantization method. 2: Linear mode, 3: Power-of-2 scales |
| inQuantFactor | 1.0 | List: scale factor of each input feature. If the input range used in training is 0 to 1 and the same input is passed to TIDL as a tensor in the range 0 to 255, this parameter shall be 255. Do not set it in the config file when inDataNorm is 1. |
| inElementType | 0: 8-bit unsigned | List: format of each input feature. 0: 8-bit unsigned, 1: 8-bit signed |
| inWidth | | List: width of each input tensor. If set, this value overrides the input size; if not set, the import tool uses the original size from the input net file. This value is passed on to range collection. |
| inHeight | | List: height of each input tensor. If set, this value overrides the input size; if not set, the import tool uses the original size from the input net file. This value is passed on to range collection. |
| inNumChannels | | List: number of channels of each input tensor. If set, this value overrides the input size; if not set, the import tool uses the original size from the input net file. This value is passed on to range collection. |
| foldBnInConv2D | 1: Enable | Merge batch norm layer weights and scale into the previous convolution layer if possible. Currently this is always enabled. |
| foldPreBnConv2D | 1: Enable | 0: Disable. 1: Merge batch norm layer weights and scale into the next convolution layer if possible; this improves performance. 2: Merge batch norm into the next convolution and also add a pad layer before it, with pad value equal to the per-channel mean of the batch norm layer; this may give a slight accuracy improvement over foldPreBnConv2D = 1, at some performance cost. |
| metaArchType | -1: Not used | Meta architecture used by the network: TIDL_metaArchCaffeJacinto = 0, TIDL_metaArchTFSSD = 1. If no meta architecture is used, leave the default. |
| metaLayersNamesList | | Configuration file describing the details of the meta architecture, for example pipeline.config for TF SSD. Set this value if metaArchType is 0 or 1. |
| inDataNamesList | | List of input tensor names in the network to be imported (separated by space, comma, or tab). Useful for importing a portion of a network. If not set, the import tool searches the input net file for the default inputs. |
| outDataNamesList | | List of output tensor names in the network to be imported (separated by space, comma, or tab). Useful for importing a portion of a network. If not set, the import tool searches the input net file for the default outputs. |
| outputFeature16bitNamesList | | List of names of the layers (separated by comma, space, or tab), as in the original model, whose feature/activation output the user wants in 16 bit. If a layer's features/activations are 16 bit, its parameters automatically become 16 bit, so the user need not list them in params16bitNamesList. Because TIDL merges certain layers, this list should name the last layer of each merged group. To find this mapping, run the import tool once without this parameter; it generates <outputNetFile>.layer_info.txt, where outputNetFile is the value given in the import config file. This *.layer_info.txt file has three columns: the layer number, a unique data ID, and the layer name as given in the original model format. The third column is the name that appears in the TIDL imported network after any layer merging; use these names for outputFeature16bitNamesList. |
| params16bitNamesList | | List of names of the output layers (separated by comma, space, or tab), as in the original model, whose parameters the user wants in 16 bit. Each entry is the output name of the layer, not the name of the layer's parameter. If a layer's features/activations are 16 bit, its parameters automatically become 16 bit even if the layer is not in this list. Because TIDL merges certain layers, this list should name the last layer of each merged group. To find this mapping, run the import tool once without this parameter; it generates <outputNetFile>.layer_info.txt, where outputNetFile is the value given in the import config file. This *.layer_info.txt file has three columns: the layer number, a unique data ID, and the layer name as given in the original model format. The third column is the name that appears in the TIDL imported network after any layer merging; use these names for params16bitNamesList. |
| inYuvFormat | -1: Not used | YUV image format, used when the network is trained with RGB input but the actual input is YUV. The YUV-to-RGB conversion is folded into an existing convolution layer wherever possible; otherwise a new 1x1 convolution is added. Currently only YUV420 is supported. |
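
The difference between quantizationStyle 2 and 3 lies in how the scale that maps real values to integer codes is chosen. The sketch below assumes symmetric, per-tensor, 8-bit signed quantization with a known maximum absolute value. It is a simplification for illustration, not TIDL's internal implementation, which derives ranges during range collection.

```python
import math

# Simplified symmetric per-tensor sketch (assumption); not TIDL's actual code.

def linear_scale(max_abs, num_bits=8):
    """quantizationStyle 2 (linear): map max_abs exactly onto the largest signed code."""
    if max_abs <= 0:
        raise ValueError("max_abs must be positive")
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit signed
    return qmax / max_abs


def power_of_2_scale(max_abs, num_bits=8):
    """quantizationStyle 3: largest power-of-2 scale that keeps max_abs in range."""
    return 2.0 ** math.floor(math.log2(linear_scale(max_abs, num_bits)))


def quantize(x, scale, num_bits=8):
    """Round x * scale to the nearest integer and clamp to the signed range."""
    qmax = 2 ** (num_bits - 1) - 1
    return max(-qmax - 1, min(qmax, round(x * scale)))


max_abs = 3.0  # hypothetical activation range
for name, scale in (("linear", linear_scale(max_abs)), ("power-of-2", power_of_2_scale(max_abs))):
    print(name, scale, quantize(max_abs, scale))
```

With a range of ±3.0, the linear scale maps 3.0 to code 127, while the power-of-2 scale (32) maps it to 96. The power-of-2 style leaves part of the 8-bit range unused in exchange for scales that can be applied with bit shifts.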
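
foldBnInConv2D works because a batch norm layer that follows a convolution is a per-channel affine operation, so it can be absorbed into the convolution's weights and bias. With s = gamma / sqrt(var + eps), the folded weights are W' = s * W and the folded bias is b' = (b - mean) * s + beta. The sketch below checks this identity on a toy layer using plain Python lists and hypothetical values. It is not the import tool's code.

```python
import math

def fold_bn_into_conv(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold BN(conv(x)) into a single convolution, one output channel at a time.

    weights[oc] is the flattened kernel of output channel oc; all other
    arguments are per-output-channel lists.
    """
    new_w, new_b = [], []
    for oc, kernel in enumerate(weights):
        s = gamma[oc] / math.sqrt(var[oc] + eps)  # per-channel BN multiplier
        new_w.append([w * s for w in kernel])
        new_b.append((bias[oc] - mean[oc]) * s + beta[oc])
    return new_w, new_b


def conv_at(weights, bias, patch):
    """Convolution output at one position: dot(kernel, patch) + bias per channel."""
    return [sum(w * p for w, p in zip(kernel, patch)) + b for kernel, b in zip(weights, bias)]


def batchnorm(values, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch norm applied per channel."""
    return [g * (v - m) / math.sqrt(vr + eps) + bt
            for v, g, bt, m, vr in zip(values, gamma, beta, mean, var)]


# Hypothetical 2-channel layer with 2-tap kernels
W, b = [[1.0, 2.0], [0.5, -1.0]], [0.1, -0.2]
gamma, beta, mean, var = [2.0, 0.5], [0.3, 0.0], [1.0, -0.5], [4.0, 1.0]
patch = [1.5, -0.5]

reference = batchnorm(conv_at(W, b, patch), gamma, beta, mean, var)
folded = conv_at(*fold_bn_into_conv(W, b, gamma, beta, mean, var), patch)
print(max(abs(r - f) for r, f in zip(reference, folded)))
```

The printed difference is at floating-point round-off level, showing that the folded convolution alone reproduces convolution followed by batch norm.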
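
You can script the step of building outputFeature16bitNamesList or params16bitNamesList from the generated <outputNetFile>.layer_info.txt. The sketch assumes whitespace-separated columns (layer number, data ID, name) and one layer per line, matching the three-column layout described in the table. The helper names and the layer names in the sample are hypothetical.

```python
def read_layer_info(text):
    """Parse layer_info.txt contents into (layer_num, data_id, name) tuples.

    Assumes whitespace-separated columns and one layer per line.
    """
    rows = []
    for line in text.splitlines():
        parts = line.split(maxsplit=2)
        if len(parts) < 3 or not (parts[0].isdigit() and parts[1].isdigit()):
            continue  # skip blank or unexpected lines
        rows.append((int(parts[0]), int(parts[1]), parts[2].strip()))
    return rows


def names_list(rows, layer_numbers):
    """Comma-separated names of the selected layers, in file order."""
    return ",".join(name for num, _, name in rows if num in layer_numbers)


# Hypothetical file contents, for illustration only
sample = """0 0 input
1 2 conv1/Relu
2 5 conv2/Relu
"""
rows = read_layer_info(sample)
print(names_list(rows, {1, 2}))  # conv1/Relu,conv2/Relu
```

Because the third column already reflects layer merging, the resulting string can be pasted directly as the parameter value.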

In most cases, the network input is pre-processed with image transform functions such as mean subtraction, scaling (standard deviation), and cropping. The parameters below are embedded in the TIDL model file so that they can be applied during inference.

| Parameter | Default | Description |
|---|---|---|
| inDataNorm | 0: Disable | Enable/disable normalization of the input tensor. The scale and mean values apply only if this is enabled. |
| inMean | | List: mean value to subtract for each channel of all input tensors. Applicable only when inDataNorm = 1 |
| inScale | | List: scale value to multiply by, after mean subtraction, for each channel of all input tensors. Applicable only when inDataNorm = 1 |
| inDataPadInTIDL | 0: Disable | Enable/disable padding of the input tensor within TIDL. If enabled, a pad layer is inserted after the input layer if any consumer of the input layer has a non-zero pad requirement and is one of: (i) convolution, (ii) batch norm to be pre-merged into convolution, (iii) pooling. When batch norm is merged into convolution, the padded values equal the per-channel mean of the batch norm layer; otherwise they are zero. |
| inDataFormat | 1: RGB planar | List: input tensor color format. 0: BGR planar, 1: RGB planar. Refer to the TIDL API guide for the list of supported formats |
| inResizeType | 0: Normal | Image resize type. 0: normal resize, 1: keep aspect ratio. Refer to the TIDL API guide for the list of supported formats |
| resizeWidth | | Image cropping is performed if resizeWidth > inWidth. If not set, resize is disabled and the import tool uses inWidth and inHeight. |
| resizeHeight | | Image cropping is performed if resizeHeight > inHeight. If not set, resize is disabled and the import tool uses inWidth and inHeight. |
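
When inDataNorm = 1, each channel c is transformed as (x - inMean[c]) * inScale[c]. The sketch below applies that transform to a planar image. The function name and the sample values (inMean = 128, inScale = 1/128) are illustrative assumptions.

```python
def normalize_planar(image, in_mean, in_scale):
    """Apply (x - inMean[c]) * inScale[c] to every pixel of channel c.

    image is planar: image[c] is the flattened pixel list of channel c.
    """
    if not (len(image) == len(in_mean) == len(in_scale)):
        raise ValueError("expected one inMean and one inScale value per channel")
    return [[(px - m) * s for px in plane]
            for plane, m, s in zip(image, in_mean, in_scale)]


# Hypothetical 3-channel, 3-pixel image with inMean = 128 and inScale = 1/128
out = normalize_planar([[0, 128, 255], [10, 20, 30], [255, 255, 0]],
                       [128, 128, 128], [0.0078125] * 3)
print(out[0])  # [-1.0, 0.0, 0.9921875]
```

These values map an 8-bit input roughly onto [-1, 1). Because the transform is embedded in the model, the application can feed raw pixels at inference time.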
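
To illustrate the resize and crop parameters, the sketch below computes the inWidth x inHeight window taken from the resized image. It assumes a centred crop; the table does not specify the crop position. The function name is hypothetical.

```python
def crop_window(in_w, in_h, resize_w=None, resize_h=None):
    """Return (x0, y0, x1, y1) of the inWidth x inHeight window in the resized image.

    Unset resize dimensions default to the input size (no resize, no crop).
    The centred crop position is an assumption of this sketch.
    """
    resize_w = in_w if resize_w is None else resize_w
    resize_h = in_h if resize_h is None else resize_h
    if in_w > resize_w or in_h > resize_h:
        raise ValueError("resize dimensions must be at least inWidth x inHeight")
    x0 = (resize_w - in_w) // 2
    y0 = (resize_h - in_h) // 2
    return x0, y0, x0 + in_w, y0 + in_h


print(crop_window(224, 224, 256, 256))  # (16, 16, 240, 240)
print(crop_window(224, 224))            # (0, 0, 224, 224)
```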

The following parameters set the Network Compiler and graph visualization tools used by the import tool.

| Parameter | Default | Description |
|---|---|---|
| perfSimTool | ../../utils/perfsim/ti_cnnperfsim.out(.exe) | Network Compiler executable file path. If not specified, the default location is used |
| perfSimConfig | ../../test/testvecs/config/import/device_base.cfg | Network Compiler configuration file. Refer to the Network Compiler guide for configuration parameters |
| graphVizTool | ../../utils/tidlModelGraphviz/out/tidl_graphVisualiser.out(.exe) | Graph visualization tool executable file path. If not specified, the default location is used |

The following parameters configure range collection during import.

| Parameter | Default | Description |
|---|---|---|
| tidlStatsTool | ../../test/PC_dsp_test_dl_algo.out(.exe) | TIDL PC reference executable file path for range collection. If not specified, the default location is used |
| inData | | Refer to the TIDL Sample Application documentation |
| inFileFormat | | Refer to the TIDL Sample Application documentation |
| numFrames | | Refer to the TIDL Sample Application documentation |
| postProcType | | Refer to the TIDL Sample Application documentation |
| postProcDataId | | Refer to the TIDL Sample Application documentation |
| quantRangeUpdateFactor | | Refer to the TIDL Sample Application documentation |

Configuration files used to validate models are provided for reference in the "ti_dl/test/testvecs/config/import/" folder. Below are a few example configuration files: