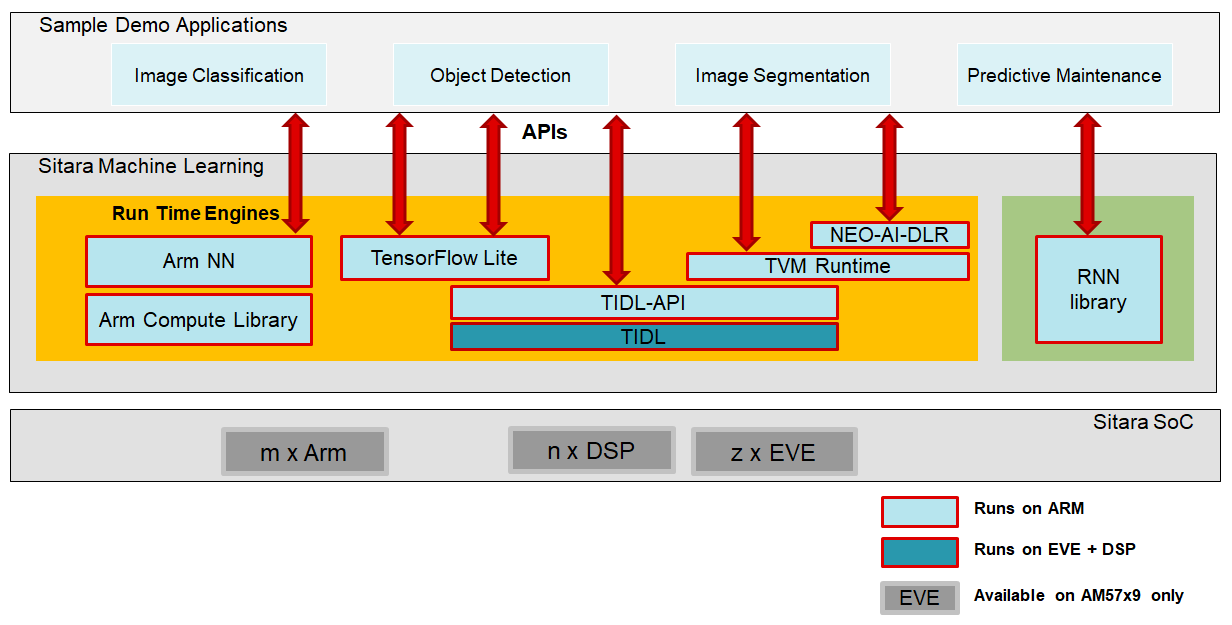

3.9. Machine Learning

The Sitara Machine Learning toolkit brings machine learning to the edge by enabling inference on all Sitara devices, both Arm-only devices and devices that pair Arm cores with specialized hardware accelerators. It is provided as part of TI’s Processor SDK Linux, which is free to download and use. Sitara machine learning currently comprises TI Deep Learning (TIDL), Neo-AI-DLR, the TVM runtime, TensorFlow Lite, Arm NN, and the RNN library.

Neo-AI Deep Learning Runtime (DLR)

- Neo-AI-DLR is a new open source machine learning runtime for on-device inference.

- Supports Keras, TensorFlow, TFLite, GluonCV, MXNet, PyTorch, ONNX, and XGBoost models optimized automatically by Amazon SageMaker Neo or the TVM compiler.

- Supports all Cortex-A ARM cores (AM3x, AM4x, AM5x, AM6x Sitara devices).

- On AM5729 and AM5749 devices, uses TIDL to accelerate supported models automatically.
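A minimal sketch of running one inference through Neo-AI-DLR's Python API (`dlr.DLRModel` and its `run()` method) follows. It assumes the model has already been compiled by SageMaker Neo or TVM into a directory of artifacts. The directory path and the input tensor name are hypothetical and depend on the model. Per the text above, TIDL acceleration on AM5729/AM5749 is applied automatically to supported models, so the sketch does not configure TIDL itself.

```python
from typing import Any, Dict, List


def run_dlr(model_dir: str, input_name: str, data: Any) -> List[Any]:
    """Run one inference with a DLR-compiled model (illustrative sketch).

    model_dir:  directory of compiled artifacts (hypothetical path).
    input_name: the model's input tensor name (model-specific, hypothetical).
    data:       typically a numpy array with the shape the model expects.
    """
    # Imported lazily so this module still loads on hosts without neo-ai-dlr.
    from dlr import DLRModel

    # dev_type="cpu" targets the Arm Cortex-A cores. Any TIDL offload on
    # AM5729/AM5749 is assumed to happen automatically, as the text states.
    model = DLRModel(model_dir, dev_type="cpu")
    inputs: Dict[str, Any] = {input_name: data}
    # run() returns a list with one output array per model output.
    return model.run(inputs)
```

For example, `outputs = run_dlr("./compiled_model", "data", image)`, where `"./compiled_model"`, `"data"`, and `image` are placeholders for your own model and input.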

TVM Runtime

- Open source deep learning runtime for on-device inference, supporting models compiled by the TVM compiler.

- Available on all Cortex-A ARM cores (AM3x, AM4x, AM5x, AM6x Sitara devices).
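A minimal sketch of loading and running a TVM-compiled model with the TVM Python runtime follows. It assumes that:

- the model was built with `relay.build()` and exported with `export_library()` to a shared object;
- TVM 0.8 or later is installed, where the module is `tvm.contrib.graph_executor`. Older releases, such as those bundled with earlier SDKs, call it `graph_runtime` and use `.asnumpy()` instead of `.numpy()`.

The library path and input name are hypothetical.

```python
from typing import Any


def run_tvm(lib_path: str, input_name: str, data: Any) -> Any:
    """Run one inference with a TVM-compiled model (illustrative sketch).

    Assumes a TVM 0.8+ Python runtime and a shared library produced by
    relay.build(...).export_library(lib_path).
    """
    # Imported lazily so this module still loads on hosts without TVM.
    import tvm
    from tvm.contrib import graph_executor

    dev = tvm.cpu(0)  # run on the Arm Cortex-A cores
    lib = tvm.runtime.load_module(lib_path)
    # "default" is the factory function that relay.build() exports.
    module = graph_executor.GraphModule(lib["default"](dev))
    module.set_input(input_name, data)
    module.run()
    # Convert the first output from a TVM NDArray to a numpy array.
    return module.get_output(0).numpy()
```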

TensorFlow Lite

- Open source deep learning runtime for on-device inference.

- Runs on all Cortex-A ARM cores (AM3x, AM4x, AM5x, AM6x Sitara devices).

- Imports TensorFlow Lite models.

- Uses the TIDL import tool to create TIDL-offloadable TensorFlow Lite models, which can be executed by the TensorFlow Lite runtime with TIDL acceleration on AM5729 and AM5749 devices.
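A minimal sketch of the standard TensorFlow Lite interpreter flow on the Arm cores follows: load, allocate tensors, set input, invoke, read output. It prefers the lightweight `tflite_runtime` package and falls back to full TensorFlow. It shows only the generic interpreter API. Whether a model is offloaded to TIDL depends on how it was prepared with the TIDL import tool, and any TIDL-specific runtime setup in the SDK is not shown. The model path is hypothetical.

```python
from typing import Any


def run_tflite(model_path: str, data: Any) -> Any:
    """Run one inference with a .tflite model (illustrative sketch)."""
    # Imported lazily: prefer tflite_runtime, fall back to full TensorFlow.
    try:
        from tflite_runtime.interpreter import Interpreter
    except ImportError:
        import tensorflow as tf
        Interpreter = tf.lite.Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    input_detail = interpreter.get_input_details()[0]
    output_detail = interpreter.get_output_details()[0]
    # data must match input_detail["shape"] and input_detail["dtype"].
    interpreter.set_tensor(input_detail["index"], data)
    interpreter.invoke()
    return interpreter.get_tensor(output_detail["index"])
```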

Arm NN

- Open source inference engine from Arm.

- Runs on all Cortex-A ARM cores (AM3x, AM4x, AM5x, AM6x Sitara devices).

- Imports Caffe, ONNX, TensorFlow, and TensorFlow Lite models.
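A minimal sketch of running a TensorFlow Lite model through Arm NN follows. It uses the PyArmNN Python bindings, which parse the model, optimize it for a list of preferred backends, load it into a runtime, and enqueue a workload. It assumes PyArmNN is installed. The bindings ship only with later Arm NN releases and may not be part of every SDK build, in which case the equivalent C++ API applies. The backend list prefers `CpuAcc` (NEON-accelerated) and falls back to `CpuRef`. The model path is hypothetical.

```python
from typing import Any, List


def run_armnn_tflite(model_path: str, data: Any) -> List[Any]:
    """Run one inference on a .tflite model via PyArmNN (illustrative sketch)."""
    # Imported lazily; assumes the PyArmNN bindings are installed.
    import pyarmnn as ann

    # Parse the model. Arm NN also provides ONNX, Caffe and TensorFlow parsers.
    parser = ann.ITfLiteParser()
    network = parser.CreateNetworkFromBinaryFile(model_path)

    graph_id = 0
    input_name = parser.GetSubgraphInputTensorNames(graph_id)[0]
    input_binding = parser.GetNetworkInputBindingInfo(graph_id, input_name)
    output_name = parser.GetSubgraphOutputTensorNames(graph_id)[0]
    output_binding = parser.GetNetworkOutputBindingInfo(graph_id, output_name)

    # Optimize for the NEON CPU backend first, with the reference backend as fallback.
    runtime = ann.IRuntime(ann.CreationOptions())
    backends = [ann.BackendId("CpuAcc"), ann.BackendId("CpuRef")]
    opt_network, _ = ann.Optimize(
        network, backends, runtime.GetDeviceSpec(), ann.OptimizerOptions()
    )
    net_id, _ = runtime.LoadNetwork(opt_network)

    input_tensors = ann.make_input_tensors([input_binding], [data])
    output_tensors = ann.make_output_tensors([output_binding])
    runtime.EnqueueWorkload(net_id, input_tensors, output_tensors)
    # Convert the output tensors to numpy arrays.
    return ann.workload_tensors_to_ndarray(output_tensors)
```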

RNN Library

- Provides Long Short-Term Memory (LSTM) and fully connected layers in a standalone library to allow rapid prototyping of inference applications that require Recurrent Neural Networks.

- Runs on all Cortex-A ARM cores (AM3x, AM4x, AM5x, AM6x Sitara devices).

- Integrated into TI’s Processor SDK Linux in an out-of-box (OOB) demo for predictive maintenance.
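This page does not describe the RNN library's API. The sketch below is therefore a generic, dependency-free illustration of what its two layer types compute, not the library's interface. It shows one LSTM time step using the standard input/forget/candidate/output gate equations, and a fully connected layer applied to the last hidden state of a sequence. All function names and the parameter layout (per-gate `W`, `U`, `b`) are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = Sequence[Sequence[float]]
# Per-gate parameters: (W for the input x, U for the previous hidden state, bias b).
GateParams = Tuple[Matrix, Matrix, Sequence[float]]


def _sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def _matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def _gate(p: GateParams, x: Sequence[float], h: Sequence[float]) -> Vector:
    # Pre-activation W @ x + U @ h + b for one gate.
    w, u, b = p
    return [wx + uh + bi for wx, uh, bi in zip(_matvec(w, x), _matvec(u, h), b)]


def lstm_step(x: Sequence[float], h_prev: Sequence[float], c_prev: Sequence[float],
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """One LSTM time step; params has keys 'i', 'f', 'g', 'o'."""
    i = [_sigmoid(v) for v in _gate(params["i"], x, h_prev)]   # input gate
    f = [_sigmoid(v) for v in _gate(params["f"], x, h_prev)]   # forget gate
    g = [math.tanh(v) for v in _gate(params["g"], x, h_prev)]  # candidate cell
    o = [_sigmoid(v) for v in _gate(params["o"], x, h_prev)]   # output gate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def fully_connected(w: Matrix, b: Sequence[float], x: Sequence[float]) -> Vector:
    return [y + bi for y, bi in zip(_matvec(w, x), b)]


def run_sequence(xs: Sequence[Sequence[float]], hidden_size: int,
                 params: Dict[str, GateParams], fc_w: Matrix,
                 fc_b: Sequence[float]) -> Vector:
    """Feed a sequence through the LSTM, then apply the fully connected layer."""
    h: Vector = [0.0] * hidden_size
    c: Vector = [0.0] * hidden_size
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return fully_connected(fc_w, fc_b, h)
```

With all gate weights and biases set to zero, every sigmoid gate outputs 0.5 and the candidate is 0. Each step therefore halves the cell state, which makes a convenient sanity check. In a real application, `xs` would be a time series of feature vectors and the weights would come from a trained model.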